Service mesh for Kubernetes microservices

Mesh Design

The ease with which microservices can be deployed, upgraded, and scaled makes them a compelling way to build an application, but as a microservice application grows in complexity and scale, so does the demand on the underlying network that is its lifeblood. A service mesh offers a straightforward way to implement a number of common and useful microservice patterns with no development effort, as well as providing advanced routing and telemetry functions for your microservice application straight out of the box.

Service Mesh Concepts

Unlike a monolithic application, in which the network is only a matter of concern at a limited number of ingress and egress points, a microservices application depends on IP-based networking for all its internal communications, and the more the application scales, the less "ideal" the behavior of that internal network becomes. As interactions between services become more complex, more voluminous, and more difficult to visualize, it's no longer safe for microservice app developers to assume that those interactions will happen transparently and reliably. It can become difficult to see whether a bug in the application is caused by one of the microservices (and if so, which one) or by the configuration of the network.

One way to mitigate non-ideal behaviors in the microservice network is by creating services that make allowances for the interactions. Developers can carefully calculate how long request timeouts should be, allowing for dependencies on other requests cascading down the chain. Another approach is for service developers to create a client library for use by developers of dependent services that interacts with their service's API in a resilient manner. Both approaches have serious drawbacks. The first causes a lot of duplicated effort – each team is spending resources trying to solve essentially the same problem. The second approach runs counter to the point of microservices – if you change your client library, all the services that use it have to be upgraded.

Enter the service mesh. In the domain of service-oriented architectures, including microservices, the meaning of this term has been elevated from its uninspiring literal interpretation – that of a fully interconnected collection of services – to a specialized operating environment in which individual microservices can be written and deployed as though the underlying network really was ideal and in which interservice communications can be managed and monitored in great detail. Lee Calcote, in his book on microservices [1], refers to this concept as "decoupling at Layer 5," the implication being that a service mesh frees the developer of any concerns below the Session Layer of the OSI model. The "decoupling" function of the service mesh can be referred to as its data plane. The data plane of a service mesh can be used to implement a number of common and useful microservice patterns, such as:

- Authentication: Can service A be sure that it is actually sending a request to service B, and not to an impostor?

- Authorization or role-based access control: Is service A allowed to send a particular request type to service B, and is service B allowed to respond to that request?

- Encryption of interservice traffic, to prevent man-in-the-middle attacks and allow the application to be deployed on a zero-trust network.

- Circuit breaking, which prevents a request timeout (caused by a failed service or pod) from cascading back up the request chain and creating a catastrophic failure of the application.

- Service discovery and load balancing.

Clearly, having all of these requirements taken care of by a freely downloadable service mesh is a far more appealing idea than having to implement and maintain them natively in each individual microservice.

If the service mesh's data plane is equipped with some sort of out-of-band communication abilities (the ability to receive control commands and to send logging and status information), then a control plane can be added, affording the opportunity to implement a number of high-level management and monitoring functions for the application using the mesh. These typically include:

- Traffic management – routing user requests to services according to criteria such as user identity, request URL, weightings (for A/B and canary testing), quota enforcement, and fault injection.

- Configuration – of all the data plane functions mentioned earlier.

- Tracing, monitoring, and telemetry – aggregating logs, status information, and request metrics from every service into a single place makes it easy for the service mesh control plane to find out anything, from the HTTP headers of a single given request to how the application's response time is affected by a given increase in usage rates.

In this article, I'll describe some service mesh architectures, provide a brief overview of popular sidecar-based service meshes that are currently available, and demonstrate Istio, which is a full-featured service mesh that can be easily deployed on a Kubernetes cluster.

Common Service Mesh Architectures

Three service mesh architectures are in common use: the library, the node agent, and the sidecar.

A library is built directly into your microservice code. Not to be confused with the client library mentioned above, libraries such as Hystrix and Ribbon, developed by Netflix, provide some of the data plane benefits described above. Hystrix provides resilience against network latency and circuit-breaking behavior with the aim of preventing cascading failure in a microservice application. Ribbon is used to provide load balancing.

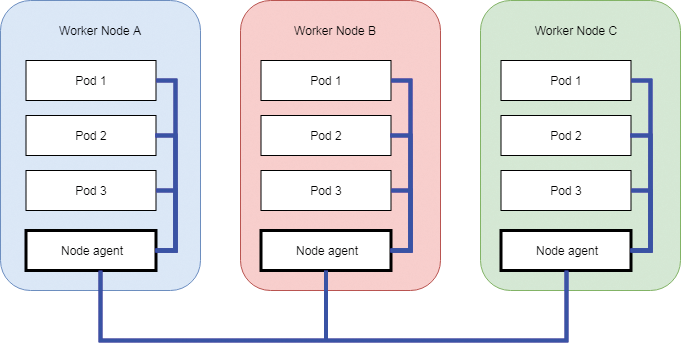

A node agent runs on each hardware node. The application's pods within the node communicate with each other as usual, but internode communication, which has to use a "real" network, is routed via an agent that provides the data plane benefits described above. Because node agents have to be tightly coupled with the platform of the node itself, they are usually specific to the particular cloud vendor or hardware platform that is hosting the application (Figure 1).

A sidecar is an additional container inserted into each pod that proxies all the network traffic flowing into and out of that pod by applying new iptables rules inside the pod that "redirect" the traffic to and from the application's container. The sidecar provides all the data plane functions and is the currently preferred architecture for implementing a full-featured service mesh; the entire mesh is deployed in containers, so it's easy to make a single mesh work on a wide range of platforms and cloud providers.

Microservices are unaware of the sidecar cohabiting their pod, and because each pod has its own sidecar, the granularity of control and observability is much higher than can be achieved with the node agent model. Figure 2 shows pods containing sidecars alongside the application container. Sidecars are typically written in native code to work as fast as possible, minimizing any extra network latency, and are the subject of the remainder of this article.

Sidecar Service Mesh Implementations

The most common open source sidecar-based service meshes currently available include the following:

Linkerd 1.x [2], created by Buoyant and now an open source project of the Cloud Native Computing Foundation. Notable users include Salesforce, PayPal, and Expedia. Linkerd 1.x is a data plane-only service mesh that supports a number of environments, including Kubernetes, Mesos, Consul, and ZooKeeper. Consider choosing this if you only want data plane functions and you aren't using Kubernetes.

Linkerd 2.x [3], formerly Conduit, is a Kubernetes-only (faster) alternative to Linkerd 1.x. This complete service mesh features a native code sidecar proxy written in Rust and a control plane with a web user interface and command-line interface (CLI). Emphasis is on light weight, ease of deployment, and dedication to Kubernetes.

Istio [4] is a complete (control and data plane) service mesh. Although it uses the Envoy proxy (written in C++) as its default sidecar, it can optionally use Linkerd as its data plane. Version 1.1.0 was just released in March 2019. Istio 1.1 provides significant reductions in CPU usage and latency over Istio 1.0 and incremental improvements to all the main feature groups. Istio is marketed as platform independent (example platforms are Kubernetes, GCP, Consul, or simply running it with services that run directly on virtual or physical servers). You might choose Istio if you want a comprehensive set of control plane features that you can extend by means of a plugin API. Istio presents its feature set as traffic management, security, telemetry, and policy enforcement.

In this article, I focus on Istio's core functions and components and deploy them in a sample microservice application – a WordPress website on a Kubernetes cluster. In case you were wondering, istio is the Greek word for sail, which places it pretty firmly within the Kubernetes (helmsman) Helm and Tiller nomenclature. Despite being platform independent, its configuration APIs and container-based nature make it a natural fit with Kubernetes, and as you'll see below, you can deploy Istio to and remove it from your Kubernetes cluster quickly, with minimal effect on your application.

Istio Architecture and Components

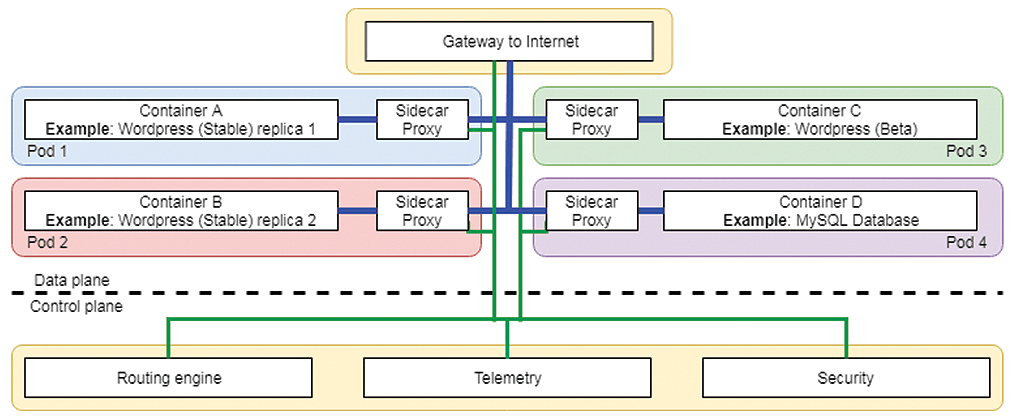

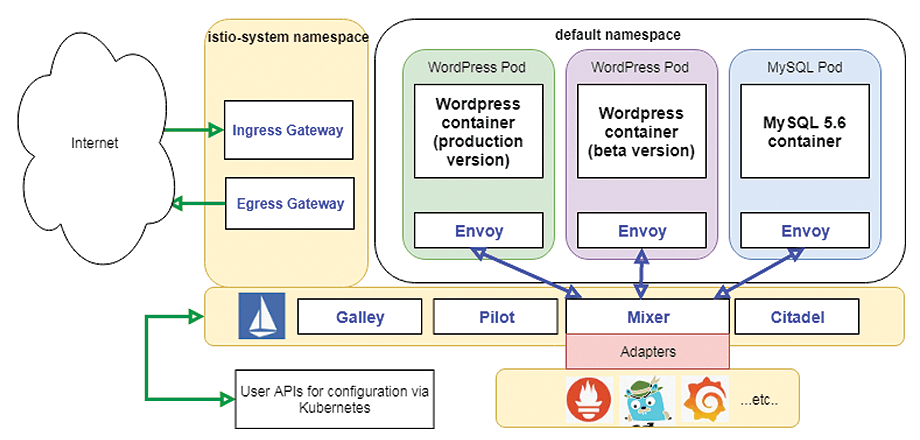

Istio comprises a set of core data plane and control plane components, which it deploys as services in its own namespace; Figure 3 shows them in a data plane/control plane arrangement. Third-party monitoring apps (Prometheus, Grafana, and Jaeger are included in the demos) are connected by means of adapters. The ingress and egress gateways control communications with the outside world.

Envoy [5] is the sidecar proxy that Istio injects into each pod in the application namespace. Envoy provides a comprehensive set of data plane features, including load balancing and weighted routing, dynamic service discovery, TLS termination (meaning services within the application that don't encrypt their traffic can nonetheless enjoy encrypted communication tunneled via Envoy), circuit breaking, health checking, and metrics.
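As an illustration of how Envoy's circuit breaking is exposed through Istio's configuration API, the following sketch caps concurrent connections to a service and temporarily ejects pods that keep returning errors. The service name backend, the rule name, and every threshold value are assumptions chosen for illustration, not settings from the demo in this article; the field names follow the networking.istio.io/v1alpha3 DestinationRule API as of Istio 1.1 and should be checked against the reference for your version.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: backend-circuit-breaker      # hypothetical name
spec:
  host: backend                      # hypothetical service in the same namespace
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 10           # cap on connections to the service (illustrative)
      http:
        http1MaxPendingRequests: 5   # requests allowed to queue for a connection
        maxRequestsPerConnection: 1
    outlierDetection:
      consecutiveErrors: 3           # errors before a pod is ejected from the pool
      interval: 10s                  # how often pods are evaluated
      baseEjectionTime: 30s          # minimum time an ejected pod stays out
      maxEjectionPercent: 50         # never eject more than half of the pods
```

On a mesh that enforces mutual TLS, a rule like this would presumably also need a tls section under trafficPolicy that matches the mesh's authentication policy.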

The Mixer component aggregates the metrics received from all the Envoy proxies and configures and monitors the proxies' enforcement of access control and usage policies.

Pilot translates traffic management and routing configuration into specific configurations for each sidecar. It maintains a "model" of the application/mesh for service discovery (i.e., it allows each sidecar to know what other services are available) and generates and applies secure naming information for each pod that is used to identify what service accounts are allowed to access a given service for authorization purposes.

Citadel provides certificate management and authentication/authorization for users accessing services and for services accessing each other. Citadel monitors the Kubernetes API server and creates certificate/key pairs for each application service, which it stores as Kubernetes secrets and mounts to a service's pods when they start up. The pods then use them to identify themselves as legitimate providers of their service in future requests.

Galley ingests user-provided Istio API configurations, validates those configurations, and propagates them to the other Istio components.

The ingress and egress gateways are the perimeter proxies used for routing and access control of the application's external traffic. For a complete Istio deployment, these are used in place of the default Kubernetes LoadBalancer and NodePort service types.

Installing Istio on Kubernetes

To get started with Istio, you'll simply need a Kubernetes cluster hosting your microservice app and the Kubernetes command-line tool kubectl running locally. To replicate the demo in this article, you need to run kubectl from a terminal on a desktop, because the telemetry functions rely on accessing dashboards in your web browser by means of localhost port forwarding. If you want to play with Istio but don't have an application to use it with, fear not – the Istio download also contains a sample app.

For this demo, however, I want to try out a service mesh on a live website, so I upgraded my free IBM Cloud account to get the full public load balancer option on a fresh two-node Kubernetes cluster. Although the application will ultimately use Istio's ingress gateway, not the standard Kubernetes load balancer, I still need those real public IPs to make this work.

Next, visit Istio's website [4] and click the Get Started button at the bottom to reach the setup/Kubernetes page. Follow the instructions on the Download page to download and extract the latest release into a local directory, named istio-1.1.0 at the time of writing, and cd into that directory. In the website sidebar, click Install | Quick Start Evaluation Install to see how to install Istio in your cluster. The quick-start installation uses a demo profile that installs the full set of Istio services and some third-party monitoring apps, including Jaeger, Grafana, Prometheus, and Kiali. After installing the Custom Resource Definitions into your cluster, choose one of the two demo profile variants, shown as tabs on the quick-start page:

- Permissive mutual TLS – This option allows both plain text and mTLS traffic between services and is a safe choice for trying out Istio in an environment where the services in your cluster need to communicate with external (non-mesh) services that won't be able to participate in mTLS. This manifest can be found at install/kubernetes/istio-demo.yaml.

- Strict mutual TLS – As the name suggests, this option enforces mTLS for all traffic between services. It's safe to use this when all of your application's services reside in the cluster on which you're installing Istio, because each service will have a sidecar and be able to perform mTLS. This manifest is install/kubernetes/istio-demo-auth.yaml.

For production installations, you are encouraged to use one of the Helm-based installation methods, described under the Customizable Install with Helm page in the sidebar. These also allow you to fine-tune the components and settings of your Istio installation by setting options as key/value pairs.

- helm template – generates a manifest.yaml file representing your custom installation. This can then be applied to your cluster with kubectl.

- helm install – deploys one of the supplied Helm charts (modified with any of your chosen options) direct to your cluster via the Tiller service, which has to be running on your cluster. Tiller is the server-side component of Helm, and together they work like a package manager for Kubernetes clusters, where the packages take the form of charts. This setup makes it easy for a team to manage a customized installation of Istio in a production environment. In some contexts, the elevated permissions that Tiller requires are considered a security risk, so another approach to team management of a production cluster configuration would simply be to put all of the required .yaml manifests under normal version control and deploy them with kubectl.
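As a rough sketch of what those key/value options look like, a values file such as the following could be supplied to either Helm command. The file name and the particular selection are assumptions for illustration; the option names are those I believe the Istio 1.1 Helm chart uses, so verify them against the installation options reference for your release.

```yaml
# my-values.yaml (hypothetical file name)
global:
  mtls:
    enabled: true        # enforce mTLS between services mesh-wide
sidecarInjectorWebhook:
  enabled: true          # automatic sidecar injection
grafana:
  enabled: true          # dashboards for metrics
tracing:
  enabled: true          # Jaeger request tracing
kiali:
  enabled: false         # leave out components you don't need
```

Helm accepts such a file through its -f (or --values) option, and individual keys can also be given on the command line with --set, for example --set grafana.enabled=true.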

The demo manifests, combined with automatic sidecar injection, are sufficient in themselves to add value to any Kubernetes-deployed app. Without needing any extra changes or configuration, they will give you interservice authentication and the metrics, telemetry, and third-party apps Jaeger (request tracing), Prometheus (request metrics), and Grafana (time series graphs of metrics) right out of the box; they also define and automatically generate a comprehensive range of metrics (response times, rates of different HTTP response codes, node CPU usage, etc.). They will not implement any ingress gateway functions or any traffic management of inbound requests.

To take full advantage of Istio's features, significant configuration is necessary; the best way to ascend that learning curve is first to complete each of the Tasks listed on the Istio website and then review the Reference section for an understanding of the different object types, configuration options, and commands available (accessible in the sidebar). Covering them all is certainly beyond the scope of this article; instead, the following WordPress example shows how to configure and verify external security, traffic management, mutual authentication, and telemetry features.

For this demo, I installed the strict mutual TLS demo profile with the command:

$ kubectl apply -f install/kubernetes/istio-demo-auth.yaml

This command creates the istio-system namespace and all of the deployments, services, and Istio-specific objects that make up the Istio mesh. The large amount of output generated by this command gives a detailed view of what exactly is being installed into the cluster. After the command has completed, run

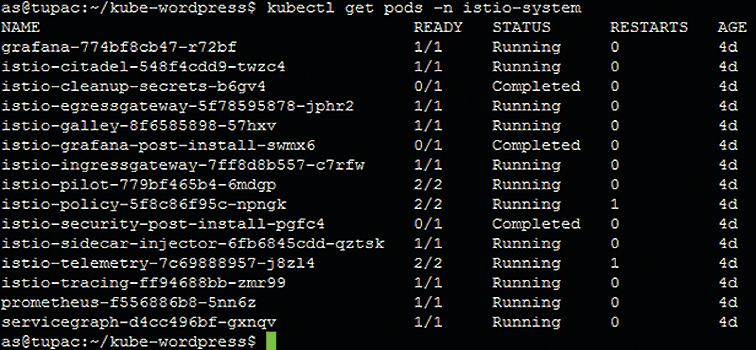

$ kubectl get pods -n istio-system

to check that the containers for Istio's components and third-party apps are running (or, in some cases, have already completed). Figure 4 shows what to expect at this stage. Next, examine Istio's services with

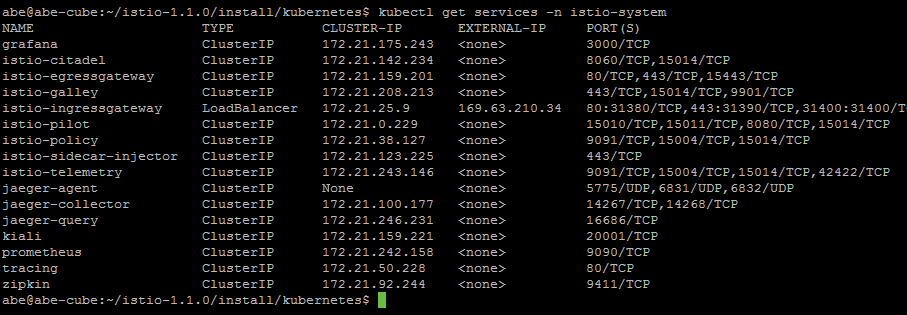

$ kubectl get services -n istio-system

Figure 5 shows the IP addresses and ports for each of Istio's services and the third-party apps installed by the demo manifest. Note the public IP address assigned to istio-ingressgateway; after the ingress gateway is configured, this will be the application's public entry point.

Within the cluster, the web interfaces for the third-party apps (e.g., Grafana) are accessible on the ports listed in Figure 5. To access them from a local machine, the kubectl port forwarding functionality is used, which I demonstrate in the later sections about tracing and metrics.

At this stage, Istio's control plane is fully up and running; however, without a data plane to control, it's useless, so it's time to inject sidecars into the application's pods.

Automatic Sidecar Injection

Making all your pods members of your service mesh is the easiest option (leaving any pods out would somewhat defeat the object of a service mesh, anyway). The demo manifest already enables automatic injection through the setting sidecarInjectorWebhook.enabled: true, which causes a sidecar to be injected into any pod created in a namespace that carries the label istio-injection=enabled. So, you need to add that label to your application namespace (the default namespace, in this case) and then check that the label is present:

$ kubectl label namespace default istio-injection=enabled
$ kubectl get namespace -L istio-injection

It doesn't matter whether you choose to install Istio before or after deploying an application. When Istio is installed, the automatic sidecar injection process ensures that the proxy is injected into every pod at creation time. To enable Istio on a preexisting deployment, you have to delete the existing pods in your default namespace after installing Istio and setting up automatic sidecar injection. When Kubernetes recreates the pods, the sidecar will be present and will start working automatically with the control plane.
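If you keep your cluster configuration under version control, the same label can be declared in a namespace manifest rather than applied by hand. The sketch below assumes a dedicated application namespace called blog, which is a hypothetical name; the demo in this article uses the default namespace instead.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: blog                  # hypothetical application namespace
  labels:
    istio-injection: enabled  # tells the injector webhook to add sidecars to new pods
```

Should a particular pod need to stay outside the mesh, Istio also honors the annotation sidecar.istio.io/inject: "false" in that pod's template metadata.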

Deploying an Application

I picked WordPress as the demonstration application for this article [6], to get a usable result that could be developed in a number of ways. I selected the wordpress:php7.2 and mysql:5.6 images (which I'll call the "production" deployment) from Docker Hub for my containers. To run it as a microservice, I deployed it as shown in Figure 3, separating the deployments of the WordPress web app and its MySQL database, each having their own local storage volume (WordPress needs this for storing media files, themes, plugins, etc.).

To demonstrate Istio's traffic management features, I created a second WordPress deployment that uses the same storage volume as the first, but specifies a different WordPress image version – wordpress:php7.3 (which I call the "prerelease" deployment). Thus, each service is using the same WordPress version, but with a different underlying PHP version.
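A minimal sketch of what the prerelease deployment could look like follows. Only the image tag comes from the setup described above; the object names, the app and version label values, and the volume claim name are assumptions for illustration, and the database connection settings are omitted.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-prerelease       # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress
      version: v2
  template:
    metadata:
      labels:
        app: wordpress             # shared with the production deployment
        version: v2                # distinguishes prerelease from production (v1)
    spec:
      containers:
      - name: wordpress
        image: wordpress:php7.3
        ports:
        - name: http
          containerPort: 80
        volumeMounts:
        - name: wordpress-content
          mountPath: /var/www/html # where the WordPress image keeps its files
      volumes:
      - name: wordpress-content
        persistentVolumeClaim:
          claimName: wordpress-pvc # hypothetical claim shared by both deployments
```

Because both deployments would carry the same app label, a single Kubernetes service selecting on that label covers the pods of both, while the version label lets the mesh tell them apart.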

This (somewhat contrived) example shows how canary testing might be done on a highly available web app. Although it's tangential to the subject of this article, I'd certainly like to share this hard-won piece of knowledge. If you want to run MySQL in a Kubernetes environment, it'll run several times faster if you apply the skip-name-resolve option to your MySQL container, because this will prevent it trying and failing to resolve the hostname of the requesting client service. This can be done using a Kubernetes ConfigMap object to create a custom MySQL configuration file for the database service.
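A sketch of that ConfigMap approach follows, assuming the official mysql image, which reads additional configuration files from /etc/mysql/conf.d. The object and file names are hypothetical.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-config              # hypothetical name
data:
  skip-name-resolve.cnf: |
    [mysqld]
    skip-name-resolve
---
# Fragment of the MySQL deployment's pod template that mounts the file.
# This part is not a complete manifest on its own; merge it into the
# existing deployment rather than applying it as-is.
spec:
  containers:
  - name: mysql
    image: mysql:5.6
    volumeMounts:
    - name: mysql-config
      mountPath: /etc/mysql/conf.d
  volumes:
  - name: mysql-config
    configMap:
      name: mysql-config
```

Bear in mind that with name resolution disabled, MySQL matches account grants against IP addresses only, so grants that name a host other than localhost or a wildcard will stop matching.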

After applying the application manifests with kubectl, I checked that the Istio sidecars were injected and running. On the assumption that your application follows the one-container-per-pod convention, check for the existence of two containers in each pod; you can tell this from the 2/2 values in the second column of Figure 6.

A full description of one of these pods (Figure 7) shows that the Istio sidecar is indeed present:

$ kubectl describe pod wordpress-77f7f9c485-k7tt9

So far, Istio has been installed on the cluster (in its own namespace, istio-system), and the demo application (WordPress with a MySQL back end and some Persistent Volume Claims for content file storage) has been installed in the default namespace. Automatic sidecar injection has been enabled and the pods recreated, so all ingress and egress traffic from each pod is being routed through Istio's Envoy proxies; those proxies are in constant communication with the Istio control plane mother ship. Now, I'll direct the mother ship to manage and observe the pods that make up the application.

Internal Security

In the introduction, key internal security duties of a service mesh were identified as authentication, authorization, and encryption. Istio has a philosophy of "security by default," meaning that these three duties can be fulfilled without requiring any changes to the host infrastructure or application on which Istio is being deployed.

Istio uses mutual TLS (mTLS) as its authentication solution for service-to-service communication. As mentioned in the section on service mesh concepts, in a sidecar service mesh, all requests from one service to another are tunneled through the sidecar proxies. By requiring the proxies to perform mTLS handshakes with one another before exchanging application data and by making them verify each other's certificate with a trusted store (Istio Citadel) as part of that process, authentication is achieved.

To make this work, every Envoy proxy created in an Istio service mesh has a key and certificate installed. The certificates encode a service account identity correlated with the cluster hostname or DNS name of the service that they represent. This is referred to as secure naming, and a secure naming check forms a part of the mTLS handshake process.

When you deploy Istio with the istio-demo-auth.yaml manifest supplied with the Istio download, mTLS is enabled mesh-wide. Depending on your requirements, you can also enable it on a per-namespace or per-service basis. There are two facets to configuring mTLS: configuring the way that incoming requests should be handled, by means of authentication policies, and configuring the way outgoing requests should be generated, by using DestinationRule objects.

For incoming requests, the MeshPolicy object configures a mesh-wide authentication policy; the Policy object configures it within a given namespace, which can be configured down to the service level by using target selectors within a policy configuration (Listing 1). This is the .yaml file you would have to apply to enable mTLS mesh-wide (and is already a part of the istio-demo-auth.yaml installation used in this article). For outgoing requests, the DestinationRule objects specify the traffic policy TLS mode (Listing 2).

Listing 1: Configuring Incoming Requests

apiVersion: "authentication.istio.io/v1alpha1"
kind: "MeshPolicy"
metadata:
  name: "default"
spec:
  peers:
  - mtls: {}

Listing 2: Configuring Outgoing Requests

apiVersion: "networking.istio.io/v1alpha3"
kind: "DestinationRule"
metadata:
  name: "mtls-rule"
spec:
  host: "httpbin.default.svc.cluster.local"
  trafficPolicy:
    tls:
      mode: ISTIO_MUTUAL
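For comparison, a namespace- or service-scoped policy uses the Policy kind in place of MeshPolicy. The following sketch, which assumes the same authentication.istio.io/v1alpha1 API as Listing 1, requires mTLS for a single service named wordpress in the default namespace; the policy name is hypothetical.

```yaml
apiVersion: "authentication.istio.io/v1alpha1"
kind: "Policy"
metadata:
  name: "wordpress-mtls"      # hypothetical name
  namespace: "default"
spec:
  targets:
  - name: wordpress           # omit targets (and name the policy "default")
                              # to cover every service in the namespace
  peers:
  - mtls: {}                  # "mtls: {mode: PERMISSIVE}" would also accept plain text
```

Any destination rule that routes to the targeted service then has to agree with the policy, as in Listing 2.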

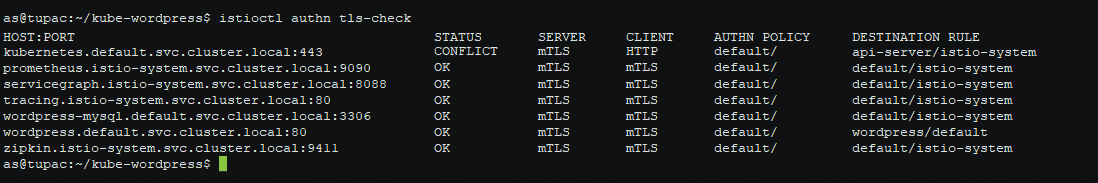

Having applied an authentication policy and destination rules for your desired mTLS setup, use the istioctl CLI tool to check the authentication status of all services. The check verifies that every destination rule is compatible with the policy applied to the service to which it routes and reports a CONFLICT status for any that are not; it also shows the authentication policy and destination rule that are currently in effect on each service.

If, for example, an authentication policy requires a service to accept only mTLS requests, but the destination rules pointing to that service specify mode: DISABLE, its status will be CONFLICT, and, as you would hope, any requests to it will fail with a 503 status code. Even with the demo profiles supplied by Istio, some control plane services are in a conflict status. I didn't fully investigate why this was the case, because it didn't affect the features I was using. However, if any of your own application's services show a conflict, it's certainly something you need to address. Run

$ istioctl authn tls-check <pod name>

to see that mTLS is successfully configured on all of your services (Figure 8).

External Security and Traffic Management

In this example, I'll demonstrate configuring HTTPS (after all, I want to end up with a legitimate website) and weighted routing between my WordPress versions for the purpose of canary testing. It's easy to do this by configuring a Gateway and a VirtualService object on istio-ingressgateway.

Figure 5 shows that istio-ingressgateway has been assigned one of the real public IPs from my IBM Cloud Kubernetes Service cluster. As with any service providing a secure website, a valid SSL certificate and key are required. I am going to call this website blog.datadoc.info. To generate an SSL certificate and key for this name, I pointed this subdomain at another server running httpd, along with the Let's Encrypt certbot program, and generated a free certificate and private key. I copied the certificate and key to my local machine and then used kubectl to upload them to my cluster as a named secret:

$ kubectl create -n istio-system secret tls istio-ingressgateway-certs --key privkey.pem --cert fullchain.pem

Following the examples on the Istio website, I created a manifest for the ingress gateway, specifying to which services it should direct inbound traffic (Listing 3). The code creates three Istio objects: Gateway, VirtualService, and DestinationRule.

Listing 3: wp-istio-ingressgw.yaml

01 apiVersion: networking.istio.io/v1alpha3
02 kind: Gateway
03 metadata:
04   name: wordpress-gateway
05 spec:
06   selector:
07     istio: ingressgateway
08   servers:
09   - port:
10       number: 443
11       name: https
12       protocol: HTTPS
13     tls:
14       mode: SIMPLE
15       serverCertificate: /etc/istio/ingressgateway-certs/tls.crt
16       privateKey: /etc/istio/ingressgateway-certs/tls.key
17     hosts:
18     - "blog.datadoc.info"
19 ---
20 apiVersion: networking.istio.io/v1alpha3
21 kind: VirtualService
22 metadata:
23   name: wordpress
24 spec:
25   hosts:
26   - "blog.datadoc.info"
27   gateways:
28   - wordpress-gateway
29   http:
30   - route:
31     - destination:
32         subset: v1
33         host: wordpress
34       weight: 90
35     - destination:
36         subset: v2
37         host: wordpress
38       weight: 10
39 ---
40 apiVersion: networking.istio.io/v1alpha3
41 kind: DestinationRule
42 metadata:
43   name: wordpress
44 spec:
45   trafficPolicy:
46     tls:
47       mode: ISTIO_MUTUAL
48   host: wordpress
49   subsets:
50   - name: v1
51     labels:
52       version: v1
53   - name: v2
54     labels:
55       version: v2

Gateway is bound to the ingressgateway service by a selector, configuring it as an HTTPS server with the certificate and key that were uploaded earlier. It specifies that this gateway is only for requests sent to the hostname blog.datadoc.info and will prevent users from making requests direct to the IP address.

VirtualService routes traffic to the WordPress service, which is listening internally on port 80.

DestinationRule specifies that requests routed via these rules should use mTLS authentication. These traffic rules have to match the existing mTLS policy within the mesh, which (because I used the istio-demo-auth.yaml installation option) does require authentication for all requests. Failure to specify it here would cause an authentication conflict, as explained in the "Internal Security" section of this article.

DestinationRule also defines two named subsets of the WordPress service. Each subset specifies a different version of the WordPress container to use. The version: labels in this definition correspond to the version: labels in the template specification of the WordPress app containers that I deployed earlier.
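Weights are not the only routing criterion available. As a sketch of an alternative to the split in Listing 3, the VirtualService could send only requests carrying a particular header to the prerelease subset and everything else to production. The header name x-prerelease is an assumption made up for this example; the rest reuses the names from Listing 3.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: wordpress
spec:
  hosts:
  - "blog.datadoc.info"
  gateways:
  - wordpress-gateway
  http:
  - match:
    - headers:
        x-prerelease:          # hypothetical header set by testers
          exact: "true"
    route:
    - destination:
        host: wordpress
        subset: v2             # prerelease
  - route:                     # default route for all other requests
    - destination:
        host: wordpress
        subset: v1             # production
```

The http rules are evaluated in order, so the unconditional route has to come last.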

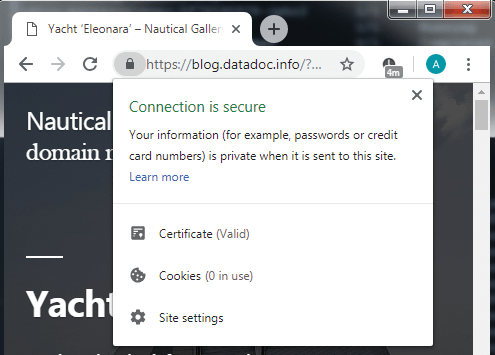

Check the Application

The acceptance test is simple: Browse to the configured hostname and check that a functioning WordPress site with a valid SSL certificate is available. For this demo, I downloaded a selection of public domain artwork related to ships and created a gallery of nautical artwork with a valid SSL certificate (Figure 9).

Distributed Tracing with Jaeger

Istio's telemetry functions are broadly divided into tracing (to see how individual requests behave) and metrics (statistics based on the outcomes of a large number of requests). Istio 1.1 introduced default metrics for TCP connections between services, in addition to the metrics for HTTP and gRPC communication that were generated by previous versions. This new default is useful here, because the requests from the WordPress apps to the MySQL database do not use HTTP. For more interesting traces, I created another simple service that would generate a Hello World-type message string in response to a curl request made by a WordPress plugin, to create internal HTTP traffic.

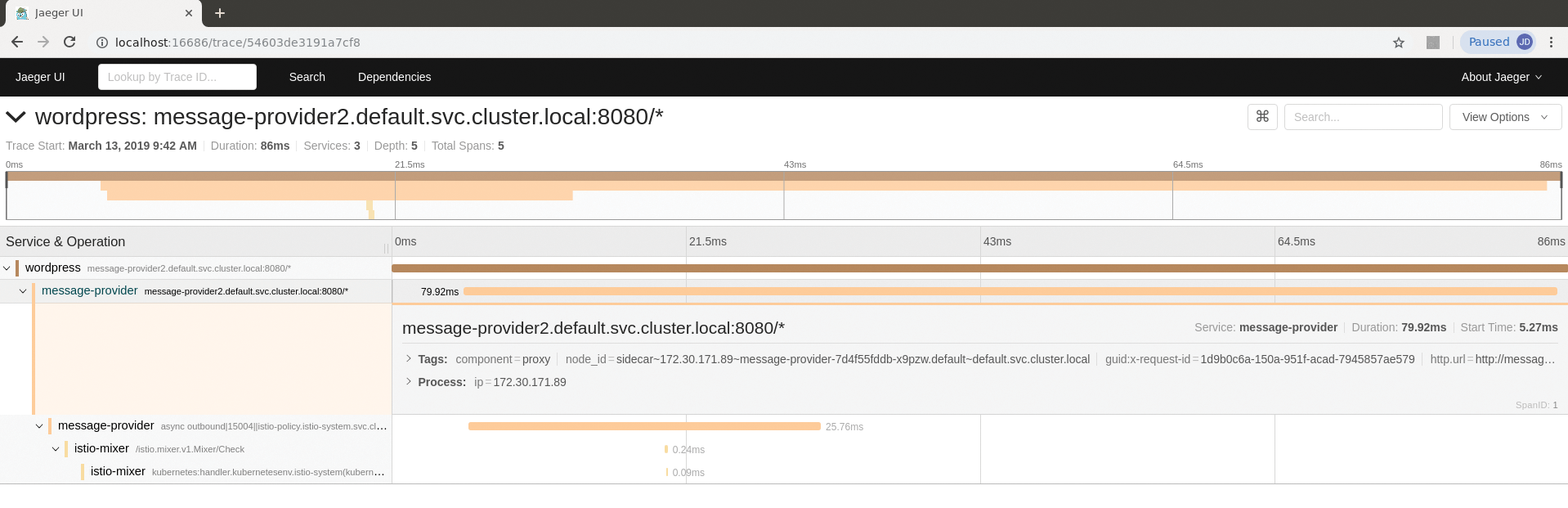

Istio generates tracing information by correlating spans together, a span being a request from one service to another. The sidecar proxies send the details of each span to Istio Mixer, which relies on HTTP header information, propagated from one span to another, to create a trace from related spans. The results of this can be viewed in the Jaeger app that's bundled with the Istio demo deployments. To access this, set up a port forward for Jaeger from kubectl on your local machine:

$ kubectl port-forward -n istio-system $(kubectl get pod -n istio-system -l app=jaeger -o jsonpath='{.items[0].metadata.name}') 16686:16686 &

Next, browse to localhost:16686 to access the Jaeger dashboard, and apply the service and time-based filters on the left-hand side to see spans of interest. Figure 10 shows a trace in which the WordPress service accesses the message-provider service, along with timing and response information for each.

Viewing Metrics in Grafana

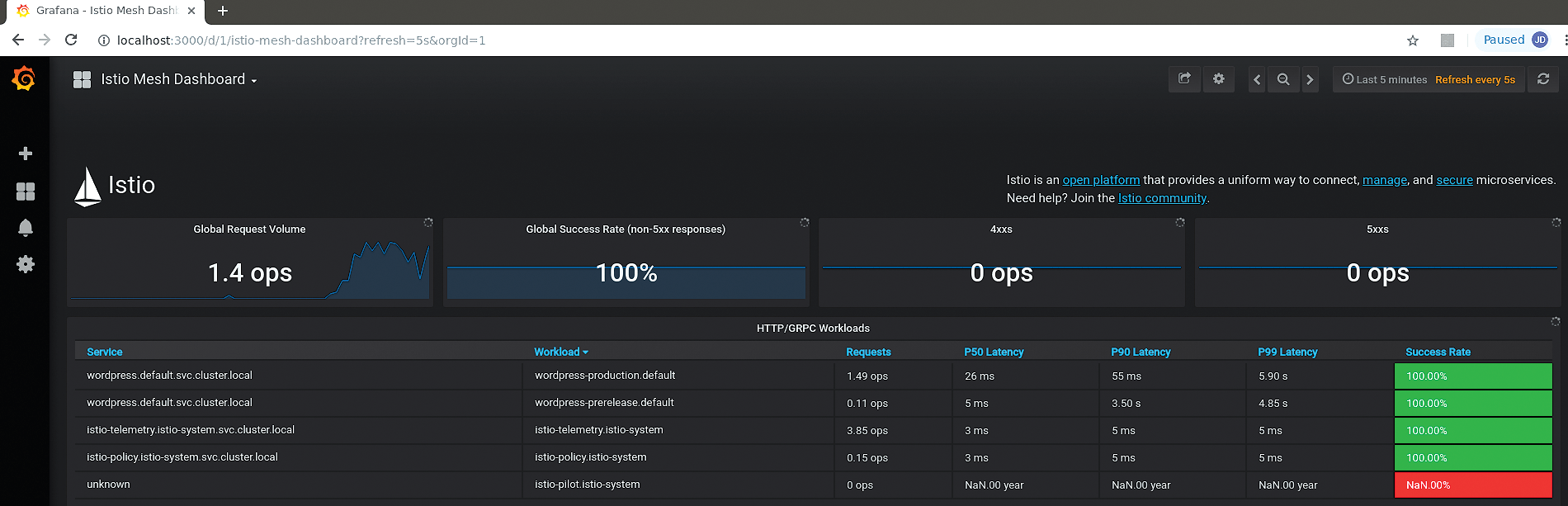

Grafana is an open source suite of tools for producing (primarily) time-based graphs, which lends itself to producing visualizations of all sorts of services. It is the default front end for Istio's telemetry system. The Grafana app that ships with Istio has a selection of preconfigured dashboards and common metrics that are generated automatically for each service in the application – all you need to do is make sure your Kubernetes services and deployments have named ports in their configurations; otherwise, Istio might not collect metrics. Because the demo website is publicly accessible, it's easy to generate some load with external tools for interesting graphs. I ran curl commands within loops in a Bash script to load up the website and then watched what was going on via the mesh and service dashboard graphs, which auto-update every few seconds.
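The port-naming requirement can be sketched as follows. The service names and labels are assumptions based on the demo application; the convention that a port name begins with the protocol (http, tcp, and so on), optionally followed by a hyphen and a suffix, is Istio's.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: wordpress
spec:
  selector:
    app: wordpress        # hypothetical label
  ports:
  - name: http            # protocol prefix lets Istio treat this as HTTP traffic
    port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  selector:
    app: mysql            # hypothetical label
  ports:
  - name: tcp-mysql       # non-HTTP traffic is named with the tcp prefix
    port: 3306
    targetPort: 3306
```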

To connect to the Grafana dashboards for your Istio service mesh, use the kubectl port forwarding service, so you can tunnel localhost requests to the Grafana service running in your istio-system namespace:

$ kubectl -n istio-system port-forward $(kubectl -n istio-system get pod -l app=grafana -o jsonpath='{.items[0].metadata.name}') 3000:3000 &

Now, start a browser and point it at localhost:3000. The various dashboards, metrics, and display options are easy to navigate. In the routing rules for the VirtualService configured in Listing 3, a 90:10 split (lines 34 and 38) between the production and prerelease versions of the WordPress deployment was specified, and that is roughly reflected in the ops rates shown in the Requests column (Figure 11) for the two WordPress services – 1.49 and 0.11 ops, respectively, for the loading script I used. Selecting any of the services from the list on the main Mesh Dashboard page gives you a more detailed insight into the metrics for that service.

Conclusion

A service mesh decouples microservices from the underlying TCP/IP network, freeing developers from low-level interservice traffic concerns and providing opportunities to increase security, resilience, control, and observability of a microservice application. Istio is a comprehensive open source service mesh that's easy to deploy in a new or established Kubernetes cluster. Although its full-feature set cannot be covered in a single article, I hope I've given you enough here to pique your interest and show you how to take the first steps toward discovering the benefits possible with Istio.