Automated OpenStack instance configuration with cloud-init and metadata service

Cloud Creator

OpenStack has been constantly improved to meet the requirements of the demanding virtualization and cloud computing market. To compete with rival cloud solutions, OpenStack developers have implemented a number of improvements and features in automation, orchestration, and scalability. The metadata service, working together with cloud-init, deserves special attention.

The administration of large-scale production cloud environments requires the management of dozens of customers' virtual servers on a daily basis. Manually configuring many newly created instances in the OpenStack cloud would be a real pain for cloud administrators. Of course, you could use popular automation tools (e.g., Ansible, Chef, Puppet) to perform post-installation configuration on the virtual machines, but that still requires additional effort and resources to create the master server, templates, manifests, and playbooks and to build a host inventory or set up communication with the virtual hosts. That's where the metadata and cloud-init duo comes in.

Metadata Service

The OpenStack metadata service usually runs on a controller node in a multinode environment and is accessible by the instances running on the compute nodes to let them retrieve instance-specific data (e.g., an IP address or hostname). Instances access the metadata service at http://169.254.169.254. The HTTP request from an instance hits either the router namespace or the DHCP namespace, depending on the route in the instance. The metadata proxy service adds the instance IP address and router ID information to the request and then sends it to the neutron-metadata-agent service, which forwards the request to the Nova metadata API service (nova-api-metadata server daemon) after adding some new headers (e.g., the instance ID). The metadata service supports two sets of APIs: an OpenStack metadata API and an EC2-compatible API.

To retrieve a list of supported versions for the OpenStack metadata API from the running instance, enter:

$ curl http://169.254.169.254/openstack
2012-08-10
2013-04-04
2013-10-17
2015-10-15
2016-06-30
2016-10-06
2017-02-22

To retrieve a list of supported versions for the EC2-compatible metadata API, enter:

$ curl http://169.254.169.254
1.0
2007-01-19
2007-03-01
2007-08-29
2007-10-10
2007-12-15
2008-02-01
2008-09-01
2009-04-04
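The version lists above can be used to build the URL of the instance's metadata document. The following is a minimal sketch, assuming the OpenStack API serves its JSON document at openstack/<version>/meta_data.json; the helper names pick_newest_version and metadata_json_url are hypothetical and exist only for this illustration:

```shell
#!/bin/sh
# Address of the metadata service as seen from inside an instance.
METADATA_URL="http://169.254.169.254"

# Read a version list (one entry per line) on stdin and print the newest
# dated version; non-date entries such as "latest" are ignored.
pick_newest_version() {
    grep -E '^[0-9]{4}-[0-9]{2}-[0-9]{2}$' | sort | tail -n 1
}

# Print the URL of the meta_data.json document for the given version.
metadata_json_url() {
    printf '%s/openstack/%s/meta_data.json\n' "$METADATA_URL" "$1"
}

# On a running instance (not executed here):
#   version=$(curl -s "$METADATA_URL/openstack" | pick_newest_version)
#   curl -s "$(metadata_json_url "$version")"
```

Because the versions are ISO-style dates, a plain lexical sort is enough to find the newest one.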

The cloud-init package installed on an instance contains utilities for its early initialization according to the instance data. Instance data is a collection of configuration data usually provided by the metadata service, or it can be provided by a user data script or configuration drive attached to the instance during its creation.

Getting the Image with cloud-init

Assuming you already have access to the tenant in an OpenStack environment, you can easily download a ready-to-use OpenStack QCOW2 image that includes cloud-init from the official image repository website [1].

All the images from the official OpenStack website have a cloud-init package installed and the corresponding service enabled, so it will start on an instance boot and fetch instance data from the metadata service, provided the service is available in the cloud. Once launched and running, instances can be accessed via SSH with their default login, given on the website, and passwordless key-based authentication.

To download a CentOS 7 QCOW2 image to your controller node, enter:

[root@controller ~]# wget http://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud-1808.qcow2

Because you are going to use the image in one tenant only, you can upload it as a private image available exclusively in your project. First, you need to import your tenant/project credentials by sourcing a Keystone credentials file, which sets the environment variables required to access the project resources:

[root@controller ~]# source keystonerc_gjuszczak
[root@controller ~(keystone_gjuszczak)]#
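A credentials file of this kind is usually just a list of exported OS_* variables read by the openstack client. The sketch below assumes Identity API v3; the password, project, domain names, and auth URL are placeholders and not values taken from this environment:

```shell
# Hypothetical keystonerc_gjuszczak; every value below is a placeholder.
export OS_USERNAME=gjuszczak
export OS_PASSWORD='change-me'
export OS_PROJECT_NAME=gjuszczak
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3

# Optional: mark the prompt so the active credentials are visible.
export PS1='[\u@\h \W(keystone_gjuszczak)]\$ '
```

A PS1 line like the last one would explain why the prompt changes after sourcing the file.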

Now, you should import your previously downloaded QCOW2 image to Glance so it is available for instances launching within your project (Listing 1).

Listing 1: Importing QCOW2 Image

[root@controller ~(keystone_gjuszczak)]# openstack image create --private --disk-format qcow2 --container-format bare --file CentOS-7-x86_64-GenericCloud-1808.qcow2 CentOS_7_cloud_init

Launching with cloud-init

To create a CentOS_7_CI instance based on a CentOS_7_cloud_init QCOW2 image, enter:

[root@controller ~(keystone_gjuszczak)]# openstack server create --flavor m2.tiny --image CentOS_7_cloud_init --nic net-id=int_net --key-name cloud --security-group default CentOS_7_CI

Next, assign a floating IP to the instance, so you can access it from an external public network:

[root@controller ~(keystone_gjuszczak)]# openstack server add floating ip CentOS_7_CI 192.168.2.235
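When these steps are scripted, it is usually safest to wait until the instance reports ACTIVE before assigning the floating IP. The following is a minimal sketch of a polling helper; wait_for_status is a hypothetical name, and the usage line assumes the -f value -c status output options of the openstack client:

```shell
#!/bin/sh
# Run a status command repeatedly until it prints the wanted value.
# Arguments: wanted value, number of attempts, delay in seconds, command...
# Returns 0 on a match and 1 when the attempts are exhausted.
wait_for_status() {
    local wanted="$1" attempts="$2" delay="$3" status
    shift 3
    while [ "$attempts" -gt 0 ]; do
        # A failing status command is treated as "no status yet".
        status=$("$@") || status=""
        if [ "$status" = "$wanted" ]; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep "$delay"
    done
    return 1
}

# On the controller (not executed here):
#   wait_for_status ACTIVE 30 5 openstack server show CentOS_7_CI -f value -c status \
#       && openstack server add floating ip CentOS_7_CI 192.168.2.235
```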

As mentioned before, the default user for this particular image, centos, has no password set, and root access for the image is restricted, so you need to access the instance with cloud.key from the key pair used on instance creation:

$ ssh -i ~/.ssh/cloud.key centos@192.168.2.235

After accessing the instance, it's vital to check whether the cloud-init service is running:

[centos@centos-7-ci ~]$ sudo systemctl status cloud-init

Then, you should analyze cloud-init.log to verify what modules were run on instance startup:

[centos@centos-7-ci ~]$ sudo cat /var/log/cloud-init.log

Listing 2 shows an example snippet from cloud-init.log that presents some modules executed during boot. From the listing you can find out that cloud-init has adjusted the instance partition size to the storage capacity as defined through the flavor setting (see the OpenStack documentation for more on flavor, which defines the compute, memory, and storage capacity). cloud-init has also resized the filesystem to fit the partition and set the hostname according to the instance name in the cloud.

Listing 2: cloud-init.log Snippet

...
2018-10-09 20:42:56,669 - handlers.py[DEBUG]: finish: init-network/config-growpart: SUCCESS: config-growpart ran successfully
2018-10-09 20:42:57,666 - handlers.py[DEBUG]: finish: init-network/config-resizefs: SUCCESS: config-resizefs ran successfully
2018-10-09 20:43:00,261 - handlers.py[DEBUG]: finish: init-network/config-set_hostname: SUCCESS: config-set_hostname ran successfully
...
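Reading the whole log can be tedious on a busy instance. The following is a minimal sketch of a filter that prints only the names of modules that finished successfully. It assumes the log line format shown in Listing 2, and list_successful_modules is a hypothetical helper name:

```shell
#!/bin/sh
# Read cloud-init log lines on stdin and print the name of every module
# whose "finish: <stage>/config-<module>: SUCCESS" line appears.
list_successful_modules() {
    sed -n 's|.*finish: [^:]*/config-\([^:]*\): SUCCESS.*|\1|p'
}

# On an instance (not executed here):
#   sudo cat /var/log/cloud-init.log | list_successful_modules
```

Applied to the three lines of Listing 2, the filter prints growpart, resizefs, and set_hostname, one per line.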

There is one thing worth mentioning here: The centos-7-ci hostname visible in the command prompt is derived from the instance name, CentOS_7_CI, which was set when the instance was created. This is a typical example of how the metadata service works: The hostname is delivered to the instance OS on the basis of the instance name in OpenStack. Exposing such metadata-based values (hostname, instance ID, display name, etc.) to virtual hosts is one of the improvements offered by the metadata service. When creating hundreds of instances in the tenant, you don't need to set the hostname for each one separately; OpenStack does it automatically, saving you time.

cloud-init Config File

Five stages of cloud-init are integrated into the boot procedure:

1. Generator. In this stage, the cloud-init service is enabled by the systemd unit generator.

2. Local. The purpose of this stage is to locate local data sources (i.e., user data config files and scripts) and apply networking configuration to the system, including possible fallback to DHCP discovery.

3. Network. With networking configured on an instance, this stage runs the disk_setup and mounts modules and configures mount points.

4. Config. This stage runs modules included in the cloud_config_modules section of the cloud.cfg file.

5. Final. Executed as late as possible, this stage runs package installations and user scripts.

The main cloud-init configuration is included in the /etc/cloud/cloud.cfg file, which by default comprises the following sections:

- cloud_init_modules (e.g., growpart, resizefs, set_hostname, ssh)

- cloud_config_modules (e.g., mounts, set-passwords, package-update-upgrade-install)

- cloud_final_modules (e.g., scripts-per-instance, scripts-user, power-state-change)

- system_info (e.g., default_user, distro, paths)

As you have probably noticed, the names of the module sections largely correspond to the mentioned cloud-init boot stages. What is most important here is that the cloud_final_modules section contains the scripts-user task, which allows you to inject customized configuration data to the instance during the final cloud-init stage.
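To see which modules a given section enables on a particular image, you can list the entries under that section. The following is a minimal sketch, assuming each module appears as a simple "- name" list item below a top-level section key; list_section_modules is a hypothetical helper and not part of cloud-init:

```shell
#!/bin/sh
# Print the list items found under one top-level section of a cloud.cfg file.
# Arguments: section name (e.g., cloud_final_modules), path to the file.
list_section_modules() {
    awk -v section="$1" '
        # Section header: start collecting list items.
        $0 == (section ":") { in_section = 1; next }
        # List item inside the section: strip the "- " prefix and print.
        in_section && /^[[:space:]]*-[[:space:]]/ {
            sub(/^[[:space:]]*-[[:space:]]*/, "")
            print
            next
        }
        # Any other top-level key ends the section.
        in_section && /^[^[:space:]#]/ { in_section = 0 }
    ' "$2"
}

# On an instance (not executed here):
#   list_section_modules cloud_final_modules /etc/cloud/cloud.cfg
```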

Passing User Data to the Instance

User data can be passed to the instance by adding it as a parameter during instance launch. A number of file types are supported:

- GZIP-compressed content

- MIME multipart archive

- User data script

- cloud-config file

- Upstart job

- Cloud boothooks

- Part handler (contains custom code for new MIME types in multipart user data)
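cloud-init tells most of these formats apart by the first line of the user data. The following is a minimal sketch of that idea, covering only the text-based formats; user_data_type is a hypothetical helper, and GZIP-compressed content, which is detected from its binary header, is reported as unknown here:

```shell
#!/bin/sh
# Guess the user data format of a file from its first line.
user_data_type() {
    local first
    first=$(head -n 1 "$1")
    case $first in
        '#cloud-config'*)            echo cloud-config ;;
        '#!'*)                       echo script ;;
        '#cloud-boothook'*)          echo boothook ;;
        '#upstart-job'*)             echo upstart-job ;;
        '#part-handler'*)            echo part-handler ;;
        'Content-Type: multipart/'*) echo mime-multipart ;;
        *)                           echo unknown ;;
    esac
}

# Example (not executed here):
#   user_data_type user_data.sh
```

A check like this can catch a missing header line before an instance is launched with a file that cloud-init would otherwise ignore.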

In the example here, I present the most common file types used in OpenStack for user data injection – that is, the cloud-config file and user data scripts.

cloud-config File

The cloud-config file is most likely the fastest and easiest way to pass user data to an instance. It has a clear, human-readable format and basically the same syntax as the cloud.cfg configuration file. Listing 3 shows an example cloud-config file that creates an additional user, executes a command early in the boot process, upgrades the OS, and finally installs additional packages. Note that a cloud-config file must use valid YAML syntax and must begin with #cloud-config; otherwise, cloud-init will not recognize the file.

Listing 3: cloud-config File

#cloud-config

# create additional user
users:
  - default
  - name: test
    gecos: Test User
    sudo: ALL=(ALL) NOPASSWD:ALL

# run command early in the boot process (bootcmd runs on every boot)
bootcmd:
  - echo 192.168.2.99 myhost >> /etc/hosts

# upgrade OS on first boot
package_upgrade: true

# install additional packages
packages:
  - vim
  - epel-release

To launch an instance with cloud-config user data, you need to modify your command slightly by adding the user-data parameter:

[root@controller ~(keystone_gjuszczak)]# openstack server create --flavor m2.tiny --image CentOS_7_cloud_init --nic net-id=int_net --key-name cloud --security-group default --user-data cloud-config CentOS_7_CI_CC

As soon as the instance is up and running and a floating IP is assigned, you can log in and monitor the cloud-init log (Listing 4) – probably while some cloud-config tasks are still running.

Listing 4: Sample cloud-init Log

[centos@centos-7-ci-cc ~]$ sudo tail -f /var/log/cloud-init.log
...
2018-10-17 21:54:06,826 - helpers.py[DEBUG]: Running config-package-update-upgrade-install using lock (<FileLock using file '/var/lib/cloud/instances/21697d2c-fcdd-4c36-ba88-275b78e546a7/sem/config_package_update_upgrade_install'>)
...
2018-10-17 21:54:06,988 - helpers.py[DEBUG]: Running update-sources using lock (<FileLock using file '/var/lib/cloud/instances/21697d2c-fcdd-4c36-ba88-275b78e546a7/sem/update_sources'>)
...
2018-10-17 21:54:07,006 - util.py[DEBUG]: Running command ['yum', '-t', '-y', 'makecache'] with allowed return codes [0] (shell=False, capture=False)
...
2018-10-17 22:00:06,125 - util.py[DEBUG]: Running command ['yum', '-t', '-y', 'upgrade'] with allowed return codes [0] (shell=False, capture=False)

If you are not sure what kind of user data has been injected into the instance, you can always display the contents of the /var/lib/cloud/instance/ directory, which includes the user data in the user-data.txt file, as well as the time the final stage ended, stored in the boot-finished file.
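The boot-finished file also gives scripts a simple way to tell whether cloud-init has completed its final stage. The following is a minimal sketch, assuming the instance data lives under /var/lib/cloud/instance; cloud_init_finished is a hypothetical helper name:

```shell
#!/bin/sh
# Succeed if cloud-init has written its boot-finished marker.
# Argument: instance data directory (defaults to /var/lib/cloud/instance).
cloud_init_finished() {
    local instance_dir="${1:-/var/lib/cloud/instance}"
    [ -f "$instance_dir/boot-finished" ]
}

# On an instance (not executed here):
#   if cloud_init_finished; then
#       sudo cat /var/lib/cloud/instance/user-data.txt
#   fi
```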

User Data Script

A Bash script is another common way to inject user data into an instance. The file must be a valid Bash script, so it must begin with a #!/bin/bash line. For example, Listing 5 shows an instance configuration input file run on boot that installs two RPM packages, enables the Apache HTTP service, and creates the index.html file in the server's root directory.

Listing 5: user_data.sh

#!/bin/bash

# install additional packages
sudo yum install -y httpd vim

# enable httpd service
sudo systemctl enable httpd
sudo systemctl start httpd

# create file in http document root
sudo echo "<p>hello world</p>" > /var/www/html/index.html

The command to launch an instance with a user data Bash script would look like Listing 6.

Listing 6: Launching Instance with Bash Script

[root@controller ~(keystone_gjuszczak)]# openstack server create --flavor m2.tiny --image CentOS_7_cloud_init --nic net-id=int_net --key-name cloud --security-group default --user-data user_data.sh CentOS_7_CI_UD

Image Metadata Properties

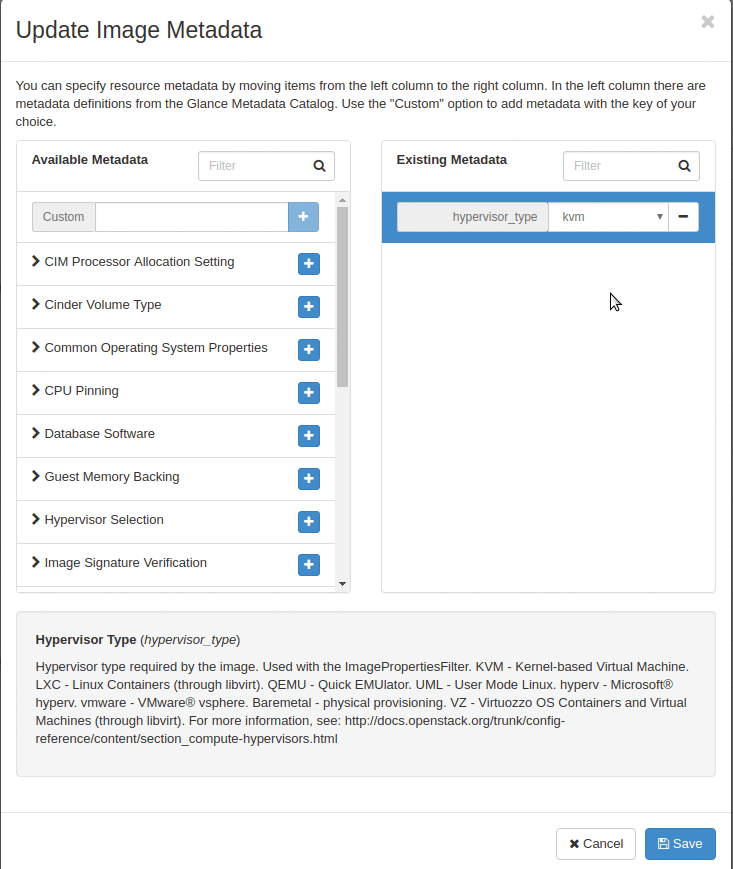

Images can also carry metadata properties that describe the purpose and nature of a particular image. OpenStack offers a variety of image properties that can be set for this purpose (e.g., CPU pinning, compute CPU architecture, or the Cinder volume type required by the image).

Figure 1 shows the image properties configuration screen with the hypervisor_type parameter set to kvm, which means that an instance launched from this particular image requires a KVM-based compute node. An attempt to boot the instance on any other type of compute node (e.g., an LXC- or Xen-based node) results in an error.

You can also set an image property from the command line with the --property <key>=<value> parameter. For example,

[root@controller ~(keystone_admin)]# openstack image set --property hypervisor_type=kvm cirros-0.4.0

sets the hypervisor_type parameter to kvm.

Passing Metadata with a Config Drive

You can force OpenStack to write instance metadata to a special drive called a configuration drive, or simply config drive, that is attached to the instance during the boot process.

Thanks to the cloud-init script, a config drive is automatically mounted on the instance's OS, and its files can be read easily, as if they were coming from the metadata service. With a config drive, you can pass different user data formats, as well as ordinary files, to the instance. The main requirement for using a config drive is passing the --config-drive true parameter in the openstack server create command. You can also configure the compute node always to use a config drive by setting the following parameter in /etc/nova/nova.conf:

force_config_drive = true

The example command in Listing 7 enables a config drive and passes a previously created user data script and two files to the instance on boot. The first --file replaces the original /etc/hosts with a file with new content, and the second --file copies content to the /tmp directory on the instance:

Listing 7: Using a Config Drive

[root@controller ~(keystone_gjuszczak)]# openstack server create --config-drive true --flavor m2.tiny --image CentOS_7_cloud_init --nic net-id=int_net --key-name cloud --security-group default --user-data user_data.sh --file /etc/hosts=/root/hosts --file /tmp/example_file.txt=/root/example_file.txt CentOS_7_CI_CD
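If you want to inspect the config drive by hand from inside the instance, you can locate and mount it yourself. The following is a minimal sketch, assuming the drive carries the filesystem label config-2 and is visible under /dev/disk/by-label; find_config_drive is a hypothetical helper, and /mnt/config is an arbitrary mount point:

```shell
#!/bin/sh
# Print the device path of the config drive, or fail if none is attached.
# Argument: directory of by-label links (defaults to /dev/disk/by-label).
find_config_drive() {
    local label_dir="${1:-/dev/disk/by-label}"
    if [ -e "$label_dir/config-2" ]; then
        printf '%s\n' "$label_dir/config-2"
    else
        return 1
    fi
}

# On an instance, as root (not executed here):
#   dev=$(find_config_drive) && mkdir -p /mnt/config && mount -o ro "$dev" /mnt/config
#   cat /mnt/config/openstack/latest/meta_data.json
#   cat /mnt/config/openstack/latest/user_data
```

The directory layout on the drive mirrors the paths served by the metadata service, so the same files can be read in both cases.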

The Future of Cloud Administration

The cloud-init script brings the cloud computing experience to a new level. Today, the administration and operation of a cloud infrastructure wouldn't make sense without automation mechanisms like cloud-init working together with the central instance configuration database (i.e., the metadata service). Cloud-init allows you to configure a large number of virtual machines in the cloud, coming from different projects, with just a few previously prepared instance configuration data files. The number of user data input file formats and the variety of methods available to pass user data to an instance make this automation script a powerful and flexible tool that aids OpenStack administrators in their daily work. Furthermore, cloud-init is available for many cloud platforms besides OpenStack (e.g., Amazon EC2, Microsoft Azure, VMware, Apache CloudStack), which makes it a leading choice for instance configuration across multiple cloud technologies.