The AWS CDK for software-defined deployments

Dreaming of Clouds

The financial benefits of the cloud seem quite obvious on the surface. One powerful point that deserves not only emphasis but in-depth analysis is software-defined deployments. With the advent of cloud platforms, API-driven services and infrastructure are now the norm, not the exception, making it possible not only to automate the packaging and deployment of the software, but to provision the infrastructure that hosts the applications deployed. Although tools like Amazon Web Services (AWS) CloudFormation and HashiCorp's Terraform are heavyweights, I really think the AWS Cloud Development Kit (CDK) deserves a serious look as a strong contender in this arena.

Bring on the Clouds!

Every major cloud provider brings benefits beyond the immediately obvious financial ones, such as the as-a-service offerings. For example, the Kubernetes-as-a-service offerings, such as Google Kubernetes Engine [1], Amazon Elastic Container Service for Kubernetes (EKS) [2], and Microsoft Azure Kubernetes Service (AKS) [3], make it possible to provision immensely complex orchestration platforms and tools with point-and-click web interfaces or a simple command-line invocation. In this dimension, many benefits that might not be immediately obvious are worth mentioning:

- Cost optimization and savings through reduction of labor. Working from the Kubernetes-as-a-service example, the time saved in not having to learn how to architect and deploy a multimaster, resilient, high-availability cluster is immense and is but one of many such services available in an easy-to-consume offering.

- Exposure and access to new technologies. It's probably fair to say that many developers would never experiment with a function-as-a-service (serverless) platform or a non-relational database if it were not offered in a form as simple to use as Amazon DynamoDB.

- Software-defined deployments. This point forms the crux of this article: The importance of software-defined deployments to the cloud revolution cannot be overstated.

Pareidolia

Seeing shapes in clouds isn't just a youthful pastime anymore. These days, API-driven cloud platforms give you the power to define the form and shape of your deployments in the cloud. All major cloud providers have command-line-based toolkits to script deployments in scripting languages like Bash or PowerShell. Although these languages and the products built with them are extremely powerful, they do leave room for improvement.

For example, when modifying previously defined deployments and infrastructure, you typically have to call different APIs and, hence, different command-line interface (CLI) commands than the ones that created them. For sufficiently complex deployments, it becomes increasingly difficult to maintain separate scripts (or, alternatively, a single monstrosity that accepts an ever-increasing number of flags and arguments) that handle every type of modification to a deployment, create new deployments, and destroy deployments that are no longer needed.

Further improvements in this space yielded a newer generation of tools that make use of declarative state machines to create (and further modify) deployments. In 2011, AWS introduced CloudFormation [4]. In its initial incarnation, CloudFormation allowed you to define a deployment through the use of JSON-based templates, which were then POSTed to an API endpoint.

Then, as now, services internal to AWS parse the template, both to evaluate logic embedded [5] in the templates and to figure out the order in which resources should be provisioned according to dependencies; provision services to match the template's specifications; handle success and failure scenarios; and maintain a state of the defined resources. In stark contrast to the aforementioned shell scripts of increasingly complex logic to handle create/modify/delete scenarios, the JSON (and subsequently YAML) templates of CloudFormation-defined deployments are static and declarative, allowing you to define your initial set of templates and provision your services.
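The dependency-ordering step can be pictured as a topological sort. The following is a minimal sketch of that idea only, not CloudFormation's actual implementation; the resource names and the provisioningOrder helper are hypothetical and chosen purely for illustration.

```typescript
// Hypothetical resource graph: each key is a resource and each value lists
// the resources it depends on. Names are illustrative, not template output.
type DependencyGraph = Record<string, string[]>;

// Returns the resources in an order in which every dependency
// appears before the resources that depend on it (depth-first sort).
function provisioningOrder(graph: DependencyGraph): string[] {
  const order: string[] = [];
  const state: Record<string, "visiting" | "done"> = {};

  const visit = (resource: string): void => {
    if (state[resource] === "done") return;
    if (state[resource] === "visiting") {
      throw new Error("Circular dependency involving " + resource);
    }
    const dependencies = graph[resource];
    if (dependencies === undefined) {
      throw new Error("Unknown resource " + resource);
    }
    state[resource] = "visiting";
    for (const dependency of dependencies) visit(dependency);
    state[resource] = "done";
    order.push(resource);
  };

  for (const resource of Object.keys(graph)) visit(resource);
  return order;
}

const exampleGraph: DependencyGraph = {
  NatGateway: ["PublicSubnet", "ElasticIp"],
  PublicSubnet: ["Vpc"],
  ElasticIp: [],
  Vpc: [],
};

// The VPC comes first and the NAT gateway last.
const exampleOrder = provisioningOrder(exampleGraph);
```

A circular reference (A depends on B, B depends on A) has no valid order, which is why the sketch throws instead of guessing.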

When it is time to update (or destroy) your services, you update your template to your new specifications and once again POST it to the API. The services behind the API figure out the delta between your specified template and the state currently known to the service of your existing resource set; from there, the service creates, modifies, and deletes services and resources as necessary to bring your deployment in line with the specifications of your template.
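To make the idea of a delta concrete, here is a deliberately simplified sketch that compares a known state with a desired template and sorts the differences into create, update, and remove buckets. The flat property model, the planChanges function, and the resource names are assumptions made for this example; the real service tracks far more detail.

```typescript
// Hypothetical, simplified model: each resource is a flat bag of
// properties keyed by a logical ID.
type Properties = Record<string, string | number | boolean>;
type ResourceSet = Record<string, Properties>;

interface ChangeSet {
  create: string[];
  update: string[];
  remove: string[];
}

// True when both property bags hold the same keys with the same values.
function sameProperties(a: Properties, b: Properties): boolean {
  const keysA = Object.keys(a);
  if (keysA.length !== Object.keys(b).length) return false;
  for (const key of keysA) {
    if (a[key] !== b[key]) return false;
  }
  return true;
}

// Compares the known state with the desired template.
function planChanges(known: ResourceSet, desired: ResourceSet): ChangeSet {
  const changes: ChangeSet = { create: [], update: [], remove: [] };
  for (const id of Object.keys(desired)) {
    const wanted = desired[id];
    const existing = known[id];
    if (wanted === undefined) continue;
    if (existing === undefined) {
      changes.create.push(id);
    } else if (!sameProperties(existing, wanted)) {
      changes.update.push(id);
    }
  }
  for (const id of Object.keys(known)) {
    if (desired[id] === undefined) changes.remove.push(id);
  }
  return changes;
}

const deployedState: ResourceSet = {
  Vpc: { cidr: "10.200.0.0/16" },
  WebSubnet: { cidrMask: 24 },
  OldSubnet: { cidrMask: 24 },
};

const templateState: ResourceSet = {
  Vpc: { cidr: "10.200.0.0/16" },
  WebSubnet: { cidrMask: 25 },
  DataSubnet: { cidrMask: 27 },
};

// DataSubnet is created, WebSubnet is updated, OldSubnet is removed,
// and the unchanged Vpc is left alone.
const plannedChanges = planChanges(deployedState, templateState);
```

The key property of this approach is that the author of the template never writes create, modify, or delete logic; only the desired end state is described.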

CloudFormation gave AWS users, and only AWS users, a powerful tool. In 2014, the arrival of Terraform [6] gave a wider audience of infrastructure API consumers a powerful new tool for their tool belts. Terraform brought a similar set of concepts: a declarative template definition language interpreted by a run time that understands the differences between the current and desired states and leverages an abstracted set of provider APIs to make the current state match the desired state. The benefits of Terraform are numerous, and although the extent of some of those benefits relative to other tools might be a matter of opinion or circumstance, I find these benefits almost universally agreed upon:

- Open source. It is available under the Mozilla Public License (MPL) v2.0 [7].

- Visibility into the deployment state. It exposes its state to inspection (CloudFormation does not expose its state in any way, although it recently began to offer drift detection [8]). The executable also provides a robust set of commands and tools to inspect and (if necessary) manipulate the state of a deployment directly.

- Flexible. Terraform offers a great deal of flexibility and power in its implementation (e.g., its null resources [9] and external data sources [10]), although, in my opinion, this comes with a somewhat steeper learning curve than something like CloudFormation. CloudFormation's somewhat analogous custom resources are relegated to running on the AWS platform.

- Portable. The run time is portable and basically can be run anywhere, assuming the executable has the ability to communicate with whatever APIs it's leveraging.

- Interoperable. It can be made to interoperate with any service that exposes an API that implements create, read, update, and delete (CRUD) operations (through custom plugin or "provider" development) [11].

Tool Fatigue

Is another tool really necessary in this space? Although I mostly use CloudFormation and Terraform in this article as points of comparison, the list of other tools that can provision infrastructure is long: Troposphere [12], Sceptre [13], Bash scripts, custom apps leveraging SDKs, Ansible [14], Selenium scripts automating the AWS console (OK, I've never done that, and it's probably a terrible idea – but it would work!), and the list goes on. Honestly, I blew off the AWS CDK [15] the first time I heard about it, because it seemed like yet another tool vying for my attention in an already crowded field that already has some very strong contenders. After some time, though, my curiosity won out over my skepticism, and I decided to take the CDK for a spin.

AWS CDK

Getting started with the CDK is pretty simple. In this article, I make a few assumptions:

- You have some familiarity with AWS – enough to install, set up, and understand how to use its CLI.

- You have used a tool like CloudFormation or Terraform (or maybe the SDK or CLI) before.

- You have some programming background and are comfortable with language tool chains (e.g., Node.js npm [16] and Yarn [17]). During the rest of this article, I use TypeScript [18] as the CDK language and leverage Node.js ecosystem utilities.

Assuming you have an up-to-date node and npm installed, installing the AWS CDK base libraries is as simple as

$ npm install -g aws-cdk

which installs the CDK Node.js libraries. With Node set up accordingly, it places the cdk executable in your $PATH, allowing you to run cdk commands from your command line. If you are curious to explore the multitude of subcommands and options now available, type cdk help. Alternatively, you can head over to the GitHub pages [19] set up by AWS for documentation.

The CDK makes starting a new project a cinch. To begin, I'll create a new project called hello-cdk in three simple steps from the terminal:

$ mkdir hello-cdk
$ cd hello-cdk
$ cdk init app --language=typescript

These commands create a new local directory named hello-cdk, descend into that directory, and then ask the CDK to create a new application there. The CDK looks at the name of the directory from which the init command is run and uses that as the name of the app before setting up the base files and directories you need to get started and initializing a Git repo in the directory. Listing 1 shows what resides in your hello-cdk directory after the init.

Listing 1: Project Root Directory

drwxr-xr-x    - bradmatic  8 Jan 23:52 .
drwxr-xr-x    - bradmatic  8 Jan 23:52 |---- bin
.rw-r--r--  194 bradmatic  8 Jan 23:52 |    |---- hello-cdk.ts
.rw-r--r--   37 bradmatic  8 Jan 23:52 |---- cdk.json
drwxr-xr-x    - bradmatic  8 Jan 23:52 |---- lib
.rw-r--r--  246 bradmatic  8 Jan 23:52 |    |---- hello-cdk-stack.ts
drwxr-xr-x    - bradmatic  8 Jan 23:52 |---- node_modules
drwxr-xr-x    - bradmatic  8 Jan 23:52 |    |---- @aws-cdk
drwxr-xr-x    - bradmatic  8 Jan 23:52 |    |---- @types
...                                    |    |---- ...
drwxr-xr-x    - bradmatic  8 Jan 23:52 |    |---- zip-stream
.rw-r--r--  79k bradmatic  8 Jan 23:52 |---- package-lock.json
.rw-r--r--  345 bradmatic  8 Jan 23:52 |---- package.json
.rw-r--r--  320 bradmatic 20 Dec 2018  |---- README.md
.rw-r--r--  558 bradmatic 20 Dec 2018  |---- tsconfig.json

With many subdirectories under node_modules, I truncated the output. Primarily, I'll be focusing on the contents of the bin and lib directories.

What's in the Box?

The CDK just created a ton of files, so I'll unpack things a bit. First, the node_modules folder contains dependent libraries, plain and simple. As well, you will see quite a few config files. For right now, focus on the bin and lib directories and their contents. Listing 2 shows the content of bin/hello-cdk.ts.

Listing 2: bin/hello-cdk.ts

#!/usr/bin/env node

import cdk = require('@aws-cdk/cdk');

import { HelloCdkStack } from '../lib/hello-cdk-stack';

const app = new cdk.App();

new HelloCdkStack(app, 'HelloCdkStack');

app.run();

The import lines pull in the base CDK library and a stack-specific file from lib. Next, you define a new CDK app and create an instance of HelloCdkStack.

Listing 3 shows lib/hello-cdk-stack.ts, which has a clearly defined point to hook into the framework and define your resources. Although you could just throw code into this file and define resources, it's worthwhile to sit back, pause, and think about a few things before you do so – namely, reusability and modularity. I'll address these concerns individually.

Listing 3: lib/hello-cdk-stack.ts

import cdk = require('@aws-cdk/cdk');

export class HelloCdkStack extends cdk.Stack {
  constructor(parent: cdk.App, name: string, props?: cdk.StackProps) {
    super(parent, name, props);

    // The code that defines your stack goes here
  }
}

Reusability

Imagine that you are tasked with defining the entire stack for the application, from the base network infrastructure up, with whatever method you use to define your deployment (in this case, the CDK). In the case of AWS, that means you will very likely start with a virtual private cloud (VPC), subnets, security groups, route tables, route table associations, network address translation (NAT) gateway(s), and so on – just for networking.

A somewhat common pattern in most organizations when starting from scratch is to reuse a single VPC to host multiple applications (oftentimes grouped by type of environment, e.g., development, QA, test, production, etc.). In light of this, bundling the base networking infrastructure together with the application stack would greatly limit reuse and would make it impossible to deploy a new instance of the application – perhaps for development or testing purposes – apart from its backing resources. This arrangement also has cost implications, because resources such as NAT gateways (and perhaps other necessary infrastructure elements) incur cost the moment they are provisioned.

Your first takeaway to keep in mind as you structure your code, then, is that you'd like somehow to keep shared resources separate from the resources that are specific to your application so that those shared resources aren't coupled to instances of your application stack.

Modularity

Having just talked about reusability, it's hard to shake the notion that this sounds a lot like reusability by another name: modularity. Although they are related concepts, they are not the same. Essentially, reuse is an outcome of modular code. As you structure code, focus on modularity and let the reuse flow naturally from it. I wanted to introduce the concept formally, though, because I'll be teasing apart the VPC and networking aspects of the stack from the application-specific resources by creating modules.

The other critical piece in designing a good, robust module is ensuring consistent and clean interfaces that hide (or abstract, if you prefer the academic term) the inner workings of the module that aren't necessary for the consumer of the module. The primary focus will be the construction of two modules: one for shared services (i.e., the networking elements), which I'll cover in this article, and one for a web application running behind a load balancer, to be covered in a future article.

Next, I'll look at how to orchestrate these individual modules to produce all of the infrastructure needed for the application, and finally, I'll look at some lifecycle considerations, while considering the tightly knit relationship between CloudFormation, the CDK code, and CDK resources.

VPC from the Ground Up, CDK Style

To keep your code not only logically but also physically modular, create a new file in the lib directory that will contain the code for the networking resources:

$ touch lib/hello-cdk-base.ts

Listing 4 contains the code that should go in this file [20]. When the time comes to build, you'll need the @aws-cdk/aws-ec2 npm module installed, so you should take care of that now:

$ npm install --save @aws-cdk/aws-ec2

Listing 4: lib/hello-cdk-base.ts

01 import cdk = require('@aws-cdk/cdk');
02 import ec2 = require('@aws-cdk/aws-ec2');
03
04 export class HelloCdkBase extends cdk.Stack {
05   public readonly vpc: ec2.VpcNetworkRefProps;
06
07   constructor(parent: cdk.App, name: string, props?: cdk.StackProps, env?: string) {
08     super(parent, name, props);
09
10     const maxZones = this.getContext('max_azs')[`${env}`];
11
12     const helloCdkVpc = new ec2.VpcNetwork(this, 'VPC', {
13       cidr: this.getContext('cidr_by_env')[`${env}`],
14       natGateways: maxZones,
15       enableDnsHostnames: true,
16       enableDnsSupport: true,
17       maxAZs: maxZones,
18       natGatewayPlacement: { subnetName: 'Web' },
19       subnetConfiguration: [
20         {
21           cidrMask: 24,
22           name: 'Web',
23           subnetType: ec2.SubnetType.Public,
24         },
25         {
26           cidrMask: 24,
27           name: 'App',
28           subnetType: ec2.SubnetType.Private,
29         },
30         {
31           cidrMask: 27,
32           name: 'Data',
33           subnetType: ec2.SubnetType.Isolated,
34         },
35       ],
36       tags: {
37         'stack': 'HelloCdkCommon',
38         'env': `${env}`,
39         'costCenter': 'Shared',
40         'deleteBy': 'NEVER',
41       },
42     });
43
44     this.vpc = helloCdkVpc.export();
45   }
46 }

Although I won't cover the complexities of VPCs, subnets, and all of the network elements that accompany them in depth, I will mention them for the sake of discussing how they appear in the CDK code.

Subnets, AZs, and NAT Gateways

A look through the VPC code in Listing 4 shows a build-out of a reference three-tier networking architecture with dedicated subnets for a front end, where resources like load balancers, proxies, or ingress controllers live. Accordingly, these subnets are designated Web (line 22). The ec2.SubnetType.Public designation used for the subnetType parameter for these particular subnets tells the CDK that this subnet will receive public traffic.

The natGateways parameter (line 14) tells the VpcNetwork constructor function how many AWS NAT Gateways you'd like to provision. By changing the number of availability zones (AZs) that are being used according to environment type (e.g., the "dev" environment will only use two AZs, compared with three for the "qa" and "prod" environments; see the "How Context Works in the AWS CDK" box for more details on specifying context), you're limiting cost in lower environments by restricting the number of AZs into which to deploy resources. Accordingly, the maxAZs parameter (line 17) is what tells the constructor function the number of AZs you want to use; omitting this parameter results in the default behavior of utilizing all AZs available for a given region.
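The effect of the AZ count on the size of the deployment can be sketched with a little counting logic. The planNetwork helper and the label format below are hypothetical and not part of the CDK; the sketch assumes one subnet per configured subnet group per AZ and, as in Listing 4, the same value for natGateways and maxAZs.

```typescript
// Hypothetical helper, not a CDK API: it only mirrors the counting logic
// (one subnet per configured group per availability zone).
interface NetworkPlan {
  subnetLabels: string[];
  natGatewayCount: number;
}

function planNetwork(groupNames: string[], maxAzs: number): NetworkPlan {
  if (maxAzs < 1 || Math.floor(maxAzs) !== maxAzs) {
    throw new Error("maxAzs must be a positive integer");
  }
  const subnetLabels: string[] = [];
  for (const group of groupNames) {
    for (let zone = 1; zone <= maxAzs; zone++) {
      subnetLabels.push(group + "-az" + zone);
    }
  }
  // Listing 4 passes the same number to natGateways and maxAZs.
  return { subnetLabels: subnetLabels, natGatewayCount: maxAzs };
}

// Two AZs in dev: 6 subnets and 2 NAT gateways.
const devPlan = planNetwork(["Web", "App", "Data"], 2);
// Three AZs in qa: 9 subnets and 3 NAT gateways.
const qaPlan = planNetwork(["Web", "App", "Data"], 3);
```

Because NAT gateways are billed from the moment they exist, dropping from three AZs to two in a lower environment removes one gateway's worth of cost in this model.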

Listing 5: cdk.json

{
  "app": "node bin/hello-cdk.js",
  "context": {
    "cidr_by_env": {
      "dev": "10.100.0.0/16",
      "qa": "10.200.0.0/16",
      "prod": "10.250.0.0/16"
    },
    "max_azs": {
      "dev": 2,
      "qa": 3,
      "prod": 3
    }
  }
}

From the code in the subnetConfiguration block, you can see three types of subnets: Public, Private, and Isolated. As mentioned, the Web subnets are of the Public type. The Web and App subnets are provisioned with /24 netmasks, meaning you have 251 usable IP addresses (256 addresses total minus five AWS-reserved addresses; see the "AWS Networking Primer" box for more details on networking within AWS). For the Data subnets (e.g., where databases and other data-related services might reside), smaller subnets are used: a /27, yielding 27 usable addresses per subnet (32 theoretical available addresses minus five AWS-reserved addresses).
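The address arithmetic is easy to check in code. This small sketch assumes only what the paragraph states (five reserved addresses per subnet) plus the AWS rule that subnet netmasks fall between /16 and /28; the usableAddresses function name is made up for the example.

```typescript
// AWS reserves five addresses in every subnet.
const AWS_RESERVED_ADDRESSES = 5;

// Returns the number of addresses left for your own resources
// in a subnet with the given netmask length.
function usableAddresses(cidrMask: number): number {
  if (Math.floor(cidrMask) !== cidrMask || cidrMask < 16 || cidrMask > 28) {
    throw new Error("AWS subnets must use a netmask between /16 and /28");
  }
  const totalAddresses = Math.pow(2, 32 - cidrMask);
  return totalAddresses - AWS_RESERVED_ADDRESSES;
}

const webAddresses = usableAddresses(24);  // 256 - 5 = 251
const dataAddresses = usableAddresses(27); // 32 - 5 = 27
```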

Related to subnets and subnet types, the natGatewayPlacement parameter (line 18) tells the CDK where you want it to place your NAT gateways when you create your VPC. The DNS parameters (lines 15 and 16) give additional flexibility for the use of custom internal DNS domains within your VPC. The tags parameter (lines 36-41), while providing conventional AWS tag sets on all the networking resources (which are abundantly useful in and of themselves within the AWS ecosystem for a multitude of reasons), also takes on additional functionality within the context of the CDK, which I will cover in future discussions.

CDK Magic

The public, read-only vpc attribute (lines 4 and 5) is defined within the HelloCdkBase class itself. This attribute provides an interface for your class to export its VPC definition. Earlier, when talking of reuse, I mentioned the scenario in which additional application development teams might leverage your shared networking resources when defining their own applications with the CDK. This attribute provides the mechanism to make that functionality possible. At the end of the class, the VPC created within the class is made available outside of the class (through the attribute) by virtue of setting the value of the attribute to an export of helloCdkVpc (line 44).
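Stripped of the CDK specifics, the pattern is a class that hides its internals and exposes a single read-only reference for others to consume. The sketch below uses plain TypeScript with hypothetical names (VpcRef, SharedNetwork, WebApplication) standing in for the real CDK types, which carry considerably more information.

```typescript
// Hypothetical stand-in for the exported VPC reference.
interface VpcRef {
  readonly vpcId: string;
  readonly availabilityZones: string[];
}

// Owns the shared networking and exposes only a read-only reference.
class SharedNetwork {
  public readonly vpc: VpcRef;

  constructor(envName: string, zones: string[]) {
    // Subnets, routes, and NAT gateways would be built here and stay
    // hidden; only the reference below leaves the class.
    this.vpc = { vpcId: "vpc-" + envName, availabilityZones: zones.slice() };
  }
}

// An application module depends on the reference, not on how it was built.
class WebApplication {
  public readonly description: string;

  constructor(appName: string, network: VpcRef) {
    this.description = appName + " runs in " + network.vpcId +
      " across " + network.availabilityZones.length + " zones";
  }
}

const sharedQa = new SharedNetwork("qa", ["a", "b", "c"]);

// Two independent applications reuse the same shared network.
const storefront = new WebApplication("storefront", sharedQa.vpc);
const billing = new WebApplication("billing", sharedQa.vpc);
```

Because consumers see only the reference, the networking module can change its internals without touching any application that builds on it.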

Build It!

I'm as eager as you are to watch CDK do its magic, but first you need to update bin/hello-cdk.ts (Listing 2). Update yours such that it looks like the code in Listing 6.

Listing 6: New bin/hello-cdk.ts

#!/usr/bin/env node

import cdk = require('@aws-cdk/cdk');

import { HelloCdkBase } from '../lib/hello-cdk-base';

const app = new cdk.App();

const stackName = 'HelloCdkBase-' + app.getContext('ENV')

new HelloCdkBase(app, stackName, {}, app.getContext('ENV'));

app.run();

Here, you set your main CDK app to import only from lib/hello-cdk-base.ts, which is the file where your VPC code resides. In a future installment, I'll begin to build out the resources necessary for your application in the lib/hello-cdk-stack.ts file, at which point you'll re-include it in bin/hello-cdk.ts. You'll also see here the use of a context variable, ENV (again, see the "How Context Works in the AWS CDK" box regarding dynamic context), to create dynamic names for your apps.
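One thing to watch with this approach: if the ENV value is missing, plain string concatenation produces a name ending in "undefined". The following sketch shows one way such lookups could be guarded. It is plain TypeScript rather than CDK code; resolveEnvironment is a hypothetical helper, and the context object reproduces only the dev and qa entries from Listing 5.

```typescript
interface ProjectContext {
  cidr_by_env: Record<string, string>;
  max_azs: Record<string, number>;
}

interface ResolvedEnvironment {
  stackName: string;
  cidr: string;
  maxAzs: number;
}

// Mirrors the dev and qa entries of the context block in cdk.json.
const projectContext: ProjectContext = {
  cidr_by_env: { dev: "10.100.0.0/16", qa: "10.200.0.0/16" },
  max_azs: { dev: 2, qa: 3 },
};

// Resolves every per-environment setting in one place and fails early
// when the environment is missing or unknown.
function resolveEnvironment(
  ctx: ProjectContext,
  env: string | undefined
): ResolvedEnvironment {
  if (env === undefined || env === "") {
    throw new Error("ENV context value is required, e.g., -c ENV=qa");
  }
  const cidr = ctx.cidr_by_env[env];
  const maxAzs = ctx.max_azs[env];
  if (cidr === undefined || maxAzs === undefined) {
    throw new Error("No context defined for environment " + env);
  }
  return { stackName: "HelloCdkBase-" + env, cidr: cidr, maxAzs: maxAzs };
}

const qaSettings = resolveEnvironment(projectContext, "qa");
```

Failing before any stack is defined keeps a typo in the environment name from turning into a half-configured deployment.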

Generating CloudFormation with the CDK

Although this next step won't actually build resources in your AWS account, if you've ever spent countless hours slogging it out creating CloudFormation templates, you'll see it is incredibly cool. From your CLI, run the commands:

$ npm run build
$ mkdir -p "./cft/qa"
$ cdk synth -c ENV=qa "HelloCdkBase-qa" >./cft/qa/vpc.yml

You should now see a cft directory with a qa subdirectory in the root of your project. Within the qa subdirectory, you'll find a file called vpc.yml, which contains CloudFormation code that directly correlates to your TypeScript CDK code. Spend a few minutes looking back and forth between the YAML file and the TypeScript file: Which one would you rather spend a few minutes (or hours, depending on your choice) editing? My choice is definitely TypeScript.

Just Build It, Already

From your (AWS authenticated) CLI in the root of the project, run:

$ npm run build
$ cdk deploy -c ENV=qa

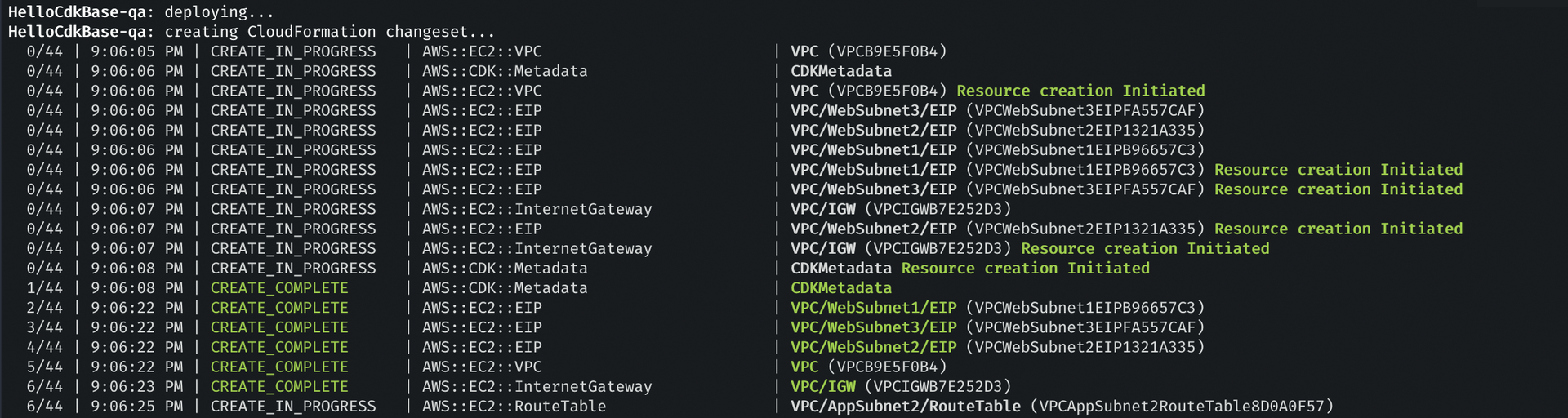

You will get some absolutely beautiful log output (a small sample of my own output from a run is included in Figure 1), and within about three minutes, you'll have a VPC, subnets, routes, route tables, route table associations, and NAT gateways – all the building blocks of an AWS networking setup – neatly provisioned in your account.

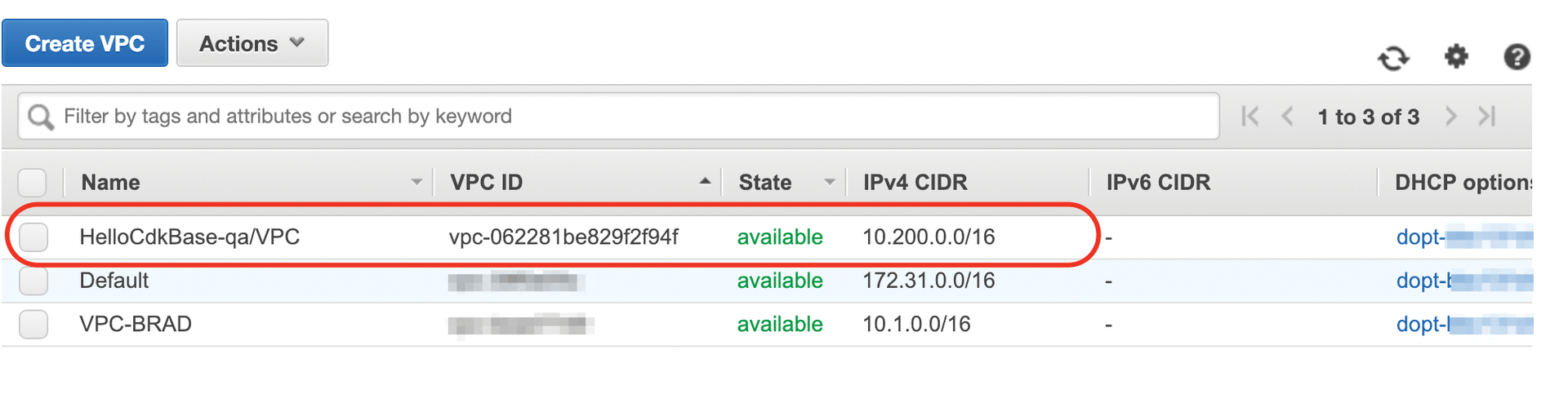

Figure 2 shows the VPC section of my AWS console, which shows the VPC created by the CDK. You'll also notice that it's appropriately assigned a 10.200.0.0/16 base CIDR, as defined for any qa environment in the cdk.json file (Listing 5).

Cleaning Up After Yourself

You now have code to provision a well-designed VPC, so what do you do now? Tear it down! Although it might seem counterintuitive to destroy these resources, your application CDK code is not quite ready yet. (Come back for a future installment, where I show you how to build it out.) Until that code is ready, you can reap the cost-savings benefits of your infrastructure-as-code solution by tearing it down. From your CLI, the command

$ cdk destroy -c ENV=qa

should take care of destroying these resources until you're ready to come back and add on!

Conclusion

The AWS CDK provides an extremely flexible and powerful tool to fuel infrastructure-as-code solutions in the world of cloud-powered, API-driven infrastructure hosting. Even a jaded cloud architect can appreciate the power a tool like this has to build bridges between development and cloud operations teams, where previous tools seem to have been less successful. Even better, it provides backward compatibility with CloudFormation, if needed. With support for C#, Java, and TypeScript, it offers programmers of different backgrounds the chance to leverage its power. I highly suggest giving the AWS CDK a try for your next project.