Cloud-native storage for Kubernetes with Rook

Memory

A witticism making the rounds at IT conferences these days says that Internet providers operate servers to make their customers serverless. On the one hand, customers increasingly strive to move their setups into the cloud and avoid dealing with the operation of classic IT infrastructure. On the other hand, no software can run on air and goodwill.

Today's IT infrastructure providers, therefore, face challenges that hardly differ from those of earlier years – specifically, persistent data. Clearly, modern architectural approaches that comprise microservices are increasingly based on dynamic handling of data, fragmentation, cluster mechanisms, and, last but not least, inherent redundancy.

None of this changes the fact that in every setup, a point comes at which data needs to be stored safely somewhere, so that the failure of a single server does not cause container and customer data to disappear into a black hole.

Cloud environments such as OpenStack [1] take a classic approach to the problem by providing components that act as intermediaries between the physical storage on one side and the virtual environments on the other. The virtual environments are typical virtual machines (VMs), so persistent storage can be connected without problem.

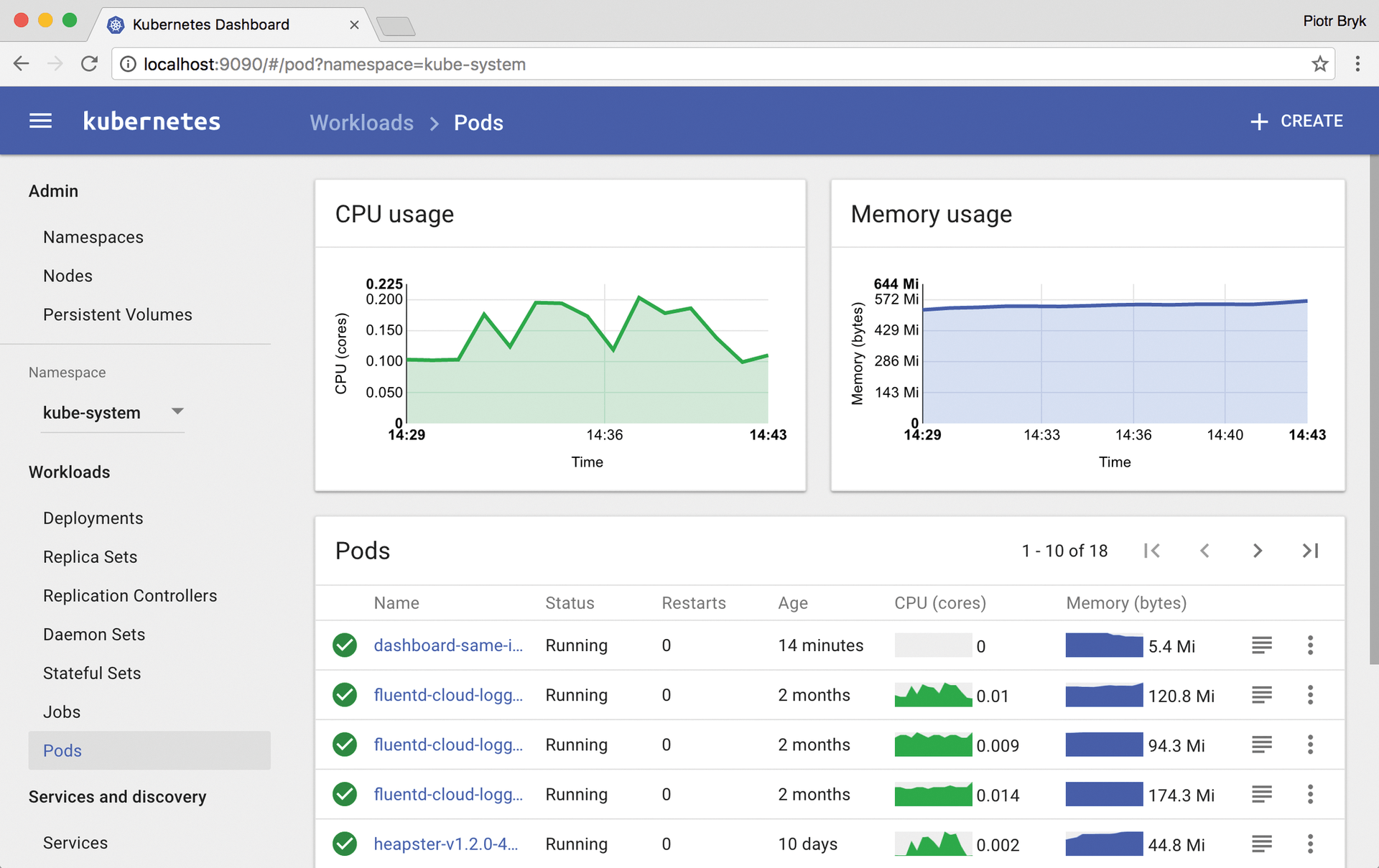

Containers, on the other hand, present a different battle plan: Kubernetes [2] (Figure 1), Docker [3], and the many alternatives somehow all have a solution for persistent storage of data, but none of them really fits in with the concept of cloud native.

Rook [4] promises no less than cloud-native storage for persistent data in the context of Kubernetes. The idea is simple: Combine Rook with Ceph [5] (the currently recommended object store). As soon as Rook is running in Kubernetes, a complete virtual Ceph cluster is available. The Ceph volumes can then be connected by Kubernetes, providing the cluster with data silos that are then managed in the usual way.

Rook integrates perfectly into Kubernetes so that it can be controlled centrally by an API. The customer then has a new type of volume available in their Kubernetes cluster, which can be used like any other type of volume in Kubernetes.

The storage created with Rook is not just glommed on, it is integrated into all Kubernetes processes, as is the case with classic volumes and containers. Rook thus promises admins a quick and simple solution for a vitally important problem – reason enough to take a closer look.

Cloud Native

Before taking a look at Rook, though, a short excursion into cloud marketing is essential. What cloud-native storage actually means is only intelligible to those who deal regularly with the subject, and they probably already have Rook on their radar. Remember that cloud-native applications are generally a new type of application designed to run in the cloud from the outset, and the cloud makes different demands on apps than do conventional setups. Cloud installations assume, for example, that the applications take care of their own redundancy, instead of relying on auxiliary constructs such as cluster managers.

The "cloud native" concept implies a microarchitecture. Whereas formerly, a huge, monolithic program was developed, today a number of small, self-sufficient, but very fast components is preferable, ultimately resulting in a harmonious overall response. If an application meets these requirements, it is generally considered to be cloud native.

Containers are an extremely popular tool for building such programs. Because they do without the huge overhead of VMs, they are particularly light-footed and resource-saving. Moreover, tools like Kubernetes can orchestrate containers very well – another factor that plays a major role in cloud-native applications.

However, cloud orchestrators like Kubernetes are very reluctant to deal with the topic of persistent storage. From the container environment's point of view, the facts are clear: If the application needs persistent storage, it should take care of this itself, just as MySQL and Galera do as a team, wherein the entire dataset always exists in multiple instances. If one set of data fails, a new one can be ramped up immediately; after a short while, the data is again synchronized with its cluster partners.

If you have enjoyed the experience of dealing with Galera in a full-blown production setup, you know it is not so easy. In fact it is very complicated – on the administrative as well as the development level. Therefore, developers of cloud-native apps often refuse to consider the subject at all and prefer to point their fingers at the container environment instead.

As the admin, though, you are left out in the cold if neither the container environment nor the application addresses the topic, and you are left to think about how to make persistent storage possible. In recent years, some hacks have been created that add replication solutions to Kubernetes and somehow enable redundant persistent storage. That's not the way to go, though.

The Rook Remedy

The Rook developers noticed that the software needed to enable persistent storage in cloud environments already exists in the form of Ceph. Red Hat had good reason to acquire Inktank and involve one of the Ceph founders, Sage Weil, years ago. It is no accident that Ceph has become the de facto standard when software-defined storage is used to offer scalable storage in clouds nor that some of the world's largest clouds use Ceph to provide persistent storage.

Although Ceph requires block storage as a kind of data silo, all the intelligence that takes care of scalability and internal redundancy is in software components. Ceph doesn't care if what it gets as persistent storage is a real hard drive or a virtual volume attached to a container. As long as Ceph can store its user data somewhere, the world is fine from its point of view.

The Missing Link

Although it might appear that Ceph has already solved the classic problems of redundant persistent storage for the developers of cloud-native applications, there has been no way until now to manage Ceph effectively in container environments.

From the user's point of view, the thought of manually creating containers in which a Ceph cluster runs is not very attractive. These containers would not be integrated into the processes of the container environment and could not be controlled through a central API. Moreover, they would massively increase the complexity of the setup on the administration side.

From the provider's point of view, things are hardly any better. Although the provider could operate a Ceph cluster with the appropriate configuration in the background, which customers could then use, some usable process for container and storage integration would have to be considered. Although Docker has a Ceph volume driver, it's neither very well maintained nor very functional.

Rook is the link between containers and orchestration on the one hand and cloud-native applications on the other. How does this work in practice?

Simpler Start

The basic requirement before you can try Rook is a running Kubernetes cluster. Rook does not place particularly high demands on the cluster. The configuration only needs to support the ability to create local volumes on the individual cluster nodes with the existing Kubernetes volume manager. If this is not the case on all machines, Rook's pod definitions let you specify explicitly which machines of the solution are allowed to take storage and which are not.

To make it as easy as possible, the Rook developers have come up with some ideas. Rook itself comes in the form of Kubernetes pods. You can find example files on GitHub [6] that start these pods. The operator namespace contains all the components required for Rook to control Ceph. The cluster namespace starts the pods that run the Ceph components themselves.

Remember that for a Ceph cluster to work, it needs at least the monitoring servers (MONs) and its data silos, the object storage daemons (OSDs). In Ceph, the monitoring servers take care of both enforcing a quorum and ensuring that clients know how to reach the cluster by maintaining two central lists: The MON map lists all existing monitoring servers, and the OSD map lists the available storage devices.
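The quorum requirement can be illustrated with a few lines of Python. This is only a minimal sketch, assuming a plain strict-majority rule among the MONs; the function names are illustrative and are not part of Ceph or Rook.

```python
def quorum_size(mon_count: int) -> int:
    """Smallest number of MONs that forms a strict majority."""
    if mon_count < 1:
        raise ValueError("a cluster needs at least one MON")
    return mon_count // 2 + 1


def tolerated_failures(mon_count: int) -> int:
    """How many MONs may fail while a majority still remains."""
    return mon_count - quorum_size(mon_count)


# Print MON count, required majority, and tolerated failures.
for n in (1, 3, 4, 5):
    print(n, quorum_size(n), tolerated_failures(n))
```

Under this rule, three MONs survive the loss of one, whereas a fourth MON adds nothing in terms of tolerated failures, which is why odd MON counts are the usual choice.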

However, the MONs do not act as proxy servers. Clients always need to talk to a MON when they first connect to a Ceph cluster, but as soon as they have a local copy of the MON map and the OSD map, they talk directly to the OSDs and also to other MON servers.

Ceph, as controlled by Rook, makes no exceptions to these rules. Accordingly, the cluster namespace from the Rook example also starts corresponding pods that act as MONs and OSDs. If you run the

kubectl get pods -n rook

command after starting the namespaces, you can see this immediately. At least three pods will be running with MON servers, as well as various pods with OSDs. Additionally, the rook-api pod, which is of fundamental importance for Rook itself, handles communication with the other Kubernetes APIs.

At the end of the day, a new volume type is available in Kubernetes after the Rook rollout. The volume points to the different Ceph front ends and can be used by users in their pod definitions like any other volume type.

Complicated Technology

Rook does far more work in the background than you might think. A good example of this is integration into the Kubernetes Volumes system. Ceph running in Kubernetes is great but useless if the other pods can't use the volumes created there, so the Rook developers tackled the problem and wrote their own volume driver for use on the target systems. The driver complies with the Kubernetes FlexVolume guidelines.

Additionally, a Rook agent runs on every kubelet node and handles communication with the Ceph cluster. If a RADOS Block Device (RBD) originating from Ceph needs to be connected to a pod on a target system, the agent ensures that the volume is also available to the target container by calling the appropriate commands on that system.

The Full Monty

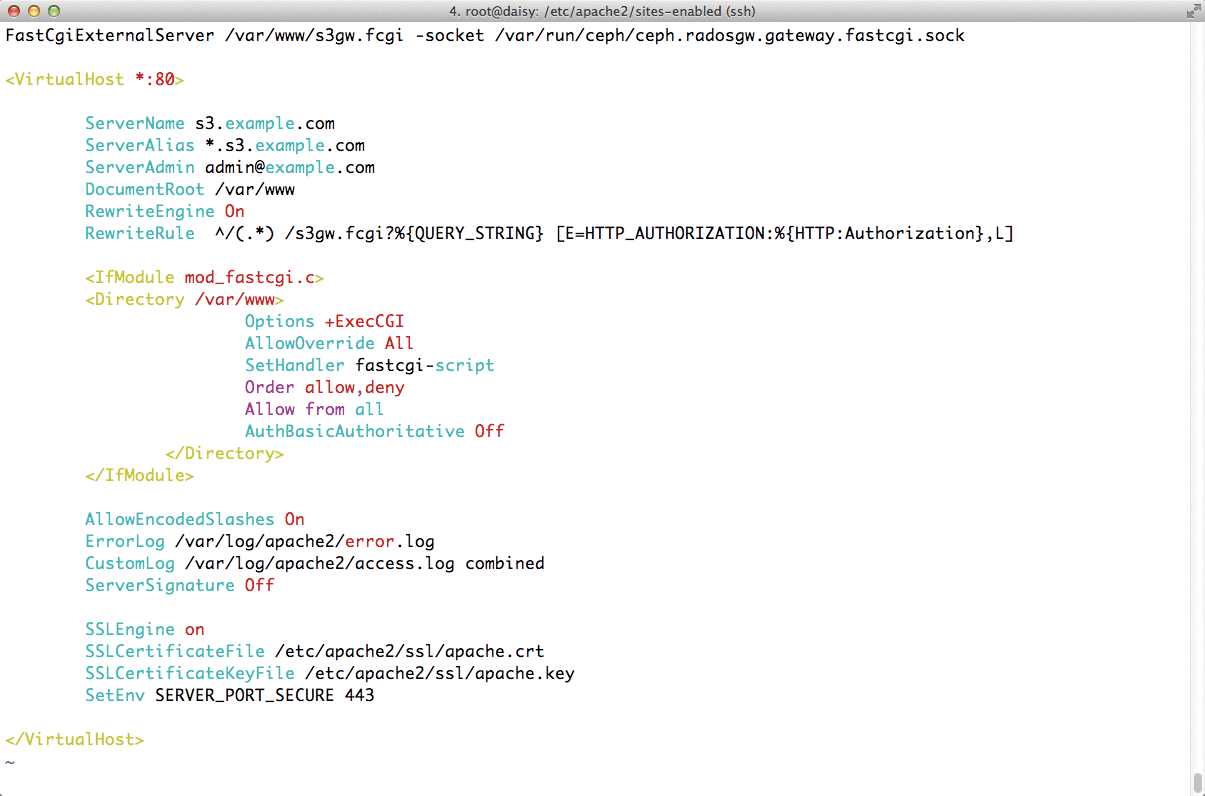

Ceph currently supports three types of access. The most common variant is to expose Ceph block devices, which can then be integrated into the local system by the rbd kernel module. Also, the Ceph Object Gateway or RADOS Gateway (Figure 2) enables an interface to Ceph on the basis of RESTful Swift and S3 protocols. For some months now, CephFS has finally been approved for production; that is, a front end that offers a distributed, POSIX-compatible filesystem with Ceph as its back-end storage.

From an admin point of view, it would probably already have been very useful if Rook were only able to use one of the three front ends adequately: the one for block devices. However, the Rook developers did not want to skimp; instead, they have gone whole hog and integrated support into their project for all three front ends.

If a container wants to use persistent storage from Ceph, you can either create a real Docker volume using a volume directive, organize access data for the RADOS Gateway for RESTful access, or integrate CephFS locally. The functional range of Rook is quite impressive.

Control by Kubernetes

Rook describes itself as cloud-native storage mainly because it comes with the API mentioned earlier – an API that advertises itself to the running containers as a Kubernetes service. In contrast to handmade setups, wherein the admin works around Kubernetes and pushes Ceph to containers, Rook provides the containers with all of Kubernetes' features in terms of volumes. For example, if a container requests a block device, it looks like a normal volume in the container but points to Ceph in the background.
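What "looks like a normal volume" means can be sketched with a standard Kubernetes PersistentVolumeClaim. The following Python snippet merely builds such a manifest as JSON; it assumes that a storage class backed by Rook already exists in the cluster. The storage class name `rook-block`, the claim name, and the helper function are hypothetical and chosen for illustration only.

```python
import json


def block_volume_claim(name: str, size: str, storage_class: str) -> dict:
    """Build a standard Kubernetes PersistentVolumeClaim manifest."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # Hypothetical storage class; the real name depends on your setup.
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


claim = block_volume_claim("mysql-data", "20Gi", "rook-block")
print(json.dumps(claim, indent=2))
```

Nothing in the claim refers to Ceph directly: The pod only sees a claim and a storage class, which is exactly the point of the integration. The printed JSON could be fed to `kubectl apply -f -`.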

Rook changes the configuration of the running Ceph cluster automatically, if necessary, without the admin or a running container having to do anything. Storing a specific configuration is also directly possible through the Rook API.

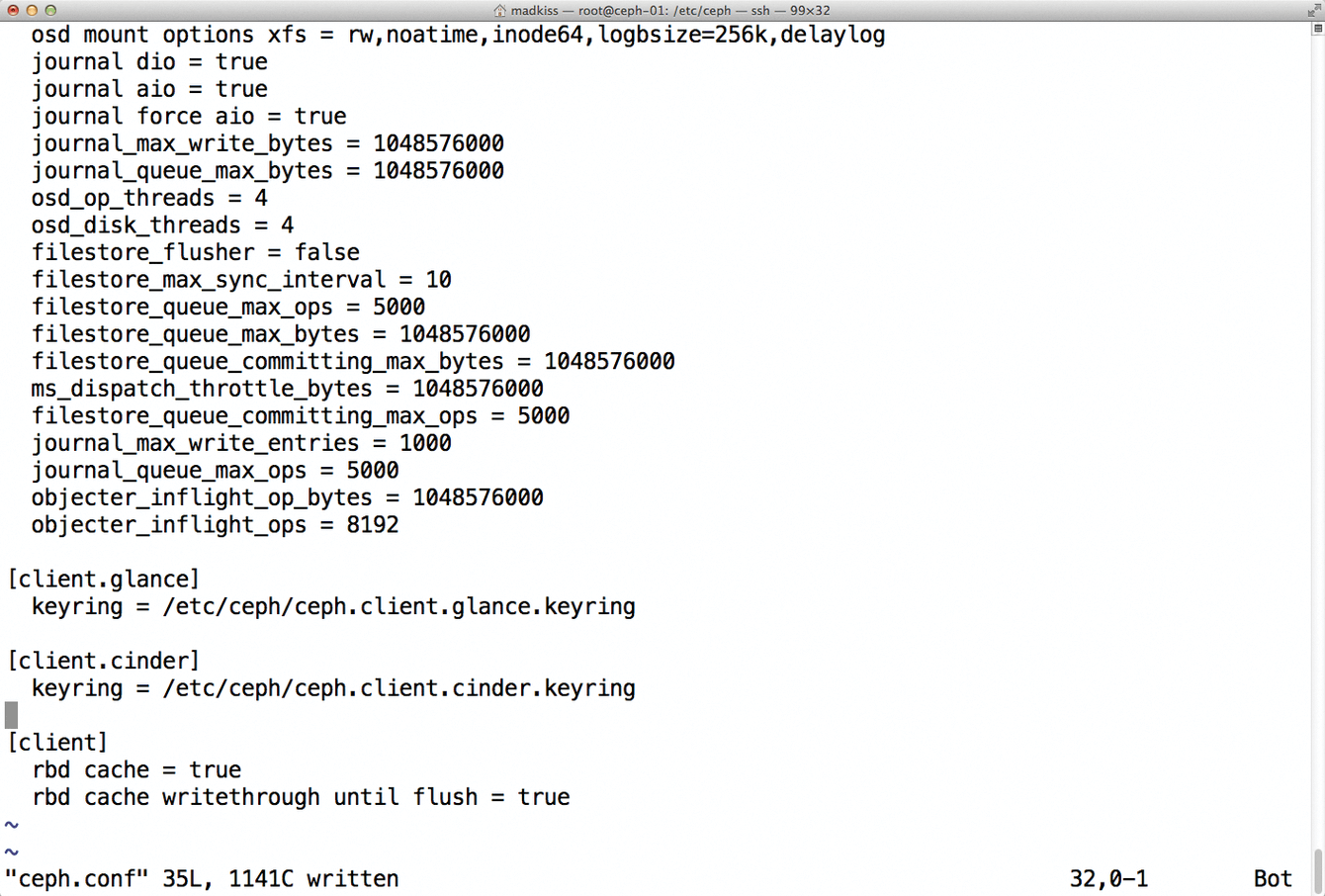

This capability has advantages and disadvantages. The developers expressly point out that one of their goals was to keep the administrator as far removed from running the Ceph cluster as possible. In fact, Ceph offers a large number of configuration options that Rook simply does not map (Figure 3), so they cannot be controlled with the Rook API.

Changing the Controlled Replication Under Scalable Hashing (CRUSH) map is a good example. CRUSH is the algorithm that Ceph uses to distribute data from clients to existing OSDs. Like the MON map and the OSD map, it is an internal database that the admin can modify, which is useful if you have disks of different sizes in your setup or if you want to distribute data across multiple fire zones in a cluster. Rook, however, does not map the manipulation of the CRUSH map, so corresponding API calls are missing.
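The idea of computing data placement from a hash instead of looking it up in a central table can be sketched in Python. Note that this is not CRUSH: The sketch swaps in weighted rendezvous hashing as a much simpler stand-in and ignores hierarchies such as hosts, racks, or fire zones. All names, OSD IDs, and weights are made up for illustration.

```python
import hashlib
import math
from typing import Dict, List


def _unit_hash(object_name: str, osd_id: str) -> float:
    """Map an (object, OSD) pair deterministically to a float in (0, 1)."""
    digest = hashlib.sha256(f"{object_name}:{osd_id}".encode()).digest()
    value = int.from_bytes(digest[:8], "big") >> 12  # keep 52 bits
    return (value + 0.5) / 2**52


def place(object_name: str, osd_weights: Dict[str, float],
          replicas: int = 3) -> List[str]:
    """Pick distinct OSDs for an object; weights must be positive."""
    if replicas > len(osd_weights):
        raise ValueError("not enough OSDs for the requested replica count")
    # A larger weight yields a larger score, so bigger disks win more often.
    scores = {
        osd: -weight / math.log(_unit_hash(object_name, osd))
        for osd, weight in osd_weights.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:replicas]


# Hypothetical cluster: osd.2 is twice as large as the others.
osds = {"osd.0": 1.0, "osd.1": 1.0, "osd.2": 2.0, "osd.3": 1.0}
print(place("volume-42/chunk-7", osds))
```

Every client that knows the same list of OSDs and weights computes the same result on its own, which mirrors why Ceph clients can talk to the OSDs directly. The weights play the role that different disk sizes play in a CRUSH map.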

In the background, Rook automatically ensures that the users notice any problems as little as possible. For example, if a node on which OSD pods are running fails, Rook ensures that a corresponding OSD pod is started on another cluster node after a wait time configured in Ceph. Rook uses Ceph's self-healing capabilities: If an OSD fails, meaning that the required number of replicas no longer exists for all binary objects in storage, Ceph automatically copies the affected objects to other OSDs. The customer's application does not notice this process; it just keeps on accessing its volume as usual.
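The self-healing step can be modeled in a few lines of Python. This is a toy model under strong assumptions: It tracks individual objects rather than Ceph's placement groups, and it picks the least-loaded surviving OSD greedily instead of recomputing placement with CRUSH. The function and all names are hypothetical.

```python
from typing import Dict, List, Set


def heal(placement: Dict[str, Set[str]], live_osds: Set[str],
         replicas: int) -> Dict[str, List[str]]:
    """Restore the replica count after OSD failures.

    Mutates `placement` and returns the new copies made per object.
    """
    # Count how many objects each surviving OSD already holds.
    load = {osd: 0 for osd in live_osds}
    for holders in placement.values():
        for osd in holders & live_osds:
            load[osd] += 1

    copies: Dict[str, List[str]] = {}
    for obj, holders in placement.items():
        holders.intersection_update(live_osds)  # drop replicas on failed OSDs
        if not holders:
            continue  # no surviving copy left to replicate from
        missing = max(replicas - len(holders), 0)
        candidates = sorted(live_osds - holders, key=lambda o: (load[o], o))
        for target in candidates[:missing]:
            holders.add(target)
            load[target] += 1
            copies.setdefault(obj, []).append(target)
    return copies


placement = {
    "a": {"osd.0", "osd.1", "osd.2"},
    "b": {"osd.1", "osd.2", "osd.3"},
    "c": {"osd.0", "osd.2", "osd.3"},
}
# osd.2 has failed; three replicas per object are required.
copies = heal(placement, {"osd.0", "osd.1", "osd.3"}, replicas=3)
print(copies)
```

After the call, every object again has three replicas on the surviving OSDs, while the application that owns the volume would not have been involved at any point.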

However, this approach carries a certain risk. Ceph is highly complex, regardless of whether an admin wants to experiment with CRUSH or other cluster parameters. Familiarity with the technology is still necessary, because when the cluster behaves unexpectedly or a problem crops up that needs debugging, even the most amazing abstraction by Rook will be of no use. If you do not know what you are doing, the worst case scenario is the loss of data in the Ceph cluster.

At least the Rook developers are aware of this problem, and they are working on making various Ceph functions accessible through the Rook APIs in the future – explicitly, CRUSH.

Monitoring, Alerting, Trending

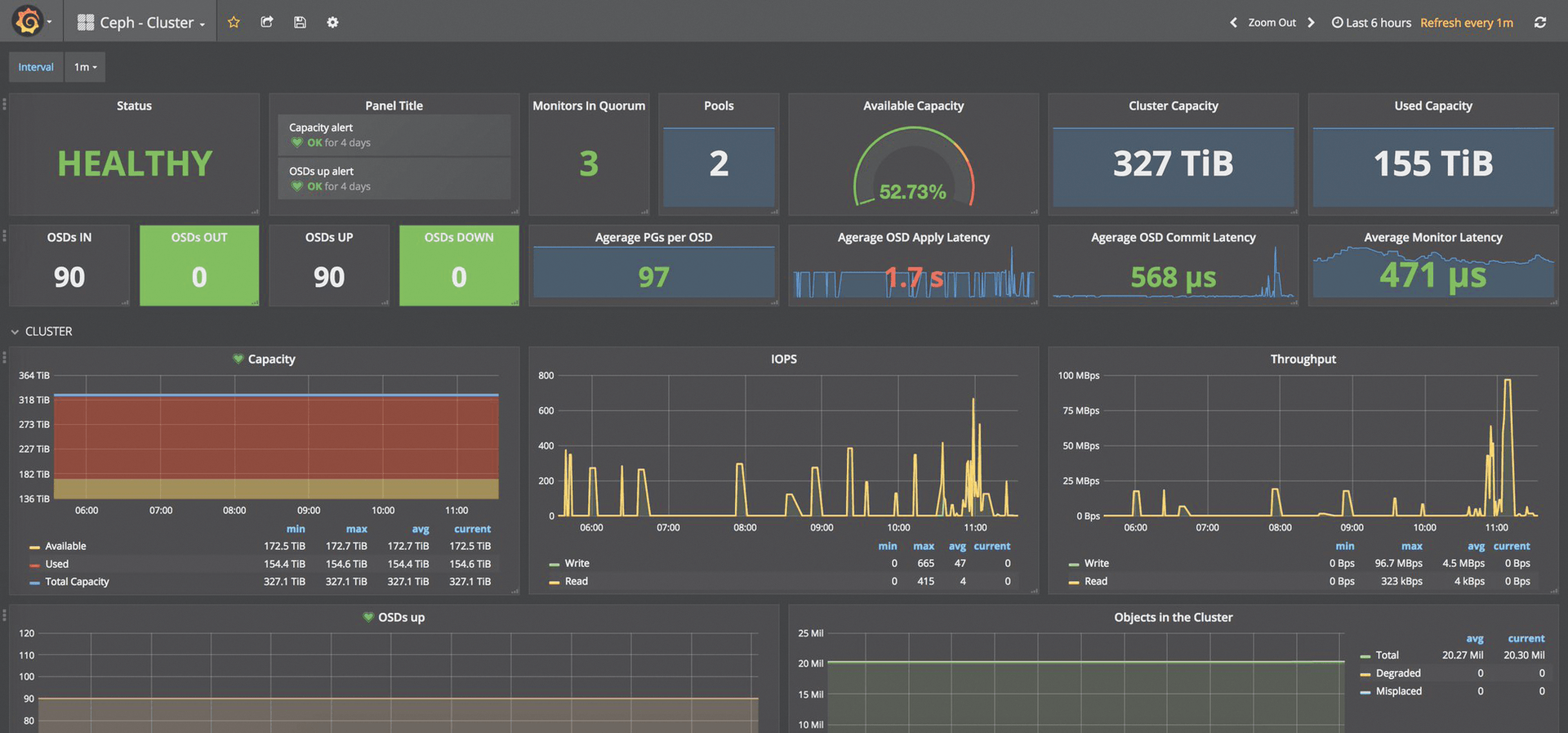

Even if Ceph, set up and operated by Rook, is largely self-managed, you will at least want to know what is currently happening in Ceph. Out of the box, Ceph comes with a variety of interfaces for exporting metric data about the current usage and state of the cluster. Rook configures these interfaces so that they can be used from the outside.

Ready-made Kubernetes templates for Prometheus, itself one of the hip cloud-ready apps, are available online and launch a complete monitoring, alerting, and trending (MAT) cluster in a matter of seconds [7]. The Rook developers, in turn, offer matching pods that wire the Rook Ceph to the Prometheus container. Detailed instructions can be found on the Rook website [8].

Ultimately, what you get is a complete MAT solution, including colorful dashboards in Grafana (Figure 4) that tell you how your Ceph cluster is getting on in real time.

Looking to the Future

Rook is not designed to work exclusively with Ceph, even if you might have gained this impression in the course of reading this article. Instead, the developers have big plans: CockroachDB [9], for example, is a redundant, high-performance SQL database that is also on the Rook to-do list, as is Minio [10], a kind of competitor to Ceph. Be warned against building production systems with these storage solutions, though: Both drivers are still under heavy development. Like Rook itself, its Ceph support is also tagged beta. However, the combination of Ceph and Rook is by far the most widespread, so large-scale teething pain is not to be expected.

Rook is backed by Upbound [11], a cloud-native Kubernetes provider. Although the list of maintainers does not contain many well-known names, a Red Hat employee is listed in addition to two people from Upbound, so you probably don't have to worry about the future of the project.

Of course, Red Hat itself is interested in creating the best possible connection between Kubernetes and Ceph, because Ceph is also marketed commercially as a replacement for conventional storage. Rook very conveniently came along as free software under the terms of Apache license v2.0.

Conclusions

The idea behind Rook is not particularly revolutionary. Packing Ceph, the storage solution that has been tried and tested for years, into containers and then rolling them out to Kubernetes is an obvious choice. However, the way Rook tackles the problem is impressive, because the solution for a supposedly trivial task involved a huge amount of brain power, development, and quality assurance. The Rook people are quite aware that they are dealing with 21st century gold (i.e., data), and they deal with it very carefully.

Given that Rook is still officially beta, it works excellently. If you want to mate up Kubernetes and Ceph, you will definitely want to take a look at Rook. To date, it is the best solution for this problem.

Rook keeps the promise of redundant storage for cloud-native apps. A Rook volume is no different from a typical Kubernetes volume in terms of the inside view. In the background, however, Rook ensures that replication and redundancy are handled by Ceph. In doing so, Rook solves the dilemma that neither the container environment, the developers, nor the customers cared to tackle – redundant storage.