Migrate your workloads to the cloud

Preparing to Move

Cloud computing has revolutionized IT and, in turn, has gradually shifted priorities among service providers and customers. The shift starts with classic hosting service providers, which are increasingly becoming platform providers and no longer operate servers for individual customers.

Many startups and smaller service providers now see their opportunities, because customers are taking advantage of variety rather than committing to vendor lock-in, as in the past. Finally, customers are increasingly using approaches such as serverless computing, and some have stopped operating their own infrastructure altogether.

Hardware Is a Pain

Customers have good reasons for doing without their own hardware. Maintaining and operating hardware is a troublesome, cost-intensive task. If you entrust this work to a platform provider, you can kill several birds with one stone. You get rid of the assets in the data center and save a considerable amount of paperwork. Moreover, clouds are often cheaper because providers pass on to their customers the savings they achieve through efficient processes.

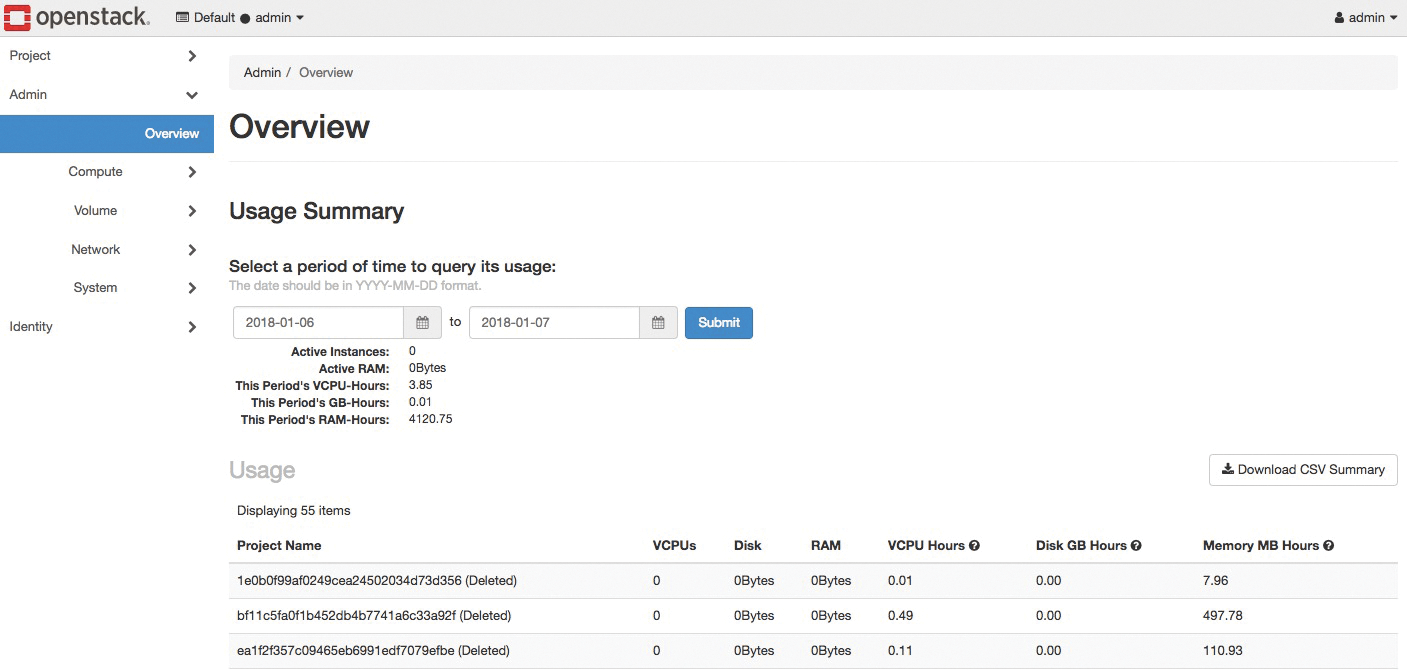

The one flaw in the beauty of this setup is that the move from bare metal to the cloud does not simply happen on its own. If you have a mental image of cool admins sitting in their command centers with drinks in their hands, watching their apps move to the cloud, you definitely need a reality check. Migrating to a cloud environment is a tedious task, with many pitfalls just waiting for the admin to stumble into. In most cases it is not enough simply to create virtual machines (VMs) in the cloud and copy the data over (Figure 1).

Cloud Services

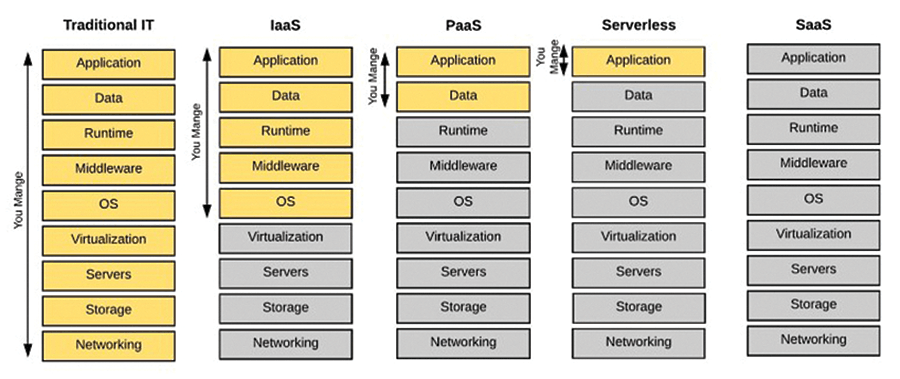

A modern cloud offers many types of services. Classic Infrastructure as a Service (IaaS) offers virtual systems in the form of VMs or containers, to which you roll out your services as needed, just as on physical hosts. Therefore, almost all workloads that were previously running on the physical servers can be moved to an IaaS environment.

One point to keep in mind is that Pacemaker and other cluster managers that rely on a service IP address, which simply moves to another host in the event of a failure, are difficult to implement in clouds.

Additionally, IaaS can only leverage the benefits of cloud environments to a limited extent. Clouds today offer far more functionality. Platform as a Service (PaaS), serverless computing, and various other as-a-service offerings are designed to make life easier for administrators. In the context of moving to the cloud, such services almost inevitably play a role.

The central issue that should concern admins from the beginning is time. Whether you have time to plan the move to the cloud or have to rush it (e.g., because data center contracts are expiring and the decision not to extend them came late) makes a considerable difference. Of course, the task is far more complex for people in a hurry.

Here, I first show you the ways and means of moving from classic hosted setups to IaaS. I then turn to the question of how the services in a cloud can be used to make the setup better. Finally, I also look at design principles for those who can afford the luxury of starting out on a greenfield.

Assess Your Starting Position

For the example here, a company wants to move its entire workload to the cloud. Previously, it operated the application on rented servers from a standard hosting service provider. In such scenarios, admins often make bad decisions under the pressure of time.

Out of necessity, the admin begins to plan a direct migration by first converting the currently running systems to a hard disk image that is then uploaded into the cloud, and finally starting a new VM with that image. Sounds good, but read the "Why a Direct Move is Difficult" box to see why this approach doesn't work in most cases.

Instead, admins would do well to adapt their workflows to suit the cloud. Step 1 in this procedure is an inventory that provides an overview of which systems your environment contains. Of course, this is best achieved if a Data Center Infrastructure Management (DCIM) system is present (e.g., NetBox [1]), which provides the relevant information at the push of a button.

However, such information systems are often missing, especially in conventional setups with historical growth. Your only option then is manual work. The better your inventory of the existing setup, the lower the risk of failure on moving to a cloud.
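
Where no DCIM system exists, even a small script over a hand-maintained host list can help with the inventory. The following is a minimal sketch only: The CSV layout, the column names, and the sample hosts are assumptions made for illustration, not part of any particular tool. It totals hosts, vCPUs, RAM, and disk space per role, which gives a rough idea of what the target environment needs to provide.

```python
import csv
import io
from collections import defaultdict

# Hypothetical hand-maintained inventory; the column names are assumptions.
INVENTORY_CSV = """hostname,role,vcpus,ram_gb,disk_gb
web01,web,4,8,40
web02,web,4,8,40
db01,database,8,32,500
nfs01,storage,2,4,2000
"""


def summarize_inventory(csv_text):
    """Return per-role totals of host count, vCPUs, RAM, and disk."""
    totals = defaultdict(
        lambda: {"hosts": 0, "vcpus": 0, "ram_gb": 0, "disk_gb": 0}
    )
    for row in csv.DictReader(io.StringIO(csv_text)):
        entry = totals[row["role"]]
        entry["hosts"] += 1
        for key in ("vcpus", "ram_gb", "disk_gb"):
            entry[key] += int(row[key])
    return dict(totals)


if __name__ == "__main__":
    # Print one summary line per role, sorted by role name.
    for role, data in sorted(summarize_inventory(INVENTORY_CSV).items()):
        print(role, data)
```

A real inventory would also need to record software versions, network dependencies, and storage mounts; the sketch covers only raw capacity.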

How Good Is the Automation?

Once you have determined what you actually need to have in the cloud, the next step is to ask yourself how to get the existing setup into a cloud. Highly automated operations make this far easier than their non-automated relatives. After all, operating system images are available for all relevant Linux varieties in the popular clouds. If you can start a series of VMs and fire your preconfigured automation at them, all you have to worry about is migrating your existing data; apart from that, your work is done.

If the degree of automation is low, as is often the case in conventional environments, things get trickier. When time is of the essence, admins will hardly have the opportunity to integrate automation quickly into the existing setup; rather, this task has to be done after the migration as part of cloud-specific optimization. If you have to deal with a setup that has very little automation, your first steps in the cloud will still be classic manual work.

Whether manual or automated, in the next step of the move, you ideally want to create a virtual environment in your section of the cloud that matches your existing setup in terms of type, scope, and performance.

Cloud Differences

If you migrate existing setups, you should also consider the many small differences between clouds and normal environments, such as the network configuration already mentioned. In clouds, it is based on DHCP; static allocation of IPs to VMs is also feasible with some tinkering but does not allow you to manage the networks with the cloud APIs, because the cloud is not aware of the statically configured IP on your VM.

High availability is a tricky topic in clouds. Outside of clouds, a cluster manager is often used (e.g., Pacemaker). If you want redundant data storage, you will typically turn to shared storage with RAID or software solutions like a distributed replicated block device (DRBD). The storage part is easy to implement in clouds. Most clouds offer a volume service that is redundant in itself.

By storing the relevant data on a volume that can be attached to VMs as required, you can effectively resolve this part of the problem.

More difficult is the question as to how typical services, such as a database, can be set up to provide high availability. The combination of Pacemaker and Corosync, which switches IP addresses back and forth, does not work out of the box in AWS. The additional IP is not visible to other VMs on the same subnet unless it is assigned to a system through the AWS API.

This requirement contradicts the idea of an IP address that the cluster can move on its own. Resource agents that let Pacemaker talk to the AWS API do exist (e.g., aws-vpc-move-ip in the ClusterLabs resource-agents collection), but they tie the cluster to one provider's API and credentials.

If you want to implement high availability in clouds, you are almost inevitably forced to rely on the cloud-specific tools for the respective environment. Using AWS as an example, you can understand what this might look like thanks to an online tutorial [2], where the author explains how to use built-in AWS resources to obtain a switching MariaDB instance with high availability.

How Data Gets into the Cloud

Without relying on the cloud environment's own technology, it is difficult to answer the question of how existing data finds its way in. What sounds like a trivial task can become a real pitfall; how complex the process actually is depends on the volume of data to be transferred.

For example, if the customer database is only a few gigabytes, it won't be a big problem to copy it once from A to B within a maintenance window. However, if the volume of data is larger, a different approach is needed, because the data must not change on the existing side after synchronization from the old to the new setup, and a long copy would mean a correspondingly long freeze.
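
Whether a one-time copy fits into the maintenance window is simple arithmetic. The sketch below is an estimate under stated assumptions: The function names are made up for this example, sizes are decimal gigabytes, and the `efficiency` factor of 0.8 is a guess at how much of the nominal bandwidth a real transfer achieves.

```python
def transfer_hours(data_gb, link_mbit_s, efficiency=0.8):
    """Estimate the hours needed to copy data_gb gigabytes over a link.

    efficiency is the assumed fraction of the nominal bandwidth that the
    transfer actually achieves.
    """
    if link_mbit_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be positive, efficiency in (0, 1]")
    bits = data_gb * 8 * 1000**3  # decimal gigabytes to bits
    seconds = bits / (link_mbit_s * 1000**2 * efficiency)
    return seconds / 3600


def fits_window(data_gb, link_mbit_s, window_hours, efficiency=0.8):
    """Return True if the estimated copy time fits the maintenance window."""
    return transfer_hours(data_gb, link_mbit_s, efficiency) <= window_hours


if __name__ == "__main__":
    # 5 GB over 100 Mbit/s takes about 0.14 hours and fits a 2-hour window.
    print(round(transfer_hours(5, 100), 2), fits_window(5, 100, 2))
    # 2 TB over the same link takes about 55.6 hours and does not fit 4 hours.
    print(round(transfer_hours(2000, 100), 1), fits_window(2000, 100, 4))
```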

One possible way out of this dilemma is to operate a database in the target setup as a slave of what is still the production database. Most cloud providers now have a VPN-as-a-Service function that lets you use a VPN to connect to the virtual network of the virtual environment in the cloud. Your own client then behaves as if it were also a VM. If you connect the old network and the new network in this way, one instance of MariaDB can run in slave mode on the new network and receive updates from the master.

This approach also solves the problem of the new setup not having the latest data at the moment when the switchover takes place. If you copy the data manually from A to B in the traditional way, you would have to trigger a new sync before the go-live of site B to copy the latest chunks of data that changed since the last sync.
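
Before the switchover, it is worth confirming that the instance in the cloud has really caught up. The following sketch assumes that the output of MariaDB's `SHOW SLAVE STATUS` has already been fetched into a dictionary by some database driver; how the row is fetched is left out, and the function name is hypothetical. The field names `Slave_IO_Running`, `Slave_SQL_Running`, and `Seconds_Behind_Master` are the ones MariaDB reports.

```python
def replica_ready_for_cutover(status, max_lag_seconds=0):
    """Return True if both replication threads run and lag is within limits.

    status is expected to be one row of SHOW SLAVE STATUS as a dict.
    """
    lag = status.get("Seconds_Behind_Master")
    return (
        status.get("Slave_IO_Running") == "Yes"
        and status.get("Slave_SQL_Running") == "Yes"
        and lag is not None  # NULL means replication is not running
        and int(lag) <= max_lag_seconds
    )


if __name__ == "__main__":
    # Sample rows, invented for illustration.
    healthy = {
        "Slave_IO_Running": "Yes",
        "Slave_SQL_Running": "Yes",
        "Seconds_Behind_Master": 0,
    }
    lagging = dict(healthy, Seconds_Behind_Master=120)
    print(replica_ready_for_cutover(healthy))  # True
    print(replica_ready_for_cutover(lagging))  # False
```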

The answer to the question of how classic assets like image files find their way into the cloud, and how you can configure your setup so that all systems that need access to it have that access later, is not quite as easy. Sure, if you have set up NFS, you can also set up an NFS server in the cloud and have it host your data assets, but the copy problem remains.

If you are setting up a VPN anyway, you can use tools like Rsync to copy the data incrementally. If the source and target systems can reach each other over the network and at least one of them has a public IP, Rsync can also be used via SSH in most cases. However, a final sync is then necessary, as with the database.
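
The repeated sync and the final sync can use the same command. The sketch below only assembles an Rsync command line for transfer over SSH; the helper name, the paths, and the target host (an address from the documentation range) are assumptions for illustration. The options used are standard Rsync options.

```python
import shlex


def build_rsync_command(source_dir, target, dry_run=False):
    """Assemble an rsync-over-SSH command as an argument list."""
    cmd = [
        "rsync",
        "--archive",   # preserve permissions, owners, and timestamps
        "--compress",  # compress data on the wire
        "--delete",    # remove files on the target that vanished at the source
        "--partial",   # keep partially transferred files for resuming
        "-e", "ssh",   # use SSH as the transport
    ]
    if dry_run:
        cmd.append("--dry-run")  # only report what would be transferred
    # A trailing slash copies the directory's contents, not the directory.
    cmd += [source_dir.rstrip("/") + "/", target]
    return cmd


if __name__ == "__main__":
    # Hypothetical source path and target host.
    command = build_rsync_command(
        "/srv/assets", "deploy@203.0.113.10:/srv/assets/", dry_run=True
    )
    print(shlex.join(command))
```

Passing the resulting list to `subprocess.run(command, check=True)` would execute it. Because only changed files are sent, the final sync inside the maintenance window is usually much shorter than the first one.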

Planning for D-Day

Speaking of the switchover: Ideally, administrators plan the required maintenance windows well in advance. An important question is how to make sure that, from a certain point in time, customers are directed to the new system rather than continuing to access the old one. This can be done ad hoc with routing protocols (e.g., the Border Gateway Protocol, BGP), but that requires a complex network setup and access to parts of the network that customers cannot reach, at least in large public clouds.

The DNS-based approach is better: First reduce the time to live (TTL) of your host entries, or at least of those entries that play a role in the move. Once the switchover time and maintenance window have arrived, change the DNS entries accordingly. Because of the shorter TTL, the name servers used by your customers fetch the new DNS data shortly afterward, and the switch is done.

Of course, some DNS servers ignore the TTL settings in domains. However, this approach should work for most customers.
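
The timing of the TTL reduction follows from the old TTL: Resolvers that cached a record just before the change keep it for up to the old TTL, so the reduction has to happen at least that long before the switchover. The sketch below works this out; the function names and the one-hour safety margin are assumptions for illustration.

```python
from datetime import datetime, timedelta


def latest_ttl_change(cutover, old_ttl_seconds, safety_margin_seconds=3600):
    """Return the latest moment at which the TTL should be lowered.

    Caches may hold a record with the old TTL for old_ttl_seconds, so the
    lower TTL must be published at least that long before the cutover.
    """
    return cutover - timedelta(seconds=old_ttl_seconds + safety_margin_seconds)


def worst_case_switch_delay(new_ttl_seconds):
    """Return how long well-behaved resolvers may still serve the old address."""
    return timedelta(seconds=new_ttl_seconds)


if __name__ == "__main__":
    cutover = datetime(2024, 1, 10, 2, 0)  # hypothetical maintenance window
    # With an old TTL of one day, lower the TTL by 2024-01-09 01:00.
    print(latest_ttl_change(cutover, old_ttl_seconds=86400))
    # With a new TTL of 300 seconds, clients follow within five minutes.
    print(worst_case_switch_delay(300))
```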

Harvesting Cloud Benefits

Once the transition to the cloud has been completed successfully, by all means go ahead and pop the champagne corks, but if you move a setup to the cloud as described here, you still will be confronted with several other tasks. For many aspects, however, the public clouds at least offer additional features that make operating a virtual environment far easier.

Databases are a perfect example: Every public cloud offers some form of database as a service, which is basically Software as a Service (SaaS). You do not start a VM and manually fire up a database in it. Instead, you simply pass the key data of the required database to the cloud through the appropriate API (e.g., MariaDB as the engine, the root password, and high availability). The cloud then takes care of the rest.
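
What such key data might look like is sketched below. The field names and the request format are purely hypothetical and do not correspond to any specific provider's API; every cloud defines its own parameters, so treat this only as an illustration of describing a database declaratively instead of building it by hand.

```python
import json


def build_database_request(name, root_password, engine="mariadb",
                           high_availability=True, storage_gb=20):
    """Build the JSON body for a hypothetical database-as-a-service call."""
    if not root_password:
        raise ValueError("root_password must not be empty")
    if storage_gb <= 0:
        raise ValueError("storage_gb must be positive")
    spec = {
        "name": name,
        "engine": engine,
        "root_password": root_password,
        "high_availability": high_availability,
        "storage_gb": storage_gb,
    }
    return json.dumps(spec, indent=2, sort_keys=True)


if __name__ == "__main__":
    # The password would normally come from a secret store, not from code.
    print(build_database_request("customers", "change-me"))
```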

A regular VM will still be running in this kind of setup, but the cloud environment configures it automatically as specified by the admin. Accordingly, the unit you manage is no longer the VM, but the database itself. Depending on the cloud implementation, a variety of practical functions are available, such as backup at the push of a button, snapshots, or the ability to create user accounts in the database via the cloud API.

This approach obviously is more flexible than maintaining a VM with a built-in database. Additionally, admins do not have to worry about issues such as data retention and system maintenance of the VM; if required, most clouds let you start a new Database-as-a-Service instance and attach the existing data to it.

The plan to use different as-a-service offerings in the selected cloud does not apply just to obvious components like databases. It is always a good idea to take a closer look at the options your choice of cloud offers because PaaS offerings can now be found almost everywhere (Figure 3).

If you need a web server to run a PHP or Go application for your web environment, you can of course run a fleet of Apache VMs and the corresponding configurations; or, you can hand this task over to the PaaS component of the respective cloud. The cloud expects, for example, a tarball with the application to be operated and rolls out a corresponding VM in which the application then runs.

The advantage is that the cloud's PaaS components often offer smart add-on functions such as automatic load monitoring, which launches additional instances of the application if required. Of course, this only works if you use the Load Balancer-as-a-Service (LBaaS) function of the respective cloud, which is highly recommended anyway, because LBaaS also actively takes some work off the admin's hands.
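
The decision behind such automatic scaling can be illustrated with a simple proportional rule. This is a sketch of the general idea, not the algorithm of any particular cloud; the function name, the percentage-based load metric, and the instance limits are assumptions.

```python
import math


def desired_instances(current, observed_load, target_load,
                      minimum=1, maximum=10):
    """Return the instance count that brings the average load near the target.

    observed_load and target_load use the same unit, for example the
    average CPU utilization per instance in percent.
    """
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load positive")
    wanted = math.ceil(current * observed_load / target_load)
    # Clamp the result to the configured limits.
    return max(minimum, min(maximum, wanted))


if __name__ == "__main__":
    # Two instances at 90 percent load with a 60 percent target: scale to 3.
    print(desired_instances(2, 90, 60))
    # Four instances at 30 percent load with a 60 percent target: scale to 2.
    print(desired_instances(4, 30, 60))
```

The load balancer matters here because new instances only help if incoming requests are actually distributed to them.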

All told, you should consider everything that removes IaaS components from the setup. The fewer classic VMs you have to manage, the easier it is to maintain the setup (Figure 4).

![Cloud services such as dynamically configurable load balancers make life considerably easier for the admin (Microsoft Azure docs [3]).](images/F04-cloud-migration_5.png)

The icing on the cake is orchestration. If you combine various as-a-service cloud offerings in such a way that they work together and automate their IaaS components in a meaningful way, you can ultimately use orchestration to bundle all their resources. A complete virtual environment with all the required components can then be set up in a few minutes with an orchestration template.

Orchestrated environments of this type make good use of the benefits that clouds deliver; they create virtual networks and virtual storage devices along with their respective VMs.
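
At its core, an orchestration template is a list of resources and the dependencies between them, from which the orchestrator derives a creation order. The sketch below illustrates that idea with Python's standard `graphlib` module (Python 3.9 or later); the resource names and the template format are invented for this example and do not follow the syntax of any real orchestration service.

```python
from graphlib import TopologicalSorter

# Hypothetical template: each resource maps to the resources it depends on.
TEMPLATE = {
    "network": [],
    "volume": [],
    "database": ["network", "volume"],
    "web_vm": ["network", "database"],
    "load_balancer": ["web_vm"],
}


def creation_order(template):
    """Return the resources in an order that satisfies all dependencies."""
    return list(TopologicalSorter(template).static_order())


if __name__ == "__main__":
    for step, resource in enumerate(creation_order(TEMPLATE), start=1):
        print(step, resource)
```

Resources without dependencies on each other, such as the network and the volume here, could also be created in parallel, which is part of why a complete environment can be ready within minutes.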

Everything New

If you follow the advice given so far when moving to the cloud, you will end up with a versatile and cloud-oriented setup, although it still requires some work and is still not perfectly adapted.

Happy is the admin who can prepare an environment for operation in a cloud at the push of a button. Many companies see moving to a cloud environment as a radical and welcome opportunity to break with old customs, which often means getting rid of legacy software if it doesn't suit the typical cloud mantras.

Remember that "cloud ready" actually means that an application is made to run in a cloud with its various as-a-service offerings and APIs, which implies various details that strongly affect the application design. One important factor, for example, is breaking down an application into microservices.

The one-component, one-task rule applies, offering great flexibility in day-to-day operations and making it easy to pack the individual components into containers and operate them as part of a Kubernetes cluster, for example. Such a cluster can just as easily run in a public cloud.

One thing must be clear: If you choose this approach, you are opting for a marathon and not a sprint. A rewrite can mean a huge time investment, especially in scenarios in which functionality currently implemented by monolithic software needs to be migrated to the cloud.

The reward is that you end up with a product that is perfectly adapted to the needs of clouds, follows classic cloud-ready standards, and avoids various issues that arise when conventional software is migrated to the cloud. If you rely on microservices and use standardized REST APIs (e.g., to let the individual components of an app communicate with each other), you can avoid many problems with regard to high availability from the outset.

Conclusions

Whether you can allow yourself the luxury of rewriting an application for the cloud or need to migrate existing workloads to the cloud, most environments operate well in clouds. If you want perfect "cloud readiness," you will rarely be able to avoid rewriting the application. In return, you get a new application that is built according to the latest standards and fits the cloud like a glove.

Even if you can't do that, you still have no reason to panic: Conventional applications will run well in clouds with a few tricks. However, admins must view the cloud as an ally and not as an enemy to be worked around as efficiently as possible.

Even if you end up running legacy workloads, you shouldn't ignore the various modern features of the target cloud – on the contrary, it's better to employ them actively.