Optimization and standardization of PowerShell scripts

Beautiful Code

The use of scripts in the work environment has changed considerably over the past decade. Initially, scripts handled batch processing with rudimentary control structures; functions and executables were called only in response to events and return values. The functional scope of the scripting language itself was therefore strongly focused on processing character strings.

The languages of the last century (e.g., Perl, Awk, and Bash shell scripting) are excellent tools for analyzing logfiles or the results of a command with regular expressions. PowerShell, on the other hand, focuses far more on the interfaces of server services, systems, and processes, with no need to detour through return values.

Another change in scripting relates to the relevance and design of a script app: Before PowerShell, scripts were typically developed by administrators to support their own work. Because they regarded such a script as a fairly personal tool, the applied standards suffered as well: In fact, there weren't any. The usual principles back then were:

- Quick and dirty: Only the function is important.

- Documentation is superfluous: After all, I wrote the script.

The lack of documentation can have negative consequences for the author, though, leading to cost-intensive delays in migration projects three years down the road if the admin no longer understands the code or its purpose.

Basic Principles for Business-Critical Scripts

The significance of PowerShell is best described as "enterprise scripting." On many Microsoft servers, PowerShell scripts are the only way to ensure comprehensive management – Exchange and Azure Active Directory being prime examples. The script thus gains business-critical relevance. When you create a script, you need to be aware of how its functionality is maintained in the server architecture when faced with staff changes, restructuring, and version changes.

The central principles are therefore ease of maintenance, outsourcing, and reusability, as well as detailed documentation, and these points should be the focus of script creation:

- Standardization of the inner and outer structure of a script

- Modularization through outsourcing of components

- Naming conventions for variables and functions

- Exception handling

- Definition of uniform exit codes

- Templates for scripts and functions

- Standardized documentation of the code

- Rules for optimal flow control

Additionally, consider talking to your "scripting officer" to ensure compliance with corporate policy. Creating company-wide script repositories also helps prevent redundancy during development.

Building Stable Script Frameworks

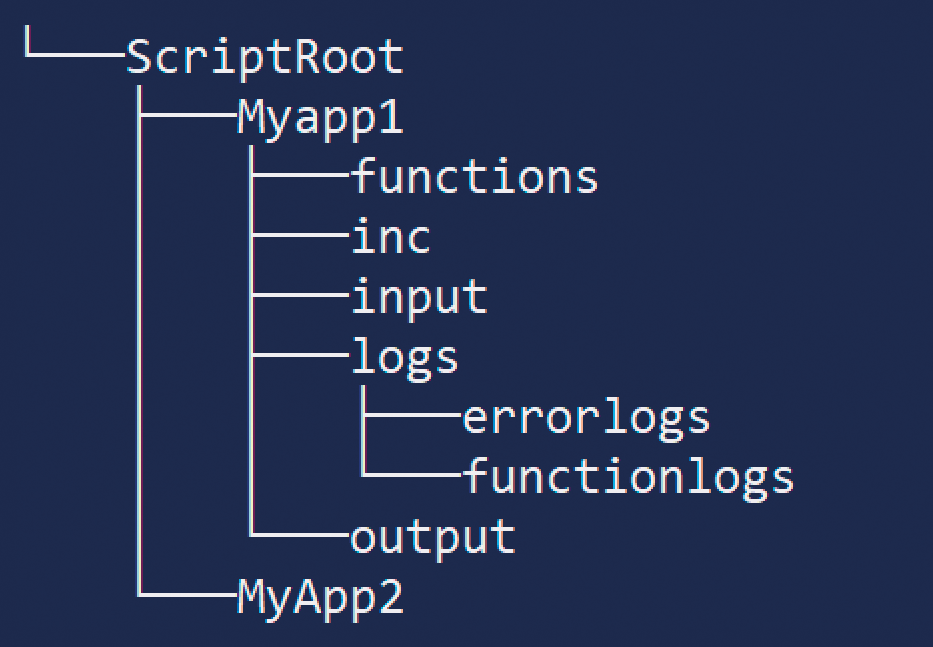

A uniform, relative path structure allows an application to be ported to other systems. Absolute paths should be avoided because adapting them means unnecessary overhead. Creating a subfolder structure as shown in Figure 1 has proven successful: Below the home folder for all script apps are individual applications (e.g., MyApp1 and MyApp2). Apart from its subfolders, each application folder contains only the main processing file, which uses the same name as the application folder (e.g., MyApp1.ps1). The application folder can be determined dynamically from within the PowerShell script:

$StrScriptFolder = ($MyInvocation.MyCommand).Path | Split-Path -Parent

The relative structure can then be represented easily in the code:

$StrOutputFolder = $StrScriptFolder + "\output"; [...]

The subfolders are assigned to the main script components Logging, Libraries, Reports, and External Control. Each script should be traceable: If critical errors occur during processing, they should be written to an errorlogs subfolder. For later analysis, I recommend saving the log as a CSV file with unambiguous column names. Good choices are the date and time of the error, the processing step that caused it, an error level (error, warning, or info), and optionally the line in the source code. To standardize your error logs, it makes sense to use a dedicated function (as opposed to calling Add-Content directly).
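A dedicated logging function of this kind could look roughly like the following sketch. The function name Write-UD_ErrorLog, its parameters, the column names, and the one-file-per-day naming are assumptions made for illustration, and a temporary folder stands in for the errorlogs subfolder.

```powershell
# Sketch only: Write-UD_ErrorLog and all parameter names are hypothetical.
function Write-UD_ErrorLog
{
    param
    (
        [Parameter(Mandatory = $true)][string]$StrLogFolder,
        [Parameter(Mandatory = $true)][string]$StrProcess,
        [Parameter(Mandatory = $true)][string]$StrMessage,
        [ValidateSet('Error', 'Warning', 'Info')][string]$StrLevel = 'Error',
        [int]$IntLine = 0
    )

    # Create the log folder on first use (-Force tolerates an existing folder).
    New-Item -ItemType Directory -Path $StrLogFolder -Force | Out-Null

    # One file per day; this naming pattern is an assumption.
    $StrLogFile = Join-Path -Path $StrLogFolder -ChildPath ('errors_{0:yyyyMMdd}.csv' -f (Get-Date))

    # The property order defines the column order in the CSV file.
    $ObjEntry = [pscustomobject]@{
        TimeStamp = (Get-Date).ToString('s')
        Process   = $StrProcess
        Level     = $StrLevel
        Line      = $IntLine
        Message   = $StrMessage
    }
    $ObjEntry | Export-Csv -Path $StrLogFile -Append -NoTypeInformation

    # Return the file path so that callers can report where the entry went.
    return $StrLogFile
}

# Usage: a temporary folder stands in for the application's errorlogs subfolder.
$StrLogFolder = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'MyApp1_errorlogs'
$StrLogFile = Write-UD_ErrorLog -StrLogFolder $StrLogFolder -StrProcess 'Read input' -StrMessage 'Input file not found' -StrLevel 'Warning' -IntLine 42
Import-Csv -Path $StrLogFile | Select-Object -Last 1
```

Because every entry passes through the same function, all logs share identical columns and can be filtered later with Import-Csv and Where-Object.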

In addition to errors or unexpected return values, you should always log script actions for creating, deleting, moving, and renaming objects. To distinguish these logs from the error log, they are stored in the functionlogs subfolder. When a script creates reports, the output folder is the storage location. This also corresponds to the structure given by comment-based help, which is explained in the Documentation section.

Control information (e.g., which objects should be monitored in which domain and how to monitor them) should not reside within the source code. For one thing, retrospective editing is difficult because the information has to be found in the programming logic; for another, transferring data maintenance to specialist personnel without programming skills becomes difficult. The principle of maintainability is thus violated. The right place for control information is the input folder.
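As a rough illustration, control data could be kept in a CSV file in the input folder and read at run time. The file name monitoring.csv, its columns, and the sample rows are invented for this sketch; the file is written to a temporary folder only so that the example runs on its own.

```powershell
# Sketch only: file name, columns, and sample rows are hypothetical.
$StrInputFolder = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'MyApp1_input'
New-Item -ItemType Directory -Path $StrInputFolder -Force | Out-Null
$StrControlFile = Join-Path -Path $StrInputFolder -ChildPath 'monitoring.csv'

# Sample control data; in practice, specialist staff maintain this file.
$StrSample = @'
Domain,ObjectName,Check
mydom.com,SRV01,Ping
mydom.com,SRV02,Service
'@
Set-Content -Path $StrControlFile -Value $StrSample

# The script reads the control data instead of hard-coding it.
$ArrControl = @(Import-Csv -Path $StrControlFile)
foreach ($ObjRow in $ArrControl)
{
    "{0}: run check '{1}' in domain {2}" -f $ObjRow.ObjectName, $ObjRow.Check, $ObjRow.Domain
}
```

Adding a server to the monitoring then means adding a line to the file, not touching the programming logic.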

In addition to data, script fragments and constants can also be swapped out. A separate folder is recommended for these "scriptlets" with a view to reusability. In the history of software development, inc, short for "include" has established itself as the typical folder name for these components.
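One common way to load such scriptlets is dot-sourcing, which runs a file in the scope of the calling script. The following sketch assumes a scriptlet named constants.ps1 and a constant $STRAPPNAME, both invented here; the file is created in a temporary folder only to keep the example self-contained.

```powershell
# Sketch only: the scriptlet name and its content are hypothetical.
$StrIncFolder = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'MyApp1_inc'
New-Item -ItemType Directory -Path $StrIncFolder -Force | Out-Null
Set-Content -Path (Join-Path -Path $StrIncFolder -ChildPath 'constants.ps1') -Value '$STRAPPNAME = "MyApp1"'

# Dot-source every scriptlet so that its functions and constants
# become available in the scope of the calling script.
foreach ($ObjFile in Get-ChildItem -Path $StrIncFolder -Filter '*.ps1')
{
    . $ObjFile.FullName
}

"Loaded constant: $STRAPPNAME"
```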

Format Source Code Cleanly

A clear internal structure greatly simplifies troubleshooting and error elimination. Here, too, uniform specifications should be available, as Figure 2 shows: The region keyword combines areas of the source code into a logical unit; regions are comments and therefore irrelevant for processing by the interpreter. Regions can be nested (i.e., they can also be created along the parent-child axis). Besides the basic regions init, process, and clear described in the figure, a test region is recommended, in which you can check whether paths in the filesystem or external libraries exist. Further regions can be formed, for example, from units within the main sections that are related in terms of content.
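A skeleton along these lines might look as follows. The region names init, test, process, and clear come from the text; the statements inside them and the nested region name process-collect are placeholders chosen for this sketch.

```powershell
#region init
# Set up paths and variables used by the rest of the script.
$StrWorkFolder = [System.IO.Path]::GetTempPath()
$ArrResults = @()
#endregion init

#region test
# Check preconditions before any processing starts.
$BlnFolderExists = Test-Path -Path $StrWorkFolder
#endregion test

#region process
if ($BlnFolderExists)
{
    #region process-collect
    # Nested region: a content-related unit inside the main section.
    $ArrResults = @(Get-ChildItem -Path $StrWorkFolder | Select-Object -First 5)
    #endregion process-collect
}
$IntResultCount = $ArrResults.Count
#endregion process

#region clear
# Release what is no longer needed.
Remove-Variable -Name ArrResults
#endregion clear
```

Editors that support code folding can collapse each region, so the overall flow of the script remains visible at a glance.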

Readable code also includes the delimitation of statement blocks. Wherever you have foreach, if, and so on in a nested form, a standardized approach to indentation becomes important (e.g., two to four blanks or a Tab setting). Some editors, such as Visual Studio Code, provide support for formatting the source code. Although the position of the opening and closing curly brackets is controversial among developers, placing the brackets on a separate line is a good idea (Figure 3).

Using Normalized Script Notation

Without conventions for naming variables, constants, and functions in administrative scripting, processes become untraceable, because variables are not only value stores; they also fulfill documentation tasks. When assigning identifiers, you can use conventions from general software development.

The problem of mandatory notation has existed since the first programs were developed. Normalization approaches relate to syntax and variable name components (i.e., to increase the readability of an identifier or derive the type and function of a value from the name).

Two solutions for script naming normalization – upper CamelCase and Hungarian notation – solve these two problems. Upper CamelCase assumes that names usually comprise composite components. If this is the case, each substring should begin with an uppercase letter (e.g., StrUser). Hungarian notation, conceived by Charles Simonyi, uses a prefix to describe the function or type of a variable. For example, the p prefix designates a pointer type, dn a domain, and rg a range (array).

These examples alone illustrate the problem of a clear classification of type. Does "type" mean the data type or the function in the program? Because this cannot be answered unequivocally, Hungarian notation has branched off into two separate directions: Apps Hungarian notation as a functional approach and Systems Hungarian notation as a data type-specific declaration. These two approaches are not practicable for the development of scripts in PowerShell because of their disadvantages:

- The prefix is hard to read because the two parts of a name are difficult to tell apart.

- Systems Hungarian contains old-fashioned C-style data types (e.g., w for word).

- Type changes in both systems would mean renaming.

- The Systems Hungarian extension of the type system is too complex.

A script specification needs to be intuitive and easy to implement. Normalized script notation (NSN) is a combination of approaches. In principle, it differentiates between the processing of objects, structures, and value containers. A few basic data types are used when storing character strings or properties of an object (Table 1):

$StrToolPath = $StrActualScriptPath + "\tools\tools.ps1"; $BlnFlag = $FALSE;

Table 1: NSN Prefixes

| Data Type | Prefix |
|---|---|
| String | Str |
| Integer | |
| Double | |
| Boolean | Bln |
| Date and Time | |
| Object | |
| Variant | |

In contrast, when objects are processed, the schematic type determines the prefix. In addition to the data storage type, the variable name also distinguishes between items and lists. After the two-letter prefix for the provider type (e.g., Ad) and a letter for the object class, l denotes a list and i a single item. This standard could be used as a basis for working with Microsoft Active Directory:

- User list: AdUl

- User item: AdUi

- Group list: AdGl

- Group item: AdGi

- Computer list: AdCl

- Computer item: AdCi

Constants – memory areas that cannot be changed after the first assignment – are a special feature. Because PowerShell has no CONST keyword to distinguish constants from variables, complete capitalization of a constant (e.g., $STRADSPATH = "ou=Scripting,dc=Mydom,dc=com") is useful.
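Capitalization is only a visual convention. If the value also needs technical protection, one option not mentioned above is the -Option Constant parameter of the New-Variable cmdlet. The following sketch combines both; the try/catch around the second assignment is there only to show that the change is rejected.

```powershell
# Create a protected constant. New-Variable fails if the name
# already exists in the current session.
New-Variable -Name STRADSPATH -Value 'ou=Scripting,dc=Mydom,dc=com' -Option Constant

$BlnBlocked = $false
try
{
    # A later assignment raises an error instead of silently changing the value.
    $STRADSPATH = 'ou=Other,dc=Mydom,dc=com'
}
catch
{
    $BlnBlocked = $true
}

"Value: $STRADSPATH (assignment blocked: $BlnBlocked)"
```

A constant created this way cannot be removed or changed for the rest of the session, which is worth keeping in mind when you rerun a script repeatedly in the same console during testing.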

Pitfalls When Naming Functions

Functions make good scripts. The ability to encapsulate instructions and pass in parameters is what makes enterprise scripting possible in the first place and keeps code reusable. However, errors in naming are a serious possibility. For example, if you want an application to find unneeded groups in Active Directory, PowerShell will need to access the LDAP metabase of the Active Directory objects to find valid data. If you encapsulate this access in a function named Get-AdObject, you have implemented the verb-noun naming principle. However, the Active Directory PowerShell module already has a cmdlet with that name. Once the function is loaded, it takes precedence over the cmdlet, with unwanted side effects: When resolving commands with identical names, PowerShell checks aliases first, then functions, and only then cmdlets, so a cmdlet takes third place.

To avoid duplication of names, you should use a label in a function name. For example, Verb-UD_Noun is a good scheme, where Verb stands for the actions known in PowerShell (see Get-Verb), UD_ stands for "user defined," and Noun is the administrative target.
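Before settling on a function name, you can ask PowerShell whether a command of that name already exists. The helper Test-UD_CommandName and the candidate name Get-UD_StaleGroup in this sketch are hypothetical; Get-Command is the built-in cmdlet that does the lookup.

```powershell
# Sketch only: Test-UD_CommandName is a hypothetical helper.
function Test-UD_CommandName
{
    param
    (
        [Parameter(Mandatory = $true)][string]$StrName
    )

    # Get-Command returns nothing for unknown names once its error is
    # suppressed; casting the result to [bool] then yields $false.
    return [bool](Get-Command -Name $StrName -ErrorAction SilentlyContinue)
}

# An existing cmdlet name would collide; the labeled name is expected to be free.
$BlnCmdletTaken = Test-UD_CommandName -StrName 'Get-ChildItem'
$BlnLabeledTaken = Test-UD_CommandName -StrName 'Get-UD_StaleGroup'
"Get-ChildItem taken: $BlnCmdletTaken; Get-UD_StaleGroup taken: $BlnLabeledTaken"
```

If a collision has already happened, a shadowed cmdlet can still be reached with its module-qualified name (e.g., Microsoft.PowerShell.Management\Get-ChildItem).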

Software Without Documentation Is Worthless

Script authors become painfully aware of inadequate documentation when they look at their work after a few months. A PowerShell script should contain two forms of documentation: comment-based help and inline. Comment-based help replaces the script header in other languages and relies on keywords in a comment block. The meaning of the individual sections can be retrieved by integrated help with

Get-Help about_Comment_Based_Help

in the PowerShell console. Descriptions, examples, and parameter usage should be the minimum content for comment-based help.
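A minimal comment-based help block with description, parameter usage, and an example might look like this sketch. The function Get-UD_FileCount, its parameter, and the path in the example section are invented for illustration; the keywords are the ones described in about_Comment_Based_Help.

```powershell
# Sketch only: function name, parameter, and example path are hypothetical.
function Get-UD_FileCount
{
    <#
    .SYNOPSIS
    Counts the files in a folder.
    .DESCRIPTION
    Returns the number of files located directly in the given folder.
    Subfolders are not searched.
    .PARAMETER StrPath
    Folder whose files are counted.
    .EXAMPLE
    Get-UD_FileCount -StrPath 'C:\Scripts\MyApp1\output'
    .OUTPUTS
    System.Int32
    #>
    param
    (
        [Parameter(Mandatory = $true)][string]$StrPath
    )

    return @(Get-ChildItem -Path $StrPath -File).Count
}

# The help text is now available through the regular help system.
$StrSynopsis = (Get-Help -Name Get-UD_FileCount).Synopsis
$StrSynopsis
```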

Comments within the source text always precede the statement that is to be explained. The content should be detailed and designed in such a way that co-workers who were not involved in the development of the program can also follow the operations in the script.

Handle Exceptions, Not Errors

When you develop PowerShell scripts, you need to perform an extensive testing phase before the scripts go live. Support is provided by PowerShell modules such as Pester (Get-Help about_Pester). Additionally, debug-level settings can be helpful. To start line-by-line debugging with variable tracking, use:

Set-PSDebug -Trace 2

However, despite careful development, run-time errors can be caused by missing network connections, insufficient privileges, unavailable servers, and so on. To field such errors, you first need to set the ErrorActionPreference variable more strictly. (See also the "Error Handling Checklist" box.) The default value is Continue: Non-terminating errors are reported, but the script keeps running, so only terminating errors reach a catch block. To treat every error as terminating, set the value to Stop; statement-specific error handling can then be used with try/catch/finally.
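The interplay of the preference variable and try/catch/finally can be sketched as follows. The file name is invented and chosen so that it does not exist; the typed catch block for ItemNotFoundException is one possible way to separate an expected problem from everything else.

```powershell
# Treat every error as terminating so that catch blocks are reached.
$ErrorActionPreference = 'Stop'

# Hypothetical file that is assumed not to exist.
$StrMissingFile = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'ud_does_not_exist.txt'
$BlnFinished = $false

try
{
    $StrContent = Get-Content -Path $StrMissingFile
    $StrResult = 'read'
}
catch [System.Management.Automation.ItemNotFoundException]
{
    # Expected problem: the file is missing.
    $StrResult = 'missing'
}
catch
{
    # Anything else, such as insufficient privileges.
    $StrResult = 'other: ' + $_.Exception.Message
}
finally
{
    # Runs in every case, for example to close connections.
    $BlnFinished = $true
}

"Result: $StrResult"
```

With the default value Continue, Get-Content would only print an error message here, and the script would carry on with the statement after it as if the file had been read.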

If the application is terminated in response to an error, it is important to assign a value to the exit statement, which the caller can retrieve from the $LastExitCode automatic variable. If the script app is part of a workflow, the workflow can then determine the exit point.
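The following sketch shows the mechanism with a small child script that is written to a temporary folder and then called. The exit code values, the constant names, and the file names are assumptions for illustration only.

```powershell
# Sketch only: exit codes, constant names, and file names are hypothetical.
$StrChildCode = @'
$INTEXITOK = 0
$INTEXITINPUTMISSING = 2

$StrInputFile = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'ud_no_such_input.csv'
if (-not (Test-Path -Path $StrInputFile))
{
    exit $INTEXITINPUTMISSING
}
exit $INTEXITOK
'@

$StrChildScript = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'ud_exitdemo.ps1'
Set-Content -Path $StrChildScript -Value $StrChildCode

# Run the child script; its exit value is then available to the caller.
& $StrChildScript
"Child script ended with exit code $LASTEXITCODE"
```

Defining the codes once, for example in a shared scriptlet, keeps their meaning identical across all script apps in a workflow.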

Finally, some hints as to what should not be in a script:

- PowerShell already offers a self-documenting approach through the consistent verb-noun principle. Don't waste it with misleading aliases or with positional or abbreviated parameters.

- Be careful if you frequently use pipes, because overall processing time suffers. A test with Measure-Command gives you clarity quickly.

- Missing dependency declarations, required host versions, and execution permissions are remedied by #requires (Get-Help about_requires).

- Commented-out code left over from testing is simply confusing. To test different approaches, work with branches and versioning tools.
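A quick comparison with Measure-Command could be set up like this sketch, which doubles a list of numbers once through a pipeline and once with a foreach statement. The size of the list is arbitrary, and the timings depend on the machine and PowerShell version, so treat the result as an indication only.

```powershell
# Arbitrary test data for the comparison.
$ArrNumbers = 1..50000

# Variant 1: pipeline with ForEach-Object.
$ObjPipeTime = Measure-Command { $ArrNumbers | ForEach-Object { $_ * 2 } }

# Variant 2: foreach statement without a pipeline.
$ObjLoopTime = Measure-Command { foreach ($IntNumber in $ArrNumbers) { $IntNumber * 2 } }

'Pipeline: {0:N1} ms' -f $ObjPipeTime.TotalMilliseconds
'foreach:  {0:N1} ms' -f $ObjLoopTime.TotalMilliseconds
```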

Conclusions

Freedom in scripting is both a blessing and a curse. As soon as scripts assume an important function in the company, standardization becomes mandatory. For the IT manager, depending on a single scripter can be nerve-racking, so teamwork should start with the source code. Comprehensive guidance will increase the quality of your PowerShell scripting and future-proof your scripts.