Installing and operating the Graylog SIEM solution

Log Inspector

Linux has long mastered the art of log forwarding and remote logging, which are prerequisites for external log analysis. From the beginning, security was the focus: An attacker who compromises a system most likely would also try to manipulate or delete the syslog files to cover his tracks. However, if the administrator uses a loghost, the files are less likely to fall into the hands of hackers and, thus, can still be analyzed after an attack.

As the number of servers increases, so do the size of logfiles and the risk of overlooking security-relevant entries. Security information and event management (SIEM) products usually determine costs by the size of logs. The Graylog [1] open source alternative discussed in this article processes many log formats; however, if the volume exceeds 5GB per day, license fees kick in.

Why SIEM?

As soon as several servers need to be managed, generating overall statistics or detecting problems that affect multiple servers becomes more and more complex, even if all necessary information is available. Because of the sheer quantity of information from different sources, the admin has to rely on tools that allow all logs to be viewed in real time and help with the evaluation.

SIEM products and services help you detect correlations in a jumble of information by enabling:

- Access to logfiles, even without administrator rights on the production system.

- Accumulation of the logfiles of all computers in one place.

- Analysis of logs with support for correlation analysis.

- Automatic notification for rule violations.

- Reporting on networks, operating systems, databases, and applications.

- Monitoring of user behavior.

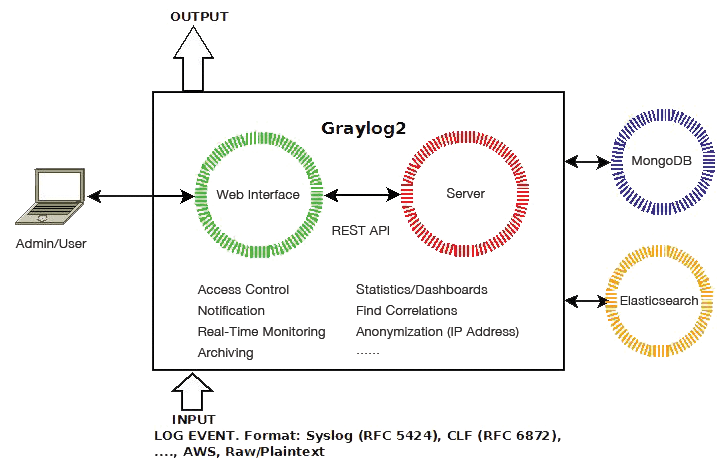

Installing and configuring Graylog is quite easy. The Java application uses resources sparingly and stores metadata in MongoDB and logs in an Elasticsearch cluster. Graylog consists of a server and a web interface that communicate via a REST interface (Figure 1).

Installation

Prerequisites for the installation of Graylog 2.4 – in this example, under CentOS 7 – are Java version 1.8 or higher, Elasticsearch 5.x [2], and MongoDB 3.6 [3]. If not already present, installing Java (as root or using sudo) before Elasticsearch and MongoDB is recommended:

yum install java-1.8.0-openjdk-headless.x86_64

You should remain root or use sudo for the following commands, as well. To install Elasticsearch and MongoDB, create the files elasticsearch.repo and mongodb.repo in /etc/yum.repos.d (Listings 1 and 2); then, install the RPM key and the packages for MongoDB, Elasticsearch, and Graylog (Listing 3) to set up the basic components.

Listing 1: elasticsearch.repo

    [elasticsearch-5.x]
    name=Elasticsearch repository for 5.x packages
    baseurl=https://artifacts.elastic.co/packages/5.x/yum
    gpgcheck=1
    gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
    enabled=1
    autorefresh=1
    type=rpm-md

Listing 2: mongodb.repo

    [mongodb-org-3.0]
    name=MongoDB Repository
    baseurl=http://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/3.0/x86_64/
    gpgcheck=0
    enabled=1

Listing 3: Installing Components

    rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch
    yum install elasticsearch
    yum -y install mongodb-org
    rpm -Uvh https://packages.graylog2.org/repo/packages/graylog-2.4-repository_latest.rpm
    yum install graylog-server

Configuration

The best place to start the configuration is with Elasticsearch. In its configuration file, you specifically need to assign the cluster.name parameter. The only configuration file for Graylog itself is server.conf, which is located in the /etc/graylog/ directory and uses ISO 8859-1/Latin-1 character encoding. This extensive file begins with the definition of the master instance and ends with the encrypted password of the Graylog root user. The most important parameters define email, TLS, and the root password.

To start Graylog at all, the parameters password_secret and root_password_sha2 must be set. The password_secret parameter should be set to a string of at least 64 characters. Graylog uses this string for salting and encoding the password. The pwgen command generates such a string, and sha256sum computes the SHA-256 hash of the root password:

    pwgen -N 1 -s 100
    echo -n <Password> | sha256sum

The resulting hash is then assigned to the parameter root_password_sha2. Table 1 gives a summary of the important parameters.
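
If pwgen is not installed, the same two values can be produced in other ways. The following Python sketch is only an illustration: the helper names are hypothetical, and the 96-character default is an arbitrary choice above the 64-character minimum. It generates a random secret and computes the hex digest that `echo -n <Password> | sha256sum` prints in its first column.

```python
import hashlib
import secrets
import string


def make_password_secret(length: int = 96) -> str:
    """Return a random alphanumeric string for password_secret (hypothetical helper)."""
    if length < 64:
        raise ValueError("password_secret should be at least 64 characters long")
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def hash_root_password(password: str) -> str:
    """Return the SHA-256 hex digest of the password (no trailing newline is hashed)."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # "ChangeMe" is a placeholder, not a recommended password
    print("password_secret =", make_password_secret())
    print("root_password_sha2 =", hash_root_password("ChangeMe"))
```

The secrets module is used instead of random because the secret protects stored passwords and should come from a cryptographically strong source.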

Table 1: Important Configuration Parameters

| Parameter | Description | Remarks |
|---|---|---|
| Important Graylog Parameters | | |
| | Defined master/slave | Must be set; otherwise, Graylog does not start |
| password_secret | String, at least 64 characters long for salting and encrypting password | Must be set; otherwise, Graylog does not start |
| | Login name for admin | Default is |
| root_password_sha2 | Hash of the password as a result of the sha256sum command | Must be set; otherwise, Graylog does not start |
| | Canonical ID for time zone | Very important |
| | Email address of root | |
| | Path to plugin directory | Relative or absolute |
| | | Important |
| | | Important |
| | | Important |
| | | Important |
| | | Important |
| | | Important |
| | | Important |
| Important Elasticsearch Parameters | | |
| | | Default is |
| | | Default is |
| | The appropriate parameter depends on the value of the | Permissible values for |
| Important MongoDB Parameters | | |
| | | Important if replicated |
| | Number of allowed connections | |
| | Multiplier to determine how many threads can wait for a connection | Default is |

Connecting Windows Server

On Windows, unlike Linux, logfiles cannot be forwarded with a simple configuration. Forwarding relies on an agent, and several are available. Graylog itself recommends two: Graylog Sidecar or NXLog (used in this example). The agent reads the logfiles from Windows and applications and forwards them to a defined Graylog port. Agents require their own configuration (discussed later).

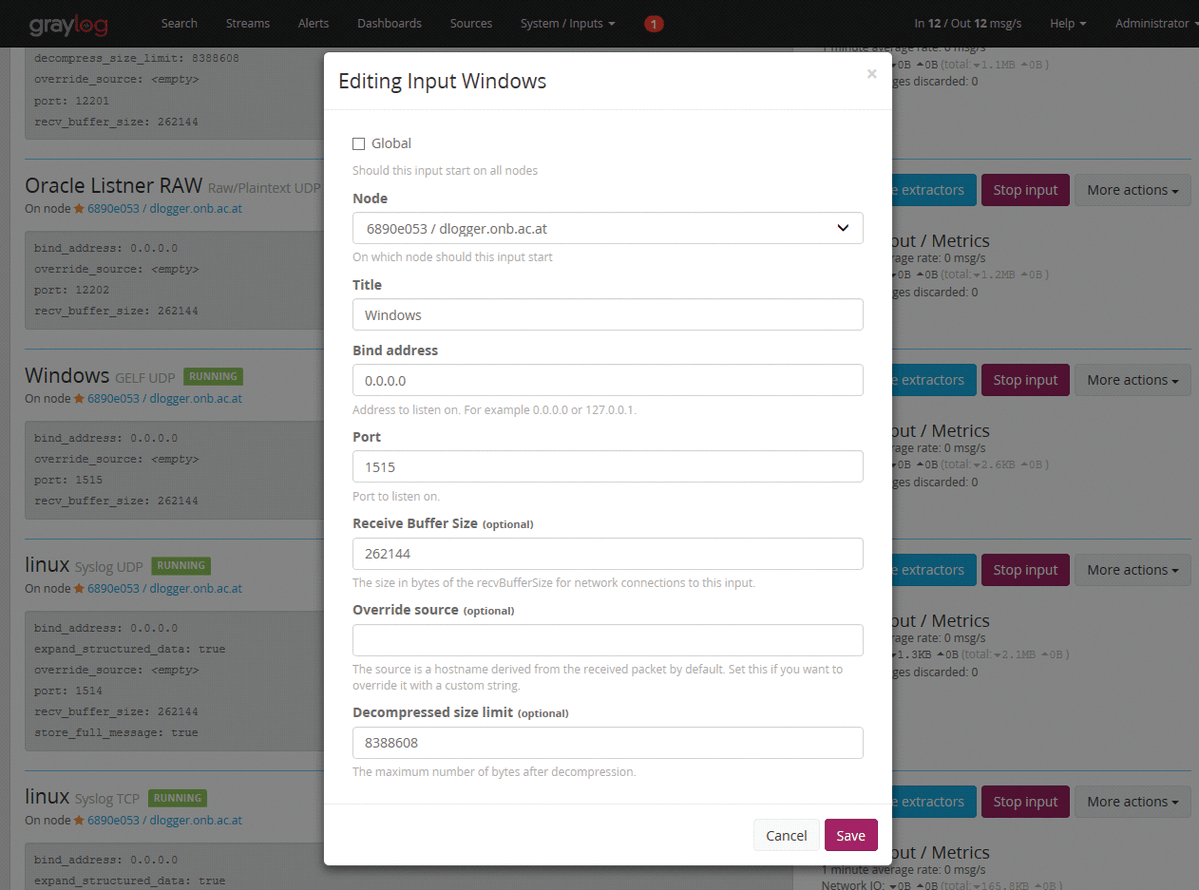

The first step in connecting a Windows server to Graylog is to define an input under System | Inputs, choosing GELF UDP and then Launch new input. The window that then opens has seven self-explanatory fields. In the example for this article, Graylog listens on port 1515 (Figure 2).

The open source NXLog agent supports RFC 3164 standards, log formats from RFC 5424 to 5426, and many other formats, including CSV, GELF, JSON, and XML. The agent reads the logs and sends the data to the Graylog port. The best solution for collecting log data is rsyslogd [4]. Because Windows does not support this, you must tell the agent in which format the data will arrive and in which format they should be forwarded.

Writing the specifications to the NXLog configuration file defines input to and output from NXLog. Prefabricated modules can be used for both, but self-defined input or output formats are also possible. More about that later.

For the NXLog installation, run the downloaded EXE file on the Windows server. Installation under C:\Program Files (x86) creates an nxlog directory and, below it, a conf directory where the configuration file (Listing 4) resides. This file also configures the pm_buffer module, which buffers the data on the hard drive when Graylog is not reachable. Finally, you can start the NXLog service under Windows by typing:

sc start nxlog

Listing 4: NXLog Configuration

    define ROOT C:\Program Files (x86)\nxlog

    Moduledir %ROOT%\modules
    CacheDir %ROOT%\data
    Pidfile %ROOT%\data\nxlog.pid
    SpoolDir %ROOT%\data
    LogFile %ROOT%\data\nxlog.log

    <Extension gelf>
        Module xm_gelf
    </Extension>

    <Input in>
        # Use for Windows Vista/2008 and higher:
        Module im_msvistalog

        # Use for Windows XP/2000/2003:
        # Module im_mseventlog
    </Input>

    <Processor buffer>
        Module pm_buffer
        # 100MB buffer on the hard disk (MaxSize is given in kilobytes)
        MaxSize 102400
        Type Disk
    </Processor>

    <Output out>
        Module om_udp
        Host GraylogServerName
        # Must match the port of the GELF UDP input defined in Graylog
        Port 1515
        OutputType GELF
    </Output>

    <Route 1>
        # The buffer only takes effect if it is part of the route
        Path in => buffer => out
    </Route>

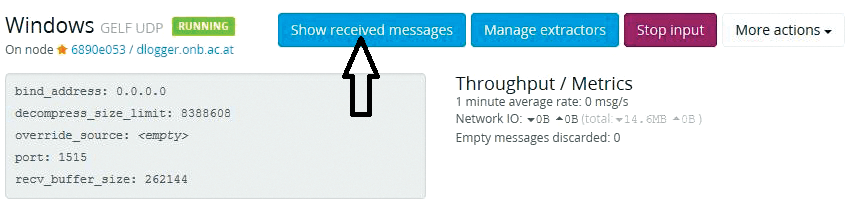

From this point on, you will find the incoming log entries in Graylog under System | Inputs by clicking Show received messages in the Windows section (Figure 3).

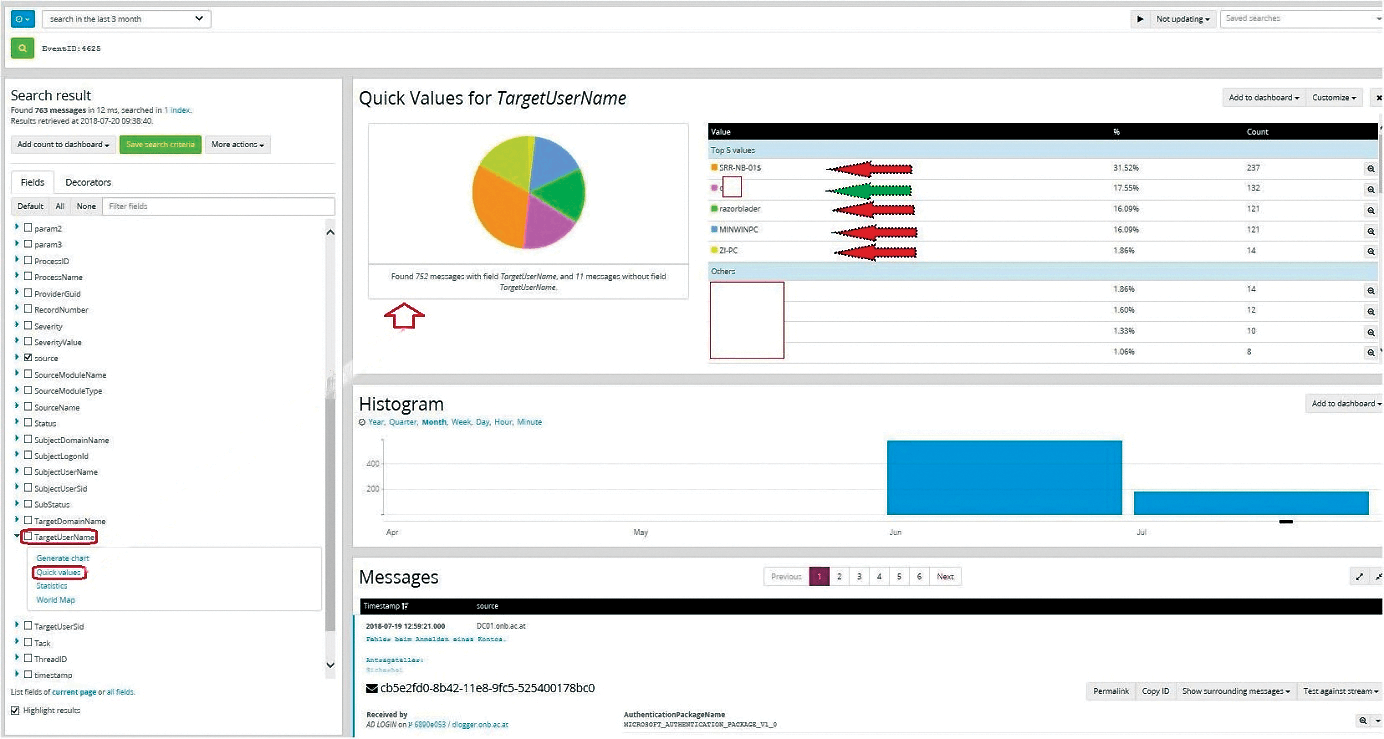

Now it is possible to search for certain events with the Graylog search function. For example, you might be interested in finding failed logins in Active Directory (Figure 4), because these could be indicative of a brute force attack. You will find the EventID for all events on TechNet [5]; for a failed login, EventID is 4625. By searching for this number, you can list all failed login attempts, along with the corresponding information, such as the IP address of the client.

If you need more information in graphical form, open the TargetUserName field and click Quick values. The result is an infographic and list showing the most common logins. Figure 4 shows 237 Active Directory attempts with the name SRR-NB-01$ and 121 Active Directory attempts with the login name razorblader. These usernames are unknown on the system, so they are probably genuine attacks. On the other hand, 132 attempts (green arrow) were made by a registered user.
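
Conceptually, Quick values is a frequency count over one field of the matching messages. The short Python sketch below illustrates that idea outside of Graylog; it does not use the Graylog API, and the function name and sample messages are made up for illustration.

```python
from collections import Counter


def quick_values(messages, field, event_id=4625, top=5):
    """Count the most common values of `field` among messages with the given EventID."""
    counts = Counter(
        msg[field]
        for msg in messages
        if msg.get("EventID") == event_id and field in msg
    )
    return counts.most_common(top)


# Made-up sample data for illustration only
sample = [
    {"EventID": 4625, "TargetUserName": "razorblader"},
    {"EventID": 4625, "TargetUserName": "razorblader"},
    {"EventID": 4625, "TargetUserName": "SRR-NB-01$"},
    {"EventID": 4624, "TargetUserName": "alice"},
]

if __name__ == "__main__":
    for name, count in quick_values(sample, "TargetUserName"):
        print(f"{count:5d}  {name}")
```

A name that dominates such a list without belonging to a known account is the kind of pattern the article describes as a likely attack.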

NXLog for Any Format

In special cases (e.g., logs that do not follow standard rules), the NXLog agent itself might have to monitor a logfile, convert it, and then send it to the Graylog server. The following example uses the im_file module in the <Input in> section, changes the content of the log, and passes everything to the output. The <Output out> section determines whether the result is sent to Graylog via UDP or TCP. The syntax of the configuration is similar to Perl syntax (Listing 5).

Listing 5: NXLog Event Processing

    <Extension gelf>
        Module xm_gelf
    </Extension>

    <Input in>
        Module im_file
        File "C:\\Program Files (x86)\\App\\log\\app.log"

        # If there is a date and time in the logfile, extract it.
        # If the date and time are not available, the system time is used.
        Exec if $raw_event =~ /(\d\d\d\d\-\d\d\-\d\d \d\d:\d\d:\d\d)/ \
                 $EventTime = parsedate($1);

        # Normally the hostname is set by default; to be safe, it can be set explicitly.
        Exec $Hostname = 'myhost';

        # Now the type of message (severity level) is set. The example uses the default syslog values:
        # ALERT: 1, CRITICAL: 2, ERROR: 3, WARNING: 4, NOTICE: 5, INFO: 6, DEBUG: 7
        Exec if $raw_event =~ /ERROR/ $SyslogSeverityValue = 3; \
             else $SyslogSeverityValue = 6;

        # The name of the file is also sent with the message
        Exec $FileName = file_name();

        # The SourceName variable is set by default to 'NXLOG'.
        # To send the application name instead, set it explicitly.
        Exec $SourceName = 'AppName';
    </Input>

    <Output out>
        Module om_udp
        Host GraylogServerName
        Port 12201
        OutputType GELF
    </Output>

    <Route r>
        Path in => out
    </Route>
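
To make the processing steps of Listing 5 more tangible, the following Python sketch applies the same logic to a single log line and builds the kind of GELF message that would reach Graylog. It is an illustration, not a description of how NXLog works internally: the function name and sample line are made up, the additional field names are chosen to mirror the listing, and timestamps in the logfile are assumed to be UTC.

```python
import json
import re
import time
from datetime import datetime, timezone

TIMESTAMP_RE = re.compile(r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})")


def build_gelf(raw_event, host="myhost", source_name="AppName", file_name="app.log"):
    """Mimic the Exec statements of Listing 5 and return a GELF 1.1 dictionary."""
    match = TIMESTAMP_RE.search(raw_event)
    if match:
        # Assumption: the logfile writes its timestamps in UTC
        parsed = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
        timestamp = parsed.replace(tzinfo=timezone.utc).timestamp()
    else:
        timestamp = time.time()  # fall back to the system time

    return {
        "version": "1.1",
        "host": host,
        "short_message": raw_event,
        "timestamp": timestamp,
        "level": 3 if "ERROR" in raw_event else 6,  # syslog severity: error or info
        "_SourceName": source_name,  # additional GELF fields start with an underscore
        "_FileName": file_name,
    }


if __name__ == "__main__":
    line = "2018-03-01 12:00:00 ERROR connection refused"
    print(json.dumps(build_gelf(line)))
```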

Connecting Linux Servers

As already mentioned, Linux has supported log forwarding and remote logging for a long time. The syslogd system daemon receives all messages, sorts them by urgency and source, and archives them in one or more logfiles in the /var/log/ directory.

Syslog only supports UDP. Rsyslog, a later project from 2004, is an extension and uses the Reliable Event Logging Protocol (RELP), which is based on TCP and can therefore also be used with TLS. An important extension of rsyslog over syslog is that it can buffer local messages if the remote server is not ready to receive. The example in this article uses rsyslog.

The main configuration file of rsyslog is usually /etc/rsyslog.conf; the rsyslog.d directory also resides in /etc/. In this directory, you need to create a new file, as shown in Listing 6.

Listing 6: Rsyslog Configuration

    # Either forward everything via UDP ...
    *.* @<host.domain.org>:1516;RSYSLOG_SyslogProtocol23Format
    # ... or forward everything via TCP
    *.* @@<host.domain.org>:1516;RSYSLOG_SyslogProtocol23Format

The expression *.* means "forward everything the syslog daemon processes." The @ character stands for the UDP protocol on the transport route. Note that this protocol is not suitable for encryption. The @@ entry means that TCP must be used for the transport. Finally, RSYSLOG_SyslogProtocol23Format is a built-in template that determines the format of the forwarded messages.
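
The format produced by this template follows the layout later standardized in RFC 5424. As a rough illustration of what such a line looks like on the wire, the Python sketch below builds one by hand; the function names and sample values are hypothetical, and the sketch makes no attempt to cover every detail of the RFC (e.g., structured data is passed through unchanged).

```python
from datetime import datetime, timezone


def syslog_pri(facility: int, severity: int) -> int:
    """PRI value as defined by the syslog RFCs: facility * 8 + severity."""
    if not 0 <= facility <= 23 or not 0 <= severity <= 7:
        raise ValueError("facility must be 0-23 and severity 0-7")
    return facility * 8 + severity


def format_rfc5424(facility, severity, timestamp, hostname, app_name, msg,
                   procid="-", msgid="-", structured_data="-"):
    """Build one RFC 5424 style line; '-' marks an empty (nil) field."""
    pri = syslog_pri(facility, severity)
    return (f"<{pri}>1 {timestamp.isoformat()} {hostname} {app_name} "
            f"{procid} {msgid} {structured_data} {msg}")


if __name__ == "__main__":
    ts = datetime(2018, 3, 1, 12, 0, 0, tzinfo=timezone.utc)
    # Facility 4 (auth), severity 3 (error)
    print(format_rfc5424(4, 3, ts, "web01", "sshd", "Failed password for root"))
```

The severity encoded in the PRI value is the same 0 to 7 scale that Graylog later exposes as the message level.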

Now Graylog should be able to receive messages. Under System | Inputs, select Syslog TCP, click Launch new input, and fill in the form. The most important parameters are shown in Listing 7.

Listing 7: Important Graylog Input Parameters

    allow_override_date: true
    bind_address: 0.0.0.0
    expand_structured_data: true
    force_rdns: false
    max_message_size: 2097152
    override_source: <empty>
    port: 1516
    recv_buffer_size: 1048576
    store_full_message: true
    tcp_keepalive: true
    tls_cert_file: /path...
    tls_client_auth: disabled
    tls_client_auth_cert_file: <empty>
    tls_enable: true
    tls_key_file: /path...
    tls_key_password: ********
    use_null_delimiter: false

Annoying the Man in the Middle

If a server or device is located outside the internal network, encrypted communication is a must-have. Graylog, rsyslog, and NXLog all support encrypted communication. On Graylog, you have to set the tls_enable parameter to true and fill in the tls_cert_file and tls_key_file parameters accordingly.

On Linux, you will want to choose the TCP protocol (@@) and set all the necessary parameters important for encryption. Parameter order is not arbitrary. The configuration file for sending is shown in Listing 8.

Listing 8: Sender-Side Configuration

    $DefaultNetstreamDriver gtls
    $DefaultNetstreamDriverCAFile </Path>/cert.pem
    $ActionSendStreamDriver gtls           # Use gtls netstream driver
    $ActionSendStreamDriverMode 1          # Require TLS
    $ActionSendStreamDriverAuthMode anon   # Client authentication is not necessary
    *.* @@host.domain.ac.at:1516;RSYSLOG_SyslogProtocol23Format

Note that the send stream driver gtls is included in the rsyslog-gnutls package. Under Windows with NXLog, a few lines are also needed in the config file for secure transmission. The om_ssl module must be defined in the output tag, and the path to the CA file must be specified (Listing 9).

Listing 9: Windows SSL Communication

    <Output out>
        Module om_ssl
        Host GraylogServerName
        Port 1516
        CAFile %CERTDIR%/filename.crt
        AllowUntrusted FALSE
    </Output>

Apache Anonymously On Board

Many applications create logfiles independent of rsyslog. The integration of most application logs of this type into rsyslog is basically possible but requires extensive configuration on both sides and knowledge of how to send the log to rsyslog within the specific application.

Graylog solves this problem with just a few steps, now demonstrated with the Apache log. Set up a GELF TCP input in Graylog; then, configure Apache on the source server by defining a log format and forwarding it with Netcat.

The European Union (EU) General Data Protection Regulation (GDPR) does not allow companies to store the IP addresses of visitors from the EU to a website without their consent or without "legitimate interest." Because SIEM archives log data, it is advisable to anonymize the IP addresses from the outset.

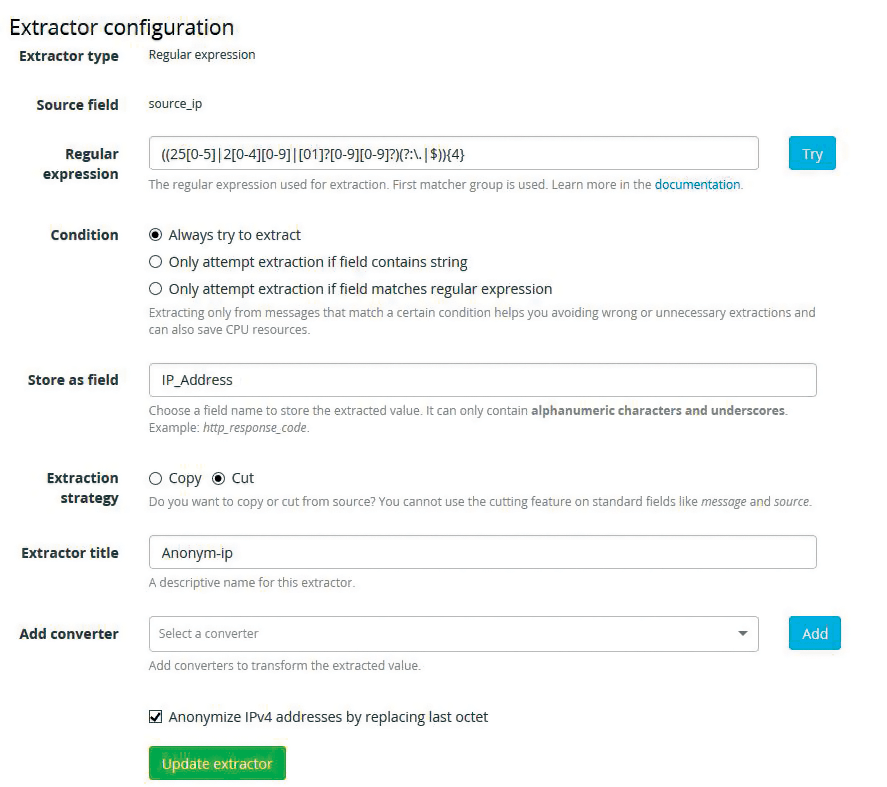

In Graylog, you can anonymize IP addresses with an extractor: Under System | Inputs, select Manage extractors | Add extractor | Get started | Load Message for the input, click the field containing the IP address, and choose Regular expression as the extractor type. In the form that opens for the field (source_ip in this case), insert the values shown in Table 2 and Figure 5. The regular expression shown searches for IP addresses.

Table 2: Source IP Extractor Config

| Parameter | Value |
|---|---|
| Regular expression (searches for IP address) | `((25[0-5]\|2[0-4][0-9]\|[01]?[0-9][0-9]?)(?:\.\|$)){4}` |
| Condition | Always try to extract |
| Store as field | IP_Address |
| Extraction strategy | Cut |
| Extractor title | Anonym-ip |
| Add converter | <empty> |
| Anonymize IPv4 addresses by replacing last octet | Check |
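
The effect of replacing the last octet can be tried out independently of Graylog. The Python sketch below is an illustration under stated assumptions: it uses the same octet pattern as Table 2, but the function name, the sample log line, and the xxx placeholder are arbitrary choices and not taken from Graylog itself.

```python
import re

# One octet: 0-255, same alternatives as the expression in Table 2
OCTET = r"(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)"
# Capture the first three octets so that only the last one is replaced
IPV4_RE = re.compile(rf"\b({OCTET}\.{OCTET}\.{OCTET})\.{OCTET}\b")


def anonymize_ipv4(text: str, placeholder: str = "xxx") -> str:
    """Replace the last octet of every IPv4 address found in the text."""
    return IPV4_RE.sub(lambda m: f"{m.group(1)}.{placeholder}", text)


if __name__ == "__main__":
    line = '192.168.10.57 - - "GET /index.html HTTP/1.1" 200'
    print(anonymize_ipv4(line))
```

Keeping the first three octets preserves enough information for coarse analysis (e.g., by network) while the individual visitor can no longer be identified from the stored address alone.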

Your Own Agent

Some applications (e.g., listener.log or alert.log from Oracle) generate very peculiar logfiles that lack information like the hostname and a message. A self-written script (Listing 10) that adds these fields before sending prevents misunderstandings between the sender and receiver. The script reads the original logfile and forwards the content.

Listing 10: Editing Oracle Logs

    #!/bin/bash
    #set -x

    # Local file that collects the enriched log lines
    file=/tmp/listner.log
    if [ ! -e "$file" ]; then
      touch "$file"
    fi

    # Follow the Oracle listener log and add host and message fields to every new line
    tail -n 0 -F /db/oraclese/product/diag/tnslsnr/pics-db11/listener/trace/listener.log | while IFS= read -r LINE
    do
      echo "\"host:\" \"picsdb\", \"message:\" \"$LINE\"" >> "$file"

      # Send an email if the line could not be written
      if [ $? -ne 0 ]
      then
        echo -e "$LINE ... \n found on $HOSTNAME" | mail -s "Something's wrong on $(hostname)" bf@onb.ac.at
      fi
    done &

    # Forward the enriched file to Graylog
    tail -f "$file" | nc -u dlogger.onb.ac.at 12202

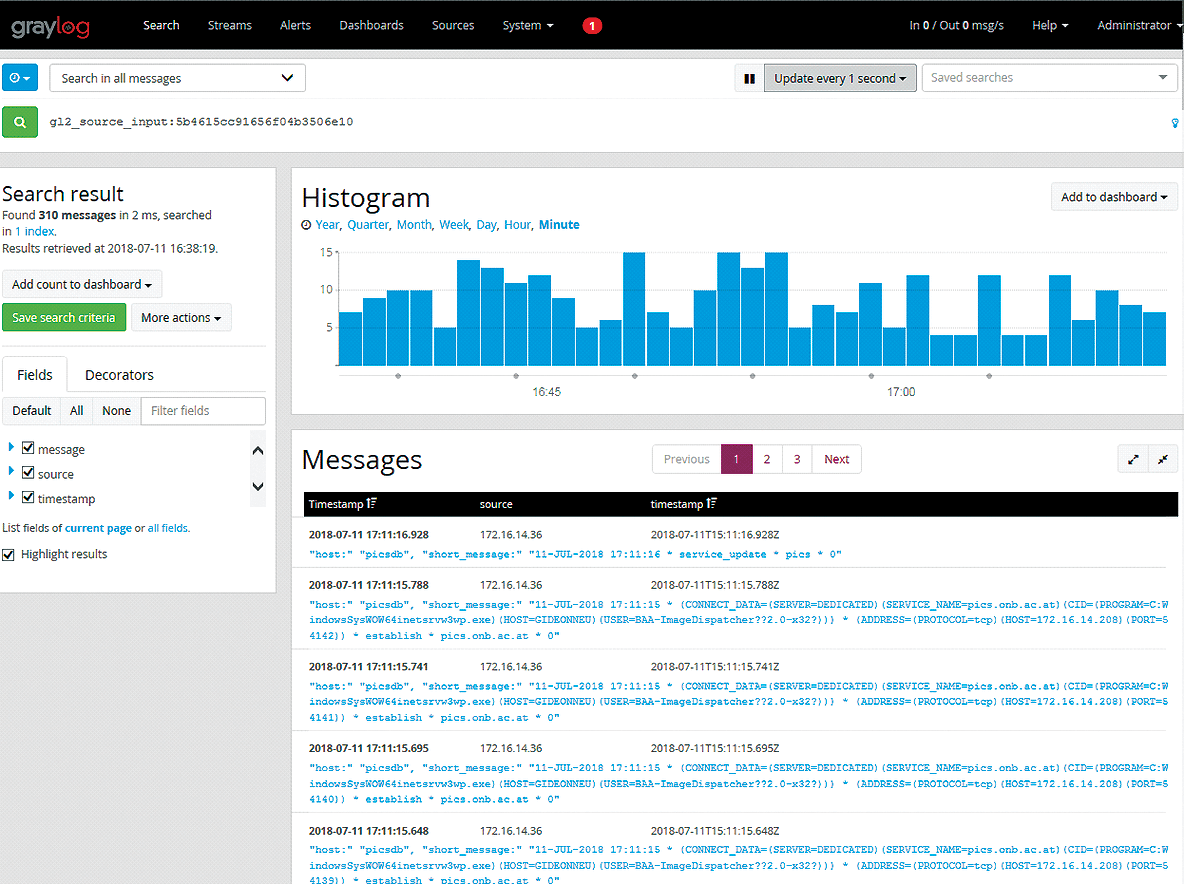

On the Graylog side, with only one GELF TCP input to implement, you already see the log entries (Figure 6). By setting up an alert, you can send notifications when Graylog receives error messages (usually starting with the string ORA).

Correlation

One of the most important SIEM tasks is correlation. To this end, fields must be structured and named uniformly. HTTP codes, for example, have different names on different systems (e.g., http_response_code on one system and status_code on another). Graylog has an important tool that unifies field names. With the extractor under System | Inputs | Manage extractors, the field names can be converted to uniform names.
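
The principle behind unifying field names is a simple mapping from system-specific names to one canonical name. The following Python sketch shows that idea in isolation; it is not Graylog's extractor mechanism, and the alias table and function name are assumptions made for illustration, using the two field names from the example above.

```python
# Map system-specific field names to the canonical name used for correlation
FIELD_ALIASES = {
    "status_code": "http_response_code",
}


def normalize_fields(message, aliases=FIELD_ALIASES):
    """Return a copy of the message with alias field names replaced by canonical names."""
    return {aliases.get(key, key): value for key, value in message.items()}


if __name__ == "__main__":
    from_system_a = {"source": "web01", "http_response_code": 500}
    from_system_b = {"source": "web02", "status_code": 500}
    print(normalize_fields(from_system_a))
    print(normalize_fields(from_system_b))
```

After normalization, a single search on http_response_code covers both systems.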

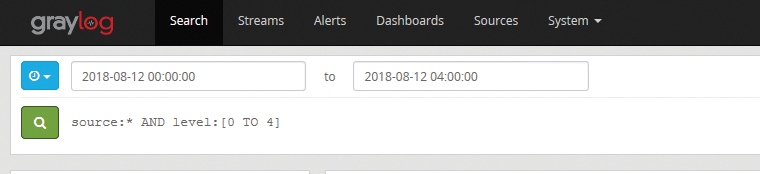

Equally important is that the dates and times of log entries are the same for all computers, so you can find all error messages across the entire enterprise system that have occurred within a certain period of time. The extractor described above also helps here, because it can convert the date and time information extracted from the system computers to a uniform timestamp format. Figure 7 shows how easy it is to find errors retroactively from a certain time span for the entire enterprise system.

In Figure 7, the source field is linked to a wildcard and combined with message levels 0 to 4. On Linux, the levels are numbered from 0 to 7, where 0 means Emergency, 1 is Alert, 2 is Critical, 3 is Error, …, and 7 is Debug. Windows organizes its levels differently; Graylog therefore stores the level that corresponds to the Linux numbering in the severity level field.

Alerts

SIEM places much value on security. Graylog allows you to correlate data from different sources to find the proverbial needle in the haystack. If a specific constellation recurs within a specified period of time, Graylog triggers an alert, which in turn enables administrators to react promptly.

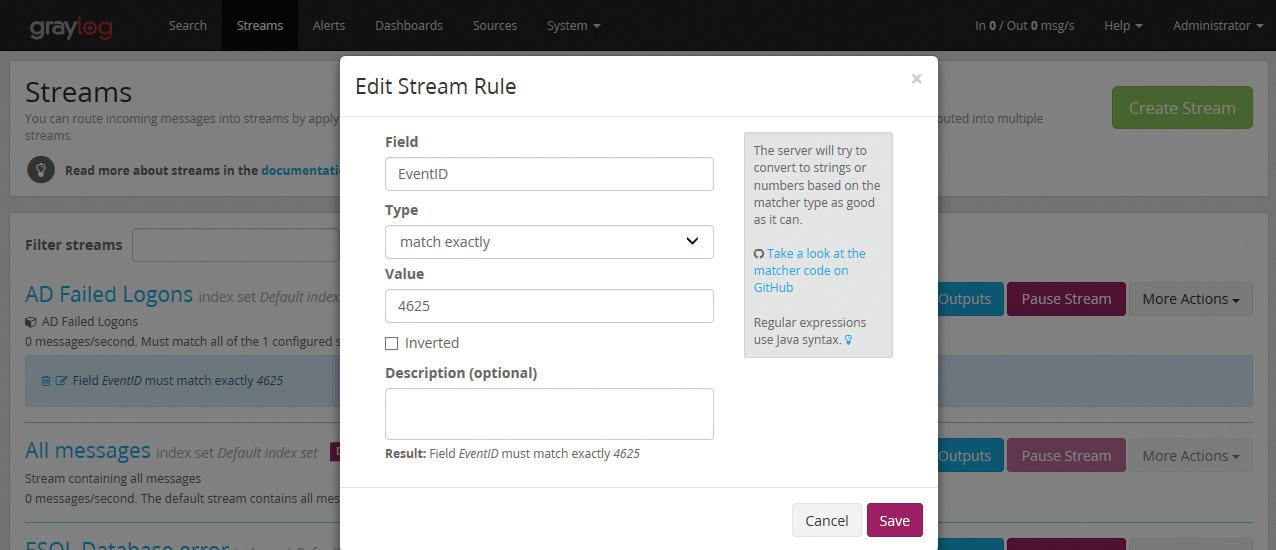

Graylog alerts are based on streams. By default, a stream named All messages, which does not support any rules, takes in all messages. A new rule creates a new stream. The Active Directory example earlier in the article created a stream with the rule (Figure 8) "search all messages with the field name EventID that contain the value 4625."

An alert can be set up for this stream. Selecting Alerts | Manage conditions | Add new condition takes you to a form where you can define the stream and the conditions for the alert. In this example, choose the AD Failed Logons stream and select Message count condition from among the three types of conditions:

- Message count condition: The alert is triggered if the selected stream received x messages in the last y minutes (e.g., very good at detecting brute force attacks).

- Field aggregation condition: The alert is triggered when a numeric field in a stream reaches a minimum or maximum threshold (e.g., suitable for determining whether the response time of a particular application has exceeded a maximum value).

- Field content condition: The alert is triggered if a field contains a certain value (e.g., Unknown source, which means that an untrusted source installed a program).

Clicking on Add alert condition opens another form in which the values of the parameters in Table 3 can be entered.

Table 3: Configuring Alerts

| Parameter | Value | Remarks |
|---|---|---|
| Title | Failed Login AD | |
| Time Range | 1 | Evaluate all incoming messages every xth minute |
| Threshold Type | More than | Threshold types are more than or less than |
| Threshold | 5 | Number of messages fulfilling the condition |
| Grace Period | 1 | Number of minutes after which the condition should become active again |
| Message Backlog | 1 | Number of messages to be attached to the alert |
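
How the values in Table 3 interact is easiest to see in a small model. The Python sketch below is one possible reading of a message count condition with a grace period, not Graylog's actual implementation; the function names and timestamps are invented for illustration, and the defaults correspond to the values in the table.

```python
from datetime import datetime, timedelta


def message_count_exceeded(timestamps, now, time_range_min=1, threshold=5):
    """True if more than `threshold` messages arrived within the last `time_range_min` minutes."""
    window_start = now - timedelta(minutes=time_range_min)
    recent = [ts for ts in timestamps if window_start < ts <= now]
    return len(recent) > threshold


def should_notify(condition_met, last_alert, now, grace_min=1):
    """Suppress a new alert while the grace period after the previous one is running."""
    if not condition_met:
        return False
    return last_alert is None or now - last_alert >= timedelta(minutes=grace_min)


if __name__ == "__main__":
    now = datetime(2018, 3, 1, 12, 0, 0)
    # Six failed logins within the last minute (made-up timestamps)
    failed = [now - timedelta(seconds=5 * i) for i in range(6)]
    met = message_count_exceeded(failed, now)
    print("condition met:", met)
    print("notify:", should_notify(met, last_alert=None, now=now))
```

With a threshold type of More than and a threshold of 5, the sixth failed login inside the time range is the one that triggers the alert.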

After defining all conditions for an alert, you can start setting up a notification. Under Alerts | Manage notifications | Add new notification, you can specify the stream in question and determine who should be notified in case of a problem. You can choose between an HTTP and an Email alert notification. The recipient of the message can be either a registered Graylog user or any email address entered in the form.

Conclusions

Central log management is indispensable in a modern IT landscape. On the one hand, it removes the need for administrators to perform manual checks; on the other hand, it increases the rate of error detection and improves security. SIEM systems systematically help detect anomalies or attacks and respond appropriately. They are thus the next generation of logging and are suitable for countering the increasing complexity of programs and attacks.

SIEM is additionally important because it has real-time monitoring capabilities and immediate notification of rule violations, as well as long-term archiving for analysis and reporting.