Workflow-based data analysis with KNIME

Analyze This!

Data analysts like to use flexible scripting languages such as R or Python that come with large ecosystems of libraries and extensions. But many users don't want to have to write and debug their own custom programs just to analyze data.

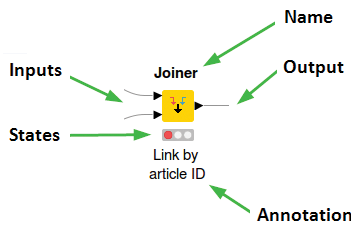

Visual workflows offer a different approach. You can use visual workflows to break down the analysis processes into modular, sequential steps. Each step is symbolized by a graphic element called a node. Each node performs an action, which might be a calculation, a formatting function, or another step related to data analysis and manipulation. By linking the nodes on the screen, users can create workflows for complex investigations of the data – without producing any code.

Visual workflows are the central element of the KNIME Analytics Platform. In the KNIME environment, a workflow is a graph with nodes showing a series of sequential steps for processing and analyzing the data. The user defines a pathway for the data by connecting the output of one node to the input of another node. A type system ensures that you can only connect compatible output and input. Real programming code is only necessary if you want to integrate KNIME with R or Python – or if you want to develop your own modules.

KNIME, which is pronounced "nime," was originally known as the Konstanz Information Miner; it began in 2006 at the Department of Bioinformatics and Data Mining at the University of Konstanz under the direction of Prof. Michael Berthold. The basic idea was to make data analysis easily accessible and affordable for users from different disciplines. The developers, therefore, turned their creation into an open source project, paying particular attention to making the tool extensible and user-friendly.

KNIME Analytics Platform is written in Java and is based on Eclipse and Open Services Gateway Initiative (OSGI) technology. The latest version (version 3.7) is available at the project website [1], where you will also find other introductory materials, including blogs, videos, and sample workflows.

The KNIME Analytics Platform is open source; anyone can download and use it free of charge. Start the installer or unpack the archive, and KNIME is ready to go. However, you might wish to add some KNIME extensions, which are the source for many additional nodes. To add an extension, go to File | Install KNIME Extensions, check the list for the desired extension, and follow the instructions. Check out the documentation [2] and node guide [3] at the KNIME website for more on working with KNIME, or bring your questions to the KNIME forum [4].

Looking Around

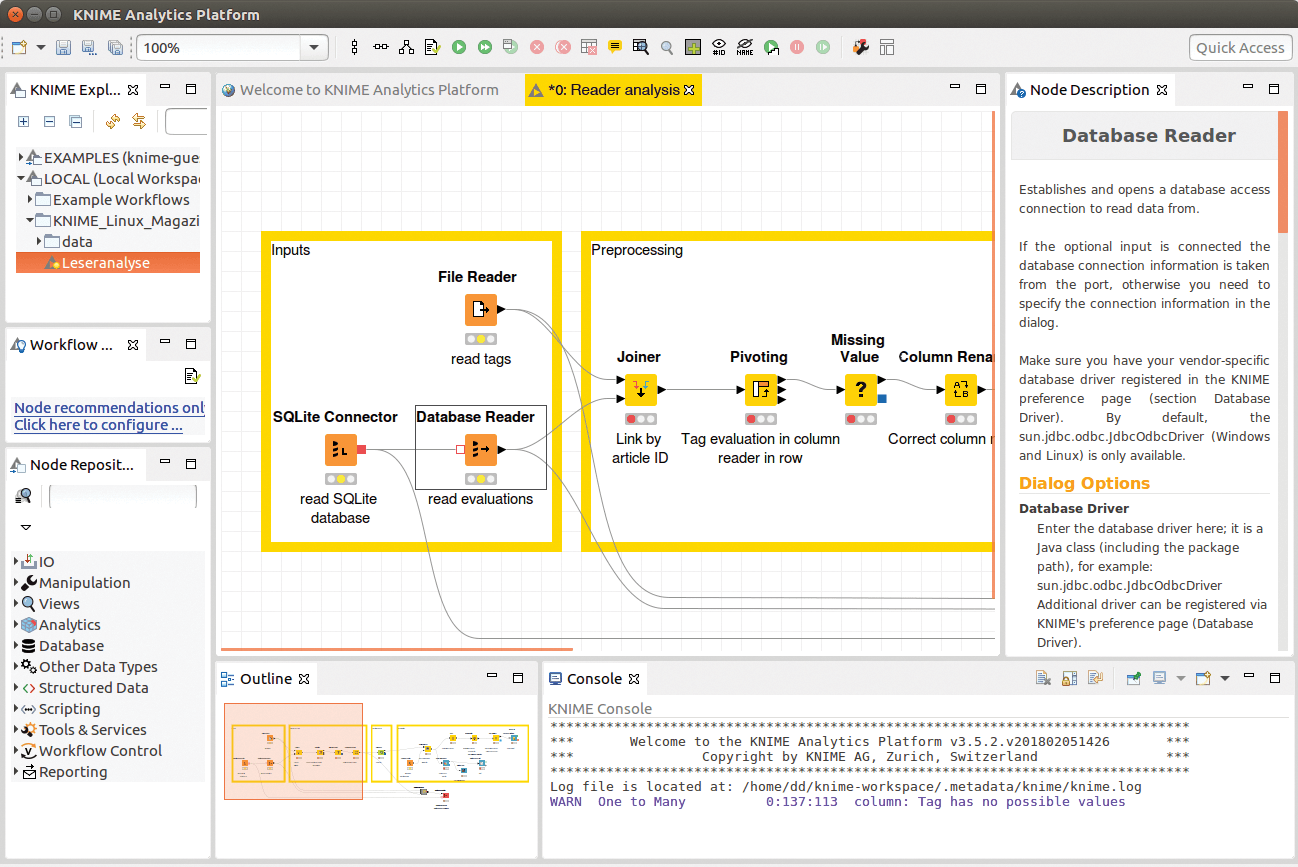

Figure 1 shows the KNIME user interface. The KNIME Explorer in the top-left corner of the workspace provides an overview of the workflows. Click the View menu and select Workflow Coach to access the Workflow Coach, which offers suggestions on building a workflow. The Node Repository (bottom left) lists the nodes of all installed extensions.

The nodes are the building blocks of any KNIME workflow, and the vast library of available nodes gives KNIME its versatility and power. KNIME nodes perform tasks such as:

- data access

- data manipulation

- visualization

- analytics

- reporting

- flow control

- scripting

- big data

The node description in the window on the right of the user interface gives the user the necessary documentation on the function and use of a node. In the middle is the workflow editor, where users string the nodes together to develop the actual workflow. During the course of developing a workflow, the user connects, configures, and executes the KNIME nodes individually and collectively (Figure 2).

Once a node has been executed, which is indicated by a green traffic light symbol below the node, you can display the resulting data as a table, bar chart, or other format. If the traffic light symbol is red, the node is not yet configured. Yellow means the node is ready for execution.

Nodes can have between zero and an arbitrary number of inputs and outputs (ports). The ports' shape and color indicate what kind of data the node needs or outputs at a particular input or output (a black triangle indicates a table).

Sample Scenario

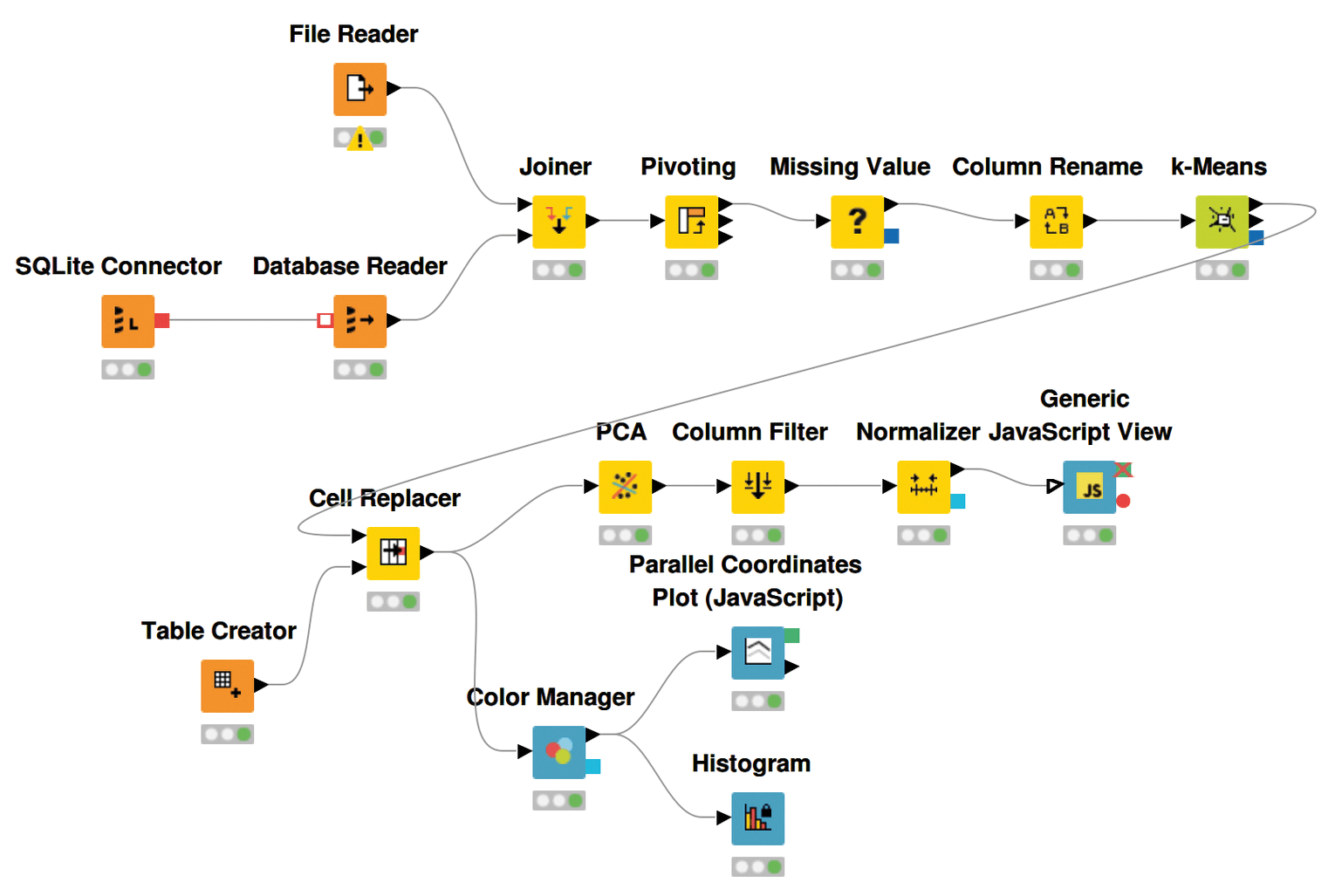

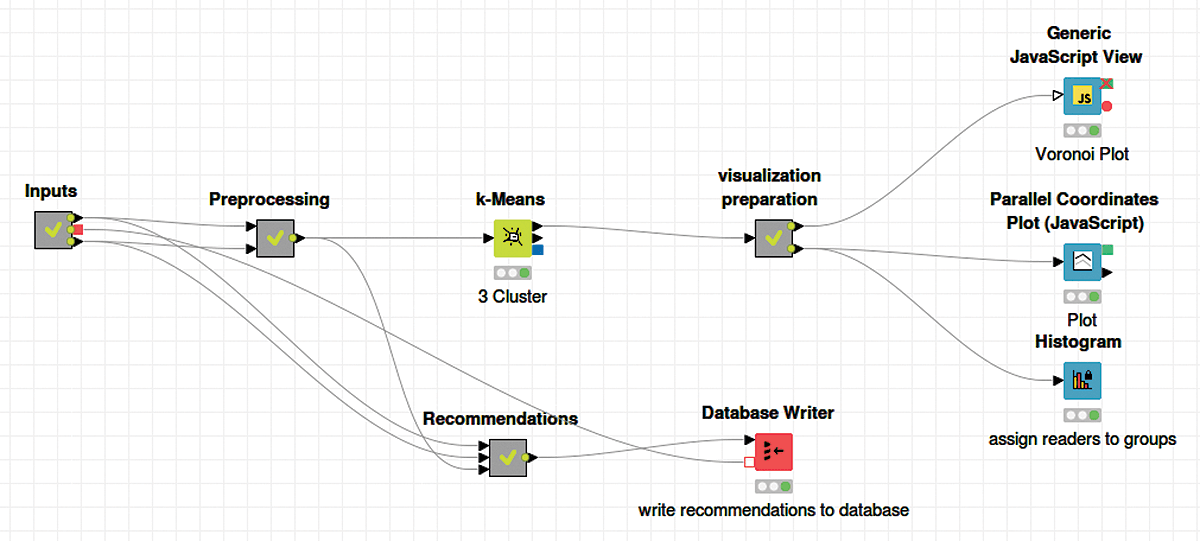

The best way to get to know KNIME is to work through an example workflow (Figure 3). The editors of a fictitious online magazine would like to know more about their readers' preferences. They have gathered some data from a rating system that gives readers the opportunity to rate magazine articles with up to five stars. Each article is also classified in at least one of the five categories: Hardware, Software, Development, Security, and Internet.

The editors hope to draw conclusions from the ratings on reader preferences and to identify groups of readers with common interests. They then want to suggest further articles to each reader that match their interests.

The starting points for the analysis are a SQLite database with the article ratings (Table 1) and a CSV file with the classification of the categories for the articles. Both data sources are therefore available in a table format.

Tabelle 1: SQLite Database Evaluation

|

Reader ID |

Article ID |

Evaluation |

|---|---|---|

|

Reader 1 |

Article 11 |

1 |

|

Reader 93 |

Article 31 |

3 |

|

Reader 45 |

Article 3 |

4 |

|

… |

||

Loading the Data

The first step of any data analysis is loading the required data (the red nodes in Figure 3). For KNIME, the data can come from a variety of sources, such as text files, documents, databases, or web services.

Once loaded in KNIME, it doesn't matter where the data came from, because the software always converts the data into an internal format. In this case, the data needs to be loaded from an SQLite database and a CSV file. To help you access databases, KNIME has several nodes to assist with data input, including the Database Reader node, which delivers the results of an SQL query as a KNIME table.

The node works with different database types and receives the connection information via its inbox. You can prefix an SQLite connector, which is configured with the connection information and forwards it to other nodes.

The easiest way for KNIME to load text files is to use the File Reader. This node automatically attempts to guess the file format, including column separators and row length, and displays a preview of the table in its configuration dialog.

Opening a Connection

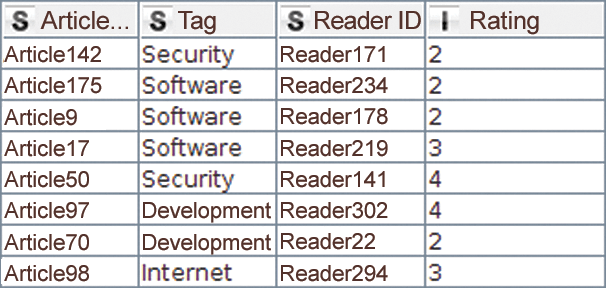

The next step is to relate the imported data to each other. If you are familiar with databases, you know that the best way to do this is with a join. A join consists of one or more predicates that determine which rows belong together in two tables. The rows that belong together are then combined in an output table.

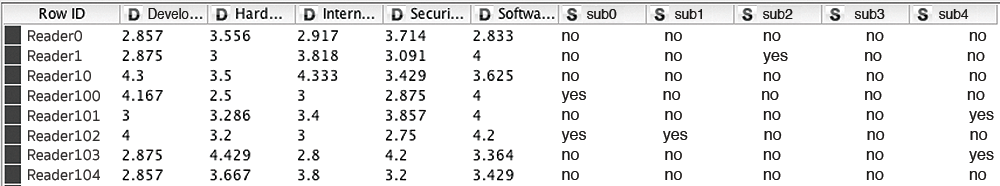

This example needs to combine the two tables based on the article ID. In this case, the join predicate determines that ratings for an article must be linked to the categories to which the same article is assigned. The node that KNIME uses for this is Joiner. Its output table is shown in Figure 4. Like its database counterpart, Joiner supports various modes, including inner, outer, left, and right joins. The user can also select which columns are to be copied from which table into the output.

Pivoting

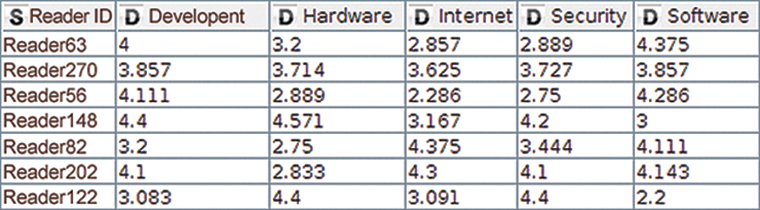

The next step is to create a kind of fingerprint for each reader, describing the reader's preferences by calculating the reader's average rating for the articles in each category.

The result is a table in which each reader becomes a row and each category becomes a column. The cells of the intersection points display the reader's average rating for articles in each category (Figure 5).

This transformation is handled by the Pivoting node. The Pivoting node dialog may prove intimidating at first glance, since it requires information on the group, pivot, and aggregation columns in three separate tabs. The values in the group columns determine which rows need to be combined into a single row – that is, all those that have the same values in these columns. For the sample workflow, the reader ID is a group column, the category is the pivot column, and the aggregation column contains the ratings that an average function averages.

If a reader has not rated a single article for a category, the cell in the pivotized table does not contain a value. KNIME indicates this with a red question mark. You can replace missing values using the Missing Value node. In the example, it makes sense to assume that the reader does not like a category in which they have never rated an article. Instead of the missing value, the table will use a zero.

It is often the case that a node produces a table with long or incomprehensible column names. For example, the Pivoting node from the previous category creates columns in the format Category + Rating. The suffix, which is the same in each column, is not important. Two important KNIME nodes assist with renaming columns. Column Rename lets you rename each column name explicitly; Column Rename (Regex) lets you change the column names using regular expressions.

Cluster Analysis

Now that the data is transformed into a workable format, you can begin to group readers according to their preferences. Readers with similar preferences should end up in the same group. Cluster analysis is a useful technique for this kind of problem, and KNIME has different nodes to support it, including k-means, fuzzy c-means, hierarchical agglomerative clustering, or k-medoids.

What all these cluster algorithms have in common is that users have to decide for themselves how many groups they want to receive. They also all need a way to calculate the similarity between two datapoints. In the previous category, a table row was created for each reader, containing five numeric values – one for each category.

The workflow for the example uses the k-Means node, which is quite fast and generally produces good results. The algorithm works with different distance measurements – in KNIME it is implemented with the Euclidean distance (see the "Computing the Distance" box). The k-Means node in KNIME, which executes the algorithm, appends another column to the input table that remembers the group in which the datapoint lies.

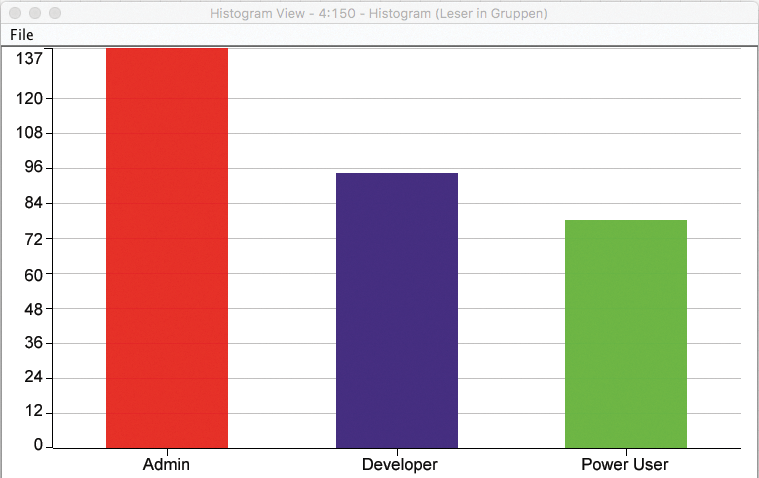

Cluster analysis reveals that readers who give a high rating to articles in the categories Hardware and Security usually lie in cluster 0, while readers from cluster 1 prefer the categories Internet and Software, and cluster 2 prefers Development and Software. Once you know the results, it is possible to give the clusters meaningful names. Due to their preferences, for example, readers in Cluster 0 can be addressed as administrators, those in Cluster 1 as power users, and those in Cluster 2 as developers. These new names are added using the Cell Replacer node.

Visualization: Histogram

A histogram, provided by the Histogram node, provides an overview of how many readers lie in which group. The Histogram node can be connected directly to the Cell Replacer from the previous step, but the whole thing looks a bit colorless. The Color Manager node lets you assign a color – based on the value of a column – to the rows of a table. If the same column is also used for the x-axis of the histogram, KNIME automatically takes over the color for the bars (Figure 6).

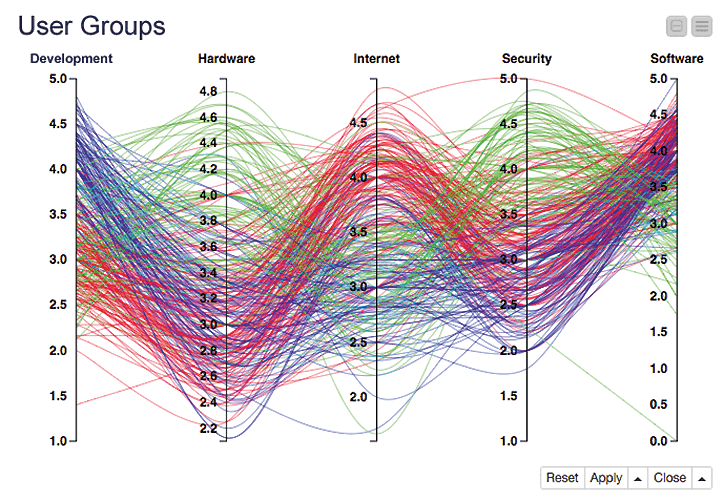

Visualizing Multiple Dimensions

Visualizations are available to display data and also show whether the discovered clusters have any significance. In the past years, many visualization methods were implemented in KNIME with the help of JavaScript and the D3.js framework [5].

These visualizations are available in the KNIME JavaScript Views extension, which is where you will also find the Parallel Coordinates visualization. Parallel Coordinates represents the properties of the data with parallel y-axes (Figure 7).

D3.js, the JavaScript library on which most KNIME JavaScript visualizations are based, is one of the most widely used libraries for creating interactive data visualizations in the browser. However, the KNIME user can only use some of its capabilities.

For all cases that KNIME does not yet cover, use the Generic JavaScript View. Configuring this node means you can enter arbitrary JavaScript and CSS code to compute a colorful image from a table. The code executed by the node has access to the node table and the browser Document Object Model (DOM) and can generate HTML and SVG elements based on the data.

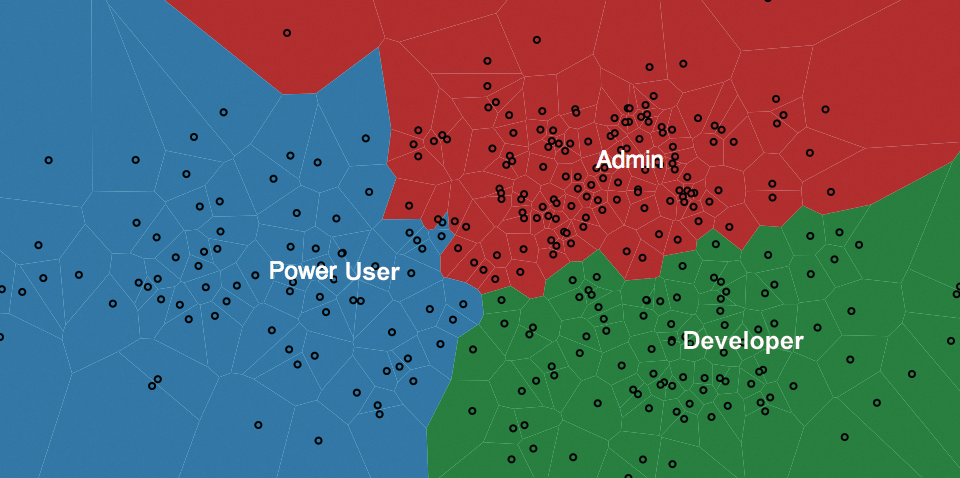

For example, you can use the Generic JavaScript View to create a Voronoi diagram (Figure 8), which visualizes the clustered reader groups in 2D. To ensure that the data is in a format suitable for visualization, you must first reduce the number of dimensions. Up to now, the example has used five dimensions per reader (one for each section), but can be broken down using the principal component analysis as calculated by the PCA (Principal Component Analysis) node. This type of transformation reduces the dimensionality, but at the same time tries to keep the variance in the data, so that as little information as possible is lost.

By this point, a proper data analysis workflow has already been created: from importing the data, through transformation and grouping, to visualizing the results. All this helps to gain interesting insights into the raw data. In a further step, it is now possible to identify for each reader the articles that a reader has not yet read, but which could also be interesting for the reader because of their preferences. A web application can then suggest these articles to the reader.

Keeping Track

With each further step, the workflow threatens to become more complex and confusing. To ensure that it remains comprehensible, it is a good idea to encapsulate individual parts in modules to conceal the complexity. This encapsulation is made possible using so-called meta nodes. Meta nodes also let you group the sections of the workflow using meaningful names. Figure 9 shows a possible restructuring of the workflow using meta nodes.

Recommended Reading

The point of the exercise is to recommend articles that are closest to the reader's preferences. For example, if the reader is particularly interested in hardware and security, it would be a good idea to suggest articles from these categories – or even articles that are in both categories at the same time. The current workflow has already explored the extent to which a certain reader has a preference for each category, and the result is available in the form of a vector (Figure 5).

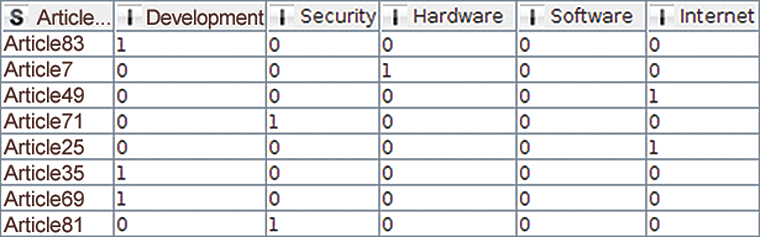

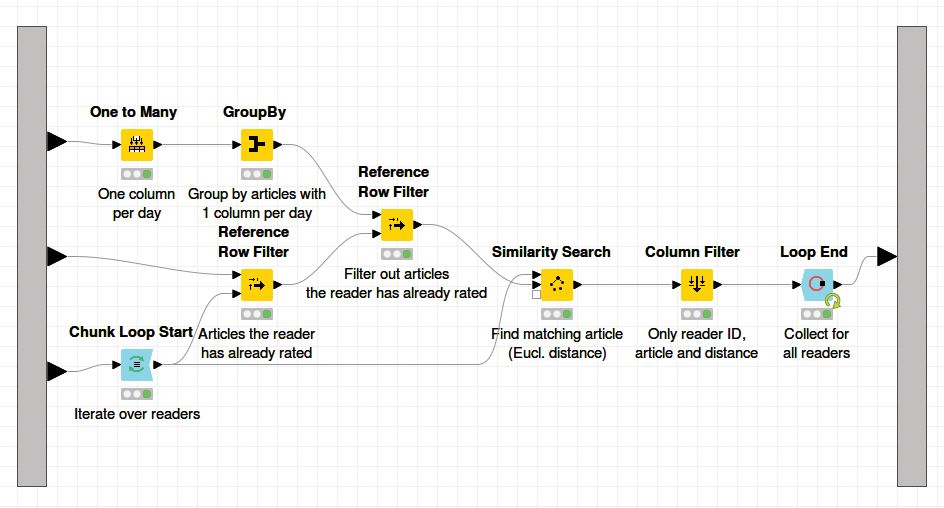

You can create a very similar vector for each article, where the columns of those categories contain a 1, to which the article is assigned. Category columns without connection to the article, on the other hand, contain a 0. The One to Many node makes this possible: The transformation of the article-heading assignments (Table 2) into a representation by a binary vector per article (Figure 10).

Tabelle 2: Categories Table

|

Article ID |

Category |

|---|---|

|

Article 11 |

Hardware |

|

Article 11 |

Software |

|

Article 31 |

Development |

|

… |

|

Once the two vector types have been created, the Similarity Search node can simply determine a distance between the vector of a reader (which represents its preferences) and the vector of an article (which indicates to which categories it is assigned). The smaller the distance, the more the reader's preference corresponds to the categories in which the article is classified.

From all articles that a certain reader has not yet read (see Row Reference Filter node), it is now easy to determine the article that has the shortest distance to the reader's preferences. This article is finally recommended for reading.

A loop (consisting of the Chunk Loop Start and Loop End nodes) corresponds to a For loop across all rows of the table. The loop determines the smallest distance of the vectors for each reader. The overall result with one article recommendation per reader (Figure 11) could then be written back to a database with the help of the Database Writer node.

Another Example: Machine Learning

Automatic detection of patterns in large datasets is one of KNIME's prime objectives. Machine learning has hit the headlines mainly in connection with autonomous cars, along with the dubious machinations of large corporations that are eager to collect data, but artificial intelligence and machine learning can generate added value in many areas because their algorithms identify structure in apparently random data.

From the very beginning, the developers of KNIME attached great importance to the integration of the latest machine learning algorithms, starting with support vector machines and simple neural networks. Today, KNIME has also mastered newer methods, such as random forests and deep learning.

The many native KNIME machine learning nodes can also be combined easily with numerous other machine learning tools that are also integrated with KNIME, including scikit-learn [6], R algorithms [7], H2O [8], Weka [9], LIBSVM [10], and the deep learning frameworks Keras [11], TensorFlow [12], and DL4J [13].

Supported by the various possibilities to import, export, visualize, and manipulate data, KNIME offers a platform that maps the entire analysis process as a graphical workflow.

Extended Scenario

The online fictional publisher also offers newsletters on five different topics. For those readers that have registered, the aim is to make these newsletters more appealing to potentially interested readers. Instead of flooding every reader with the newsletters on all five subjects, the publisher wants to move forward in a slightly more intelligent way.

On the basis of known reader preferences and experience with previous subscribers, newsletter recommendations are only to be sent to readers who might genuinely be interested in one of the five topics. Not only does this plan save in terms of the volume of mail to be sent, it also avoids annoying readers who are completely uninterested in some or all topics on offer and who might even reject all of the publisher's offerings as a result.

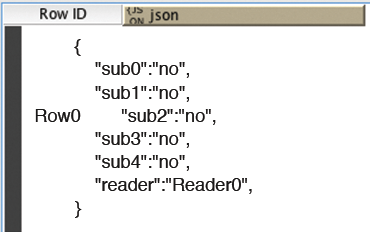

Known preferences for certain topics, as determined from reader evaluations of journal articles, serve as the data basis (refer to Figure 5). Additionally, information about who has signed up for a newsletter subscription is available as a new data source. This information can be retrieved through a REST interface, which provides the data in JSON format.

REST

REST (representational state transfer) is based, among other things, on the principle of statelessness and addressability of resources on the web. Normally, REST interfaces use the JSON or XML format. Corresponding data types and processing nodes exist in KNIME for both formats (e.g., the JSON Path and XPath nodes).

To begin, you have to acquire the data. KNIME offers a GET Request node in the Tools & Services | REST Web Services category. This category also includes nodes for sending data to a REST service and for deleting and modifying resources. The user simply passes the URL of the interface to the GET Request node and then downloads the data during execution and displays it in a table.

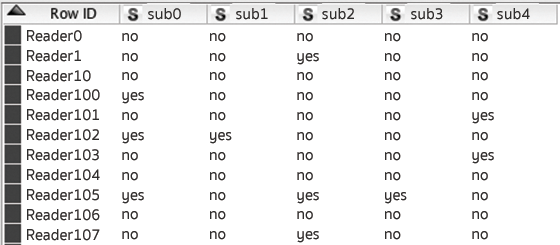

In the sample workflow, the GET Request node is replaced by a JSON Reader node, because the interface for the subscription data does not exist. The output from JSON Reader is shown in Figure 12.

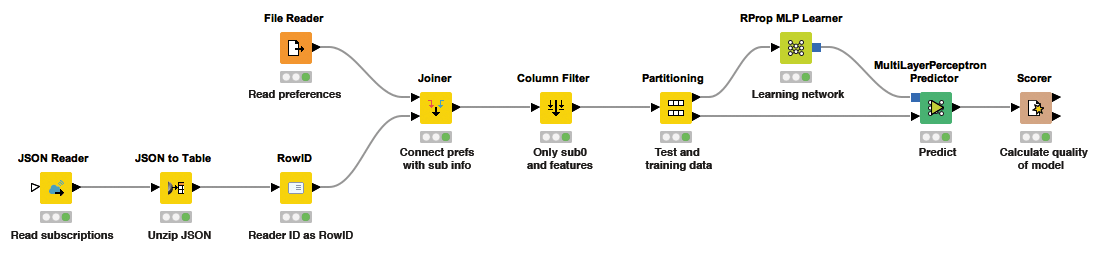

Because most KNIME nodes need data in tabular format and cannot inspect a JSON object, you need to transform the received data so that each reader ID corresponds to a row and each subscription to a column. To do so, combine the JSON to Table node and the RowID node to create the table as shown in Figure 13. With the Joiner node, the subscription data can now be linked with the reader preferences (determined in the previous example), as can be seen from the workflow in Figure 14.

Machine Learning

Now that all the required data is summarized in a table, you can create prediction models that will make it possible to predict later whether a reader without a subscription might be interested in signing up for a newsletter on a specific topic. Strictly speaking, each topic requires its own model, but we are just using a single model here to present KNIME's machine learning capabilities.

In machine learning, a general distinction is made between supervised and unsupervised learning. The clustering in the first article on KNIME was an example of unsupervised learning, because the processed datapoints had no known classification or label.

In supervised learning, on the other hand, data has labels that assign a value to a datapoint, which then needs to be predicted later for new data. Predicting a numerical value is referred to as a regression, and predicting a category is a classification.

Predicting whether a reader would be interested in a newsletter is a classification, because the value to be predicted is either yes or no (i.e., a binary classification). A little more background information on this topic can be found in the box "Classification in a Nutshell."

To determine whether the model to be learned works well, you first need to split the data into a test dataset to estimate its generalization capability (i.e., to see how good the predictions are for previously unseen data) and a training dataset to learn the model.

An evaluation with the same data as used during training generally leads to overly optimistic results. The Partitioning node divides the data into two tables. An 80/20 split is usually a good guideline, with 80 percent for learning and 20 percent for evaluation.

Machine learning is a large field of research and, accordingly, has many different approaches to statistical modeling. One very popular approach is the neural network. In KNIME, a neural network is taught by the RProp MLP Learner node (RProp stands for the algorithm used here, and MLP for Multilayer Perceptron, a network with many layers).

When configuring the learner algorithm, the KNIME user only selects the column that contains the value to be predicted. Therefore, if the learner is connected to the 80 percent output from the Partitioning node and executed, the node trains a neural net that has learned to determine which subscriptions the readers are interested in on the basis of their preferences. Armed with the MultiLayerPerceptron Predictor and Scorer nodes, you can use the previously ignored 20 percent of the data now to check how good the model really is (right two nodes in Figure 14). Other suitable learners include Random Forest, Gradient Boosted Trees, and Support Vector Machines.

Loops

Each of the five newsletters has its own model to learn. Although the simple solution would be to create five workflows, it is not really a practical solution, because if the learning process changes later, several workflows (or workflow parts) would have to be adapted. An easier method is to use loops that execute part of the workflow more than once with different parameters.

In KNIME, you can encapsulate multiple-execution sections in various paired Loop Start and Loop End nodes. Depending on the type of the start node, the workflow between the loop nodes is executed once per line, or for specific columns, or until a specific condition occurs. The end node needs to collect the data from the loop passes and aggregate them, if necessary.

The table the models use for learning (Figure 15) has an extra column for each newsletter (subscription) type (0 to 4). The goal is thus to learn one model per column that predicts the column's value. KNIME offers the Column List Loop Start node for iterating over columns. In its configuration dialog, you can select the columns over which the workflow part of the loop will execute.

The reader preferences in columns 2 through 6 and one of the five sub0 to sub4 columns are available within the loop. Now you can connect RProp MLP Learner to the Column List Loop Start node to learn the corresponding model.

The division into test and training data in the loop is unnecessary, because that division is only there to evaluate whether a model is capable of delivering good results. Once this has been determined, the entire available dataset is ready for learning. The changed workflow that uses the loops is shown in Figure 16.

Controlling and Configuring the Flow

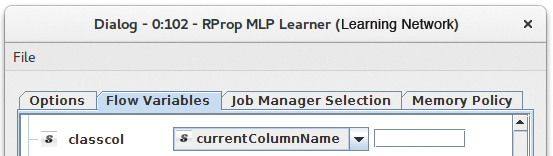

The column loop still has a problem: The learner needs to know which column is to be predicted; however, it cannot be hardwired into the model, because the column name changes with every iteration (sub0, sub1, etc.). Here is where flow variables come into play.

Flow variables are basically key-value pairs that the user sends through the workflow with the data. These variables can be seen, for example, in the window for the output data of a node in the Flow Variables tab. Within the loop, the name of the column currently being processed is available as the currentColumnName flow variable.

Now you need to configure RProp MLP Learner so that it uses the value of this variable to determine the column with the class information (i.e., sub0, sub1, etc.). To do this, you open the configuration dialog for the node and click on the Flow Variables tab. You will see a list with settings.

RProp MLP Learner has two entries per setting: one for internal use and one for normal use. They can be identified by the selection box to the right. In this box, you can specify a flow variable to be used instead of the currently selected value for this setting. If you want to use currentColumnName as the class column, select this entry under classcol (Figure 17).

Saving and Sharing Models

The next step is to save the newly learned model so that you can reuse it later. Output from the RProp MLP Learner (i.e., the model) neural network is formatted in the Predictive Model Markup Language (PMML) [14]. This standardized format for predictive models is widely used in machine learning and therefore well suited for exchanging models between different platforms and tools.

The PMML Writer node is required to write the model to disk. However, because it runs within the loop, the file name in the configuration dialog depends on the current iteration, or the column to be learned. The tool must therefore combine a folder and a dynamic file name to form a path.

This chore is best accomplished with the Create File Name node. Its input is graphically marked with an unfilled red circle, indicating that it is an optional input for flow variables. You need to connect it to Column List Loop Start, but at first glance, this does not have a compatible output "port." However, appearances are deceptive. The flow variable outputs and inputs of most nodes are simply hidden. They can be made visible by right-clicking a node and selecting the Show Flow Variable Ports menu item.

In the Create File Name configuration dialog you can now specify any folder and set .pmml as the file extension. The Base file name, on the other hand, is defined by the current column (sub0, sub1, etc.), which can be found in the currentColumnName flow variable. You can assign it to the configuration field by clicking the small button next to the text field, checking the Use variable checkbox, and selecting the flow variable in the list next to it.

The output from the node must be connected to the corresponding input of the PMML Writer node. To do this, the flow variable input does not necessarily have to be visible; you just need to drag a connection to the upper left corner of the node. In the configuration dialog for the PMML Writer node, you can then use the flow variable button to select the filePath variable again under Output location to save the model at this location.

The loop ends with a Variable Loop End node connected to the flow variable output of PMML Writer. Now KNIME iterates through the loop once for each of the five subscription columns, and five prediction models end up in the folder specified in Create File Name. Figure 16 shows the workflow for teaching and saving models.

Prediction

The five models determined will only be truly useful if you use them to predict whether readers without a newsletter subscription might be interested in a subscription and which one.

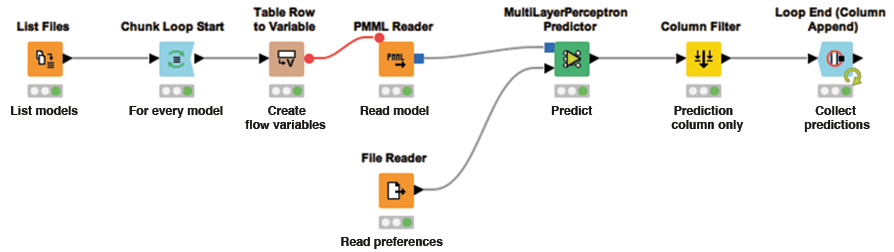

The best way to accomplish this is to create a new workflow that first loads the previously determined models with the List Files node. The filter must be .pmml and file extension(s). The folder to be searched is, of course, the one to which the prediction models were written earlier.

The result is a table in which each row corresponds to a matching file in the folder. If you connect them to a Chunk Loop Start node, the subsequent part of the workflow runs separately for each file. With the use of a Table Row to Variable node, the individual rows can be converted into flow variables, in which each column becomes a variable. The URL variable can then be used in a PMML Reader node, the counterpart to PMML Writer, as a value for the input file.

The model at the output of the node can now be passed to a MultiLayer Perceptron Predictor along with the data to be predicted (i.e., the preferences of readers without subscriptions). The predictor applies the model to the new data and predicts whether the reader might be interested in the subscription represented by the model.

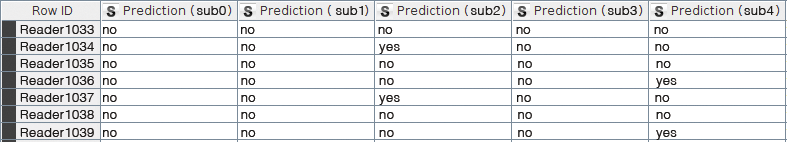

You will only want to keep the column with the prediction from the output table of the forecasting node. For this you need the Column Filter node, in which you select the Wildcard/Regex Selection option in its configuration dialog and enter Prediction (sub*) as a pattern. The output from the Column Filter node is linked to a Loop End (Column Append) node to obtain a table (Figure 18) that predicts for each newsletter subscription whether a reader will have any interest. Figure 19 shows the workflow for this process.

KNIME Can Do More

This article has shown how you can process, visualize, and analyze data in KNIME. These examples used simple tables with numbers and text, but thanks to the free extensions provided by KNIME and other developers, much more is possible. For example, mass spectrometry and gene sequence data can be stored and processed in KNIME tables.

If you are interested in discovering other KNIME options, take a look at the Node Guide [15] and the KNIME community website [16]. A number of instructional videos explain the functions and concepts on YouTube [17], and if you get stuck, you are in good hands at the KNIME forum, where proven experts are happy to help