Link aggregation with kernel bonding and the Team daemon

Battle of Generations

No matter how many network interfaces a server has and how the admin combines them, hardware alone is not enough. To turn multiple network cards into a true failover solution or a fat data provider, the host's operating system must also know that the respective devices work in tandem. If you are confronted with this problem on Linux, you usually use the bonding driver in the kernel; however, an alternative is libteam in user space, which implements many other functions and features, as well.

A Need for NICs

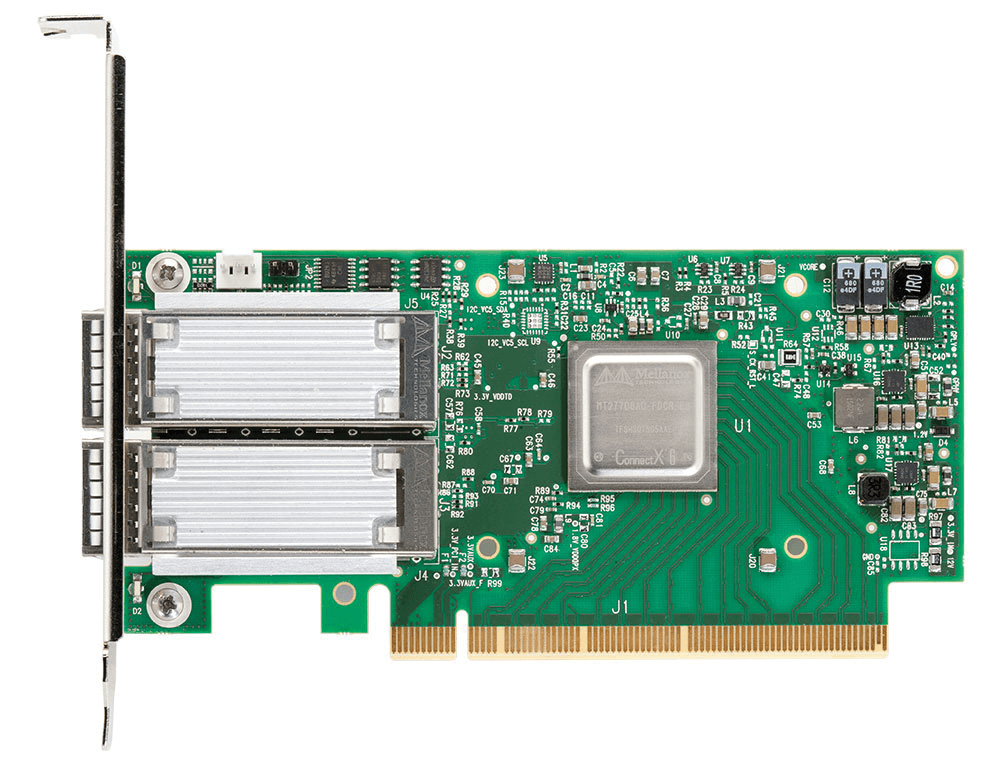

Many people who see a data center from the inside for the first time are surprised by the large number of LAN ports on an average server (Figure 1): Virtually no server can manage with just one network interface, for several reasons.

- The owner might use several network interfaces to increase redundancy. In such a setup, the server is connected to separate switches by different network ports, so that the failure of one network device does not take down the server.

- The admin might want to bundle ports to double the bandwidth.

- The network card itself might fail, or even the PCI bus into which it is inserted.

Although redundancy and performance can be combined, doing so requires at least four network ports, and to take Murphy's Law into account, two of the ports should reside on a different network card than the other two.

To tell a Linux system that several network cards belong together, you would usually use the bonding driver in the kernel and manage it with ifenslave at the command line. Most Linux distributions have full-fledged ifenslave integration that is controlled directly through the network configuration of the respective system. Because of this integration, many admins are not even aware of alternatives to classical link aggregation under Linux.

Alternatives

One alternative is libteam [1], which also interconnects network cards on Linux systems. In contrast to the ifenslave-based link aggregation approach, libteam resides almost exclusively in user space and largely does without kernel support. The main developer, Jiří Pírko, describes it as a lightweight alternative to the heavyweight legacy code in the Linux kernel, although legacy and inflexible do not necessarily have to mean bad and slow.

Now is the time, therefore, to let the two opponents battle it out directly – not so much with regard to definitive performance, which was very much comparable in the test and at the limit of what the hardware was able to deliver, but with regard to a focus on which functions the two approaches support and where they differ.

Nomenclature

In the Linux context, admins usually refer to bonding when they mean link aggregation of multiple network interfaces for better performance or greater redundancy. However, the term "teaming" can also be found in various documents. Sometimes it appears to be synonymous with bonding, whereas on other occasions you will find detailed explanations of how bonding and teaming differ (e.g., in the Red Hat world) [2]. Others again consider the terms merely to be descriptions of the same technology in the context of different operating systems.

In this article, I use bonding and teaming as synonyms for any kind of link aggregation. So wherever one of the terms is used here, the other would have been equally good.

Availability

At the outset, admins will ask themselves what type of bonding they can get to run on their systems with as little overhead as possible. The kernel bonding driver clearly has its nose in front here, because it is part of Linux and therefore automatically included in every current Linux distribution. Additionally, it is directly integrated into the network administration of the respective systems and can be configured easily with configuration tools such as YaST or Kickstart.

On desktops, this argument is not so convincing, because popular distributions such as Fedora and openSUSE include libteam packages, and it matters little if the network configuration has to be adjusted manually.

Servers, the natural habitat of the bonding driver, typically do not run Fedora or openSUSE, but rather RHEL, CentOS, SLES, or some other enterprise distribution. They usually come with more or less recent libteam packages, but the setup is not as smooth as configuring the bonding interfaces using the system tool.

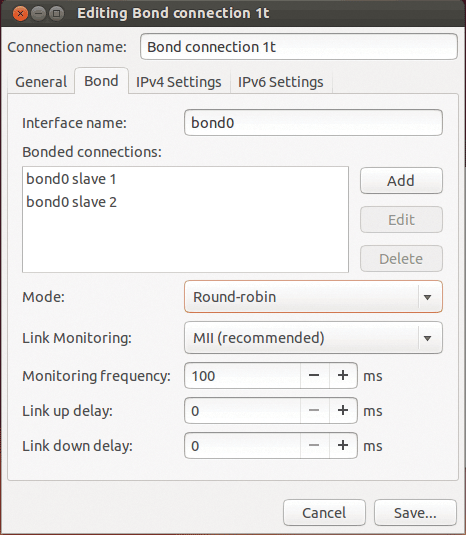

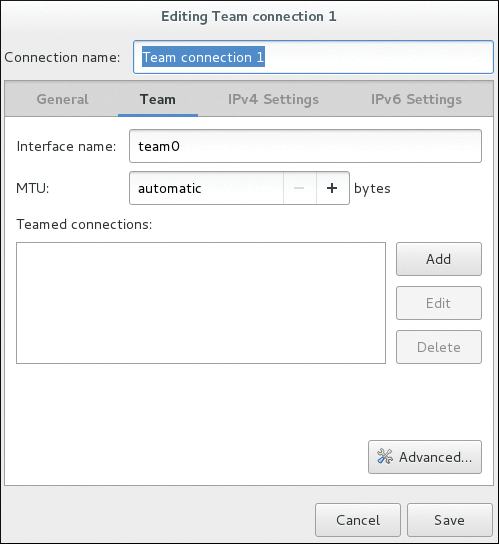

The points for convenience clearly go to the classic kernel-based bonding driver (Figure 2), but on RHEL systems, at least, it is no problem to configure libteam with system tools, because it was invented by Red Hat (Figure 3).


libteam in User Space

In the second round of tests, the architecture of the two candidates is put to the test. How are the bonding driver and libteam designed under the hood? Does either design offer obvious advantages? Libteam goes first in this round for a change.

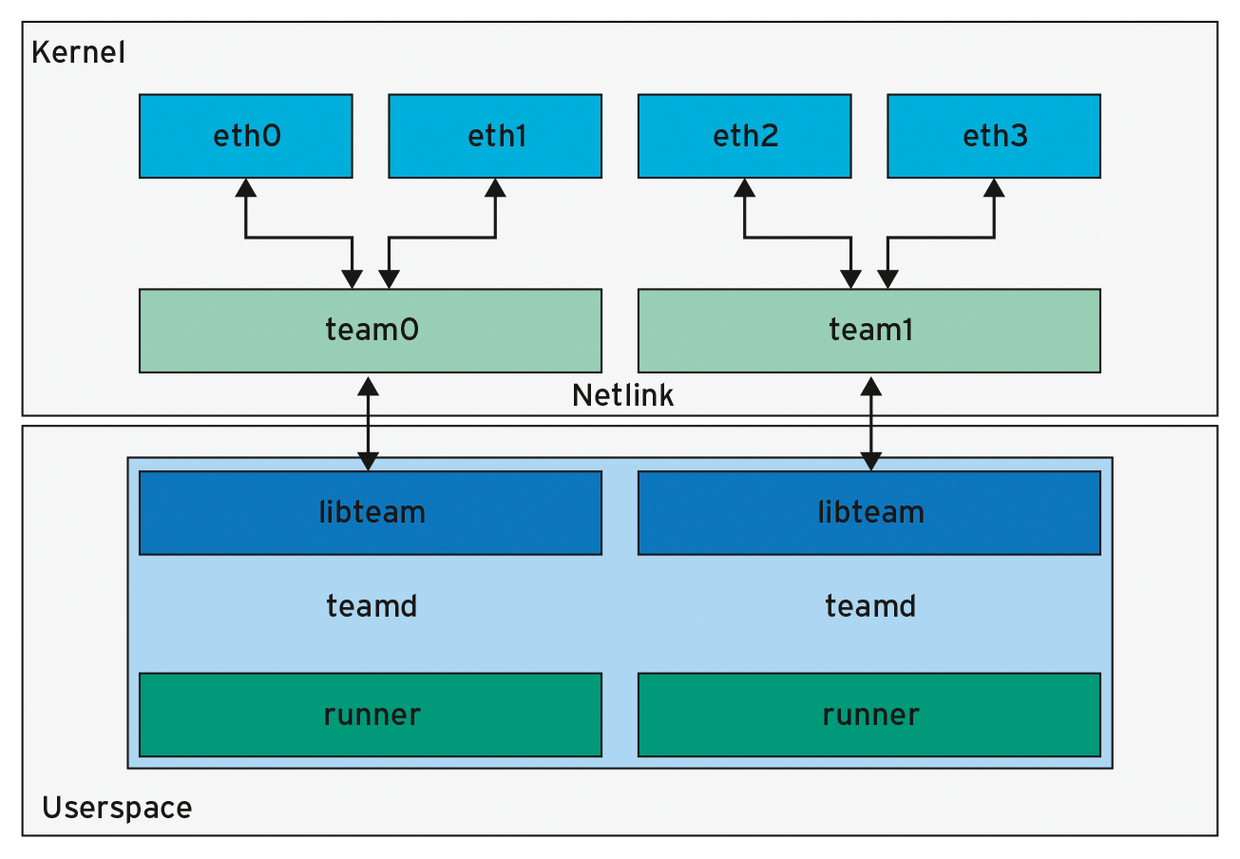

Libteam is published on GitHub and is available under the GNU LGPL; thus, it meets the accepted criteria for free software. The project is divided into three components: the libteam library, which provides various functions; the Team daemon teamd; and the Team network driver. The driver has been part of the Linux kernel since version 3.3 and therefore, like the bonding driver, can be found on any recent Linux system. Moreover, teamd has to run on systems with Team-flavored bonding for teaming to work.

The Team daemon and libteam do without kernel functions to the extent possible and live almost exclusively in user space. The kernel module's only task is to receive and send packets as quickly as possible, a function that seems to be most efficient in the kernel. The strict focus on userland is simply an architectural decision by Pírko to keep out of kernel space as much as possible, not an inability to engage in kernel development, as his various kernel patches in recent years show.

Thanks to its modular design, teamd, with libteam backing it up, is responsible for Netlink communication with the Team module in the kernel. The teaming functionality itself (i.e., the various standard bonding modes) is implemented by teamd in the form of modules, or "runners," which are units of code compiled into the Team daemon and selected when an instance of the daemon is created (Figure 4).

The broadcast runner implements a mode wherein Linux sends all packets over all available ports. If you load the roundrobin runner instead, you get load balancing based on the round-robin principle. The activebackup runner implements classic failover bonding, for when a network component dies on you.
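To make the difference between these three runners concrete, the following Python sketch models which ports a packet would leave on in each mode. It is only a conceptual illustration, not teamd code: the function name `select_ports`, the port names, and the simple failover rule are assumptions made for this example.

```python
def select_ports(runner, ports, packet_no, active=None):
    """Return the ports one packet is sent on in a simplified runner model.

    ports maps a port name to True (link up) or False (link down).
    """
    up = [name for name, link_up in ports.items() if link_up]
    if not up:
        return []  # no usable link at all
    if runner == "broadcast":
        # Every packet goes out on every port that has a link.
        return up
    if runner == "roundrobin":
        # Packets rotate across the usable ports one after another.
        return [up[packet_no % len(up)]]
    if runner == "activebackup":
        # Stay on the active port while it has a link; otherwise fail over.
        if active in up:
            return [active]
        return [up[0]]
    raise ValueError(f"unknown runner: {runner}")


if __name__ == "__main__":
    ports = {"eth1": True, "eth2": True}
    for runner in ("broadcast", "roundrobin", "activebackup"):
        sent = [select_ports(runner, ports, n, active="eth1") for n in range(3)]
        print(runner, sent)
```

In this model, only the activebackup case needs to remember state (the currently active port), which is why it takes the extra `active` argument.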

The great flexibility of teamd in userland is an advantage. If a company wants to use a bonding mode that teamd does not yet support, it can write the corresponding module itself with relatively little effort according to the Team Netlink API. The author explicitly encourages users to do so and states that teamd is a community product and is actively developed jointly.

Bonding in Contrast

The bonding driver takes exactly the opposite approach to libteam. For more than 20 years, bonding.ko has been part of the Linux kernel, and all of the driver's functionality is implemented in kernel space. Only the configuration of bonding devices is done in user space with ifenslave; however, the program uses Netlink to communicate with the Linux kernel to inform it of the bonding configuration the admin intends to use for the system.

With good reason, bonding.ko can therefore be described as a classic implementation of a Linux kernel driver from the old days, true to the motto: Let the kernel do whatever it can do.

From an admin point of view, this can be a real disadvantage: If you want to use a feature that the bonding driver simply doesn't have in the kernel, in the worst case, you will have to start tweaking the kernel itself. A module API like the Team daemon is not found in the kernel bonding driver, so points for elegance of architecture and expandability of design clearly go to teamd. Score: 1 to 1.

Configuration

One central question from the admin's point of view is how the bonding or teaming solution can be configured and how practical administration works in everyday life. Earlier, you already saw that bonding.ko in particular is deeply integrated into the kernel and that support for bonding is therefore part of the standard scope of delivery in current distributions.

However, this does not mean that the configuration is automatically intuitive, simple, or easy to understand. Anyone who has ever locked themselves out of a newly installed CentOS system by putting a wrong bonding configuration into operation, which could only be corrected through the BMC interface afterward, will be familiar with the problem.

As with the architecture, libteam and bonding are fundamentally different when it comes to configuration and handling. The kernel bonding driver has to be managed from userland with ifenslave. The configuration in CentOS and related distros is generally not much more intuitive than this tool, and few admins will be able to edit the necessary configuration files and end up with a working bonding configuration without referring to the documentation.

Ubuntu, Debian, and SLES are not much smarter. Admins can get into a terrible mess if they don't want to operate the bonding modules with the standard parameters but attempt to define custom values (e.g., for the MII link monitoring frequency). In the worst case, only a modinfo against the bonding module will help you find the required parameter – combined with the system documentation to find out where to write the parameter for the bonding driver to actually use it.
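The modinfo hunt described above can be sketched in a few lines of Python. The parser below picks the `parm:` lines out of modinfo-style output and formats a matching `options` line in modprobe.d syntax. The sample text is an abbreviated illustration rather than a verbatim dump of `modinfo bonding`, and both helper names are invented for this example; where the resulting line belongs (often a file below /etc/modprobe.d) depends on the distribution.

```python
import re

# Abbreviated, illustrative sample in the style of `modinfo bonding` output.
SAMPLE_MODINFO = """\
filename:       /lib/modules/.../bonding.ko
parm:           miimon:Link check interval in milliseconds (int)
parm:           mode:Mode of operation (charp)
"""

PARM_LINE = re.compile(r"^parm:\s+(\w+):(.*?)\s*\(([^()]+)\)\s*$")


def parse_parms(text):
    """Map each module parameter name to a (description, type) tuple."""
    parms = {}
    for line in text.splitlines():
        match = PARM_LINE.match(line)
        if match:
            name, description, kind = match.groups()
            parms[name] = (description.strip(), kind)
    return parms


def modprobe_options(module, **params):
    """Format an `options` line in modprobe.d syntax."""
    settings = " ".join(f"{key}={value}" for key, value in params.items())
    return f"options {module} {settings}"


if __name__ == "__main__":
    print(parse_parms(SAMPLE_MODINFO)["miimon"])
    print(modprobe_options("bonding", miimon=100))
```

On a real system, the text would come from running modinfo against the bonding module instead of from the sample string.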

All told, the user experience for configuring bonding in Linux is about the same as the standard 20 years ago, which is mainly because it was created about 20 years ago.

JSON Configuration

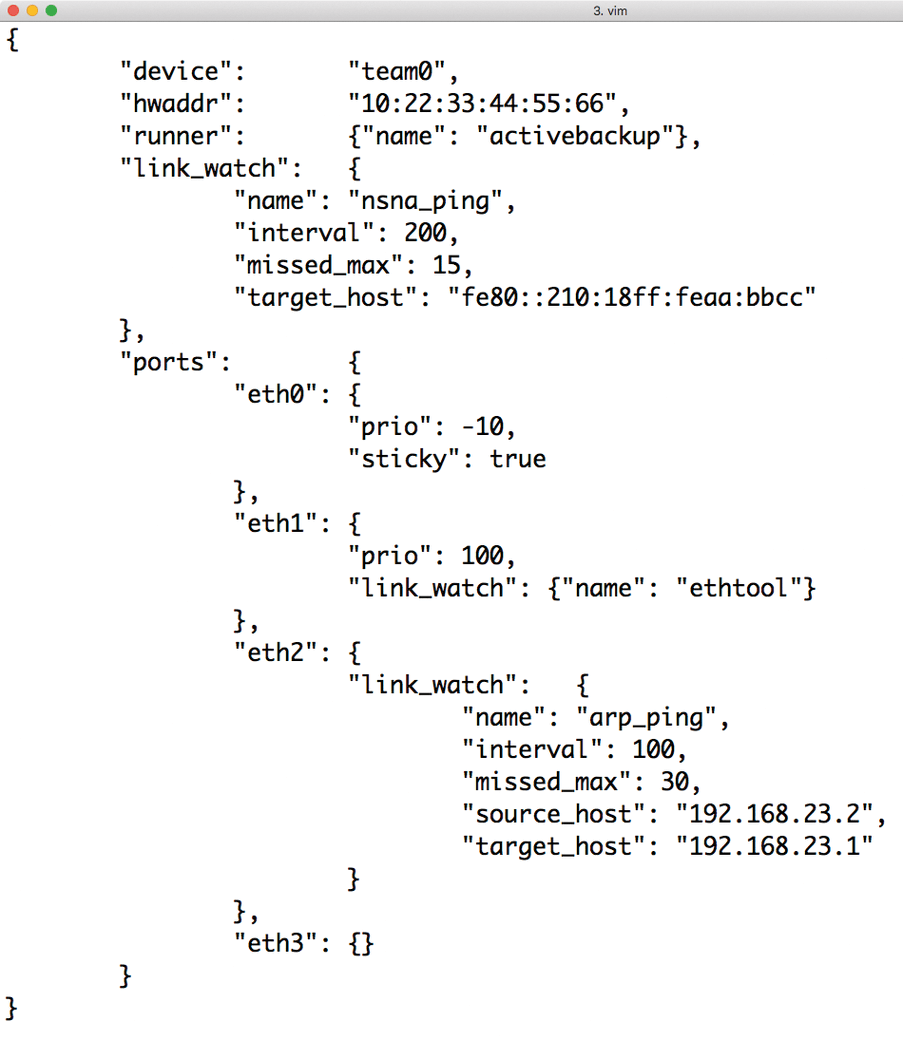

The Team daemon works in a completely different way. The administrator stores the configuration for the service in the form of a JSON file in the /etc folder, although the path can change depending on the distribution. In the teamd.conf file, you then add everything that teamd needs to know to create the team devices after it starts up.

Parameters desired by the user, such as the Team mode or the ARP monitoring mode, can be defined in teamd.conf for each device. A nice man page (man 5 teamd.conf) for the file explains all relevant parameters in detail, including the respective default values (Figure 5).
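As a rough idea of what such a file contains, the following Python sketch assembles a small teamd.conf-style document and prints it as JSON. The key names (`device`, `runner`, `link_watch`, `ports`, and the `arp_ping` options) follow the teamd.conf man page as far as I am aware; the device name, port names, and addresses are placeholders, and the helper `build_teamd_config` is invented for this example.

```python
import json


def build_teamd_config(device, runner, ports, link_watch=None):
    """Assemble a minimal teamd.conf-style dictionary."""
    return {
        "device": device,
        "runner": {"name": runner},
        # ethtool-based link monitoring is used here if nothing else is given.
        "link_watch": link_watch or {"name": "ethtool"},
        "ports": {port: {} for port in ports},
    }


# Placeholder values for ARP monitoring; adjust to the local network.
ARP_WATCH = {
    "name": "arp_ping",
    "interval": 100,
    "missed_max": 30,
    "source_host": "192.0.2.2",
    "target_host": "192.0.2.1",
}

if __name__ == "__main__":
    config = build_teamd_config("team0", "activebackup", ["eth1", "eth2"], ARP_WATCH)
    print(json.dumps(config, indent=4))
```

The printed JSON could be saved to a file and handed to teamd with its `-f` option; check the man page for the options your version supports.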

If you configure your team devices in this way, it's almost boring: Gone are the days when restarting the network configuration was associated with the inherent risk of locking yourself out of the system. Team daemon configuration in JSON format is well structured, equipped with understandable keywords, and excellently documented. Again, a point clearly goes to libteam and teamd, putting the score at 2 to 1.

Basic Functions

The most beautiful architecture and the greatest configuration are completely useless if you cannot use the respective solution because of a lack of functionality. The question then arises as to whether and to what extent the basic bonding modes are implemented and with which functions the developers equip their charges.

The Team daemon supports five bonding modes out of the box: In addition to broadcast, roundrobin, and activebackup runners, lacp and loadbalance runners are also available. The lacp runner provides support for the Link Aggregation Control Protocol (LACP, per IEEE 802.3ad), and loadbalance is based on the Berkeley Packet Filter (BPF) and comes with various options that are defined in the configuration file. Among other things, it is also possible to influence the hash algorithm used in the teamd load balancer runner with specific parameters.
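The idea behind hash-based transmit balancing can be shown with a toy model in Python. It is not how teamd does it internally (there, the hash is computed by a BPF program in the kernel); the sketch only illustrates how the choice of header fields, which teamd.conf exposes through the runner's `tx_hash` list, decides which flows share a port. The packet field names, the CRC32 hash, and the function `pick_tx_port` are assumptions for this example.

```python
import zlib

# Which (hypothetical) packet fields each hash fragment contributes.
FRAGMENT_FIELDS = {
    "eth": ("src_mac", "dst_mac"),
    "ipv4": ("src_ip", "dst_ip"),
    "l4": ("src_port", "dst_port"),
}


def pick_tx_port(packet, ports, tx_hash=("eth", "ipv4")):
    """Choose a transmit port by hashing the selected header fields."""
    key = "|".join(
        str(packet.get(field, ""))
        for fragment in tx_hash
        for field in FRAGMENT_FIELDS[fragment]
    )
    # Same fields in, same port out: a flow always stays on one port.
    return ports[zlib.crc32(key.encode()) % len(ports)]


if __name__ == "__main__":
    ports = ["eth1", "eth2"]
    flow = {"src_mac": "aa", "dst_mac": "bb",
            "src_ip": "192.0.2.1", "dst_ip": "192.0.2.2"}
    print(pick_tx_port(flow, ports))
    print(pick_tx_port(flow, ports, tx_hash=("eth",)))
```

Hashing only on `eth` keeps all traffic between two MAC addresses on one port, whereas adding `ipv4` or `l4` lets different flows between the same two hosts spread out.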

Configuration options are not limited to the load balancer runner, by the way. Each of the runners that can be selected in teamd.conf comes with specific options – all of which are reliably listed in the man page. Some of the runner options provide information about certain teamd features. LACP can also be used with round robin runners; the Team daemon is thus a few steps ahead of its Linux kernel counterpart, especially when it comes to monitoring Ethernet links.

Home Cooking

The bonding driver, on the other hand, is somewhat more modest when it comes to basic functionality. To avoid creating a false impression: bonding.ko supports the basic features for operating bonding setups, just like teamd and with comparable quality. If you need LACP or bonding with broadcast, round robin, or load balancing in active or passive mode, you will be just as happy with the bonding driver as with teamd.

Especially when sending data, bonding à la teamd relies on a hash function to determine the correct device. However, the admin cannot influence this hash function when using bonding.ko, because it is not implemented by BPF, but buried deep in the bonding driver. LACP for round-robin bonding is also on the list of things the admin has to do without when using the kernel driver.

Whereas in kernel bonding, only one priority can be defined for the individual aggregated network ports, teamd offers different priority configurations and a stickiness setting that works quasi-dynamically. The ability to configure link monitoring for each port individually is also not available with the bonding driver; the settings made there always apply to every port in the bond.
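How priority and stickiness interact can be modeled in a few lines of Python. The per-port keys `prio` and `sticky` are borrowed from the teamd.conf port options for the activebackup runner; the selection logic itself is a deliberately simplified assumption for this example, and `choose_active` is not a libteam function.

```python
def choose_active(ports, current=None):
    """Pick the active port in a simplified active-backup model.

    ports maps a port name to a dict with "up", "prio", and "sticky".
    """
    up = {name: opts for name, opts in ports.items() if opts["up"]}
    if not up:
        return None  # no port has a link
    # A sticky port keeps its role while its link is up, even if a
    # port with a higher priority is available.
    if current in up and up[current].get("sticky", False):
        return current
    # Otherwise the usable port with the highest priority wins.
    return max(up, key=lambda name: up[name].get("prio", 0))


if __name__ == "__main__":
    ports = {
        "eth1": {"up": True, "prio": -10, "sticky": True},
        "eth2": {"up": True, "prio": 100, "sticky": False},
    }
    print(choose_active(ports))                  # fresh start
    print(choose_active(ports, current="eth1"))  # eth1 already active
```

In this model, stickiness avoids a second switchover when a preferred port comes back: the port that took over simply stays active until its own link fails.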

On the other hand, teamd and the bonding driver agree again when it comes to virtual LANs (VLANs): They are included in both solutions and are supported without problem. The same applies to integration with NetworkManager, which handles the network configuration on many systems: Both the kernel bonding driver and teamd can talk to it directly.

If you compare the basic functions of the two solutions, the picture becomes clear: In terms of the basics, the two solutions have practically no weaknesses, but as before, libteam uses clever additional functions that are missing in bonding.ko at various points.

The perfect example is the BPF-based load balancing functionality, which is completely absent from the bonding driver – not least because the BPF did not even exist when the bonding driver was introduced into the Linux kernel. In this round, the point again goes to libteam – making the score 3 to 1.

Performance

Performance is an exciting aspect when you are comparing different solutions for the same problem. However, the test confirmed exactly what Red Hat, as the driving force behind the Teaming driver, already announced on its own website: teamd and bonding don't differ greatly when it comes to performance.

The performance measurements provided by Red Hat [3] suggest that the teamd trunk delivers more throughput and plays out this strength with 1KB packets in particular. However, really significant performance differences from the kernel driver do not arise. The average latency of the two solutions in direct comparison is even identical in Red Hat's stats, which is not unimportant when running an application on a server that is particularly sensitive to latency. In the end, both opponents get one point each: 4 to 2.

Get on D-Bus

In the final contest, it is almost unfair to compare teamd and bonding. Of course, teamd is the newer solution and various tools that libteam supports natively are unknown to the legacy kernel bonding driver, but the clearly visible attention to detail amazes time and time again when using libteam.

The Team daemon, for example, has a connection to the D-Bus system bus and can thus be comprehensively monitored and modified by other D-Bus applications. The Team daemon offers further connectivity to other services with its own ZeroMQ interface. ZeroMQ is a message bus in the style of RabbitMQ or Qpid, but it comes without its own server component and behaves more like a library.
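A monitoring script does not have to speak D-Bus itself to benefit from this openness. The Python sketch below asks the teamdctl control tool for the daemon's state as JSON and reduces it to a short summary. The `teamdctl <device> state dump` call is part of the libteam tools; the JSON keys used here (`setup.runner_name`, `runner.active_port`, `ports.<name>.link.up`) are an assumption about the shape of that document and should be checked against the output of your own version. The sample state and both helper names are made up for this example.

```python
import json
import subprocess

# Illustrative sample only; the real document contains many more fields.
SAMPLE_STATE = {
    "setup": {"runner_name": "activebackup"},
    "runner": {"active_port": "eth1"},
    "ports": {
        "eth1": {"link": {"up": True}},
        "eth2": {"link": {"up": False}},
    },
}


def read_state(team):
    """Fetch the state of a running team device through teamdctl."""
    result = subprocess.run(
        ["teamdctl", team, "state", "dump"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


def summarize_state(state):
    """Reduce a state document to runner, active port, and link status."""
    return {
        "runner": state.get("setup", {}).get("runner_name"),
        "active_port": state.get("runner", {}).get("active_port"),
        "ports_up": {
            name: info.get("link", {}).get("up", False)
            for name, info in state.get("ports", {}).items()
        },
    }


if __name__ == "__main__":
    # Use read_state("team0") on a host that actually runs teamd.
    print(summarize_state(SAMPLE_STATE))
```

Because `summarize_state` uses `.get()` throughout, a missing key results in `None` or an empty entry instead of an exception, which is useful while the exact layout is still being verified.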

The bonding driver does not even compete in this category, so it would be unfair to award another point to teamd.

Conclusions

The direct comparison of the bonding driver and teamd looks like a battle of two generations. On the one hand, the established kernel driver is firmly embedded in the kernel and thus suggests that you know what you are getting. To this day, the prejudice commonplace in the minds of many admins is that only the functionality implemented in the kernel is truly good and that functionality in kernel space is automatically better than that "only" present in user space.

This fallacy, however, has now clearly been disproved by various tools, and teamd is one of them, because this solution requires just a small kernel module that specializes only in moving packets back and forth in the kernel, with no negative effect whatsoever.

The many functions and features implemented in teamd are a plus. Basically, the bonding driver is not capable of any function that libteam and teamd cannot handle; however, the converse is not true: You would miss the intuitive and easy-to-understand configuration file in JSON format as painfully as the ability to monitor bonding devices through the D-Bus interface.

The bonding driver can score directly in terms of availability, because both ifenslave and bonding.ko can be found in any flavor of Linux. In comparison, admins have to install the tools for teamd, although in many distributions, this turns out to be no problem thanks to comprehensive offerings of the required packages.

Smart add-ons, such as teamd talking directly to ZeroMQ, round off the overall picture. If you are a network aggregation enthusiast, you will thus want to test teamd and libteam exhaustively – or at least take a closer look.