Security risks from insufficient logging and monitoring

Turning a Blind Eye

Whether an application or a server logs something is initially of no interest to an attacker, and neither is whether anyone evaluates the logged data. No attack technique compromises a server because of a lack of logging, nor can missing log monitoring be exploited directly to attack users. The only direct attacks seen so far have come via the logfiles themselves: If a cross-site scripting (XSS) vulnerability allows an attacker to inject JavaScript malware into logfiles and the administrator evaluates the logfiles with a tool that executes JavaScript, an attack is possible (e.g., manipulating the web application with the administrator's account or infecting the administrator's computer with malicious code through a drive-by infection).
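The root cause in this scenario is a log viewer that interprets logfile content as markup. A minimal sketch of the countermeasure, assuming a hypothetical homegrown web-based log viewer written in Python (the function `render_log_line` is illustrative, not part of any real tool), escapes every line before it reaches the browser:

```python
import html

def render_log_line(raw_line: str) -> str:
    """Return one logfile line as an HTML table row with all markup neutralized."""
    # html.escape converts &, <, > and (with quote=True) " and ' into entities,
    # so an injected <script> tag is displayed as text instead of executed.
    return "<tr><td>" + html.escape(raw_line, quote=True) + "</td></tr>"

# A User-Agent header with a script payload, as it might end up in an access log
entry = '203.0.113.7 - - "GET / HTTP/1.1" 200 "<script>alert(1)</script>"'
print(render_log_line(entry))
```

Escaping at output time is the decisive step: A viewer cannot assume that every component writing to the log has sanitized its data.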

Knowing Nothing Is a Weakness

Despite the apparent insignificance of logs for system security, "Insufficient Logging & Monitoring" made it into the Open Web Application Security Project (OWASP) 2017 Top 10 [1] in 10th place, whereas cross-site request forgery (CSRF), an attack that can cause actual damage, only ranked 13th [2]. CSRF received the lower rating because most web applications are now developed with frameworks, most of which include CSRF protection; in fact, CSRF vulnerabilities were found in only about five percent of applications. Another reason for the ranking is that, although insufficient logging and monitoring cannot be exploited directly, it contributes significantly to attacks going undetected, which plays into the attackers' hands.

A penetration test reveals how well your logging works: The pen tester's actions should be logged so extensively that the attack and its consequences can be traced. If they are not, you will have a problem in an emergency: You will either not detect an attack at all or misjudge its consequences.

Failing to detect attacks is a problem even when the attempts are unsuccessful, because most attacks start with a search for a vulnerability. If these attempts are not detected and stopped, they will eventually lead the attacker to the target. In 2016, it took an average of 191 days to detect a successful attack (data breach), and containing it took an average of another 66 days [3]. Both give an attacker more than enough time to cause massive damage.
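Detecting this search phase can be as simple as watching for clients that collect an unusual number of error responses. A minimal sketch, assuming web server access logs in the Common Log Format and a hypothetical threshold of 20 client errors (4xx) per source IP over whatever period the log lines cover:

```python
import re
from collections import Counter

# Captures client IP (group 1) and HTTP status code (group 2) of a Common Log Format line
CLF = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "[^"]*" (\d{3}) ')

def find_scanners(lines, threshold=20):
    """Return {ip: count} for clients with at least `threshold` 4xx responses."""
    errors = Counter()
    for line in lines:
        m = CLF.match(line)
        if m and m.group(2).startswith("4"):
            errors[m.group(1)] += 1
    return {ip: n for ip, n in errors.items() if n >= threshold}

# One client probing 25 nonexistent paths, one ordinary visitor
probes = [f'203.0.113.7 - - [10/Oct/2018:13:55:{i:02d} +0000] '
          f'"GET /probe{i}.php HTTP/1.1" 404 209' for i in range(25)]
normal = ['198.51.100.2 - - [10/Oct/2018:13:56:00 +0000] "GET /index.html HTTP/1.1" 200 5120']
print(find_scanners(probes + normal))  # {'203.0.113.7': 25}
```

A fixed threshold is only a starting point; it has to be tuned to the site's normal error rate, or legitimate crawlers and broken links will trigger false alarms.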

Monitoring Protects

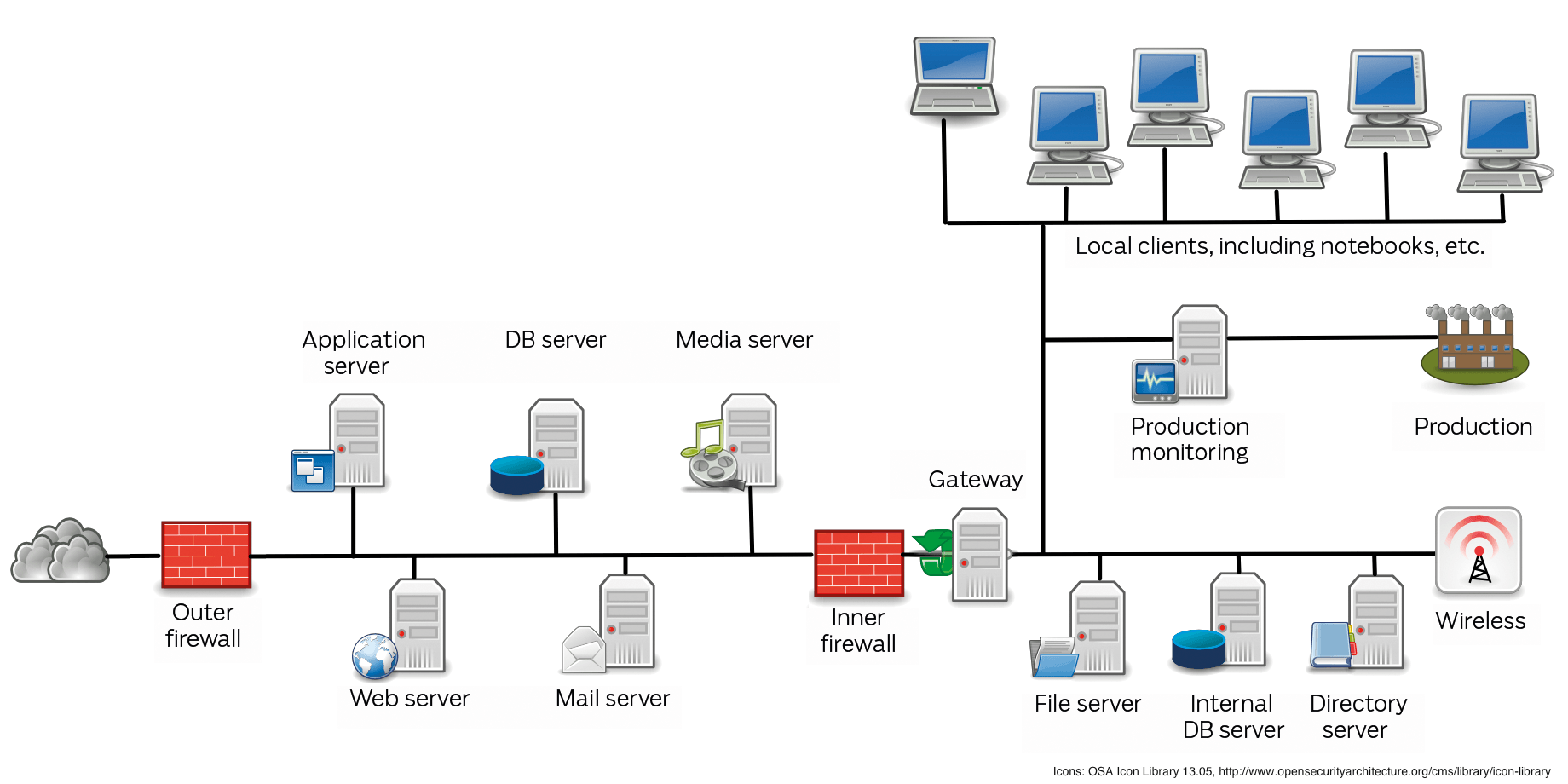

Figure 1 shows a sample network, including a DMZ, where both the web server (with the application, database, and media servers it uses) and the mail server reside. Additionally, you will find a local network with a computer for monitoring production and a large number of clients, plus the central file, database, and directory service servers.

Attackers will see numerous possibilities for attacking the corporate network: They could bring the web server under their control, then compromise other servers in the DMZ, and work their way forward into the local network. If the web server allows file uploads, they could upload an image file with an exploit that compromises an employee's computer when the employee opens the file. The attacker could also email an employee with malware attached. Alternatively, they could infect the laptop of a sales representative outside the protected network with malware that spreads to a local server once the laptop is connected to the corporate network. The attacker could also compromise a website that employees typically visit and load it with a drive-by infection, in what is known as a "watering hole attack."

If just one of these attacks goes undetected and unstopped, the attacker has gained a foothold on a first computer in the corporate network and can continue to penetrate from there until finally reaching a computer with sensitive data. At that point, the attacker might also be able to route the data out of the network unnoticed, because if the intrusion itself went undetected, the company's data loss prevention systems are unlikely to fare any better.

Such targeted attacks are usually not even the biggest problem, because they are comparatively rare. Web servers are often attacked because cybercriminals want to use them to spread malware; these attackers are not typically interested in the website operator's local network. However, the local network is constantly threatened by malware.

Typical Gaps in Monitoring

Insufficient logging, inadequate detection of security incidents, and insufficient monitoring and response can raise their ugly heads in many places on a web server, including:

- Auditable events that are not logged (e.g., logins, failed logins, and high-value transactions).

- Warnings and errors that generate either no logfile entries or entries that are insufficient or unclear.

- Application and API logfiles that are not monitored for suspicious activity.

- Logfiles that are only stored locally and can be manipulated by the attacker (i.e., central evaluation is not possible).

- Inappropriate alerting thresholds and missing or ineffective escalation processes.

- Penetration tests and scans with Dynamic Application Security Testing (DAST) tools, such as the OWASP Zed Attack Proxy [4], that do not trigger any alerts, which means even a real attack would not set off an alarm.

- Applications unable to detect, escalate, or warn against active attacks in real time, or at least near real time. (See also the "Web Application Protection" box.)
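The first gap in the list, auditable events that are not logged, is often the easiest to close. A minimal sketch using Python's standard `logging` module, assuming a hypothetical `security` logger and field names chosen for illustration, writes each login attempt as one JSON line, a format that central log management solutions can usually ingest without custom parsing:

```python
import json
import logging
from datetime import datetime, timezone

# Hypothetical dedicated logger for security-relevant events
security_log = logging.getLogger("security")

def log_login(username: str, success: bool, source_ip: str) -> str:
    """Log one login attempt as a single JSON line and return that line."""
    event = {
        "time": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "event": "login_success" if success else "login_failure",
        "user": username,
        "source_ip": source_ip,
    }
    line = json.dumps(event, sort_keys=True)
    # Failed logins are logged at WARNING so that filters and alerts can key on them
    security_log.log(logging.INFO if success else logging.WARNING, line)
    return line

logging.basicConfig(level=logging.INFO, format="%(message)s")
log_login("alice", success=False, source_ip="198.51.100.23")
```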

Even if none of these cases applies to an application or installation, one pitfall remains: Logging and alerting events that are visible to the user, and thus to a potential attacker, make the attacker's job easier. The application then has an information leak, which ranks third in the OWASP Top 10 as "Sensitive Data Exposure."

Possible Consequences of an Attack

OWASP mentions the following three attack scenarios as examples in the documentation of the Top 10:

- The server of an open source forum software project run by a small team was compromised through a vulnerability in the project's own software. The attacker was able to delete the internal source code repository containing the next version of the software, as well as all forum content. Although the source code could be recovered, the lack of monitoring, logging, and alerting led to a far worse breach, and as a result, the project is no longer active. This is a good example, but why would the attacker delete the source code repository? Unless they were acting on behalf of a competitor or were simply interested in vandalism, they gained nothing by doing so. It would make more sense (for the attacker) to plant a hidden backdoor in the next version of the forum software, through which they could later compromise every server running it. Because nothing was monitored, this kind of manipulation would very probably have gone unnoticed.

- An attacker tried a common password against all user accounts to hijack those that used it. For every other user, this scan left only a single failed login attempt behind. After a few days, the attack could be repeated with a different password, and so on. Without adequate monitoring, this attack remained undetected.

- A large retailer in the US used an internal analysis sandbox to check email attachments for malware. The sandbox detected potentially unwanted software, but no one responded: It had been issuing warnings for some time, and they were ignored. The successful attack was only discovered when an external bank noticed suspicious card transactions. This case also seems to involve a misconfiguration: Why were the suspicious attachments delivered to users at all, where they could cause harm? They should have been quarantined or even deleted; under no circumstances should they have reached the recipients.

In all three cases, the attack could very probably have been fended off by good logging and monitoring – or at least stopped at an early stage.
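The second scenario shows why per-account counters are not enough: Each account sees only one failed login, so no lockout threshold is ever reached. A minimal sketch, assuming failed logins are available as hypothetical `(timestamp, username)` pairs, instead counts how many distinct accounts fail within each hour across the whole application; the limit of 50 accounts per hour is an arbitrary placeholder that would have to be tuned against the normal baseline:

```python
from collections import defaultdict
from datetime import datetime

def spraying_alerts(failed_logins, max_accounts=50):
    """Return the hours (sorted) in which more than `max_accounts`
    distinct accounts had at least one failed login."""
    accounts_per_hour = defaultdict(set)
    for ts, user in failed_logins:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        accounts_per_hour[hour].add(user)
    return sorted(h for h, users in accounts_per_hour.items()
                  if len(users) > max_accounts)

# One spraying round: 200 accounts, one failure each, within one hour
base = datetime(2018, 10, 10, 3, 0)
spray = [(base.replace(minute=i % 60), f"user{i}") for i in range(200)]
# Ordinary typos: one user failing twice later in the day
typos = [(datetime(2018, 10, 10, 9, 15), "alice"),
         (datetime(2018, 10, 10, 9, 16), "alice")]
print(spraying_alerts(spray + typos))  # [datetime.datetime(2018, 10, 10, 3, 0)]
```

This works as long as the attacker runs each round within a short period; an attacker who spreads a round over many hours would require a longer window or a comparison against the daily baseline.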

Preventing Insufficient Logging and Monitoring

The measures required depend on how sensitive the data is. In general, you should ensure that all logins, access control failures, and server-side input validation failures are logged with enough user context to identify suspicious or malicious user accounts, and that the logs are retained long enough to remain available for forensic analysis. Of course, data protection regulations must be observed; you cannot simply start storing all data for all eternity. Personal data, which includes not only the user ID but also the IP address, may only be stored within the limits of the applicable legal regulations.
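One common way to reduce the personal data in logs while keeping them useful for detection is to shorten IP addresses before storing them. A minimal sketch using Python's `ipaddress` module; the helper name is hypothetical and the prefix lengths (/24 for IPv4, /48 for IPv6) are assumptions. Whether a shortened address counts as anonymized under the applicable law is a question for your data protection officer, not for the code:

```python
import ipaddress

def truncate_ip(addr: str) -> str:
    """Zero the host part of an address: IPv4 to /24, IPv6 to /48 (assumed prefixes)."""
    ip = ipaddress.ip_address(addr)
    prefix = 24 if ip.version == 4 else 48
    # strict=False lets us build a network from an address with host bits set
    return str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False).network_address)

print(truncate_ip("198.51.100.23"))          # 198.51.100.0
print(truncate_ip("2001:db8:1234:5678::1"))  # 2001:db8:1234::
```

Shortened addresses still let you group failed logins by network, which is often enough to spot a scan.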

Also ensure that all logfiles are generated in a format that a centralized log management solution can process and that high-value transactions have an audit trail with integrity checks to prevent manipulation or deletion. You can achieve the latter, for example, with append-only database tables.
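Append-only tables are one option. A complementary technique, sketched here under the assumption of a simple in-memory list of JSON-serializable records, is a keyed hash chain: Each entry carries an HMAC over its own content plus the previous entry's MAC, so editing, inserting, or removing an entry in the middle invalidates the chain from that point on. The function names and the key are hypothetical; in practice the key must be kept off the host that writes the log, or an attacker with access to that host could simply recompute the chain.

```python
import hashlib
import hmac
import json

def _mac(key: bytes, prev_mac: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True)
    return hmac.new(key, (prev_mac + payload).encode(), hashlib.sha256).hexdigest()

def append_entry(trail: list, record: dict, key: bytes) -> None:
    """Append a record whose MAC also covers the previous entry's MAC."""
    prev_mac = trail[-1]["mac"] if trail else ""
    trail.append({"record": record, "mac": _mac(key, prev_mac, record)})

def verify(trail: list, key: bytes) -> bool:
    """Recompute the chain; an edited, inserted, or removed entry breaks it.
    Cutting off the newest entries is NOT detected by the chain alone."""
    prev_mac = ""
    for entry in trail:
        if not hmac.compare_digest(_mac(key, prev_mac, entry["record"]), entry["mac"]):
            return False
        prev_mac = entry["mac"]
    return True

key = b"hypothetical-key-kept-off-the-log-host"
trail = []
append_entry(trail, {"event": "transfer", "amount": 100}, key)
append_entry(trail, {"event": "transfer", "amount": 250}, key)
print(verify(trail, key))         # True
trail[0]["record"]["amount"] = 1  # tamper with the first entry
print(verify(trail, key))         # False
```

To detect truncation as well, periodically copy the latest MAC to a separate system.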

In addition, establish effective monitoring and alerting so that you can identify suspicious activities early and respond appropriately. An incident response and recovery plan ensures that predefined procedures are triggered in the event of an attack.

So far, the main focus has been on monitoring the web server. This tempting target is accessible from the outside and is interesting for many attackers. However, an attacker has many more possibilities as to where and how to strike, and these attacks must also be detected and fended off as early as possible.

First Plan, Then Configure

You must choose wisely which data is relevant for logging. If too much is logged, the interesting messages are lost in the background noise. In the worst case, if the available storage space is insufficient, important messages are discarded or overwritten by unimportant ones.
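One way to keep important messages from being drowned out or overwritten is to give security events their own logfile with its own storage budget. A minimal sketch with Python's `logging` module; the file names, logger names, and size limits are assumptions for illustration:

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler

def configure_logging(log_dir: str) -> logging.Logger:
    """Give security events their own logfile and size budget so that
    application noise can neither drown them out nor rotate them away."""
    fmt = logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")

    # General application log: WARNING and above only, at most 4 files of 10 MB
    app_handler = RotatingFileHandler(os.path.join(log_dir, "app.log"),
                                      maxBytes=10 * 1024 * 1024, backupCount=3)
    app_handler.setFormatter(fmt)
    root = logging.getLogger()
    root.setLevel(logging.WARNING)
    root.addHandler(app_handler)

    # Security log: INFO and above, separate file, at most 11 files of 100 MB
    sec_handler = RotatingFileHandler(os.path.join(log_dir, "security.log"),
                                      maxBytes=100 * 1024 * 1024, backupCount=10)
    sec_handler.setFormatter(fmt)
    security = logging.getLogger("security")
    security.setLevel(logging.INFO)
    security.addHandler(sec_handler)
    security.propagate = False  # keep security events out of app.log
    return security

log_dir = tempfile.mkdtemp()
security = configure_logging(log_dir)
logging.getLogger("app.cache").info("cache warmed")  # dropped: below WARNING
security.info("login_failure user=alice")            # written to security.log
```

Rotation still discards the oldest files once the budget is exhausted, so in practice the security log should also be forwarded to a central log server.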

The opposite case should not be underestimated either: If logging is not sufficiently planned, some systems or applications might not be monitored at all, or at least not sufficiently. As a result, security-relevant events will not be identified and handled appropriately on those components of the overall system.

However, correct planning is only half the battle, because logging also requires correct configuration; otherwise, important information might not be logged at all or not sufficiently, contrary to company policy. Also, logs could be inconsistent or in the wrong format, which makes evaluation difficult or even impossible. Similarly, misconfiguration can cause data to be logged that should not be logged (e.g., personal data). Even if all relevant data is available, you could still run into problems: For example, if the clocks on all systems are not synchronized, some events cannot be correlated correctly.
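Misconfiguration that lets personal data slip into logs can be caught with a safety net at the handler level. A minimal sketch with Python's `logging` module, assuming email addresses are the data to suppress; the class name is hypothetical, and the regular expression is deliberately simple and will not match every valid address, so it complements, rather than replaces, not logging such data in the first place:

```python
import io
import logging
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

class RedactEmails(logging.Filter):
    """Replace email addresses in the formatted message before it is written."""
    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = EMAIL.sub("[email redacted]", record.getMessage())
        record.args = None  # arguments are already merged into msg above
        return True         # keep the (redacted) record

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(RedactEmails())
log = logging.getLogger("shop")
log.setLevel(logging.INFO)
log.addHandler(handler)
log.info("password reset requested for %s", "alice@example.com")
print(stream.getvalue().strip())  # password reset requested for [email redacted]
```

The filter is attached to the handler rather than the logger because logger-level filters do not apply to records propagated from child loggers.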

Protecting Logs

One more point is easily overlooked: the need to protect the logged data. Some systems or applications log personal information (e.g., usernames, IP addresses, or email addresses). You need to protect these logfiles as well as the stored or processed data itself; otherwise, an attacker who cannot get at the well-protected primary data might still obtain the same information because the log data is transmitted and stored without encryption.

Protecting confidentiality and integrity is also necessary for all other logged data, even if it contains no sensitive information. In this case, the logs themselves are worth protecting: If an attacker can manipulate or delete them, they can cover up their attack.
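Encrypting log transport (for example, syslog over TLS as specified in RFC 5425) and storage is beyond a short example, but restricting who can read and modify a logfile is not. A minimal sketch for POSIX systems, with a hypothetical helper name, creates a logfile that only its owner can read or write:

```python
import os
import stat
import tempfile

def open_private_log(path: str):
    """Open a logfile for appending; if it has to be created, create it
    with mode 0600 (owner read/write only) on POSIX systems."""
    fd = os.open(path, os.O_WRONLY | os.O_APPEND | os.O_CREAT, 0o600)
    return os.fdopen(fd, "a", encoding="utf-8")

log_path = os.path.join(tempfile.mkdtemp(), "auth.log")
with open_private_log(log_path) as f:
    f.write("login_failure user=alice\n")
print(oct(stat.S_IMODE(os.stat(log_path).st_mode)))
```

The mode applies only when the file is created, and the process umask can remove permission bits but never add them. This protects against other local users, not against an attacker who has already obtained root or the log owner's account; forwarding logs to a central system addresses that case.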

Logging Requirements

When it comes to logging requirements, creating a security policy takes top priority. Before the IT manager can begin the implementation, they need to know who logs what, how, and where. The policy lays down the essential requirements:

- Define a person responsible for logging and determine what should (and may) be logged.

- List the existing logging functions and define a location for storing the logs.

- Ensure that the logging complies with all legal frameworks.

- Synchronize the system time of all logging systems and applications and use the same time and date format for all logfiles.
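Synchronizing the clocks themselves is a job for NTP at the operating system level, but the uniform format can be enforced in the application. A minimal sketch with Python's `logging` module, assuming ISO 8601 timestamps in UTC with millisecond precision as the common format (the helper name is hypothetical):

```python
import logging
import time

def utc_formatter() -> logging.Formatter:
    """Formatter with ISO 8601 UTC timestamps, e.g. 2018-10-10T13:55:36.042Z."""
    f = logging.Formatter(
        fmt="%(asctime)s.%(msecs)03dZ %(levelname)s %(name)s %(message)s",
        datefmt="%Y-%m-%dT%H:%M:%S",
    )
    f.converter = time.gmtime  # render record times in UTC instead of local time
    return f

# A record with a fixed creation time (2018-10-10 13:55:36.042 UTC) for illustration
rec = logging.LogRecord("security", logging.WARNING, __file__, 1,
                        "login_failure", None, None)
rec.created = 1539179736.042
rec.msecs = 42.0
print(utc_formatter().format(rec))
# 2018-10-10T13:55:36.042Z WARNING security login_failure
```

Using UTC everywhere avoids ambiguities around daylight saving time and makes logs from systems in different time zones directly comparable.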

Finally, you need to evaluate the acquired information and respond to security-related incidents. This step can be jeopardized by insufficiently qualified staff, missing or inadequate logging, or faulty administration of the detection systems. This is why the first requirement is again a security policy, this time one that ensures security-related incidents are detected.

To react quickly and effectively to detected attacks, a predefined and proven procedure for handling security incidents is required. As always, the first step is to determine who has to do what and when. A test of these processes is also very important, because in an emergency, the detected security incident, usually an attack but sometimes only a misconfiguration, must be resolved as quickly as possible.

Additionally, evidence for the forensic investigation must be secured, or at least not destroyed. Once the incident is resolved, the IT manager must investigate how it happened, what happened, and what the consequences of the attack are. Unlike most other areas of IT security, the first document to create here is not a security policy but a policy that defines the initial response. It is also advisable to decide early on whether your own forensic team or a service provider should carry out the forensic investigation.
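A common first step in securing evidence is to record cryptographic hashes of collected logfiles as soon as they are copied, so that you can later show they have not changed. A minimal sketch using SHA-256 from Python's `hashlib`; the function names and the evidence file are hypothetical, and a real forensic process would also document who collected what, when, and from where:

```python
import hashlib
import os
import tempfile

def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def manifest(paths):
    """Map each evidence file to its digest; store the result apart from the copies."""
    return {p: sha256_file(p) for p in paths}

# Illustration with a throwaway file standing in for a copied logfile
evidence = os.path.join(tempfile.mkdtemp(), "access.log")
with open(evidence, "wb") as f:
    f.write(b"abc")
print(manifest([evidence]))
```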

Conclusions

To react appropriately to security-related incidents, whether targeted attacks, standard malware, or a misconfiguration, the IT manager must have a plan that the IT team follows in the event of an incident. These incidents must be detected in the first place, which is only possible with suitable and continuously evaluated logs. Deficiencies in logging or monitoring lead to attacks not being detected at all or being detected only when it is too late, which is why insufficient logging and monitoring has quite rightly found its way into the current OWASP Top 10.