Service discovery, monitoring, load balancing, and more with Consul

Cloud Nanny

The cloud revolution has brought some form of cloud presence to most companies. With this transition, infrastructure is dynamic and automation is inevitable. HashiCorp's powerful tool Consul, described on its website as "… a distributed service mesh to connect, secure, and configure services across any runtime platform and public or private cloud" [1], performs a number of tasks for a busy network. Consul offers three main features to manage application and software configurations: service discovery, health checks, and a key-value (KV) store.

Consul Architecture

As shown in Figure 1, each data center has its own set of servers and clients. All nodes within the data center are part of the LAN gossip pool, which has many advantages; for example, you do not need to configure server addresses, because server detection is automatic, and failure detection is distributed among all nodes, so the burden does not end up on the servers only.

![Figure 1: Consul's high-level architecture within and between data centers (image source: HashiCorp [2]).](images/F01.png)

The gossip pool is also used as a messaging layer between nodes within the data center to communicate with each other. The server nodes elect one node among themselves as the Leader node, and all the RPC requests from the client nodes sent to other server nodes are forwarded to that Leader node. The leader selection process uses a consensus protocol based on the Raft consensus algorithm.

When it comes to cross-data-center communication, only server nodes from each data center participate in a WAN gossip pool, which uses the gossip protocol to manage membership and broadcast messages to the cluster. Requests are relatively fast, because the data is not replicated across data centers. When a request for a remote data-center resource is made, it's forwarded to one of the remote center's server nodes, and that server node returns the results. If the remote data center is not available, then that resource also won't be available, although it shouldn't affect the local data center.

Raft

For this article, I consider a simple data center, named Datacenter-A, with three server nodes and two client nodes: a web server node and an application node. I chose three server nodes because of the Raft protocol, which implements distributed consensus. In a nutshell, Raft uses a voting process to elect a leader among the server nodes that announce themselves as candidates, and a candidate wins only with the votes of a majority of the servers. To support this voting process while tolerating the failure of one server, a minimum of three nodes is needed in the server group. A one-server-node system does not need to implement this distributed consensus. In a two-server-node group, a majority requires both nodes, so neither can win an election if the other is down or if each votes for itself as a candidate.
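The majority rule behind this choice is easy to work through. The following minimal sketch computes the quorum and the number of server failures a group can survive. The function names are illustrative and not part of Consul.

```python
def quorum(servers: int) -> int:
    """Smallest number of servers that forms a majority."""
    if servers < 1:
        raise ValueError("a server group needs at least one node")
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """Number of servers that can fail while a majority remains."""
    return servers - quorum(servers)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} server(s): quorum {quorum(n)}, "
              f"tolerates {failure_tolerance(n)} failure(s)")
```

Two servers tolerate no failures at all, whereas three tolerate one, which is why three is the smallest group that gains anything from consensus.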

Installation and Configuration

Consul installation is fairly simple and easy: Just extract the binary file into your local filesystem and run it as a daemon:

- After extracting the consul binaries to each node, create the /etc/consul.d/config.json configuration file (Listing 1). When the server key is set to true, that particular node acts as a server node; a false value makes that instance a client node.

Listing 1: Config for Server Node

    {
      "acl_datacenter": "Datacenter-A",
      "acl_default_policy": "allow",
      "acl_enforce_version_8": false,
      "acl_master_token": "",
      "check_update_interval": "60s",
      "data_dir": "/var/consul",
      "datacenter": "Datacenter-A",
      "disable_update_check": true,
      "enable_script_checks": true,
      "enable_syslog": true,
      "encrypt": "xxxxxxxxxxxxxx",
      "log_level": "INFO",
      "node_meta": {
        "APPLICATION": "consul",
        "DATACENTER": "Datacenter-A",
        "NAME": "Datacenter-A-consul",
        "SERVICE": "consul",
        "VERSIONEDCONSULREGISTRATION": "false"
      },
      "retry_join": [
        "consul.datacenter-a.internal.myprivatedns.net"
      ],
      "server": true
    }

- The Amazon EC2 instance tag can be referenced through the node_meta key.
- The important datacenter key decides the data center that particular node joins.
- After creating the config file, you start the consul daemon (Listing 2).

Listing 2: Starting the Daemon

$ /usr/bin/consul agent -ui -config-dir /etc/consul.d -pid-file /var/consul/consul.pid -client 0.0.0.0 >> /var/log/consul/consul.log 2>&1 &

- Once the consul process starts, you can use the consul members command (Listing 3) to check all the member details of that particular node's data center.

Listing 3: Data Center Members

    [ec2-user@ip-172-31-20-189 ~]$ consul members
    Node              Address             Status  Type    Build  Protocol  DC            Segment
    ip-172-31-16-22   172.31.16.22:8301   alive   server  1.2.0  2         datacenter-a  <all>
    ip-172-31-67-70   172.31.67.70:8301   alive   server  1.2.0  2         datacenter-a  <all>
    ip-172-31-23-194  172.31.23.194:8301  alive   server  1.2.0  2         datacenter-a  <all>
    ip-172-31-27-252  172.31.27.252:8301  alive   client  1.2.0  2         datacenter-a  <default>
    ip-172-31-31-130  172.31.31.130:8301  alive   client  1.2.0  2         datacenter-a  <default>

- To check all the options available with the consul command, enter consul help.
- For proper communication between the nodes, make sure ports 8300 (TCP), 8301 and 8302 (both TCP and UDP), and 8500 (TCP) are open in the data center for all nodes. The Consul UI can be accessed through port 8500.
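Before digging into Consul logs, it can help to confirm that the ports are reachable at all. The sketch below is one possible check that uses only the Python standard library. The function names are hypothetical, and a plain TCP connect cannot verify the UDP side of the gossip ports.

```python
import socket

# TCP ports named in the text. Ports 8301 and 8302 also carry UDP gossip
# traffic, which this connect test does not cover.
CONSUL_TCP_PORTS = (8300, 8301, 8302, 8500)


def tcp_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def closed_ports(host, ports=CONSUL_TCP_PORTS):
    """List the ports on host that did not accept a TCP connection."""
    return [port for port in ports if not tcp_port_open(host, port)]
```

Calling closed_ports("172.31.16.22") from another node would return an empty list if a server at that address accepts connections on all four ports. Port 8300 is only expected to answer on server nodes.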

All five nodes will be started with this Consul agent: three nodes as server nodes and the remaining two nodes as client nodes.
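The server and client counts can be read off the consul members output. As a rough sketch, the following parser turns that text into records and tallies the node types. It assumes the output was captured without the shell prompt and that no column value contains spaces, as is the case in Listing 3. The function names are illustrative.

```python
from collections import Counter

# Output in the shape of Listing 3, without the shell prompt line.
SAMPLE = """\
Node              Address             Status  Type    Build  Protocol  DC            Segment
ip-172-31-16-22   172.31.16.22:8301   alive   server  1.2.0  2         datacenter-a  <all>
ip-172-31-67-70   172.31.67.70:8301   alive   server  1.2.0  2         datacenter-a  <all>
ip-172-31-23-194  172.31.23.194:8301  alive   server  1.2.0  2         datacenter-a  <all>
ip-172-31-27-252  172.31.27.252:8301  alive   client  1.2.0  2         datacenter-a  <default>
ip-172-31-31-130  172.31.31.130:8301  alive   client  1.2.0  2         datacenter-a  <default>
"""


def parse_members(text):
    """Split whitespace-separated columns into one dict per member."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


def count_by_type(members, status="alive"):
    """Count members of each Type that report the given Status."""
    return Counter(m["Type"] for m in members if m["Status"] == status)


if __name__ == "__main__":
    print(dict(count_by_type(parse_members(SAMPLE))))
```

In a real check, the text would come from running consul members on any node, for example through subprocess.run with capture_output=True.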

Service Registration and Discovery

Service discovery is an important feature in Consul and can be used effectively in many ways to run your services in the dynamic cloud infrastructure. For example, you can use Consul's service discovery and health check features to create your own load balancers, along with the use of Nginx, Apache, or HAProxy.

In the example here, Apache is used as a reverse proxy. Instead of using Amazon Elastic Load Balancing (ELB) between Apache and the application layer, the Apache layer can use Consul's service discovery and health check feature to render application node information dynamically.

To use the service discovery feature, each node should have a config file (Listing 4) to register a particular service running on that node with Consul. Once the consul process is started, the node registers the service MyTomcatApp with Consul and shares the service status with the Consul server. The health check path, port, interval, and timeout details are configured under checks (lines 11-19).

Listing 4: Config File

    01 {
    02   "service": {
    03     "name": "MyTomcatApp",
    04     "tags": ["http","lbtype=http","group=default","service=MyTomcatApp"],
    05     "enable_tag_override": false,
    06     "port": 8080,
    07     "meta": {
    08       "lb_type": "http",
    09       "service": "MyTomcatApp"
    10     },
    11     "checks": [
    12       {
    13         "name": "HTTP /myapp on port 8080",
    14         "http": "http://localhost:8080/myapp",
    15         "tls_skip_verify": true,
    16         "interval": "30s",
    17         "timeout": "25s"
    18       }
    19     ]
    20   }
    21 }
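Once the service is registered, any node can ask its local agent which instances are currently healthy. The sketch below queries the agent's HTTP health endpoint and reduces the response to address and port pairs. The function names and the agent URL default are assumptions for illustration. The fallback to the node address reflects that Consul leaves the service address empty when the registration did not set one, as in Listing 4.

```python
import json
import urllib.parse
import urllib.request


def passing_instances(entries):
    """Reduce a /v1/health/service response to (address, port) pairs."""
    result = []
    for entry in entries:
        service = entry["Service"]
        # An empty Service.Address means the node's own address applies.
        address = service.get("Address") or entry["Node"]["Address"]
        result.append((address, service["Port"]))
    return result


def fetch_passing(service, agent="http://127.0.0.1:8500"):
    """Ask the agent for instances of a service whose checks all pass."""
    name = urllib.parse.quote(service)
    url = f"{agent}/v1/health/service/{name}?passing=true"
    with urllib.request.urlopen(url, timeout=5) as response:
        return passing_instances(json.load(response))
```

On a node with a running agent, fetch_passing("MyTomcatApp") would return the instances that a load balancer can safely use as back ends.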

Load Balancing

The service discovery feature also can be used to configure Apache or Nginx proxy load balancers. The Consul template in Listing 5 sets up Apache as a reverse proxy, and Listing 6 shows the rendered result with the application servers as the back ends. This back-end information is rendered dynamically by consul-template:

$ consul-template -template "/etc/httpd/conf/httpd.conf.ctmpl:/etc/httpd/conf/httpd.conf:/sbin/service httpd reload" &

Listing 5: Apache Reverse Proxy

    <Proxy balancer://mycluster>
    {{range $index, $element := service "MyTomcatApp" -}}
    BalancerMember http://{{.Address}}:{{.Port}}
    {{end -}}
    </Proxy>
    ProxyPass / balancer://mycluster/myapp/
    ProxyPassReverse / balancer://mycluster/myapp/

Listing 6: Back Ends

    <Proxy balancer://mycluster>
    BalancerMember http://172.31.20.189:8080
    BalancerMember http://172.31.31.130:8080
    </Proxy>
    ProxyPass / balancer://mycluster/myapp/
    ProxyPassReverse / balancer://mycluster/myapp/

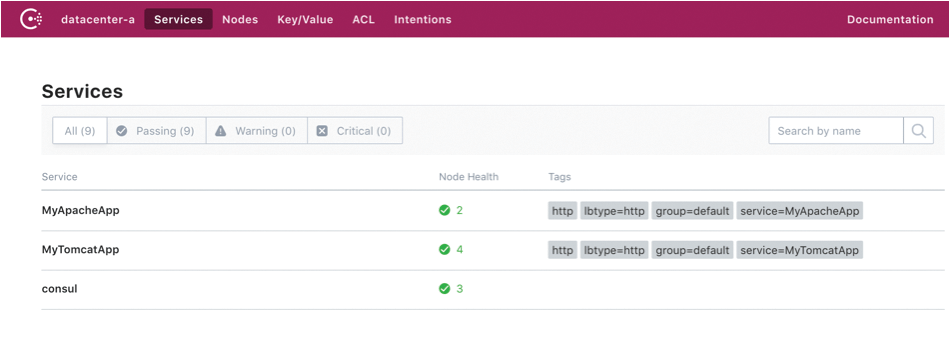

As shown in Figure 2, once the Tomcat service starts up, it is registered, and its health status is periodically updated. The consul-template binary renders the config files from the Consul templates and can run in the background to keep the back-end node information up to date.

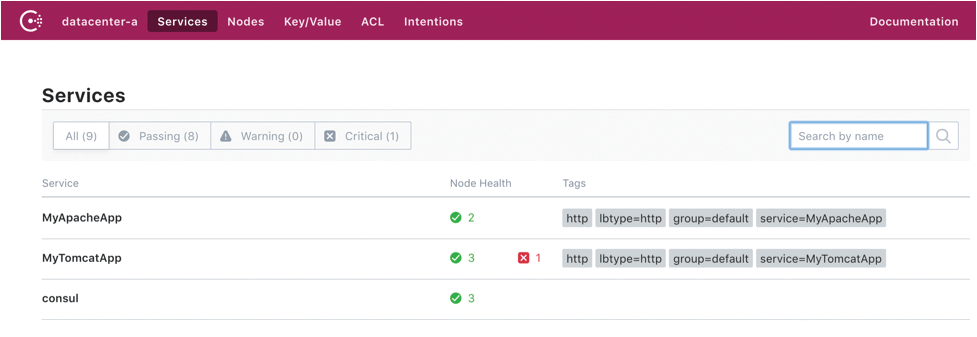

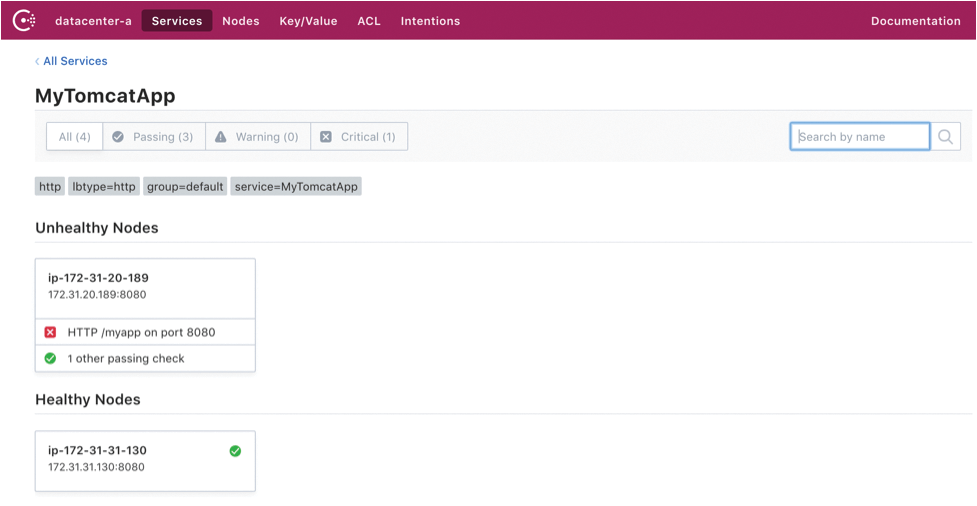

For example, if the application's EC2 instance crashes, that node will be marked down on the Consul service list (Figure 3). Once the application health status is marked down (Figure 4), the consul-template daemon running on the Apache node will update the config file and reload Apache. In this way, Apache keeps its proxy balancer config up to date in the dynamically changing cloud infrastructure.
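The render-then-reload cycle can be pictured with a small stand-in written in Python. This is not how consul-template is implemented; it is only a sketch of the idea that the balancer block is regenerated from the current back-end list and that a reload is worthwhile only when the file content changes. The function names are hypothetical.

```python
def render_balancer(backends, name="mycluster", path="/myapp/"):
    """Build the proxy balancer block of Listing 6 from (address, port) pairs."""
    lines = [f"<Proxy balancer://{name}>"]
    lines += [f"BalancerMember http://{address}:{port}"
              for address, port in backends]
    lines += [
        "</Proxy>",
        f"ProxyPass / balancer://{name}{path}",
        f"ProxyPassReverse / balancer://{name}{path}",
    ]
    return "\n".join(lines) + "\n"


def write_if_changed(target, content):
    """Write content to target; return True only if the file changed."""
    try:
        with open(target, encoding="utf-8") as handle:
            if handle.read() == content:
                return False
    except FileNotFoundError:
        pass  # First render: the file does not exist yet.
    with open(target, "w", encoding="utf-8") as handle:
        handle.write(content)
    return True
```

A caller would trigger the Apache reload only when write_if_changed returns True, so an unchanged back-end list causes no reload.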

Consul KV Store

Consul's KV store feature lets you manage not only the application configurations, but the configurations of all software components (e.g., Apache, Tomcat, Nginx, databases). Listing 7 manages the Apache configuration. If you want to change the Apache worker process settings, you can just update the Consul KV store, instead of making the changes to a config file and rebuilding the EC2 instance.

Listing 7: KV Store for Apache Config

    # worker MPM
    # StartServers: initial number of server processes to start
    # MaxClients: maximum number of simultaneous client connections
    # MinSpareThreads: minimum number of worker threads which are kept spare
    # MaxSpareThreads: maximum number of worker threads which are kept spare
    # ThreadsPerChild: constant number of worker threads in each server process
    # MaxRequestsPerChild: maximum number of requests a server process serves
    <IfModule worker.c>
    StartServers {{ keyOrDefault (printf "%s/%s/apache/startservers" $myenv $myapp ) "4" }}
    MaxClients {{ keyOrDefault (printf "%s/%s/apache/MaxClients" $myenv $myapp ) "300" }}
    MinSpareThreads {{ keyOrDefault (printf "%s/%s/apache/MinSpareThreads" $myenv $myapp ) "25" }}
    MaxSpareThreads {{ keyOrDefault (printf "%s/%s/apache/MaxSpareThreads" $myenv $myapp ) "75" }}
    ThreadsPerChild {{ keyOrDefault (printf "%s/%s/apache/ThreadsPerChild" $myenv $myapp ) "25" }}
    MaxRequestsPerChild {{ keyOrDefault (printf "%s/%s/apache/MaxRequestsPerChild" $myenv $myapp ) "0" }}
    </IfModule>

When a template reads a key with the key function and that key is missing from the Consul KV store, consul-template just hangs: No output config files are generated, and ultimately the service startup fails. To avoid this situation, use keyOrDefault, which specifies a default value to be used when the key is missing from the KV store. Variables can be used in the key path. In Listing 7, $myenv and $myapp segregate the keys for particular environment and application spaces. Listing 8 shows the values in the Consul KV store for the example, and the rendered config file is shown in Listing 9.

Listing 8: Consul KV Store

    $ consul kv get -recurse
    dev/myapp/apache/MaxClients:400
    dev/myapp/apache/MaxRequestsPerChild:2
    dev/myapp/apache/MaxSpareThreads:80
    dev/myapp/apache/MinSpareThreads:30
    dev/myapp/apache/ThreadsPerChild:30
    dev/myapp/apache/startservers:4

Listing 9: Apache Config File

    # Usual KV store comments as in Listing 7
    <IfModule worker.c>
    StartServers 4
    MaxClients 400
    MinSpareThreads 30
    MaxSpareThreads 80
    ThreadsPerChild 30
    MaxRequestsPerChild 2
    </IfModule>
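The lookup-with-fallback behavior of keyOrDefault can be mimicked in a few lines of Python. In this sketch, a plain dict stands in for the Consul KV store and holds the values from Listing 8, and the defaults are copied from Listing 7. The function names are illustrative and not part of consul-template.

```python
# Values from Listing 8; the dict stands in for the Consul KV store.
KV = {
    "dev/myapp/apache/MaxClients": "400",
    "dev/myapp/apache/MaxRequestsPerChild": "2",
    "dev/myapp/apache/MaxSpareThreads": "80",
    "dev/myapp/apache/MinSpareThreads": "30",
    "dev/myapp/apache/ThreadsPerChild": "30",
    "dev/myapp/apache/startservers": "4",
}

# Directive -> (key suffix, default value), as written in Listing 7.
DIRECTIVES = {
    "StartServers": ("startservers", "4"),
    "MaxClients": ("MaxClients", "300"),
    "MinSpareThreads": ("MinSpareThreads", "25"),
    "MaxSpareThreads": ("MaxSpareThreads", "75"),
    "ThreadsPerChild": ("ThreadsPerChild", "25"),
    "MaxRequestsPerChild": ("MaxRequestsPerChild", "0"),
}


def key_or_default(store, key, default):
    """Return the stored value, or the default when the key is missing."""
    return store.get(key, default)


def render_worker_settings(store, env, app):
    """Render one 'Directive value' line per entry in DIRECTIVES."""
    lines = []
    for directive, (suffix, default) in DIRECTIVES.items():
        key = f"{env}/{app}/apache/{suffix}"
        lines.append(f"{directive} {key_or_default(store, key, default)}")
    return lines


if __name__ == "__main__":
    print("\n".join(render_worker_settings(KV, "dev", "myapp")))
```

With the store from Listing 8, the rendered lines match Listing 9. With an empty store, every directive falls back to its default instead of blocking.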

In Listing 10, lines 3 and 4 use only the key function. If the requested key exists, the template renders successfully, but if it is missing, the template rendering just hangs.

Listing 10: Only the key

    01 # ...
    02 <IfModule worker.c>
    03 StartServers {{ printf "%s/%s/apache/startservers" $myenv $myapp | key }}
    04 MaxClients {{ printf "%s/%s/apache/MaxClients" $myenv $myapp | key }}
    05 MinSpareThreads {{ keyOrDefault (printf "%s/%s/apache/MinSpareThreads" $myenv $myapp ) "25" }}
    06 MaxSpareThreads {{ keyOrDefault (printf "%s/%s/apache/MaxSpareThreads" $myenv $myapp ) "75" }}
    07 ThreadsPerChild {{ keyOrDefault (printf "%s/%s/apache/ThreadsPerChild" $myenv $myapp ) "25" }}
    08 MaxRequestsPerChild {{ keyOrDefault (printf "%s/%s/apache/MaxRequestsPerChild" $myenv $myapp ) "0" }}
    09 </IfModule>

Listing 11 shows that the dev/myapp/apache/startservers key is missing, so the template rendering will hang. In this case, you have to press Ctrl+C to exit the command or kill the consul-template daemon if it runs in the background.

Listing 11: Missing key

    $ consul kv get -recurse
    dev/myapp/apache/MaxClients:400
    dev/myapp/apache/MaxRequestsPerChild:2
    dev/myapp/apache/MaxSpareThreads:80
    dev/myapp/apache/MinSpareThreads:30
    dev/myapp/apache/ThreadsPerChild:30

Mistakes are hard to avoid when creating or updating a KV store, and a missing key makes troubleshooting difficult. To avoid this situation, always use keyOrDefault. But how do you troubleshoot missing keys in a template that uses key? The consul-template daemon comes with a -log-level option to enable debug logging, but as you can see in the final line of Listing 12, the output is not particularly helpful. All you learn from the "missing data for 1 dependencies" message is that one key is missing in the KV store, not which one. In this situation, the Unix commands awk and grep come in handy:

    $ for i in `grep printf httpd.conf1.ctmpl | grep -v keyOrDefault | awk -F"\"" '{print $2}' | awk -F"%s" '{print $3}'`; do echo "key" $i":"; consul kv get dev/myapp$i; done
    key /apache/startservers:
    Error! No key exists at: dev/myapp/apache/startservers
    key /apache/MaxClients:
    400
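The same check can be done offline in Python. The sketch below extracts the key paths that a template reads through key without a default and compares them with a set of keys known to exist. It assumes the printf-piped-to-key form used in Listing 10, and the function names are hypothetical.

```python
import re

# Matches `{{ printf "<format>" ... | key }}` as in Listing 10.
# keyOrDefault calls start differently and are therefore skipped.
BARE_KEY = re.compile(r'\{\{\s*printf\s+"([^"]+)"[^}]*\|\s*key\s*\}\}')


def bare_keys(template_text, *values):
    """Return the KV paths the template reads without a default value."""
    return [fmt % values for fmt in BARE_KEY.findall(template_text)]


def missing_keys(template_text, existing, *values):
    """Return the bare keys that are absent from the existing key set."""
    return [key for key in bare_keys(template_text, *values)
            if key not in existing]
```

The values passed after the template text fill the %s placeholders in order, so "dev" and "myapp" play the roles of $myenv and $myapp. The set of existing keys could be built from the output of consul kv get -recurse.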

Listing 12: Debug Output Snippet

    $ consul-template -once -template "httpd.conf1.ctmpl:out" -log-level=debug
    2018/07/10 01:03:09.580356 [INFO] consul-template v0.19.5 (57b6c71)
    2018/07/10 01:03:09.580415 [INFO] (runner) creating new runner (dry: false, once: true)
    2018/07/10 01:03:09.580838 [INFO] (runner) creating watcher
    2018/07/10 01:03:09.581454 [INFO] (runner) starting
    2018/07/10 01:03:09.581511 [DEBUG] (runner) running initial templates
    2018/07/10 01:03:09.581532 [DEBUG] (runner) initiating run
    2018/07/10 01:03:09.581552 [DEBUG] (runner) checking template 88b8c1d0821f2b9beb481aa808a359bc
    2018/07/10 01:03:09.582159 [DEBUG] (runner) was not watching 7 dependencies
    2018/07/10 01:03:09.582200 [DEBUG] (watcher) adding kv.block(dev/myapp/apache/startservers)
    2018/07/10 01:03:09.582255 [DEBUG] (watcher) adding kv.block(dev/myapp/apache/MaxClients)
    ...
    2018/07/10 01:03:09.582441 [DEBUG] (runner) diffing and updating dependencies
    2018/07/10 01:03:09.582476 [DEBUG] (runner) watching 7 dependencies
    2018/07/10 01:03:09.588619 [DEBUG] (runner) missing data for 1 dependencies

Accessing Services Through DNS

The registered Consul services can be accessed through DNS, and one of the easiest ways is with Dnsmasq. Adding the /etc/dnsmasq.d/10-consul file shown in Listing 13 should enable Consul DNS lookup.

Listing 13: Consul DNS Lookup

    $ cat /etc/dnsmasq.d/10-consul
    # Enable forward lookup of the 'consul' domain:
    server=/consul/127.0.0.1#8600
    $ dig @localhost -p 8600 MyTomcatApp.service.consul
    ; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.62.rc1.57.amzn1 <<>> @localhost -p 8600 MyTomcatApp.service.consul
    ; (1 server found)
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 5337
    ;; flags: qr aa rd; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 0
    ;; WARNING: recursion requested but not available
    ;; QUESTION SECTION:
    ;MyTomcatApp.service.consul. IN A
    ;; ANSWER SECTION:
    MyTomcatApp.service.consul. 0 IN A 172.31.31.130
    MyTomcatApp.service.consul. 0 IN TXT "VERSIONEDCONSULREGISTRATION=false"
    MyTomcatApp.service.consul. 0 IN TXT "APPLICATION=app"
    ;; Query time: 1 msec
    ;; SERVER: 127.0.0.1#8600(127.0.0.1)
    ;; WHEN: Tue Jul 10 04:31:28 2018
    ;; MSG SIZE rcvd: 134
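With the Dnsmasq forwarding in place, applications should be able to find a service through the ordinary system resolver instead of talking to port 8600 directly. The following sketch builds a service name in Consul's DNS naming scheme and resolves it with the standard library. It assumes the host's resolver points at Dnsmasq. The function names are illustrative, and the resolver parameter exists only so that the lookup can be swapped out in tests.

```python
import socket


def service_dns_name(service, domain="consul", tag=None, datacenter=None):
    """Build [tag.]<service>.service[.<datacenter>].<domain>."""
    parts = [tag, service, "service", datacenter, domain]
    return ".".join(part for part in parts if part)


def resolve_service(name, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IPv4 addresses behind a DNS name."""
    results = resolver(name, None, socket.AF_INET, socket.SOCK_STREAM)
    # Each result is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (address, port) pair.
    return sorted({sockaddr[0] for *_, sockaddr in results})
```

On a node configured as in Listing 13, resolve_service(service_dns_name("MyTomcatApp")) would be expected to return the addresses of the healthy MyTomcatApp instances.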

Conclusions

Consul is one of the best tools for helping you manage your dynamic infrastructure in a cloud environment, and it works well not only with VMs and EC2 instances, but with containers as well. With the appropriate Consul templates, your entire application and production infrastructure maintenance can be automated and overhead reduced.