OpenStack installation with the Packstack installer

Living in the Clouds

Over the past several years, cloud computing has become commonplace in the global IT market, both in corporate infrastructure and among private users. In medium-sized and large companies, it replaces inflexible, poorly scalable, and often expensive computer infrastructure, whereas it allows small businesses, startups, and private users to transfer the entire burden of providing IT services to an external supplier.

In addition to the division into private and public clouds, you can classify cloud computing by the type of service offered. Two types lead this category: Infrastructure as a Service (IaaS; e.g., OpenStack and Amazon Web Services), which provides the entire virtual infrastructure, including servers, mass storage, and virtual networks, and Storage as a Service (STaaS; e.g., Dropbox, Hightail), which delivers storage space to clients.

The ways to install an OpenStack cloud range from a tedious step-by-step installation of individual OpenStack services to the use of automated installation scripts, such as Packstack or TripleO (OpenStack on OpenStack), which significantly accelerate cloud installations. Whereas TripleO is used mainly in installations for production environments, Packstack [1] is used for quickly setting up development or demonstration cloud environments.

In this article, I present an example of an IaaS-type RDO [2] OpenStack Pike [3] installation using the Packstack installer.

OpenStack

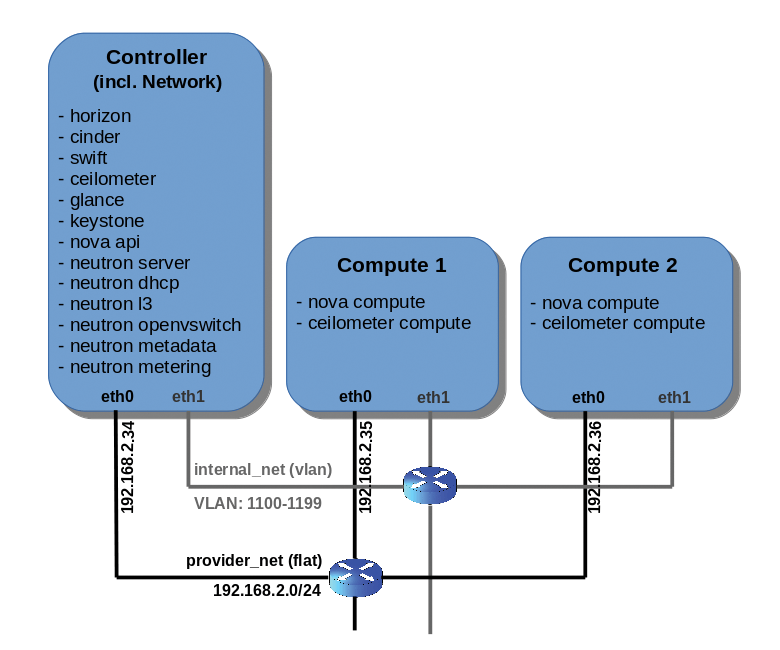

A typical OpenStack environment comprises a cloud controller (Controller node) that runs most of the cloud services, including network services (Neutron), and at least one Compute node (hypervisor) controlling the operation of virtual machines, called instances. The most common reason for integrating a cloud controller with a network node on the same server is the small size of the cloud and access to a relatively efficient server that is able to handle a large number of OpenStack components.

Cloud computing is a system of services distributed between its constituent nodes. The most important OpenStack services include:

- Horizon, a web browser user interface (dashboard) based on Python Django for creating and managing instances (OpenStack virtual machines).

- Keystone, an authentication and authorization framework based on the MariaDB database.

- Neutron, a network connectivity service based on Open vSwitch (OVS) and a Modular Layer 2 (ML2) plugin.

- Cinder, persistent block storage for OpenStack instances with support for multiple back-end devices.

- Nova, an instance management system based on the Linux Kernel-based Virtual Machine (KVM) hypervisor.

- Glance, a registry for instance images.

- Swift, file storage for the cloud.

- Telemetry/Ceilometer, metering engines for collecting billable data and analysis.

- Heat, an orchestration service for template-based instance deployment.

For the test installation, I used three nodes: a Controller node and two Compute nodes (Figure 1). Each node was equipped with a 2.5GHz (4-core) CPU, 16GB of RAM, a 160GB hard drive, two network interface cards (NIC1, provider_net, eth0; NIC2, internal_net, eth1), and a CentOS 7 64-bit operating system. The NIC1 IP addresses for the Controller node and for Compute nodes 1 and 2 were 192.168.2.34, 192.168.2.35, and 192.168.2.36, respectively.

Prerequisites

In the following procedure, I present OpenStack deployments with flat and vlan network types. VLANs are used for tenant internal communication (internal_net) between instances logically running in the same tenant, but physically placed on the separate Compute nodes. Therefore, to allow VLAN traffic between the Compute nodes, VLAN tagging should be enabled on the network switch connecting them.

Before starting the Packstack deployment, make sure that the eth0 interface has a static IP configuration on every node, that the config files for both interfaces (ifcfg-eth0 and ifcfg-eth1) exist in the /etc/sysconfig/network-scripts/ directory on all nodes, and that both interfaces are in the UP state. The NetworkManager service should be stopped and disabled on all OpenStack nodes.
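These prerequisites can be sketched in shell. The helper name render_ifcfg is hypothetical, the gateway 192.168.2.1 is an assumed placeholder that does not come from this article, and the set of keys is only a common minimum for the CentOS 7 network-scripts format. The helper prints the file content instead of writing it, so you can review the result first.

```shell
# render_ifcfg is a hypothetical helper: it prints an ifcfg file in the
# CentOS 7 network-scripts format. With an IP address it renders a static
# configuration; without one it renders an address-less interface
# (as for eth1, which only carries tagged tenant traffic).
# Usage: render_ifcfg DEVICE [IPADDR PREFIX [GATEWAY]]
render_ifcfg() (
    dev=$1; ip=${2:-}; prefix=${3:-}; gw=${4:-}
    echo "DEVICE=$dev"
    echo "TYPE=Ethernet"
    echo "BOOTPROTO=none"      # no DHCP
    echo "ONBOOT=yes"          # bring the interface up at boot
    echo "NM_CONTROLLED=no"    # keep NetworkManager away from it
    if [ -n "$ip" ]; then
        echo "IPADDR=$ip"
        echo "PREFIX=$prefix"
    fi
    if [ -n "$gw" ]; then
        echo "GATEWAY=$gw"
    fi
)

# Example for the Controller node (gateway is an assumed placeholder):
#   render_ifcfg eth0 192.168.2.34 24 192.168.2.1 \
#       > /etc/sysconfig/network-scripts/ifcfg-eth0
#   render_ifcfg eth1 > /etc/sysconfig/network-scripts/ifcfg-eth1
#
# Switching from NetworkManager to the legacy network service:
#   systemctl stop NetworkManager
#   systemctl disable NetworkManager
#   systemctl enable network
#   systemctl start network
```

Printing to standard output rather than writing the file directly keeps the sketch safe to run on a machine that is not yet meant to be reconfigured.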

Next, run the update command on all nodes to bring the installed packages up to date before OpenStack is installed:

[root@controller ~]# yum update

[root@compute1 ~]# yum update

[root@compute2 ~]# yum update
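If you prefer to drive the update from a single shell, a loop over the nodes is one option. This is only a sketch: the helper name update_nodes is hypothetical, it assumes root SSH access to every node, and it adds the -y flag because yum cannot ask for confirmation in a non-interactive SSH session.

```shell
# update_nodes is a hypothetical helper that runs "yum -y update" on each
# node in turn and stops at the first failure.
# SSH can be overridden (e.g., SSH=echo) for a dry run.
update_nodes() (
    ssh_cmd=${SSH:-ssh}
    for node in "$@"; do
        echo "== updating $node =="
        $ssh_cmd "root@$node" yum -y update || exit 1
    done
)

# Dry run with the node addresses from this article:
#   SSH=echo update_nodes 192.168.2.34 192.168.2.35 192.168.2.36
```

The dry run only prints the commands that would be sent to each node, which is a cheap way to check the node list before touching any system.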

Packstack Installation

On CentOS 7, the Extras repo, which is enabled by default, provides the RPM that enables the OpenStack Pike repository:

[root@controller ~]# yum install centos-release-openstack-pike

Once again, you need to update the Controller node to fetch and install the newest packages from the Pike RDO (RPM distribution of OpenStack) repo:

[root@controller ~]# yum update

Once you have the OpenStack Pike repository enabled, enter

[root@controller ~]# yum install openstack-packstack

to install the Packstack RPM package from this repository.

Answer File

The Packstack installer uses the so-called Answer file (answers.txt), which includes all the parameters needed for OpenStack deployment. Although an Answer file is not required for an all-in-one installation, it is mandatory for a multinode deployment.

To generate a preliminary version of the Answer file, including the parameter default values, enter the command:

[root@controller ~]# packstack --gen-answer-file=/root/answers.txt

Some of the generated parameter default values will need to be modified to your needs, so you should edit the /root/answers.txt file and modify the parameters as in Listing 1 for the test installation. Table 1 has a brief description of the modified parameters.

Table 1: Modified answers.txt Parameters

| Parameter | Action |
|---|---|
| CONFIG_HEAT_INSTALL | Install Heat Orchestration service with the OpenStack environment. |
| CONFIG_NTP_SERVERS | NTP servers needed by the message broker (RabbitMQ, Qpid) for internode communication. |
| CONFIG_CONTROLLER_HOST | Management IP address of the Controller node. |
| CONFIG_COMPUTE_HOSTS | Management IP addresses of the Compute nodes (comma-separated list). |
| CONFIG_NETWORK_HOSTS | Management IP address of the Network node. |
| CONFIG_KEYSTONE_ADMIN_USERNAME | Keystone admin username required for the OpenStack REST API and Horizon dashboard access. |
| CONFIG_KEYSTONE_ADMIN_PW | Keystone admin password required for the OpenStack REST API and Horizon dashboard access. |
| CONFIG_NEUTRON_L3_EXT_BRIDGE | OVS bridge name for external traffic; if you use a provider network for external traffic (assign floating IPs from the provider network), you can also enter the value … |
| CONFIG_NEUTRON_ML2_TYPE_DRIVERS | Network types to be used in this OpenStack installation. |
| CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES | Default network type to be used for the tenant network creation. |
| CONFIG_NEUTRON_ML2_VLAN_RANGES | VLAN tagging range, along with the physical network (physnet), on which the VLAN networks will be created (physnet is required for flat and VLAN networks); if the physnet has no VLAN tagging assigned, networks created on this physnet will have flat type. |
| CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS | Physnet-to-bridge mapping (specifying to which OVS bridges the physnets will be attached). |
| CONFIG_NEUTRON_OVS_BRIDGE_IFACES | OVS bridge-to-interface mapping (specifying which interfaces will be connected as OVS ports to the particular OVS bridges). |
| CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE | OVS bridge interface that will be connected to the integration bridge (…). |
| CONFIG_PROVISION_DEMO | Provision a demo project tenant. |
| CONFIG_PROVISION_TEMPEST | Create an OpenStack integration test suite. |
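The VLAN range syntax is the least obvious of these values, so the following sketch spells out how an entry with and without a tag range is read according to the description in Table 1. The helper name describe_vlan_ranges is hypothetical, and the input is the value used for the test installation.

```shell
# describe_vlan_ranges is a hypothetical helper that explains each entry of
# a CONFIG_NEUTRON_ML2_VLAN_RANGES value: "name:min:max" denotes a physnet
# with a VLAN tag range, a bare "name" a physnet without tagging (flat).
describe_vlan_ranges() (
    # Split the comma-separated list into one entry per line, then split
    # each entry on ":" into the physnet name and the optional tag range.
    printf '%s\n' "$1" | tr ',' '\n' |
    while IFS=: read -r physnet vmin vmax; do
        if [ -n "$vmin" ] && [ -n "$vmax" ]; then
            echo "$physnet: vlan, tags $vmin-$vmax"
        else
            echo "$physnet: flat (no VLAN range)"
        fi
    done
)

describe_vlan_ranges "physnet1:1100:1199,physnet0"
```

For the test installation, this reports physnet1 as a VLAN network with tags 1100 to 1199 and physnet0 as a flat network.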

Listing 1: answers.txt Modifications

CONFIG_HEAT_INSTALL=y

CONFIG_NTP_SERVERS=time1.google.com,time2.google.com

CONFIG_CONTROLLER_HOST=192.168.2.34

CONFIG_COMPUTE_HOSTS=192.168.2.35,192.168.2.36

CONFIG_NETWORK_HOSTS=192.168.2.34

CONFIG_KEYSTONE_ADMIN_USERNAME=admin

CONFIG_KEYSTONE_ADMIN_PW=password

CONFIG_NEUTRON_L3_EXT_BRIDGE=br-ex

CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vlan,flat

CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vlan

CONFIG_NEUTRON_ML2_VLAN_RANGES=physnet1:1100:1199,physnet0

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-eth1,physnet0:br-ex

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=br-eth1:eth1,br-ex:eth0

CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE=br-eth1

CONFIG_PROVISION_DEMO=n

CONFIG_PROVISION_TEMPEST=n
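Rather than editing the generated file by hand, the modifications in Listing 1 could also be applied from the shell. The following is a minimal sketch: set_answer is a hypothetical helper, not part of Packstack, and it assumes that values contain no |, &, or backslash characters, which holds for every value in Listing 1.

```shell
# set_answer is a hypothetical helper: it sets KEY=VALUE in an answer file,
# replacing an existing KEY= line or appending one if the key is absent.
# Assumption: VALUE contains no "|", "&", or "\" (sed would misread them).
# Usage: set_answer FILE KEY VALUE
set_answer() (
    file=$1; key=$2; value=$3
    if grep -q "^${key}=" "$file"; then
        # Write to a temporary file first so a failed sed cannot truncate FILE.
        tmp=$(mktemp) || exit 1
        sed "s|^${key}=.*|${key}=${value}|" "$file" > "$tmp" &&
            cat "$tmp" > "$file"
        status=$?
        rm -f "$tmp"
        exit "$status"
    else
        echo "${key}=${value}" >> "$file"
    fi
)

# Example:
#   set_answer /root/answers.txt CONFIG_HEAT_INSTALL y
#   set_answer /root/answers.txt CONFIG_COMPUTE_HOSTS 192.168.2.35,192.168.2.36
```

Scripting the changes makes the deployment repeatable: the same calls can be replayed against a freshly generated answer file.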

OpenStack Deployment

Once the Answer file is ready, you can proceed with the deployment. To avoid timeout errors during the deployment, it is sometimes worth running the deployment command with an extended timeout. The default --timeout value is 300 seconds, which I decided to extend to 600 seconds:

[root@controller ~]# packstack --answer-file=/root/answers.txt --timeout=600

The installation takes approximately 45 minutes, although the exact time depends heavily on the hardware used. During this process, the installer prompts for the SSH password for each OpenStack node. After the successful deployment, you should see the final message shown in Listing 2.
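Because the installer contacts every node over SSH, it can help to list the addresses the Answer file names before you start. The sketch below only reads the three host parameters described in Table 1; list_deploy_hosts is a hypothetical helper name.

```shell
# list_deploy_hosts is a hypothetical helper that prints every distinct node
# address named in the host parameters of an answer file, one per line.
# Usage: list_deploy_hosts FILE
list_deploy_hosts() (
    grep -E '^CONFIG_(CONTROLLER_HOST|COMPUTE_HOSTS|NETWORK_HOSTS)=' "$1" |
        cut -d= -f2 |     # keep only the value
        tr ',' '\n' |     # one address per line
        sed '/^$/d' |     # skip parameters left empty
        sort -u           # the Controller is listed twice (also Network node)
)

# Example:
#   list_deploy_hosts /root/answers.txt
```

With the values from Listing 1, the result is the three node addresses of the test installation, each listed once.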

Listing 2: Successful Deployment

**** Installation completed successfully ******

Additional information:

* File /root/keystonerc_admin has been created on OpenStack client host 192.168.2.34. To use the command line tools you need to source the file.

* To access the OpenStack Dashboard browse to http://192.168.2.34/dashboard .

Please, find your login credentials stored in the keystonerc_admin in your home directory.

* The installation log file is available at: /var/tmp/packstack/20180122-012409-uNDnRi/openstack-setup.log

* The generated manifests are available at: /var/tmp/packstack/20180122-012409-uNDnRi/manifests

If the critical parameters in the Answer file were prepared according to your requirements, a successful installation means that no further post-deployment configuration is needed on any node.

Post-Deployment Verification

Before you start working with your cloud, you should briefly verify its functionality and operation by opening a web browser and entering the Controller's IP address, as specified in the installation output of Listing 2 (i.e., http://192.168.2.34/dashboard), to determine that the Horizon dashboard is accessible. If the dashboard is operational, you should see the Horizon login screen (Figure 2), where you can use the credentials set in the answers.txt file (i.e., admin/password) to log in.
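The same reachability check can be run without a browser. This sketch assumes that curl is installed on the machine you test from; dashboard_status is a hypothetical helper name, and the status code you see depends on how Horizon redirects to its login page.

```shell
# dashboard_status is a hypothetical helper that prints the HTTP status code
# returned for the Horizon dashboard URL on the given host.
# CURL can be overridden for testing; by default the real curl is used.
# Usage: dashboard_status HOST
dashboard_status() (
    curl_cmd=${CURL:-curl}
    # -s: silent, -L: follow redirects (e.g., to the login page),
    # -o /dev/null: discard the body, -w: print only the status code.
    $curl_cmd -s -L -o /dev/null -w '%{http_code}' "http://$1/dashboard"
)

# Example:
#   [ "$(dashboard_status 192.168.2.34)" = "200" ] && echo "Horizon is reachable"
```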

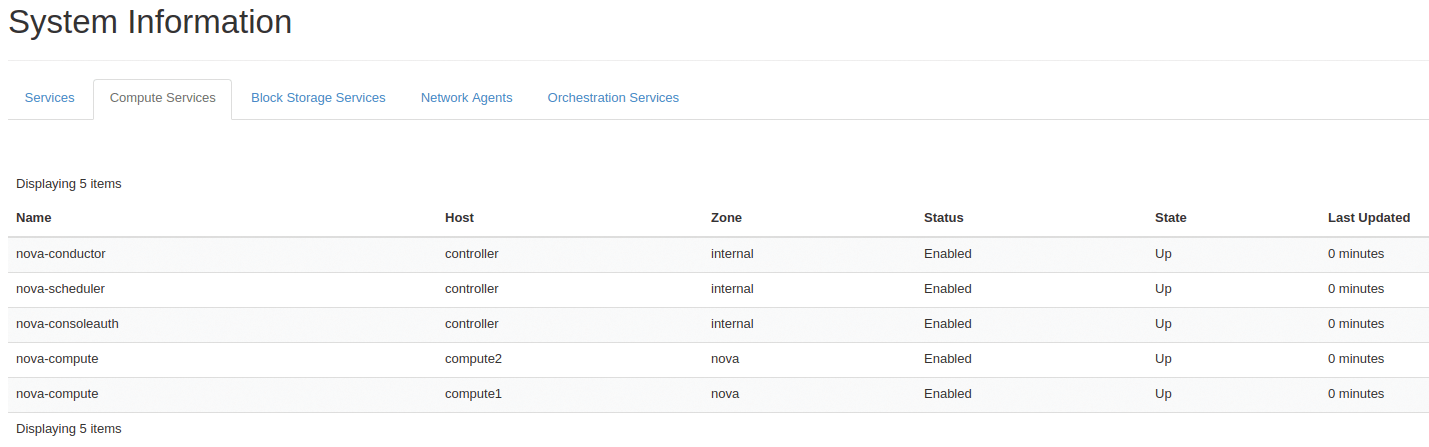

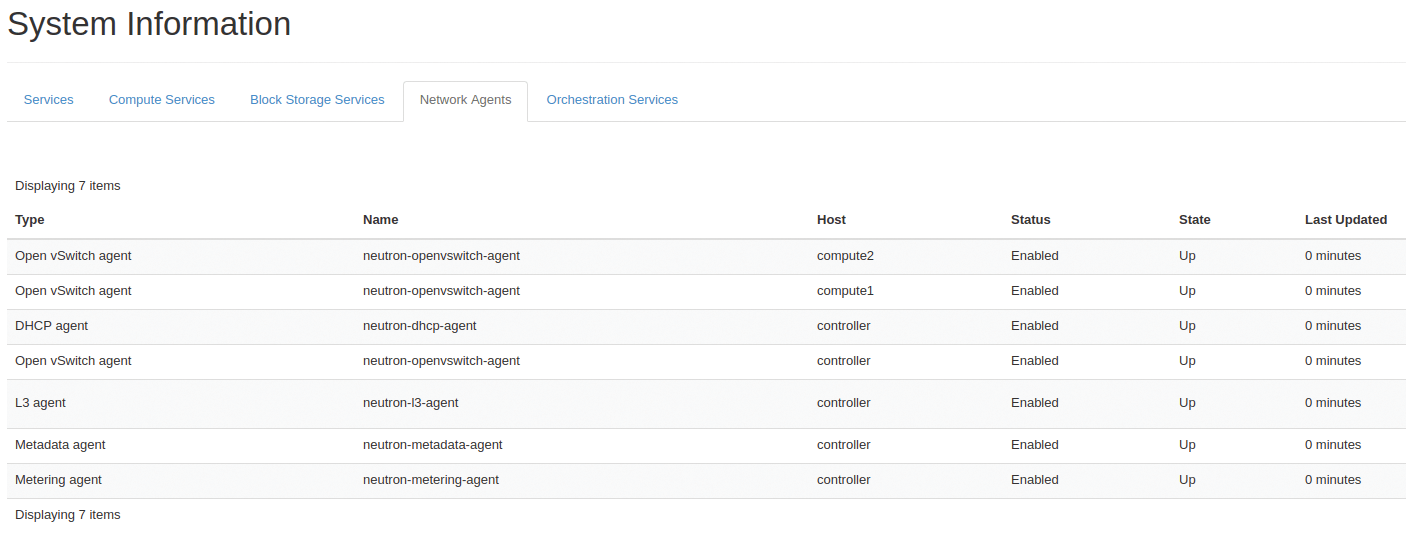

Once logged in, navigate to: Admin | System | System Information and inspect all the tabs to make sure everything is working as expected. The Compute Services tab lists Nova-related daemons running on particular nodes in the cloud. All services should be in Status Enabled and State Up (Figure 3). The Network Agents tab lists all Neutron services working on different nodes (Figure 4).

Next, check network configuration on the Controller node, which also runs Neutron network services. The management IP address is now assigned to the br-ex OVS bridge (as specified in the answers.txt file shown in Listing 1), and eth0 works as a back-end interface for this bridge (Listing 3). In fact, eth0 is a port connected to br-ex (Listing 4).

Listing 3: The Management IP and Back End

[root@controller ~]# ip addr show br-ex

10: br-ex: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN qlen 1000

link/ether d6:57:55:2f:b5:47 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.34/24 brd 192.168.2.255 scope global br-ex

valid_lft forever preferred_lft forever

inet6 fe80::d457:55ff:fe2f:b547/64 scope link

valid_lft forever preferred_lft forever

[root@controller ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:6b:55:21 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe6b:5521/64 scope link

valid_lft forever preferred_lft forever

Listing 4: Querying the Open vSwitch

[root@controller ~]# ovs-vsctl show

...

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Port "eth0"

Interface "eth0"

Port br-ex

Interface br-ex

type: internal

Bridge "br-eth1"

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "eth1"

Interface "eth1"

Port "phy-br-eth1"

Interface "phy-br-eth1"

type: patch

options: {peer="int-br-eth1"}

Port "br-eth1"

Interface "br-eth1"

type: internal

ovs_version: "2.7.3"

The br-eth1 OVS bridge was created to handle internal traffic, and the eth1 interface is connected as a port to br-eth1, acting as its back-end interface.
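Instead of reading through the full ovs-vsctl show output, the bridge-to-interface mapping can be checked directly with the list-ports subcommand. The helper name port_on_bridge is hypothetical; the sketch assumes it runs as root on a node where Open vSwitch is installed.

```shell
# port_on_bridge is a hypothetical helper that succeeds if PORT is attached
# to the OVS bridge BRIDGE. OVS_VSCTL can be overridden for testing.
# Usage: port_on_bridge BRIDGE PORT
port_on_bridge() (
    ovs=${OVS_VSCTL:-ovs-vsctl}
    # "ovs-vsctl list-ports BRIDGE" prints one port name per line;
    # grep -x matches whole lines only, -F treats the name literally.
    $ovs list-ports "$1" | grep -qxF "$2"
)

# Example, matching the bridge-to-interface mapping in Listing 1:
#   port_on_bridge br-ex eth0   && echo "eth0 is a port on br-ex"
#   port_on_bridge br-eth1 eth1 && echo "eth1 is a port on br-eth1"
```

On the Compute nodes, only the br-eth1 check is expected to succeed.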

Packstack does not create external bridges (br-ex) on Compute nodes and does not modify their management IP settings, either, because they do not normally participate in external traffic outside the cloud infrastructure. Packstack only creates br-eth1 OVS bridges to handle internal traffic.

The Keystone Credentials File

During deployment, Packstack created the file /root/keystonerc_admin with admin credentials that let you manage your OpenStack environment from the command line, which, in most cases, is faster than working in the web GUI dashboard. To source the file and import admin credentials to your session variables, enter:

[root@controller ~]# source /root/keystonerc_admin

[root@controller ~(keystone_admin)]#

In this way, you avoid being prompted for authentication each time you want to execute the command as the OpenStack admin. Now you can obtain some information about your OpenStack installation from the command line, such as verifying the Compute nodes (Listing 5), displaying a brief host-service summary (Listing 6), and displaying installed services (Listing 7).
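In scripts, it is worth confirming that the credentials were actually imported before calling the client. The sketch below assumes that keystonerc_admin sets the usual OS_USERNAME, OS_PASSWORD, and OS_AUTH_URL client variables; the helper name require_os_env is hypothetical.

```shell
# require_os_env is a hypothetical helper that checks whether the OpenStack
# client variables are present in the current shell session. It prints the
# names of missing variables to stderr and returns non-zero if any is unset.
require_os_env() (
    missing=""
    for var in OS_USERNAME OS_PASSWORD OS_AUTH_URL; do
        # eval reads the variable whose name is stored in $var.
        eval "val=\${$var:-}"
        [ -n "$val" ] || missing="$missing $var"
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing" >&2
        exit 1
    fi
)

# Example:
#   . /root/keystonerc_admin && require_os_env && openstack service list
```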

Listing 5: Verifying the Compute Nodes

[root@controller ~(keystone_admin)]# openstack hypervisor list
+----+---------------------+-----------------+--------------+-------+
| ID | Hypervisor Hostname | Hypervisor Type | Host IP      | State |
+----+---------------------+-----------------+--------------+-------+
|  1 | compute2            | QEMU            | 192.168.2.36 | up    |
|  2 | compute1            | QEMU            | 192.168.2.35 | up    |
+----+---------------------+-----------------+--------------+-------+
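For scripted checks, the table layout is awkward to parse, so the client's value formatter is a better fit. The following sketch assumes the admin credentials have been sourced; down_hypervisors is a hypothetical helper name.

```shell
# down_hypervisors is a hypothetical helper that prints the hostname of every
# hypervisor whose state is not "up". OPENSTACK can be overridden for testing.
down_hypervisors() (
    os=${OPENSTACK:-openstack}
    # -f value prints bare, space-separated columns; -c selects the columns.
    $os hypervisor list -f value -c "Hypervisor Hostname" -c State |
        awk '$2 != "up" { print $1 }'
)

# Example:
#   [ -z "$(down_hypervisors)" ] && echo "all Compute nodes are up"
```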

Listing 6: Host-Service Summary

[root@controller ~(keystone_admin)]# openstack host list
+------------+-------------+----------+
| Host Name  | Service     | Zone     |
+------------+-------------+----------+
| controller | conductor   | internal |
| controller | scheduler   | internal |
| controller | consoleauth | internal |
| compute2   | compute     | nova     |
| compute1   | compute     | nova     |
+------------+-------------+----------+

Listing 7: Installed Services

[root@controller ~(keystone_admin)]# openstack service list
+----------------------------------+------------+----------------+
| ID                               | Name       | Type           |
+----------------------------------+------------+----------------+
| 185c2397ccfd4b1ab2472eee8fac1104 | gnocchi    | metric         |
| 218326bd1249444da11afb93c89761ce | nova       | compute        |
| 266b6275884945d39dbc08cb3297eaa2 | ceilometer | metering       |
| 4f0ebe86b6284fb689387bbc3212f9f5 | cinder     | volume         |
| 59392edd44984143bc47a89e111beb0a | heat       | orchestration  |
| 6e2c0431b52c417f939dc71fd606d847 | cinderv3   | volumev3       |
| 76f57a7d34d649d7a9652e0a2475d96a | cinderv2   | volumev2       |
| 7702f8e926cf4227857ddca46b3b328f | swift      | object-store   |
| aef9ec430ac2403f88477afed1880697 | aodh       | alarming       |
| b0d402a9ed0c4c54ae9d949e32e8527f | neutron    | network        |
| b3d1ed21ca384878b5821074c4e0fafe | heat-cfn   | cloudformation |
| bee473062bc448118d8975e46af155df | glance     | image          |
| cf33ec475eaf43bfa7a4f7e3a80615aa | keystone   | identity       |
| fe332b47264f45d5af015f40b582ffec | placement  | placement      |
+----------------------------------+------------+----------------+

That's it! Your OpenStack cloud of three nodes is ready to go to work. Now you can create project tenants, create virtual network infrastructure inside tenants, launch instances, and so on.

Summary

Although OpenStack comprises mostly well-known and proven technologies, such as KVM and MariaDB, as a whole, it's still a new, promising, and dynamically developing technology. It has become a serious player on the global IT market, making it attractive and competitive against other cloud technologies and commercial virtualization solutions. Each version of OpenStack gains more features and functions, and thus more users.

In this article, I showed how to install a sample, proof-of-concept RDO OpenStack Pike environment for demonstration purposes. Although I did not address OpenStack scalability here, the deployment could easily be extended by rerunning Packstack with a modified answers.txt file, without any outage or maintenance window, proving the beauty and power of the cloud software.