Keeping the software in Docker containers up to date

Inner Renewal

For a few months now, the hype around Docker has died down somewhat, but not because fewer people are using the product. Quite the opposite: The calm has arrived because Docker is now part of the IT mainstream. Production setups rely as a matter of course on the software that breathed new life into containers under Linux and spurred other products, such as Kubernetes.

From an admin's point of view, Docker containers have much to recommend them: They can be operated with reduced permissions and thus provide a barrier between a service and the underlying operating system. They are easy to handle and easy to replace. They save work when it comes to maintaining the system on which they run: Software vendors provide finished containers that are ready to run immediately. If you want to roll out new software, you download the corresponding container and start it – all done. Long installation and configuration steps with RPM, dpkg, Ansible, and other tools are no longer necessary. All in all, containers offer noticeable added value from the sys admin's perspective.

Not Everything Is Better

Where there is light, there is shadow, and Docker is no exception. When new software versions are released, administrators often have a vested interest in using the new versions. Security updates are an obvious problem: You will want to repair a critical vulnerability in Apache or Nginx, regardless of whether the service is running on bare metal or inside a Docker container.

New features are also important. When the software you use finally gets the one feature you have been waiting for over months or years, you'll want to use it as quickly as possible. The question then arises as to how you can implement an update when the program in question is part of a Docker container.

Docker-based setups do allow you to react quickly to the release of new programs. Anyone used to working with long-term support (LTS) distributions knows that they essentially run a software museum and have sacrificed the innovative power of their setup on the altar of supposed stability. They are used to new major releases of important programs not being available until the next update to a new distribution version, and by then, they will already be quite mature.

If you use Docker instead, you can still run the basic system on an LTS distribution, but further up in the application stack you have far more freedom and can simply replace individual components of your environment.

No matter what the reasons for updating applications running in Docker containers, as with normal systems, you need a working strategy for the update. Because running software in Docker differs fundamentally from operations without a container layer, the way updates are handled also differs.

Several options are available with containers, which I present in this article, but first, you must understand the structure of Docker containers, so you can make the right decisions when updating.

How Docker Containers Are Built

Most admins will realize that Docker containers – unlike KVM-based virtual machines – do not have a virtual hard drive and do not work like complete systems because the Docker world is based on kernel functions, such as cgroups or namespaces.

What looks like a standalone filesystem within a Docker container is actually just a folder residing in the filesystem of the Docker host. Similar to chroot, Docker uses kernel functions to ensure that a program running in a container only has access to the files within that container.

However, Docker containers comprise several layers, two of which need to be distinguished: the image layer and the container layer. When you download a prebuilt Docker image, you get the image layer. It contains all the parts that belong to the Docker image and is used in read-only mode. If you start a container with this image, a read-write layer, the container layer, is added.

If you use docker exec to open a shell in a running container and create a file with touch, you will not see a "permission denied" error, precisely because the container layer allows write access. The catch, though, is that this layer belongs to the container: When the modified container is removed, the changes made in it are irrevocably lost. If you later start a new container from the same base image, it will look exactly the same as it did right after the first download.
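The following shell sketch makes the two layers visible. It assumes a local Docker installation. The image `ubuntu:22.04` and the container name `layer-demo` are only examples. `docker diff` lists what has changed in a container's read-write layer compared with its image.

```shell
# Sketch: writes land in the container layer and vanish with the container.
# Image and container names are illustrative assumptions.
show_container_layer() {
  image="${1:-ubuntu:22.04}"
  # Start a long-running container on top of the read-only image layers
  docker run -d --name layer-demo "$image" sleep infinity
  # Writing succeeds because the container layer is read-write
  docker exec layer-demo touch /tmp/hello
  # List changes in the container layer (A = added, C = changed, D = deleted)
  docker diff layer-demo
  # Removing the container discards its read-write layer
  docker rm -f layer-demo
  # A fresh container from the same image does not contain the file
  docker run --rm "$image" test -e /tmp/hello || echo "/tmp/hello is gone"
}
```

Calling `show_container_layer` should report the added file in the `docker diff` output. It should then print that the file is gone in the fresh container.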

From the point of view of container evangelists, this principle makes sense: The idea of a container is that it is quickly replaceable by another, so if something needs to be changed in a container, it makes sense simply to replace the container.

Far too easily, container advocates forget that not every application on the planet follows the microservice mantra. Nginx and MariaDB are not divided into many services that arbitrarily scale horizontally and are natively cloud-capable. The approach of replacing a running container also works in this case, but requires more preparation and entails downtime.

Option 1: Fast Shot

The list of update variants for Docker containers starts with option 1: the simple approach of installing an update directly in the running container. If you use a container based on Ubuntu, CentOS, or another common Linux distribution, you typically have the usual set of tools, including rpm or dpkg, which the major distributors use to generate their container images.

That's why it's easy to install updates: Using docker exec, you start Bash in the running container and then run

apt-get update && apt-get upgrade

(in this example) to load updates into the container. However, you will soon run into the problem I referred to earlier in this article: The changes are not permanent. They also bloat the container's writable layer on the host system.
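As a sketch, the emergency update can also be run without an interactive shell. This assumes a Debian- or Ubuntu-based container, and the container name is a placeholder. `-u root` is needed if the image runs its service as an unprivileged user. Afterward, `docker ps --size` shows how much data now sits in the writable container layer.

```shell
# Sketch: emergency package update inside a running Debian/Ubuntu-based container.
hotfix_container() {
  name="$1"   # placeholder container name
  # Run apt non-interactively as root inside the container
  docker exec -u root "$name" sh -c \
    'apt-get update && DEBIAN_FRONTEND=noninteractive apt-get -y upgrade'
  # The SIZE column shows the data held in the container's writable layer
  docker ps --size --filter "name=$name"
}
```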

However, such a process can make sense. For example, a zero-day vulnerability might appear in a central service while exploits are already circulating in the wild. Updating the application inside the running container then buys you a short breather in which to schedule the proper update. In any case, if you install updates for distribution packages within a container, you should also update the base images on your own system at the same time.

In some cases, updating a service inside the container is not technically possible. Anyone who has built a Docker container so that it defines the service (e.g., MariaDB) as the ENTRYPOINT or CMD in the Dockerfile has a problem. To load the updated binaries, the service would have to be restarted. However, as soon as its process exits in this kind of container, the entire container stops immediately. From Docker's point of view, the program in question is the container's main process (PID 1), without which the container does not run.
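To find out whether the service itself is the container's main process, you can look at PID 1 inside the container. This sketch assumes the image ships `cat`, as most distribution-based images do. The container name is a placeholder.

```shell
# Sketch: print the command line of PID 1 inside a running container.
show_pid1() {
  name="$1"   # placeholder container name
  # /proc/1/cmdline separates arguments with NUL bytes; turn them into spaces
  docker exec "$name" cat /proc/1/cmdline | tr '\0' ' '
  echo
  # For comparison, show the ENTRYPOINT and CMD the container was configured with
  docker inspect --format 'entrypoint={{json .Config.Entrypoint}} cmd={{json .Config.Cmd}}' "$name"
}
```

If the first line names the database server itself (e.g., `mariadbd`), restarting the service means restarting the container.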

For all other scenarios except serious security bugs that immediately force an update, the urgent advice is to keep your hands off this option. It contradicts the design principles of containers and ultimately does not lead to the desired sustainable results.

Option 2: Fast Shot with Glue

Intended more as a cautionary example, I'll also mention option 2, which builds directly on option 1: updating containers on the fly and then saving the result as an additional image layer. Docker does offer this option. If you change a container on the fly and want to save the changes permanently, the docker commit command creates a new local image from the container. You can then use that image to start a new container.

If you install updates in a container as described and then run docker commit, you have saved the changes permanently in the new image. Theoretically, this also avoids the problem of being unable to restart the service in the container without exiting the container – you stop the old container and start the new one, so the downtime is very short.
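For illustration only, this is roughly what option 2 looks like. All names and the tag are hypothetical. `docker commit` captures the container's filesystem changes. Host-side run options, such as published ports and volume mounts, are not part of the image and have to be repeated by hand. That is one more way the result drifts away from its sources.

```shell
# Cautionary sketch of option 2: freeze a hand-patched container into a new image.
commit_and_swap() {
  old="$1"        # running, hand-patched container (placeholder)
  new_image="$2"  # e.g. local/nginx:patched (hypothetical tag)
  new_name="$3"
  # Snapshot the container's filesystem changes as a new image
  docker commit "$old" "$new_image"
  docker stop "$old"
  # Published ports and volume mounts are not stored in the image; add them here
  docker run -d --name "$new_name" "$new_image"
}
```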

From an administrative point of view, however, this scenario is a nightmare, because it makes container management almost completely impossible. An updated single container differs from the other containers, and in the worst case, it will behave differently. The advantage of a uniform container fleet that can be centrally controlled is therefore lost.

This situation gets even worse if you perform the described stunt several times: The result is a chaotic container conglomerate that can no longer be recreated from well-defined sources. The entire process is totally contrary to the operational concept of containers because it torpedoes the principle of the immutable underlay (i.e., the principle that the existing setup can be automated and cleanly reproduced from its sources at any time).

Although option 1 can still be used in emergencies, admins are well advised not even to consider option 2 in their wildest dreams.

Prebuilt Containers

Those of you who don't want to build your Docker containers yourselves (the subject of option 3) might find a useful alternative in the Docker containers offered by the large projects. Following the example of distributors such as Canonical with Ubuntu, it is now common practice for providers of various programs to publish their work on the Internet as ready-to-use Docker containers. This variant is not perfect, because the admin has to trust the authors of the respective software, but it does save some work compared with doing it yourself.

Importantly, certain quality standards have emerged, and they are given due consideration in most projects. One example is the Docker container offered by the Prometheus project, which only contains the Prometheus service and can be controlled by parameters passed in at startup.
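As an illustration, the following sketch starts the image published as `prom/prometheus` and passes options on the command line. The flags are regular Prometheus server options. The host path of the configuration file, the volume name, and the 15-day retention period are assumptions. Arguments after the image name replace the image's default CMD, so the configuration and storage paths are given explicitly.

```shell
# Sketch: run the upstream Prometheus image and configure it via startup flags.
run_prometheus() {
  config_file="$1"   # absolute path to a prometheus.yml on the host (assumption)
  docker run -d --name prometheus -p 9090:9090 \
    -v "$config_file:/etc/prometheus/prometheus.yml:ro" \
    -v prometheus-data:/prometheus \
    prom/prometheus \
    --config.file=/etc/prometheus/prometheus.yml \
    --storage.tsdb.path=/prometheus \
    --storage.tsdb.retention.time=15d
}
```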

The sources, especially the Dockerfile on which the container is based, are available for download from the Prometheus project's GitHub repository. In case of doubt, you can download this Dockerfile, examine it, and, if necessary, adapt it to your requirements (Figure 1).

Admins should definitely keep their fingers off container images on Docker Hub whose origin cannot be determined. These infamous black boxes may or may not work, and they are practically impossible to troubleshoot. Anyone who relies on prebuilt containers needs to be sure of the quality of the developers' work and only then take action.

This approach has another drawback: Not all software vendors update their Docker images at the speed you might need. Although distributors roll out updates quickly, several days can pass before an updated Docker image is available for the respective applications.
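To check whether a vendor has published a newer image, you can compare the image ID a container runs with the ID of the freshly pulled tag. This is a sketch, and the container and image names are placeholders.

```shell
# Sketch: report whether a container runs an older image than the one now in the registry.
image_is_current() {
  container="$1"   # placeholder container name
  image="$2"       # e.g. prom/prometheus:latest
  docker pull -q "$image" > /dev/null
  running=$(docker inspect --format '{{.Image}}' "$container")
  latest=$(docker image inspect --format '{{.Id}}' "$image")
  if [ "$running" = "$latest" ]; then
    echo "$container is up to date"
  else
    echo "$container runs an outdated image"
    return 1
  fi
}
```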

Option 3: Build-Your-Own Container

If you want to be happy with your containers in the long term, you should think about your operating concept early on; then, option 3 is ideal for application and security updates: building containers yourself.

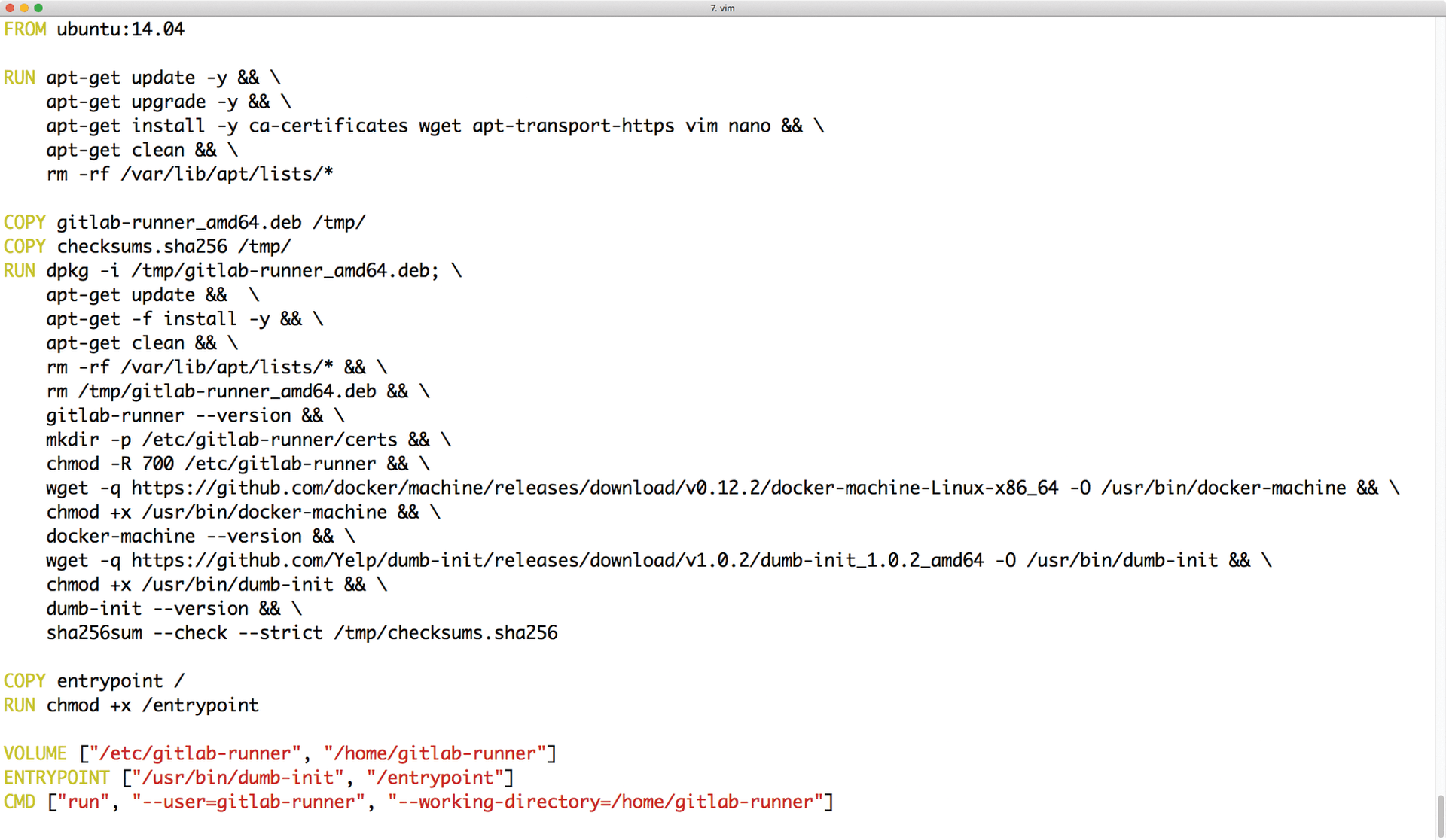

Creating a Docker container is not particularly complicated: All you need is the docker build command and a Dockerfile. The syntax of the Dockerfile is uncomplicated. Essentially, it contains elementary instructions that tell Docker how the finished container should look. The Docker guide is useful and explains the most important details [1].
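A minimal sketch of this workflow passes a Dockerfile to `docker build` on standard input. It builds an Nginx image on top of Ubuntu's official base image. The tag and the choice of Nginx are illustrative assumptions. With `-` as the build context, Docker receives only the Dockerfile, so instructions such as COPY are not usable in this form. `--pull` makes Docker check for a newer version of the base image.

```shell
# Sketch: build a small Nginx image from Ubuntu's official base image.
build_nginx_image() {
  tag="${1:-local/nginx:latest}"   # hypothetical tag
  docker build --pull -t "$tag" - <<'EOF'
FROM ubuntu:22.04
# Install only what the service needs and drop the apt package lists afterward
RUN apt-get update \
 && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends nginx \
 && rm -rf /var/lib/apt/lists/*
EXPOSE 80
# Keep nginx in the foreground so it remains the container's main process
CMD ["nginx", "-g", "daemon off;"]
EOF
}
```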

While Docker is processing the Dockerfile, it executes the instructions it describes and creates various image layers, all of which will later be available in read-only mode. A change of the image later on is only possible if you either build a new image or go for the very nasty hack of option 2. When preparing Dockerfiles, you should be very careful (Figure 2).
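To see which read-only layers an image consists of, and which Dockerfile instruction created each one, you can use `docker history`. The image name in this sketch is a placeholder.

```shell
# Sketch: list an image's layers (newest first) with the instruction that created each.
show_layers() {
  image="$1"   # placeholder image reference
  docker history --no-trunc --format '{{.Size}} {{.CreatedBy}}' "$image"
  # Number of filesystem layers referenced by the image
  docker image inspect --format '{{len .RootFS.Layers}}' "$image"
}
```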

Big distributions can help: They usually offer their systems as base images. If you want to build your own container based on Ubuntu, you therefore don't have to install an Ubuntu base system with debootstrap and then squeeze it into a container. It is sufficient to point to the Ubuntu base image in the Dockerfile, and Docker does the rest on its own.

Unlike with many images that other users have uploaded to Docker Hub, you do not increase your security risk this way. If you trust a regular Ubuntu installation, you have no reason to mistrust the finished container packaged by Ubuntu, because it contains the same packages.

Another good reason to rely on base images from distributors is that they are optimized for disk space and include only the necessary packages. If you use debootstrap, however, you automatically get many components that serve no sensible purpose in the context of a container. The difference between a DIY base image and the official Ubuntu image for Docker can quickly run to several hundred megabytes.

Automation Is Essential

A Dockerfile alone does not constitute a functioning workflow for handling containers in a meaningful way. Imagine, for example, that dozens of containers in a distributed setup are exposed to the public with a vulnerability like the OpenSSL disaster Debian caused a few years ago [2]. Because the problem lay in the OpenSSL library itself, admins needed to update OpenSSL across the board. Containers would not have been exempt, creating a huge amount of work.

In the worst case, admins face the challenge of using different DIY containers within the setup that are reduced to the absolutely essential components. All containers might be based on Ubuntu, but one container has Nginx, another has MariaDB, and a third runs a custom application.

If it turns out that an important component has to be replaced in all these containers, you would be breaking a sweat despite having DIY containers based on Dockerfiles. Even if new containers with updated packages can be created ad hoc from the existing Dockerfiles, the process will take a long time if it is executed manually.
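Even without a CI system, a short loop takes the manual legwork out of such a mass rebuild. The sketch assumes a hypothetical layout in which every image has its own directory under `images/` containing a Dockerfile, and a placeholder registry. It also assumes that the current date is an acceptable tag. `--pull` fetches the newest base image. `--no-cache` forces steps such as `apt-get upgrade` to run again instead of reusing cached layers that still contain the old packages.

```shell
# Sketch: rebuild and push every image from its Dockerfile, e.g., after an OpenSSL fix.
# Assumed (hypothetical) layout: ./images/<name>/Dockerfile
rebuild_all() {
  registry="$1"              # e.g. registry.example.com/ops (placeholder)
  tag=$(date +%Y%m%d)
  for dir in images/*/; do
    [ -f "${dir}Dockerfile" ] || continue
    name=$(basename "$dir")
    # --pull: newest base image; --no-cache: re-run package installs
    docker build --pull --no-cache -t "$registry/$name:$tag" "$dir" || return 1
    docker push "$registry/$name:$tag" || return 1
  done
}
```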

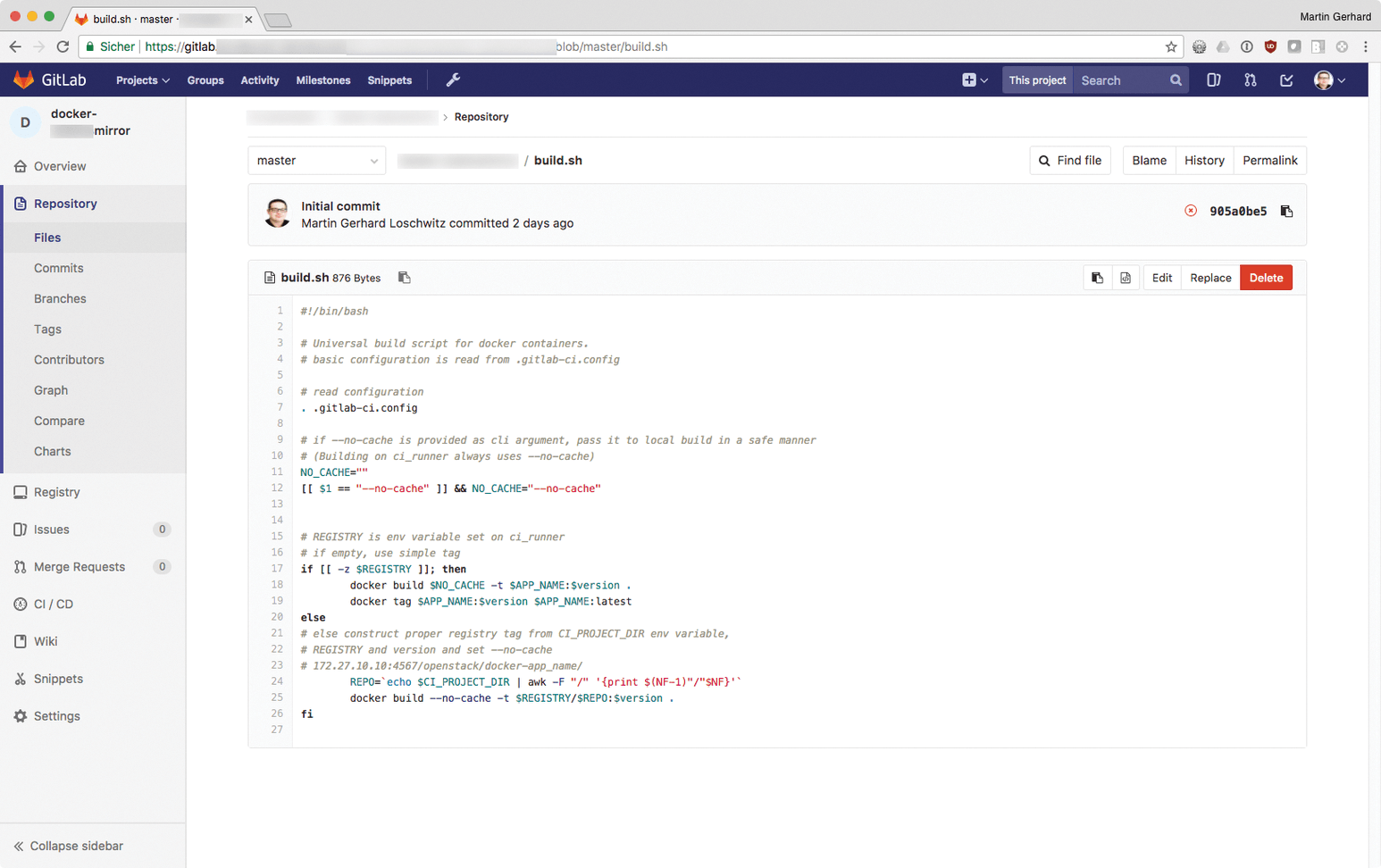

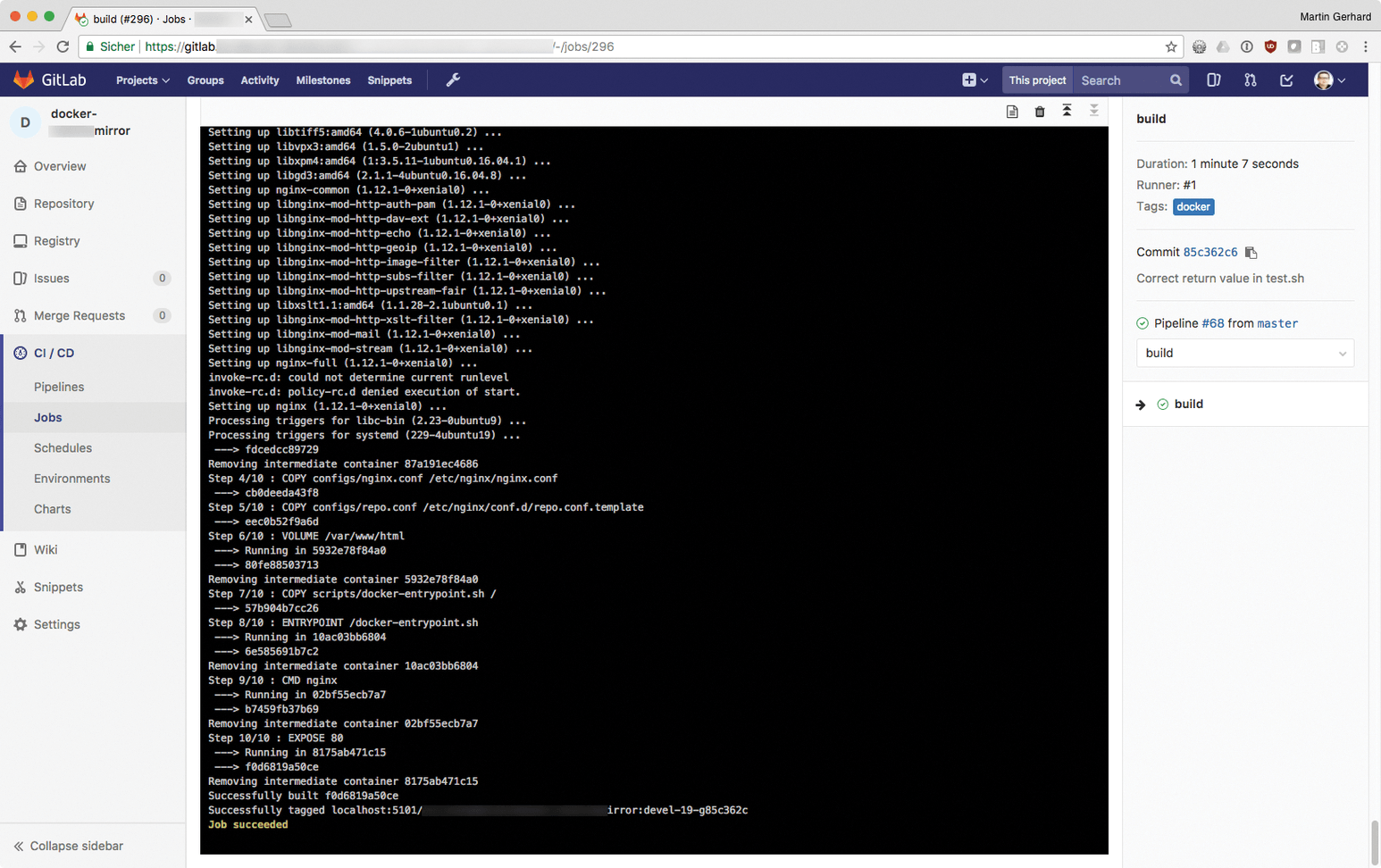

Whether you are handling application updates or security updates, it makes more sense to create an automatic workflow that generates Docker images at the push of a button. A previous ADMIN article discussed this approach with a focus on the community edition of GitLab [3]. GitLab has its own Docker registry, and its continuous integration/continuous delivery (CI/CD) functions can fully automate the process of building containers. At the end of the process, GitLab provides the new images in its registry. You then just need to download them to the target system and replace the old containers with the new ones. Done (Figures 3 and 4).
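At its core, a build job in such a pipeline runs only a few Docker commands. The sketch assumes it executes inside a GitLab CI job with Docker available. `CI_REGISTRY`, `CI_REGISTRY_USER`, `CI_REGISTRY_PASSWORD`, `CI_REGISTRY_IMAGE`, and `CI_COMMIT_SHORT_SHA` are GitLab's predefined CI/CD variables. Wrapping the steps in a function is purely for illustration. In `.gitlab-ci.yml`, the same commands would go into the job's `script` section.

```shell
# Sketch: build-and-push steps of a GitLab CI job (run inside the job's environment).
ci_build_and_push() {
  image="$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
  # Log in to the project's registry; --password-stdin keeps the password out of argv
  printf '%s' "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY" || return 1
  docker build --pull -t "$image" . || return 1
  docker push "$image"
}
```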

Orchestration Is Even Better

If you handle considerable parts of your workflow with containers, you can hardly avoid a fleet manager like Docker Swarm or Kubernetes. It would be beyond the scope of this article to go into detail on how the various Kubernetes functions facilitate the process of updating applications in containers – in particular, the built-in redundancy functions that both Kubernetes and Swarm bring with them. However, the operation of large container fleets can soon become a pain without appropriate help, so you need to bear this topic in mind.
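To give one example of what a fleet manager adds, Docker Swarm can roll a service over to a new image one task at a time and roll back if the update fails. The service name, image, and timing values are placeholders in this sketch. In Kubernetes, `kubectl set image` on a Deployment triggers a comparable rolling update.

```shell
# Sketch: rolling update of a Docker Swarm service to a new image.
rolling_update() {
  service="$1"   # existing Swarm service (placeholder)
  image="$2"     # new image reference
  docker service update \
    --image "$image" \
    --update-parallelism 1 \
    --update-delay 30s \
    --update-failure-action rollback \
    "$service"
}
```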

Persistent Data and Rolling Updates

The last part of this article is reserved for a sensitive topic that, based on my own experience, many admins find difficult in everyday life – namely, persistent data from applications running in Docker containers. Persistent data also plays an important role in the context of application updates, and depending on the type of setup, they either make life much easier for the administrator or make it unnecessarily difficult.

Technically, the details are quite clear: The simplest way to bind persistent storage to a Docker container is to use Docker volumes. With Docker's default configuration, the volume data ends up under /var/lib/docker/volumes on the host. A named Docker volume is persistent and survives the deletion of the container to which it originally belonged.
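The following sketch shows this behavior with a named volume. The volume name `app-data` and the Ubuntu image are only examples. With the default local driver, `docker volume inspect` reports a mountpoint such as /var/lib/docker/volumes/app-data/_data. Unlike anonymous volumes, named volumes are not deleted by `--rm`.

```shell
# Sketch: a named volume outlives the container that wrote to it.
volume_demo() {
  docker volume create app-data
  # Where the default local driver keeps the data on the host
  docker volume inspect --format '{{.Mountpoint}}' app-data
  # First container writes a file and is removed afterward (--rm)
  docker run --rm -v app-data:/data ubuntu:22.04 sh -c 'echo hello > /data/greeting'
  # A second container still sees the data
  docker run --rm -v app-data:/data ubuntu:22.04 cat /data/greeting
}
```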

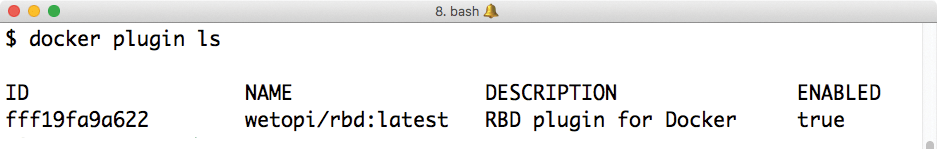

Different storage plugins can be used to connect other types of storage to Docker. For example, if you create a volume of the rbd-volume type in Docker, its data can be stored directly on a connected Ceph cluster (Figure 5). Even with the default local driver, though, updates can be considerably simplified. I explain this in the next section using MariaDB as an example.

Using Volumes for Dynamic Updates

Anyone running a Docker container with MariaDB will normally have a Docker volume for the data. From an operational point of view, this provides a decisive advantage for updates: You can prepare the new container with the new version of MariaDB in the background while the old container continues to run as before. In specific terms, this means you download a new base image of MariaDB or build your own container image, which you test thoroughly.

If it works as desired, stop the old container at a planned time and remove it so that the volume is no longer attached to it. Then connect the volume to the new container with the new MariaDB. Assuming you have ensured that the volume is mounted at the correct place in the new container's filesystem (i.e., where MariaDB expects its data), the downtime will be negligible.
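A sketch of this switchover follows. It assumes the container is called `mariadb` and its volume `mariadb-data`. Both names, the port mapping, and the image tag passed in are hypothetical. The official MariaDB image keeps its data in /var/lib/mysql. Depending on the version jump, MariaDB may also expect `mariadb-upgrade` to be run on the existing data afterward.

```shell
# Sketch: replace a MariaDB container while keeping its data volume.
# Container name, volume name, and port mapping are illustrative assumptions.
upgrade_mariadb() {
  new_image="$1"   # e.g. mariadb:<new-version>, tested beforehand
  # Pull first so the download does not add to the downtime
  docker pull "$new_image" || return 1
  # Downtime starts here: removing the old container releases the volume
  docker stop mariadb && docker rm mariadb || return 1
  # Mount the same volume where the official image expects its data
  docker run -d --name mariadb \
    -v mariadb-data:/var/lib/mysql \
    -p 3306:3306 \
    "$new_image"
}
```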

The persistent volume procedure offers another advantage: If you want to test with real data whether problems can be expected during an upgrade, you can create a clone of the existing Docker volume and connect it to the new container. This further reduces the risk of problems when you update the live system.
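One simple way to clone a local volume is to copy its contents with a throwaway container. This sketch uses the Alpine image for the copy, and the volume names are placeholders. For a consistent copy of database files, the source container should be stopped briefly, or you should use MariaDB's own dump or backup tools instead. Copying files from a running database can produce an inconsistent clone.

```shell
# Sketch: copy one Docker volume into a new one for testing against real data.
clone_volume() {
  src="$1"; dst="$2"   # placeholder volume names
  docker volume create "$dst" || return 1
  # cp -a preserves ownership and permissions; "/from/." also copies dotfiles
  docker run --rm -v "$src":/from:ro -v "$dst":/to alpine cp -a /from/. /to/
}
```

You could then start a candidate container with the new MariaDB version against the cloned volume on a different host port and test it without touching the live data.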

Conclusions

Rolling out security and application updates in containers is not a particularly difficult task if you have a clear concept. Tinkering with solutions that involve updating running containers is reserved for emergencies and should never be used longer than absolutely necessary. The ideal way is to build your containers and all the necessary parts yourself, with tools such as GitLab and Docker providing practical help.

If you don't want to do this, you can use the ready-made images from various software providers. When it comes to performing updates, they don't differ greatly from their DIY counterparts. However, you might have to wait a while until an updated version of the required software is available as a container.