Highly available storage virtualization

Always On

To ensure that companies have permanent access to their own data, many technologies for data storage and management have been established over the years. One solution is storage virtualization, which is a useful approach for coping with massive data growth. In this article, I provide an overview of basic technologies and explain how to implement highly available storage area network (SAN) scenarios.

Data Storage Virtualization

Much like server virtualization, data storage virtualization promises better utilization of resources, simplified central management, and increased data availability. Various technical approaches come together under the storage virtualization umbrella. Each adds a logical – virtual – layer to the storage environment that abstracts servers and applications from the actual physical storage, allowing this storage to be combined into larger areas or pools.

The server operating system itself offers a simple type of storage virtualization by grouping several individual physical hard drives or logical unit numbers (LUNs) into volume groups and creating logical volumes from them. The OS then addresses each of these logical volumes as a single virtual hard drive. This type of storage virtualization is very common, especially in Unix-style operating systems, which come standard with a Logical Volume Manager. For higher data availability, these volume groups can also be mirrored (RAID 1), which – assuming the corresponding SAN infrastructure is in place – keeps synchronous copies of the data at two different locations. However, centralized management, which allows all servers and the associated storage resources to be administered and monitored in one place, is typically not available.
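The extent mapping behind such logical volumes can be illustrated in a few lines. The following is a minimal Python sketch, not how any particular Logical Volume Manager is implemented: it assumes a linear (concatenated) allocation policy, and the class names and the method `create_linear_lv` are hypothetical. It shows how two LUNs of different sizes become one logical volume whose extents map onto both.

```python
from dataclasses import dataclass


@dataclass
class PhysicalVolume:
    name: str       # a disk or LUN as seen by the OS
    extents: int    # capacity in physical extents (LVM's default extent size is 4 MiB)
    used: int = 0   # extents already allocated


@dataclass
class VolumeGroup:
    pvs: list

    def free_extents(self) -> int:
        return sum(pv.extents - pv.used for pv in self.pvs)

    def create_linear_lv(self, size: int) -> list:
        """Allocate `size` extents, filling one PV after the other.

        Returns the mapping: logical extent index -> (PV name, physical extent).
        """
        if size > self.free_extents():
            raise ValueError("not enough free extents in the volume group")
        mapping = []
        for pv in self.pvs:
            while pv.used < pv.extents and len(mapping) < size:
                mapping.append((pv.name, pv.used))
                pv.used += 1
        return mapping


# Two LUNs of different sizes become one 6-extent logical volume
vg = VolumeGroup([PhysicalVolume("lun0", 3), PhysicalVolume("lun1", 5)])
lv = vg.create_linear_lv(6)
```

The OS only ever sees the logical volume; which LUN holds a given extent is resolved through the mapping.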

SAN as a Pioneer

More complex types of storage virtualization take place within a SAN, which provides the basis for high bandwidth and shared storage. Two fundamentally different technologies are used: out-of-band and in-band virtualization. With out-of-band virtualization, data and control information take different paths. However, the enormous complexity of such SAN solutions has prevented this approach from catching on in the market, and only very few solutions use it today. In comparison, many storage and virtualization manufacturers have in-band products in their portfolios. With in-band virtualization, data flows from the server to its disks (LUNs) exclusively through the storage virtualization layer, which is implemented as a storage server or virtualization appliance.
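The difference between the two approaches lies in which component sits in the data path. The following Python sketch models it with hypothetical classes (`InBandVirtualizer`, `OutOfBandMetadataServer`) and an invented block map; it is an illustration only, not any vendor's architecture. In the in-band case the virtualizer handles every read and write, whereas in the out-of-band case the host only asks a metadata server where a block lives and then writes to the array directly.

```python
class Backend:
    """Stands in for the physical arrays behind the virtualization."""

    def __init__(self):
        self.blocks = {}  # (array, block) -> data


class InBandVirtualizer:
    """In-band: every read and write passes through the virtualization layer."""

    def __init__(self, backend, block_map):
        self.backend = backend
        self.block_map = block_map  # virtual block -> (array, block)
        self.ios_handled = 0

    def write(self, vblock, data):
        self.ios_handled += 1
        self.backend.blocks[self.block_map[vblock]] = data

    def read(self, vblock):
        self.ios_handled += 1
        return self.backend.blocks[self.block_map[vblock]]


class OutOfBandMetadataServer:
    """Out-of-band: only answers mapping queries (control path); it never sees the data."""

    def __init__(self, block_map):
        self.block_map = block_map
        self.lookups = 0

    def resolve(self, vblock):
        self.lookups += 1
        return self.block_map[vblock]


def host_write_out_of_band(mds, backend, vblock, data):
    target = mds.resolve(vblock)    # control information: ask where the block lives
    backend.blocks[target] = data   # data path: write directly to the array


block_map = {0: ("array_a", 100), 1: ("array_b", 7)}  # hypothetical mapping
```

In the out-of-band model, the host itself must know how to use the mapping, which hints at why such solutions require special host software and are complex to run.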

Storage subsystems with built-in virtualization functionality are a slightly different form of in-band virtualization. In addition to many terabytes of internal disk capacity, these storage systems can virtualize other storage systems. This "external storage," connected over Fibre Channel or iSCSI, is presented to the server in exactly the same way as the capacity of the main system, so the server cannot tell that it is external.

All these solutions decouple the LUNs or volumes of the storage systems from the server; are capable of boosting performance through effective caching, pooling, or tiering across multiple disk arrays and disk types; and provide additional functions, such as standardized cloning, snapshots, encryption, and mirroring, uniformly for all virtualized storage resources. These solutions are particularly well suited for building high-availability (HA) storage across several locations.
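Tiering, one of the functions mentioned above, can be sketched as a periodic rebalancing that places the most frequently accessed blocks on the fastest disk type. This Python example is a simplified model under stated assumptions: two tiers (labeled "ssd" and "hdd"), a fast tier sized in blocks, and a plain access counter as the heat metric. Real products use their own, typically more elaborate, policies.

```python
from collections import Counter


class TieringPool:
    """Hypothetical two-tier pool: the hottest blocks live on the fast tier."""

    def __init__(self, fast_capacity_blocks: int):
        self.fast_capacity = fast_capacity_blocks
        self.access_counts = Counter()   # block -> number of accesses
        self.fast_tier = set()           # blocks currently placed on the fast tier

    def access(self, block: int) -> None:
        self.access_counts[block] += 1

    def rebalance(self) -> None:
        """Place the most frequently accessed blocks on the fast tier."""
        hottest = self.access_counts.most_common(self.fast_capacity)
        self.fast_tier = {block for block, _count in hottest}

    def tier_of(self, block: int) -> str:
        return "ssd" if block in self.fast_tier else "hdd"


pool = TieringPool(fast_capacity_blocks=2)
for block in [1, 1, 1, 1, 1, 2, 2, 2, 3]:
    pool.access(block)
pool.rebalance()
```

Because the virtualization layer owns the block mapping, such moves happen without the server noticing, regardless of which physical array provides each tier.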

Virtualized vs. Software-Defined

Software-defined storage (SDS) technology goes one step further. Typically, hardware-independent storage virtualization software is installed on each participating physical server; this software acts as a kind of hypervisor, bundling the servers' storage resources and orchestrating them centrally. With VMware vSAN or Windows Server 2016 with Storage Spaces Direct, such functions are already built into the hypervisor or operating system, which allows the storage resources of the individual servers to be completely decoupled from the hardware and grouped into pools. Services such as deduplication, compression, and data protection are also offered.

Erasure coding, which distributes data together with redundancy information across several nodes, ensures that the data is stored in a fail-safe manner. Unlike conventional SAN storage virtualization, SDS can also manage the local or directly attached hard drives of the individual servers. SDS solutions can even use the servers' unused RAM as a cache with extremely fast access times. As a relatively new virtualization technology, SDS is generally considered to have the greatest potential for the future. However, it remains to be seen to what extent this technology can also be used for highly heterogeneous server environments or I/O-intensive applications.
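The principle behind erasure coding can be shown with the simplest possible code: a single XOR parity chunk, as in RAID 5. The Python sketch below is an assumption for illustration; SDS products typically use replication or more general codes such as Reed-Solomon that tolerate several failures. The data is split into `k` chunks (one per node) plus one parity chunk, and any one lost chunk can be rebuilt from the remaining ones.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal chunks (zero-padded) and append one XOR parity chunk."""
    chunk_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(chunk_len * k, b"\0")
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(k)]
    return chunks + [reduce(xor_bytes, chunks)]


def recover(chunks: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing chunk (marked None) by XOR-ing all the others."""
    missing = [i for i, c in enumerate(chunks) if c is None]
    if len(missing) > 1:
        raise ValueError("single parity tolerates the loss of only one chunk")
    rebuilt = list(chunks)
    if missing:
        rebuilt[missing[0]] = reduce(xor_bytes, [c for c in chunks if c is not None])
    return rebuilt
```

With `k = 4`, the overhead is one extra chunk for every four data chunks, compared with a full second copy for mirroring; the price is that only one chunk may be lost at a time.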

Utilized and Uninterruptible

One of the biggest advantages of storage virtualization, beyond uniform management of all storage resources, is significantly better utilization of all connected storage systems and data areas. Without virtualization, reserve capacity has to be kept free on each individual storage system so that individual applications can grow spontaneously; with virtualization, these separate reserves are almost completely eliminated. Additionally, software functions such as deduplication and compression apply uniformly to all connected storage arrays or hard drives, opening up huge savings potential.
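Why pooled reserves are smaller can be shown with a short calculation. In the hypothetical Python sketch below, each array without virtualization must hold a reserve for its own worst-case growth, whereas a shared pool only needs to cover the worst-case growth of all arrays combined, because the applications rarely peak at the same time. The growth figures are invented for illustration.

```python
def separate_reserves(growth_by_period):
    """Without virtualization: each array reserves for its own worst-case growth."""
    per_array = zip(*growth_by_period)  # transpose: one tuple of growth values per array
    return sum(max(values) for values in per_array)


def pooled_reserve(growth_by_period):
    """With a shared pool: the reserve only has to cover the worst-case total growth."""
    return max(sum(period) for period in growth_by_period)


# Hypothetical growth in TB of three arrays (columns) over four periods (rows)
growth = [
    [10, 2, 1],
    [1, 12, 2],
    [2, 1, 9],
    [3, 3, 3],
]
```

With these figures, separate reserves add up to 31 TB, while the pool needs only 15 TB.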

Capacity is easily expanded by adding disks to existing storage systems or by adding new storage systems to the virtualization. Data migration (e.g., when replacing a complete array) then takes place within the solution without interruption. The virtualization itself can also grow by adding nodes or appliances during operation.
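How a migration can stay transparent to the hosts is sketched below in Python. The assumption is a hypothetical `VirtualVolume` class whose virtual extents point to backend arrays: migrating copies each extent and then switches its pointer, so hosts keep addressing the same virtual extents throughout. The sketch deliberately ignores writes that arrive during the copy; real solutions have to handle these, for example by mirroring an extent until the switch is complete.

```python
class VirtualVolume:
    """Hypothetical virtual volume whose extents can move between arrays while online."""

    def __init__(self, extent_map):
        self.extent_map = dict(extent_map)  # virtual extent -> array name
        self.arrays = {}                    # array name -> {virtual extent: data}

    def write(self, extent, data):
        self.arrays.setdefault(self.extent_map[extent], {})[extent] = data

    def read(self, extent):
        return self.arrays[self.extent_map[extent]][extent]

    def migrate(self, source, target):
        """Copy every extent from source to target, then repoint it; hosts see no change."""
        if source == target:
            return
        source_data = self.arrays.get(source, {})
        target_data = self.arrays.setdefault(target, {})
        for extent, array in self.extent_map.items():
            if array != source:
                continue
            if extent in source_data:
                target_data[extent] = source_data[extent]   # 1. copy
            self.extent_map[extent] = target                 # 2. switch the pointer
            source_data.pop(extent, None)                    # 3. free the old copy
```

After `migrate`, the old array holds no data for the volume and can be removed, while reads of the same virtual extents return the same contents.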

Precisely Calculating the Savings Potential

If a company plans to use SAN storage virtualization, it makes sense to compare the various vendors' solutions carefully, because the concepts often differ considerably and not all of them will fit your requirements. In addition to the purchase price, license costs have a major effect. The ability to extend the solution or upgrade it to a more powerful configuration during operation is just as much a must-have as support for the existing storage hardware. Support for the standard drivers of your operating systems is equally important, so that you do not need to install and maintain manufacturer-specific drivers across the board.

If technologies such as VMware VVols or VAAI are already in use, the virtualization solution must of course also support them. Manufacturers often promise unrealistic savings from storage virtualization, which later fail to materialize to the extent promised. Without precise data analysis in advance, reliable statements are rarely possible. Some providers analyze your data before a purchase and even offer savings guarantees for deduplication or compression, which makes it easy to compare acquisition costs with the savings potential.
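A rough first estimate of deduplication and compression potential can be obtained from a representative data sample. The following Python sketch is an assumption-laden simplification, not a vendor's analysis tool: it uses fixed-size 4 KiB chunks, SHA-256 fingerprints to find duplicates, and zlib as a stand-in for the array's compression algorithm. Results from such a sketch will differ from what a specific product achieves.

```python
import hashlib
import zlib
from typing import Tuple


def estimate_savings(sample: bytes, chunk_size: int = 4096) -> Tuple[float, float]:
    """Rough deduplication and compression ratios for a data sample."""
    chunks = [sample[i:i + chunk_size] for i in range(0, len(sample), chunk_size)]
    unique = {}
    for chunk in chunks:
        # Identical chunks produce identical fingerprints and are stored only once
        unique.setdefault(hashlib.sha256(chunk).digest(), chunk)
    dedup_ratio = len(chunks) / len(unique) if unique else 1.0
    unique_bytes = b"".join(unique.values())
    compressed = zlib.compress(unique_bytes)
    compression_ratio = len(unique_bytes) / len(compressed) if unique_bytes else 1.0
    return dedup_ratio, compression_ratio
```

Running such a sketch on several real samples gives a first sanity check of a vendor's figures before the vendor's own analysis.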

Five Nines for HA

High availability of your own data is essential for small and large companies today. Even a few hours of downtime for important applications can result in considerable costs or lost sales, not to mention the loss of even a few megabytes of data. These availability requirements are particularly important for a central SAN infrastructure with Fibre Channel and storage systems, which forms the backbone of the IT infrastructure. If parts of it fail, many servers and applications are often affected, and bringing all systems back online takes a huge amount of time and effort. The famous five nines (i.e., 99.999 percent availability) allow only around five minutes of downtime per year, which is practically equivalent to continuous availability.
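The five-nines figure follows from simple arithmetic: a year has about 525,960 minutes (365.25 days), and 0.001 percent of that is roughly 5.26 minutes. The short Python sketch below performs the same calculation for several availability levels.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes, counting leap years on average


def max_downtime_minutes(availability_percent: float) -> float:
    """Maximum downtime per year permitted by an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for target in (99.9, 99.99, 99.999):
    print(f"{target}% -> {max_downtime_minutes(target):.2f} minutes per year")
```

Each additional nine cuts the permitted downtime by a factor of ten.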

But what does a highly available storage infrastructure mean and what are its criteria? The German Federal Office for Information Security (BSI) describes these criteria in the paper "M 2.354 Use of a highly available SAN solution" [1] as follows:

The term "highly available" refers here to a high level of resistance to loss events. Highly available solutions are also commonly referred to as "disaster-tolerant." In relation to the stored data of an institution, this means that a storage system is built up with the help of SAN components in such a way that

- all data is stored at two locations,

- the SAN components of the storage solution are coupled at the two sites but are not interdependent,

- each component is configured redundantly,

- an incident at one location does not impair the functionality of the components at the second location.

On the basis of these BSI criteria, it quickly becomes clear that redundant SAN components and dual fabrics alone are not enough. An HA storage infrastructure spanning two different locations is absolutely essential. Such a solution must be carefully planned and thought through to meet all of the company's requirements. The most effective way to implement such concepts is with a SAN virtualization solution.

Especially when it comes to HA, such solutions score highly compared with conventional storage systems. When implementing such a project, all existing storage systems can continue to be used, because the virtualization slots in between the existing storage infrastructure and the servers. All leading storage manufacturers have products in their portfolios that use similar technical approaches. The requirements for such an HA solution are high, and the solution is correspondingly complex to implement and operate.

HA Structure

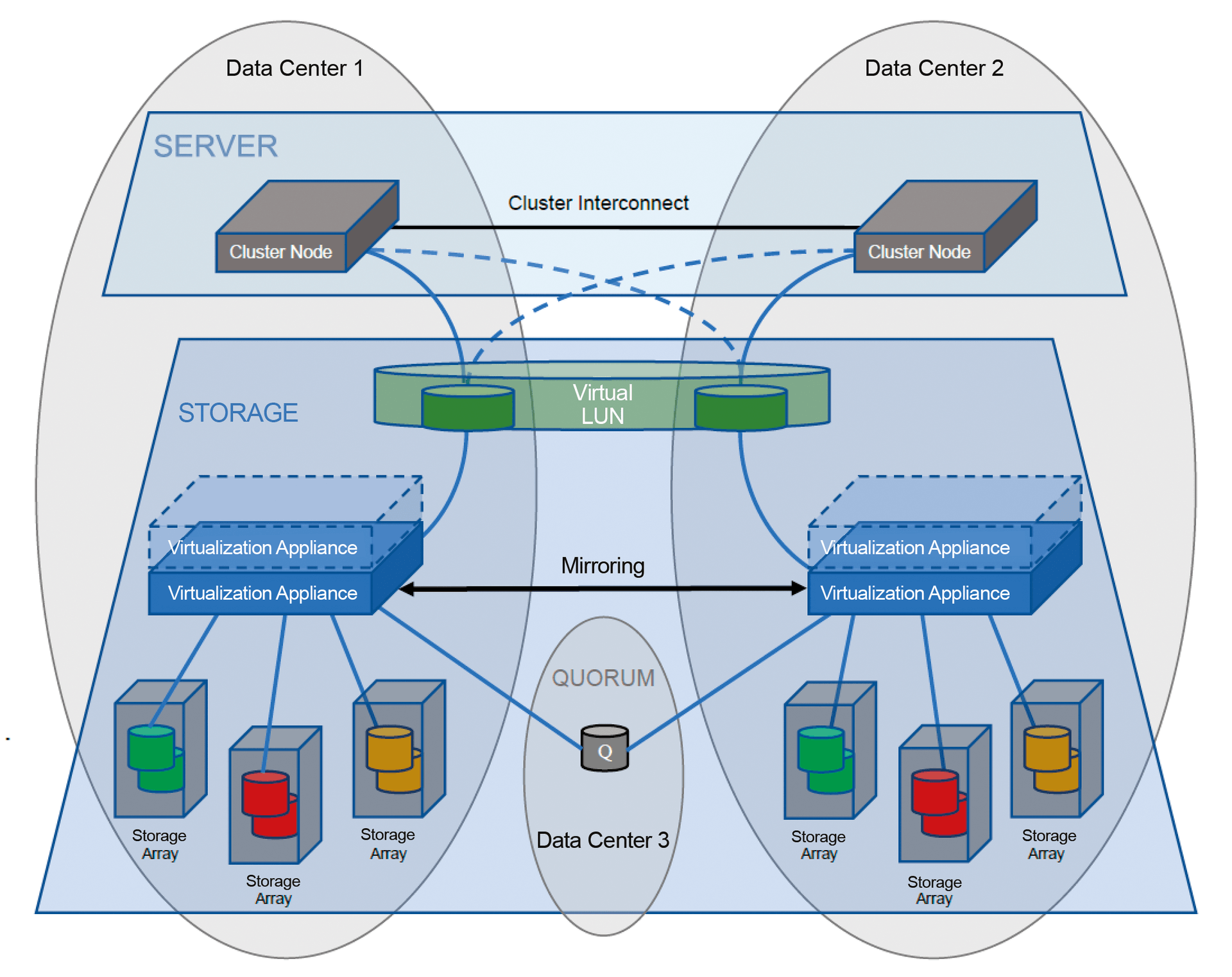

HA storage virtualization typically comprises at least two storage clusters or appliances set up in different fire or smoke zones or at different locations. Both data center locations have storage arrays that present their LUNs via the SAN to the virtualization at their respective location and thus form its back end (Figure 1).

Depending on the solution, the two locations can be up to 300 kilometers apart. Fast Fibre Channel or InfiniBand connections between the storage clusters or appliances at both locations carry the synchronous data mirroring. For server access to the storage virtualization, the SAN also extends across both locations. The connections between the two sites are usually implemented with DWDM or dark fiber and are best laid along two different routes for redundancy. Companies should make sure that these routes have approximately equal lengths to avoid different latencies on the individual paths.
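The distance limit and the advice on equal route lengths come down to signal propagation time. Light in optical fiber travels at roughly 200,000 km/s, or about 5 microseconds per kilometer, so every synchronous write pays for the trip to the remote site and back. The Python sketch below uses that rule of thumb; the `round_trips` parameter is an assumption, because depending on the protocol and features such as write acceleration, a single write may need more than one round trip. Switch, DWDM, and array processing delays are not included.

```python
FIBER_US_PER_KM = 5.0  # rule of thumb: ~200,000 km/s signal speed in glass


def sync_write_penalty_ms(distance_km: float, round_trips: int = 1) -> float:
    """Extra latency per synchronous write from signal propagation alone."""
    return 2 * distance_km * FIBER_US_PER_KM * round_trips / 1000


def route_skew_ms(route_a_km: float, route_b_km: float) -> float:
    """One-way latency difference between two inter-site routes of unequal length."""
    return abs(route_a_km - route_b_km) * FIBER_US_PER_KM / 1000
```

At the maximum of 300 km, for example, a single round trip adds about 3 ms to each write, and a 20 km difference between the two routes already makes one path 0.1 ms slower than the other.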

The SAN, which links the storage virtualization, the SAN storage itself, and the connected servers, also plays a crucial role. In addition to the traffic between the servers and the virtualization, this link also carries the data flowing from the virtualization to the storage. Moreover, data mirroring between the storage servers or appliances at the two locations must run with as little latency as possible. It might make sense to build separate, smaller SANs for the traffic between virtualization and servers (front end) and between virtualization and storage (back end) to isolate the two as far as possible.

Quorum Device as a Referee

To prevent a split-brain situation, in which both storage clusters continue to work independently after a failure without synchronizing their data, many solutions provide one or more quorum devices or file share witnesses as tiebreakers. These quorum devices, connected over Fibre Channel, iSCSI, or an IP network, should preferably be located at a third, remote site. Depending on the transmission technology, the distance between the individual virtualization nodes and the quorum devices is also subject to certain limits.

The quorum device is not needed in normal operation, so its failure or a loss of connection to the virtualization nodes has no effect. In the event of an error, however, the quorum device plays a decisive role, because only the location that has access to it remains active and continues to work. The same applies in any other error situation in which the two virtualization nodes can no longer communicate with each other. Some solutions let you define a primary location that remains online in certain error situations and is given priority when accessing the quorum device.
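The decision logic described above can be summarized in a small model. The Python sketch below is a hypothetical simplification for two sites, not the algorithm of any particular product: a site that still reaches its partner keeps working, and after a break in communication only a site that reaches the quorum device survives. If both sites reach the quorum device, the preferred (primary) site wins; without a preference, the sketch lets the first site listed win, standing in for whichever site claims the quorum device first.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class SiteView:
    """What a site's virtualization node can still reach after a failure (hypothetical)."""
    name: str
    partner_reachable: bool
    quorum_reachable: bool
    preferred: bool = False  # optional "primary location"


def surviving_sites(a: SiteView, b: SiteView) -> Set[str]:
    """Which sites keep serving I/O; the rest suspend I/O to avoid split brain."""
    if a.partner_reachable and b.partner_reachable:
        return {a.name, b.name}   # normal operation: quorum device not consulted
    candidates = [s for s in (a, b) if s.quorum_reachable]
    if not candidates:
        return set()              # nobody reaches the referee: nobody may continue alone
    # Tie-break: the preferred site wins; otherwise the first candidate stands in
    # for whichever site claims the quorum device first.
    winner = max(candidates, key=lambda s: s.preferred)
    return {winner.name}
```

The key property is that at most one site survives whenever the sites cannot talk to each other, which is exactly what rules out split brain.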

Data Consistency Through Mirroring

From the point of view of the connected servers, highly available storage virtualization over two locations, despite its underlying complexity, appears to be just a simple LUN with a large number of logical paths, which the server usually addresses through different host bus adapters and separate SAN fabrics. Because the virtualization nodes at both locations report the same array serial number, device IDs, and other identifiers to the connected hosts via the SAN and the SCSI protocol, the hosts identify and use them as one LUN from one storage array, even though they are two different devices on two different virtualization nodes.
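On the host side, this works because multipathing software groups paths by the device identifier the LUN reports, not by the physical device behind it. The Python sketch below uses a hypothetical path inventory (HBA, target port, and device ID) with an invented identifier; it shows four paths through two HBAs to nodes at two sites collapsing into one logical device.

```python
from collections import defaultdict

# Hypothetical inventory: (HBA, target port on a virtualization node, reported device ID)
paths = [
    ("hba0", "siteA-node1-port1", "vlun-0001"),
    ("hba1", "siteA-node1-port2", "vlun-0001"),
    ("hba0", "siteB-node1-port1", "vlun-0001"),
    ("hba1", "siteB-node1-port2", "vlun-0001"),
]


def group_by_device_id(path_list):
    """Paths that report the same identifier are treated as one multipath device."""
    devices = defaultdict(list)
    for hba, port, device_id in path_list:
        devices[device_id].append((hba, port))
    return dict(devices)
```

If the two sites reported different identifiers, the host would see two separate disks instead of one LUN with four paths.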

Every host write I/O is also transmitted to the virtualization node at the other location before it is acknowledged to the host as complete. Various reservation mechanisms also ensure that no competing access from other hosts takes place on the LUN during this write process. Given low-latency inter-site links, all of this happens very quickly and does not lead to major delays. This ensures that the data at both sites is always identical and consistent.
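The ordering that makes a mirrored write synchronous can be sketched as follows. In this hypothetical Python model, `mirrored_write` stores the block on the local node, then on the remote node, and only then returns an acknowledgment for the host. Reservations and real error handling are omitted; if the remote node is unreachable, the sketch simply raises an exception and the host receives no acknowledgment, whereas real solutions would let the quorum logic decide whether the surviving site continues without a mirror.

```python
class MirrorNode:
    """One virtualization node holding a copy of the virtual LUN (hypothetical model)."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}
        self.reachable = True

    def write(self, lba, data):
        if not self.reachable:
            raise ConnectionError(f"{self.name} is unreachable")
        self.blocks[lba] = data


def mirrored_write(local, remote, lba, data):
    """Acknowledge a host write only after both sites have stored it."""
    local.write(lba, data)
    remote.write(lba, data)  # synchronous: wait for the remote copy before acknowledging
    return "ACK"             # only now is the write reported complete to the host
```

Because the acknowledgment waits for the remote copy, the inter-site latency is added to every write, which is why the link quality matters so much.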

Most of these solutions support an active/active approach, which means that a virtual LUN can be accessed almost simultaneously through the virtualization nodes at both locations. However, active/passive solutions also exist, in which the virtual LUN can be accessed from only one location. In the event of an error, such solutions must switch LUN access in the background and reverse the mirror direction. This switchover takes place within the SCSI timeout and is thus transparent to the server and the applications.
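For the active/passive variant, the switchover consists of two steps: moving LUN access to the surviving site and reversing the mirror direction. The hypothetical Python sketch below models both, plus the condition that keeps the switch transparent to the host: it must finish within the host's SCSI command timeout. The default of 30 seconds used here is an assumption that corresponds to the usual default for SCSI disks on Linux; other operating systems and vendor recommendations use different values.

```python
class ActivePassivePair:
    """Hypothetical active/passive mirror: only the active site serves the virtual LUN."""

    def __init__(self, active: str, passive: str):
        self.active = active
        self.passive = passive

    @property
    def mirror_direction(self) -> str:
        return f"{self.active} -> {self.passive}"

    def fail_over(self) -> None:
        """Move LUN access to the other site and reverse the mirror direction."""
        self.active, self.passive = self.passive, self.active


def switch_is_transparent(switch_seconds: float, scsi_timeout_seconds: float = 30.0) -> bool:
    """The host notices nothing only if the switch finishes before its I/O times out."""
    return switch_seconds < scsi_timeout_seconds
```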

Consistent HA Required

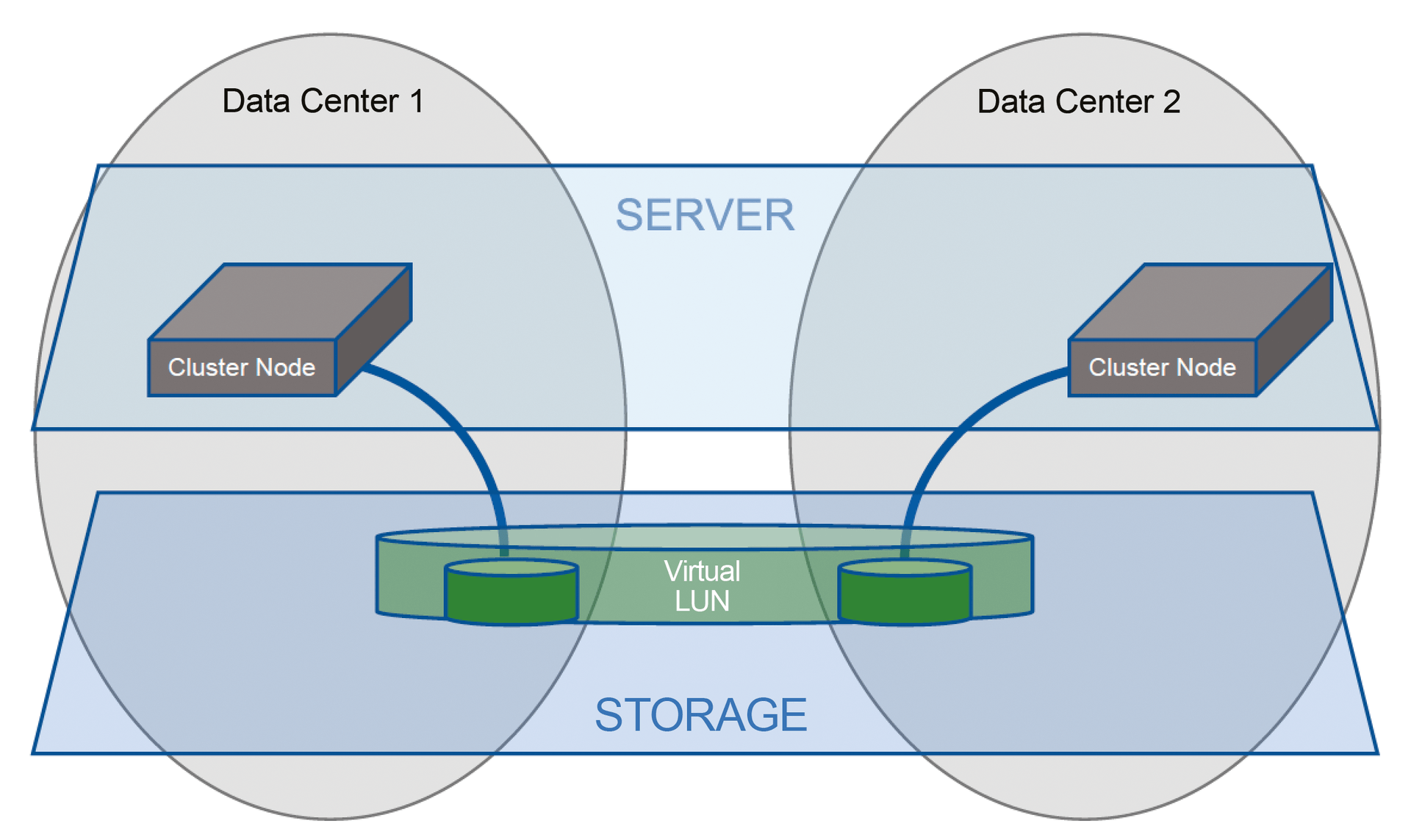

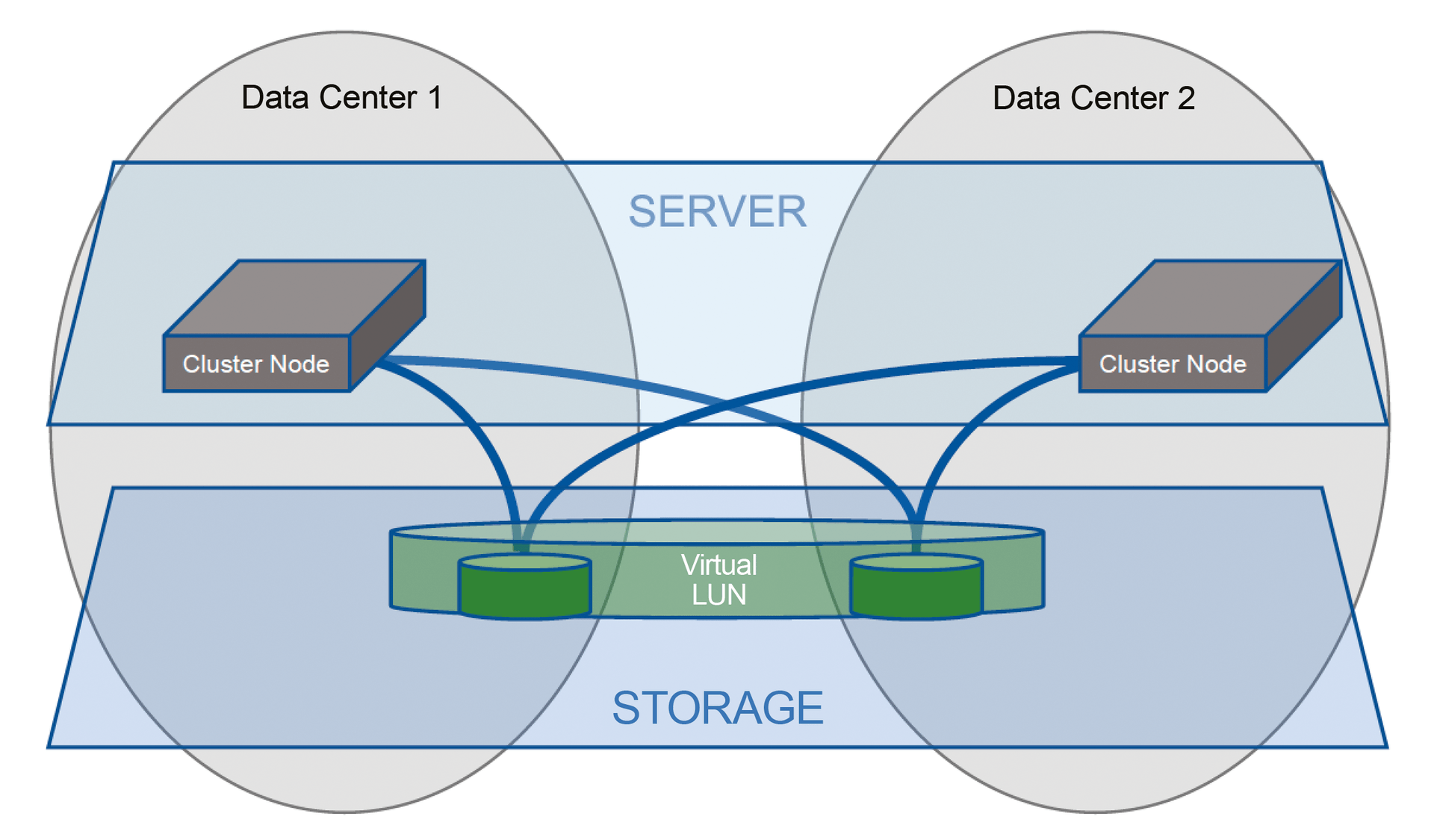

This level of storage availability only makes sense if the server and network landscape is highly available as well. For servers, this is usually implemented as a cluster or MetroCluster configuration. As with storage, the servers and cluster nodes should be distributed across both locations or smoke zones. These hosts can be connected to the HA storage over the SAN in two ways, each with its own consequences: a local or a cross-link disk path configuration.

In the local configuration, each cluster node is presented only with the LUNs at its own location (Figure 2). If a cluster node loses access to its LUNs, the applications must inevitably fail over to the other node, which has access to the mirrored LUNs at the other location.

In the cross-link configuration, the LUNs from the other location are also presented to all cluster nodes (Figure 3). If the local LUNs are lost, the node can access the LUNs at the other location without interruption through the SAN and the long-haul line and continue to work without a cluster failover. Apart from the higher latency in the event of an error, the disadvantage of this configuration is that the hosts must be configured so that in normal operation they use only the paths to the "local LUN"; otherwise, they would permanently write to the LUNs at the other location with high latency.

Asymmetric Logical Unit Access (ALUA), a standardized function of the SCSI-3 protocol that most operating systems support, makes this possible in a very simple way. However, the storage solution must also provide the appropriate ALUA information to the server. If it does, the server learns which paths are optimized and then sends its I/O only over these optimized paths. The non-optimized paths are kept in reserve and used only in case of an error. If the storage product does not support ALUA, the only options are to configure the preferred paths manually on the server or to use manufacturer-specific drivers or path failover software, which storage vendors provide for the common operating systems.

However, installing such special drivers usually requires a server reboot, and if the operating systems are patched regularly, the drivers also need ongoing version maintenance and compatibility checks.
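The path selection that ALUA enables can be illustrated with a short Python sketch. The four states used here are among the ALUA access states defined in the SCSI standard (active/optimized, active/non-optimized, standby, unavailable); the selection function itself is a hypothetical simplification of what a host multipathing driver does. In a cross-link configuration, the paths to the local site would be reported as active/optimized and those to the remote site as active/non-optimized.

```python
from enum import Enum
from typing import Dict, List, Set


class AluaState(Enum):
    ACTIVE_OPTIMIZED = "active/optimized"
    ACTIVE_NON_OPTIMIZED = "active/non-optimized"
    STANDBY = "standby"
    UNAVAILABLE = "unavailable"


def select_io_paths(paths: Dict[str, AluaState], healthy: Set[str]) -> List[str]:
    """Use healthy optimized paths; fall back to non-optimized ones only if none are left."""
    usable = {name: state for name, state in paths.items() if name in healthy}
    for wanted in (AluaState.ACTIVE_OPTIMIZED, AluaState.ACTIVE_NON_OPTIMIZED):
        chosen = sorted(name for name, state in usable.items() if state is wanted)
        if chosen:
            return chosen
    return []  # no usable path: I/O is queued or fails, depending on host settings


# Cross-link configuration seen from a host at site A (hypothetical path names)
paths = {
    "siteA-path1": AluaState.ACTIVE_OPTIMIZED,
    "siteA-path2": AluaState.ACTIVE_OPTIMIZED,
    "siteB-path1": AluaState.ACTIVE_NON_OPTIMIZED,
    "siteB-path2": AluaState.ACTIVE_NON_OPTIMIZED,
}
```

As long as a local path is healthy, all I/O stays at site A; only when both local paths fail does the host switch to the long-haul paths to site B.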

It is very important that the host bus adapter and path failover software settings are made exactly as specified by the storage virtualization manufacturer and that the redundant LUN paths on the servers are checked regularly; both are prerequisites for uninterrupted operation of the applications. Even if certain LUN paths are not normally in active use, they must still be available in an error situation. It would be fatal if, in the event of a site failure, the failover mechanisms did not work because of incorrectly set parameters or missing redundant LUN paths on the server, despite an expensive, highly available storage solution being in place.
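Part of such a regular check can be automated. The Python sketch below assumes that path information has already been collected from the servers into a simple, hypothetical `Path` structure (HBA, site, health); how that data is gathered depends on the operating system and multipathing software and is not shown. It flags LUNs with fewer healthy paths than expected or whose healthy paths no longer span two HBAs and two sites. The expected count of four paths is an assumption based on two HBAs and a cross-link configuration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Path:
    hba: str
    site: str
    healthy: bool


def redundancy_warnings(luns: Dict[str, List[Path]], expected_paths: int = 4) -> List[str]:
    """Flag LUNs whose healthy paths are too few or no longer span two HBAs and two sites."""
    warnings = []
    for lun, lun_paths in sorted(luns.items()):
        ok = [p for p in lun_paths if p.healthy]
        if len(ok) < expected_paths:
            warnings.append(f"{lun}: only {len(ok)} of {expected_paths} paths healthy")
        if len({p.hba for p in ok}) < 2:
            warnings.append(f"{lun}: healthy paths use fewer than two HBAs")
        if len({p.site for p in ok}) < 2:
            warnings.append(f"{lun}: healthy paths reach fewer than two sites")
    return warnings
```

A LUN that still works but has silently lost its paths to one site would pass a simple "is the disk online" check, yet be flagged here.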

Practicing Failure

Once a virtualization solution is implemented and in operation, you still need to be prepared for failures. It is important that the operating personnel are familiar with handling such a complex solution, even in an error situation. An operating manual and a contingency manual have often proven their worth in such environments. The operating manual explains the basic functionality of the virtualization solution and describes daily tasks in detail.

The contingency manual is used in emergency situations. In addition to the most important emergency telephone numbers, it should also describe in detail all possible error states and recovery procedures.

An emergency always comes unexpectedly and often causes panic and stress. In such a situation, an effective contingency manual is often worth its weight in gold. It also makes sense to simulate possible error states regularly in disaster recovery tests, to work through the recovery procedures, and to verify that they are correct. After all, a storage solution changes constantly because of new software versions and functions, and the server landscape and SAN infrastructure are also subject to regular update cycles, which can significantly change the failure behavior.

Watch for Pitfalls

Despite the many advantages, highly available storage virtualization also has some potential downsides. Once such a solution is established, replacing it with a solution from another vendor or abandoning virtualization altogether is very time-consuming. If a company also uses the solution to virtualize third-party storage from other manufacturers, the manufacturers may pass the buck to each other in the event of a failure, so that the actual problem fades into the background and is solved only hesitantly.

Conclusions

Storage virtualization is already a de facto standard today. For important data, the use of an HA storage environment can make a decisive contribution to uninterrupted operation in the event of the failure of individual components – or an entire data center – or to meeting legal requirements in the area of data storage. However, the maintenance of this storage environment and familiarity with handling it are essential if a company wants to be prepared for an emergency.