Supporting WebRTC in the enterprise

Nexus

Although it caused huge excitement at its announcement by Google in 2011, web real-time communication (WebRTC) has somewhat faded from the general tech consciousness. Maybe it was oversold as a done deal back at the time, when it should have been presented as the start of a long and painful journey toward inter-browser real-time communications nirvana. Although the froth has disappeared off the top, the high-quality microbrew continues to ferment, and WebRTC maintains a steady upward trajectory in terms of development, progress toward standardization, and adoption, with the goal of making rich interpersonal communications easier and more prevalent than ever before. In this article, I'll explore some of the underpinnings of WebRTC, its popular use cases, and how to solve common user problems – many of which are the same as the common problems that arise in any form of real-time unified communications – so you can help your users reap the benefits that modern WebRTC-enabled browser apps have to offer.

WebRTC

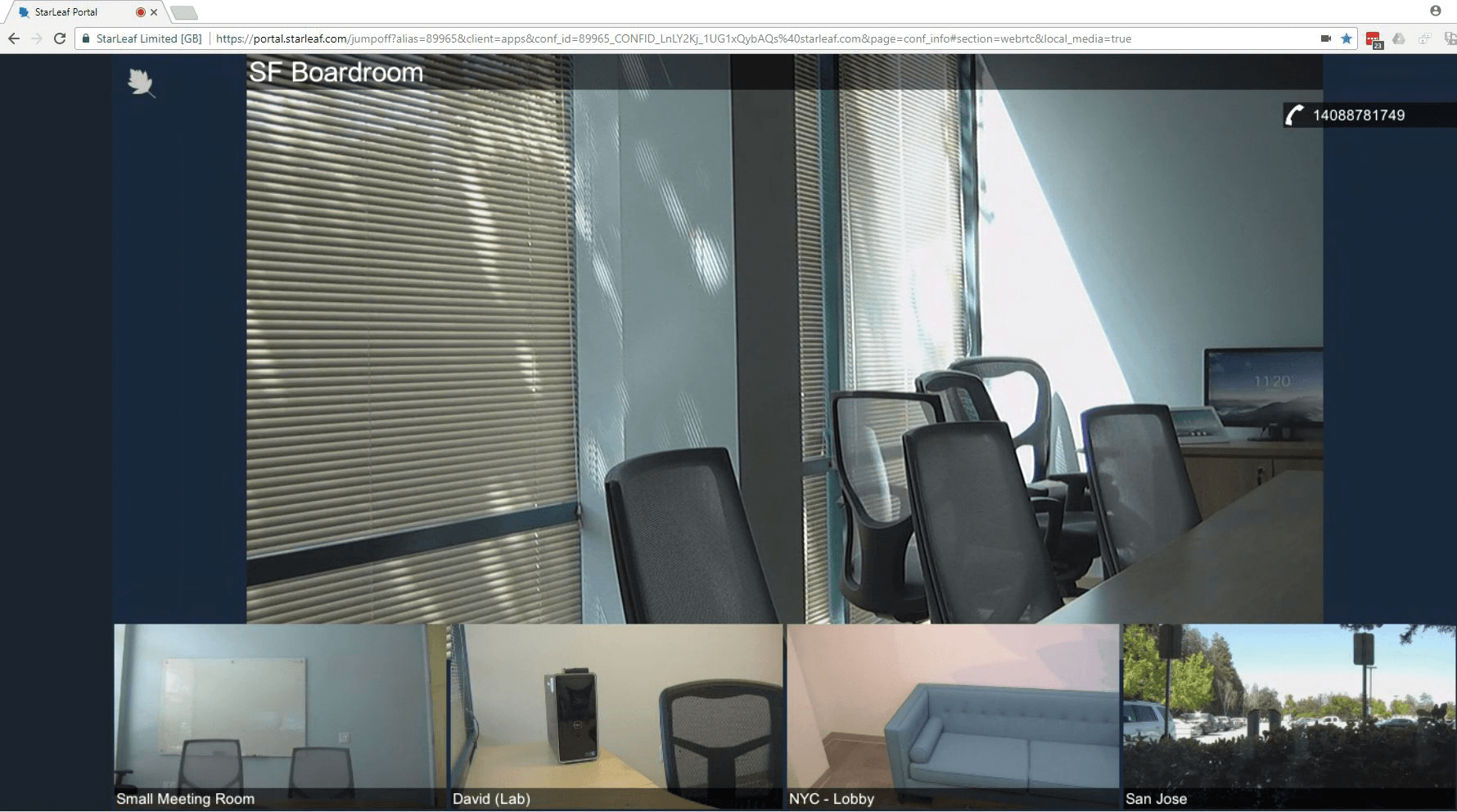

WebRTC-compliant browsers can access media devices directly on their host system and then exchange and render the resulting media tracks (audio, camera video, desktop content, and arbitrary data) directly with other WebRTC endpoints, which are normally browsers but can also be conferencing services, native apps, or gateways to other communications networks, such as a public switched telephone network (PSTN). WebRTC places much emphasis on peer-to-peer communication, wherein a browser exchanges media directly with one or many other browsers. However, an equally common scenario, especially in enterprise-grade WebRTC apps, is a client-server relationship, in which all media is routed through a central hub.

Although the client-server approach comes at the expense of optimal routing of media over the network (typically the Internet), it allows the application to provide many other benefits that enterprise applications require, including many more participants in a web conference, recording, interoperability with other services, and much tighter control over network address translation (NAT) traversal by implementing Traversal Using Relays around NAT (TURN) server functionality. Figure 1 shows a typical example of such a conference, combining WebRTC participants, hard video conference systems, and telephone callers.

WebRTC is made up of separate IETF and W3C standards, currently in Candidate Recommendation status, with each covering a different aspect of setting up a peer-to-peer connection. The essential parts include the following.

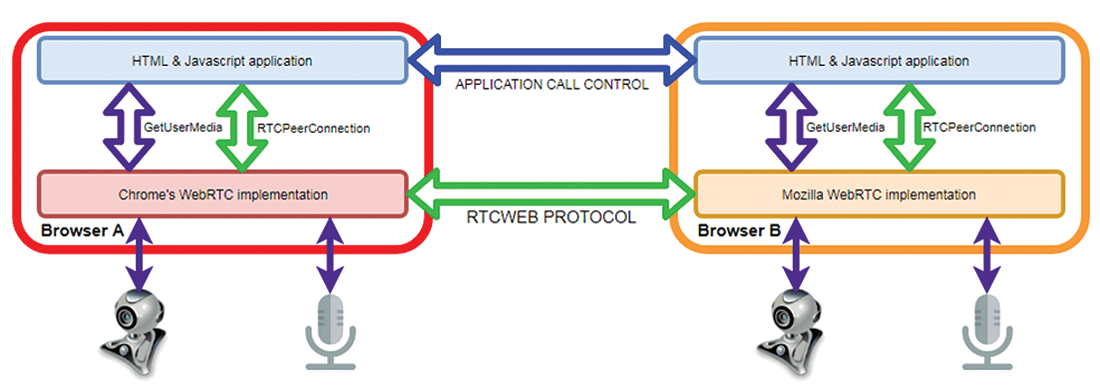

- MediaStream (aka getUserMedia) is "… a set of JavaScript APIs that allow local media, including audio and video, to be requested from a platform" [1]. This allows a JavaScript app to enumerate, access, and control the available cameras, speakers, and microphones on a device. MediaStream allows specific limits to be placed on the resolution of the video streams (camera and screen-share) that will be returned, which will have a significant effect on the bit rate of the media streams that are transmitted after the connection is established. getUserMedia is a W3C Candidate Recommendation by W3C's Media Capture Task Force and is a part of HTML5.

- WebRTC 1.0 (aka RTCPeerConnection) is the API used to control the browser's WebRTC peer-to-peer connections. This is what a JavaScript app uses to initiate and conduct a real-time call containing video, audio, and screen-sharing streams [2]. RTCDataChannel is the equivalent API for sending arbitrary data streams between browser peers, with file sharing being a typical example.

- Real-time communication in web browsers (RTCWEB) is not an API, but the protocol that two WebRTC endpoints use to set up a call with each other – when instructed to do so by the WebRTC API. It covers signaling, NAT traversal, media capability negotiation, bandwidth and rate control, and security [3].

- JavaScript Session Establishment Protocol (JSEP) is a subset of RTCPeerConnection and is used for exchanging the offer/answer Session Description Protocol (SDP) and Interactive Connectivity Establishment (ICE) protocol of RTCWEB between the browser's WebRTC implementation and the JavaScript app. This approach is necessary because RTCWEB relies on the application's signaling channel (whose protocol and transport type are not specified by any part of WebRTC) to carry SDP/ICE data from one browser to another [3]. SDP is used for negotiating the video and audio codecs that the browsers will use, and ICE is for determining to which IP address/port combination each browser will send its media flows. The JavaScript app uses JSEP to "ask" WebRTC for the information when needed (and then sends it to the far end through the signaling channel), and vice versa, to "give" it to WebRTC when it is received from the far side.
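To make the "ask" and "give" halves of JSEP more concrete, the following TypeScript sketch walks through a single offer/answer exchange. It is an illustration under stated assumptions, not a reference implementation: the PeerLike interface mirrors only the few RTCPeerConnection methods used here, and the send callback is a hypothetical stand-in for whatever signaling channel the app provides.

```typescript
// Minimal structural types so the sketch does not depend on browser typings.
interface SessionDescriptionLike {
  type: "offer" | "answer";
  sdp: string;
}

// The subset of RTCPeerConnection methods used for a JSEP offer/answer exchange.
interface PeerLike {
  createOffer(): Promise<SessionDescriptionLike>;
  createAnswer(): Promise<SessionDescriptionLike>;
  setLocalDescription(desc: SessionDescriptionLike): Promise<void>;
  setRemoteDescription(desc: SessionDescriptionLike): Promise<void>;
}

// Hypothetical signaling hook: the app decides how a description travels.
type SendDescription = (desc: SessionDescriptionLike) => void;

// Caller side: "ask" WebRTC for an offer, then pass it to the signaling channel.
async function startCall(pc: PeerLike, send: SendDescription): Promise<void> {
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);
  send(offer);
}

// Either side: "give" WebRTC a description that arrived from the far end.
// An incoming offer is answered; an incoming answer completes the exchange.
async function handleRemoteDescription(
  pc: PeerLike,
  remote: SessionDescriptionLike,
  send: SendDescription
): Promise<void> {
  await pc.setRemoteDescription(remote);
  if (remote.type === "offer") {
    const answer = await pc.createAnswer();
    await pc.setLocalDescription(answer);
    send(answer);
  }
}
```

In a browser, the pc argument would be backed by a real RTCPeerConnection, and ICE candidates would travel over the same signaling channel in a similar ask-and-give fashion.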

WebRTC does not specify a high-level call control protocol, nor the type of network transport it should use – that part is left up to the application. An implementer of a WebRTC app that wants to interoperate with other WebRTC apps might base their signaling on an existing standard like the Session Initiation Protocol (SIP) or XMPP/Jingle (and, in fact, the SDP information that RTCWEB generates is designed to be interoperable directly with "normal" SIP calls). More commonly, given that interoperation between different WebRTC solutions is rare, WebRTC apps will simply implement their own signaling methods. Figure 2 shows how the getUserMedia application, WebRTC, and RTCWEB interoperate within and between browser peers.

What WebRTC Can Do

By their very nature, WebRTC applications are delivered in the form of web pages and JavaScript programs, which means your users are most likely accessing them and using them of their own accord without you knowing about it. Popular applications based on WebRTC include Google Hangouts, Facebook Messenger, Amazon Chime, Appear.in, and GoToMeeting's browser implementation [4]. Google recorded a 45% year-on-year increase in the use of Chrome's WebRTC features in 2017, at a run rate of 1.5 billion minutes in a week. This number is huge but still minuscule compared with the 3 billion minutes of Skype calls that are made each day!

WebRTC is the only (currently) viable inter-browser real-time communication solution; it is easy to use in development and offers free access to secure, high-quality media codecs. One consequence of the relative ease of implementing a WebRTC solution is the appearance of many more applications that are "unified communications (UC) enabled"; that is, their fundamental purpose is not general communications, but to provide a specialized app for some other purpose, such as telemedicine. As these apps increase in number and quality, it's highly likely that usage will increase toward Skype-like levels. IT departments will have to adapt to meet user demand, rather than try to manage the scale of usage.

Barriers to Successful Usage

The frustration and wasted time caused by failed video calls mean that users have very limited patience with new apps, and the nuances of firewalls and network bandwidth are (rightly) irrelevant to anyone who's simply trying to get a day's work done. No app exists in isolation – its success is hugely dependent on its host device, operating system, and network. I will point out some of the common stumbling blocks to successful use of a WebRTC app and suggest some approaches that might help you identify and solve them. I'll present these in five categories:

1. Device availability – enumeration and permission for cameras, microphones, and speakers.

2. Browser capabilities – support for the WebRTC APIs and RTCWEB, with or without plugins.

3. Signaling channel – connection to the signaling server for application call control and SDP/ICE exchange.

4. Discovery – NAT traversal possibilities: direct, session traversal utilities for NAT (STUN), and TURN.

5. Network bandwidth – media flowing from endpoint to endpoint; bandwidth availability and rate control.

Many of the troubleshooting considerations are generic to any application that requires users to communicate using their PCs.

Device Availability

This category is as much about human factors as it is about technology and causes a significant number of real-time communication attempts to fail. You might assume that everyone would realize that a functioning webcam, microphone (unmuted), and audio output device (volume turned up) are essential to any successful video calling experience. You would be wrong. Users need to be made aware of the need to prepare properly for live video calls, just as they would for an in-person meeting, especially when they are planning to connect to a scheduled meeting with many other participants, where five minutes spent untangling headphone cords compounded by the number of other connections turns into a serious waste of valuable person-hours. Well-designed apps facilitate this process by leveraging the getUserMedia device enumeration capabilities to allow the user to select and test their microphone, speakers, and camera easily as part of the connection process.
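As a rough illustration of the pre-call device check described above, the sketch below groups an enumerated device list by kind and reports which kinds are missing. The DeviceEntry shape follows the kind, label, and deviceId fields returned by navigator.mediaDevices.enumerateDevices(); the function names are hypothetical, and in a real app the input would come from that browser call rather than a hand-built array.

```typescript
// Shape of the entries returned by navigator.mediaDevices.enumerateDevices().
interface DeviceEntry {
  kind: "audioinput" | "audiooutput" | "videoinput";
  label: string;
  deviceId: string;
}

type DeviceKind = DeviceEntry["kind"];

// Group enumerated devices by kind so a pre-call screen can offer a choice.
function groupDevices(devices: DeviceEntry[]): Record<DeviceKind, DeviceEntry[]> {
  const groups: Record<DeviceKind, DeviceEntry[]> = {
    audioinput: [],
    audiooutput: [],
    videoinput: [],
  };
  for (const device of devices) {
    groups[device.kind].push(device);
  }
  return groups;
}

// Report which device kinds are missing entirely, so the app can warn early.
function missingDeviceKinds(devices: DeviceEntry[]): DeviceKind[] {
  const groups = groupDevices(devices);
  const kinds: DeviceKind[] = ["audioinput", "audiooutput", "videoinput"];
  return kinds.filter((kind) => groups[kind].length === 0);
}
```

Two caveats apply when interpreting the result: device labels are typically empty until the user has granted media permission, and some browsers do not list audio output devices at all, so an empty audiooutput group is not necessarily a fault.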

Browser Capabilities and WebRTC Support

WebRTC is still only a Candidate Recommendation, and even when it is a standard, a browser will not need to implement the full range of possible features natively to be compliant. For example, screen-sharing capability is an optional part of the standard. The major browsers all differ in the range of WebRTC features that they support and the manner in which they do so. The "Is WebRTC ready yet?" website [5] gives an at-a-glance view of the current state of support across all major browsers. It also has a more detailed legacy view showing the state of various components within the different browsers (Figure 3). Although this particular view is out of date in one significant area (it doesn't reflect the fact that Safari 11 does have native support for WebRTC), it gives a good idea of what the different components all are and the quality of support in the different browsers.

![Browser compatibility results example [5], not updated for Safari 11.](images/Figure_3.png)

You will notice that Internet Explorer is completely absent from the table. WebRTC apps can be made to work in Internet Explorer by use of a plugin that implements a JavaScript polyfill. A couple of such plugins are available, the most common being the one from Temasys. The app includes Temasys's adapter.js script that prompts the user to download the plugin if the WebRTC APIs are not already present in the browser (Figure 4).

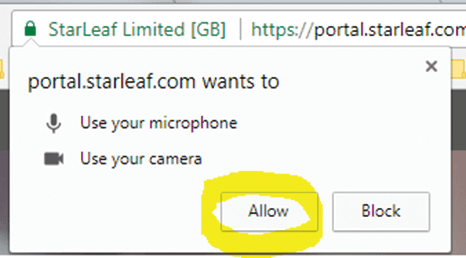

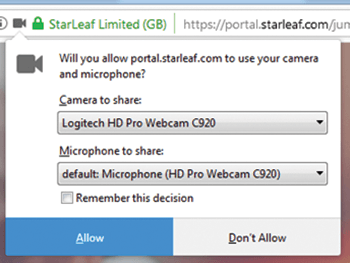

All browsers require you to grant permission for camera and microphone access. The first time you attempt to access a WebRTC-enabled application, the browser will seek permission by means of a pop-up, which is the most obvious indication that the app is, in fact, a WebRTC app. Connection attempts commonly fail simply because many users are conditioned to ignore or cancel any sort of pop-up; if the website is not secured by a valid HTTPS certificate, Chrome will not even display the permissions dialog. Users should be aware that they do need to agree to pop-ups like those shown in Figures 5 and 6. The Firefox version allows you to select the specific camera and microphone, where a choice exists, so you should make sure to choose devices that actually work, as opposed to, say, the camera inside the closed lid of a docked laptop. I have personally witnessed the IT director for a global manufacturer abandon a WebRTC-based conference for this very reason.
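When a permission prompt is dismissed or no usable device exists, the promise returned by getUserMedia() rejects with a named error. The sketch below maps some of the error names defined in the W3C Media Capture and Streams specification to plain-language hints. The hint wording and the function name are illustrative assumptions, and older browser versions may report different names.

```typescript
// Translate the `name` of a rejected getUserMedia() call into a support hint.
function explainMediaError(errorName: string): string {
  switch (errorName) {
    case "NotAllowedError":
      return "Access was denied or the prompt was dismissed; allow camera and microphone use for this site.";
    case "NotFoundError":
      return "No matching camera or microphone was found; check that a device is connected.";
    case "NotReadableError":
      return "The device exists but could not be opened; another application may be using it.";
    case "OverconstrainedError":
      return "No device satisfies the requested constraints; relax the resolution or device selection.";
    default:
      return "Unexpected media error: " + errorName;
  }
}
```

An app could call this from the rejection handler of getUserMedia() and show the hint next to its device selection controls.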

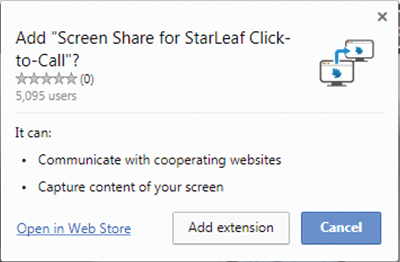

Chrome requires you to install an extension for screen sharing (Firefox allows it natively), more for reasons of security than technology, because the extension can be implemented in about 10 lines of JavaScript. The extension must be packaged for a specific website, whose name is explicitly listed in the extension's manifest file.

The upshot of this is that users are best off using Chrome or Firefox for their WebRTC requirements, especially if they are required to share their screens (or application windows or browser tabs) during their call, and should be made aware that they need to allow all access/installation requests related to their session (Figure 7).

Setting Up a Signaling Channel

After access to suitable devices has been obtained, the call needs to be established, and creating the signaling channel is the first step. It must provide a path for full-duplex communication between both endpoints, but because no direct network connection yet exists between the two (ICE negotiation has not happened yet), it must be routed via an intermediary server. This server will often be the same server that is managing and delivering the app itself, and routing the signaling that way provides the additional benefit of that server being able to exert additional control over the call setup process. Plain HTTP request/response is a client-server exchange, not a full-duplex communications channel, so on its own it is poorly suited to peer-to-peer signaling. WebSockets (wss://) is a common choice that uses well-known TCP ports, but the channel could equally be a socket.io connection using an arbitrary port.

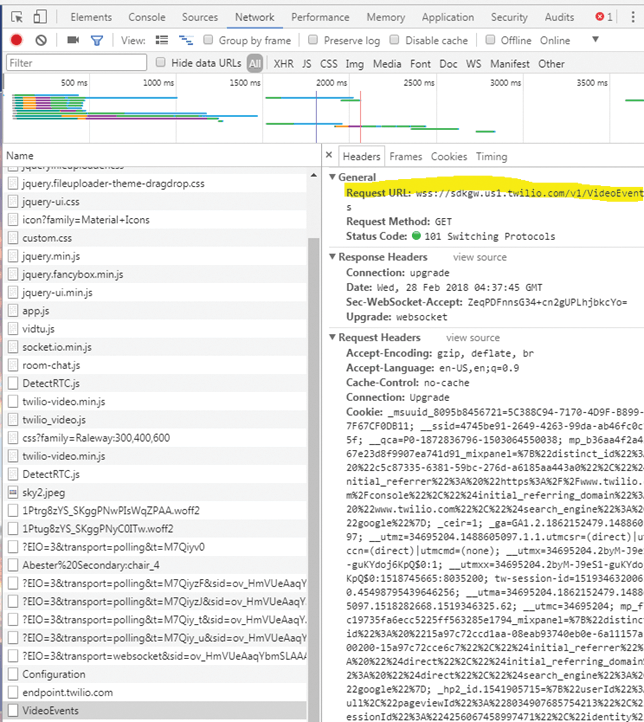

If users are experiencing problems at this stage, you can make use of the Network tab in their browser's developer tools section to see exactly what IP address and port their browser is trying to connect to for signaling (Figure 8) and open up the firewall accordingly. The WebSocket Secure (WSS) default port is 443, so this should work in most cases. Web proxies should support the HTTP CONNECT method on port 443. Any proxy that inspects secure content will rely on a self-signed certificate to re-encrypt the content, and WSS signaling channels fail to connect if the browser can't establish a root certificate authority path for these certificates.
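When only the signaling URL is known (from the app's configuration or the developer tools), the host and TCP port that the firewall must allow can be derived as in the following sketch. The function name and the example URLs in the usage note are hypothetical; the default ports are 443 for wss and 80 for ws.

```typescript
interface SignalingEndpoint {
  host: string;
  port: number;
  secure: boolean;
}

// Work out which host and TCP port a WebSocket signaling URL will connect to.
function signalingEndpoint(url: string): SignalingEndpoint {
  const parsed = new URL(url);
  if (parsed.protocol !== "ws:" && parsed.protocol !== "wss:") {
    throw new Error("Not a WebSocket URL: " + url);
  }
  const secure = parsed.protocol === "wss:";
  // An empty port string means the scheme default: 443 for wss, 80 for ws.
  const port = parsed.port !== "" ? Number(parsed.port) : secure ? 443 : 80;
  return { host: parsed.hostname, port, secure };
}
```

For example, signalingEndpoint("wss://signal.example.com/room") points at TCP port 443 on signal.example.com, whereas a URL with an explicit port such as ws://10.0.0.5:8080/socket would need its own firewall rule.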

With the signaling channel established, the high-level call control traffic (the equivalent of SIP's INVITE, TRYING, RINGING, OK) can be exchanged by the JavaScript apps on both sides, followed by the SDP and ICE content that the app gets from WebRTC using the JSEP-related parts of RTCPeerConnection.

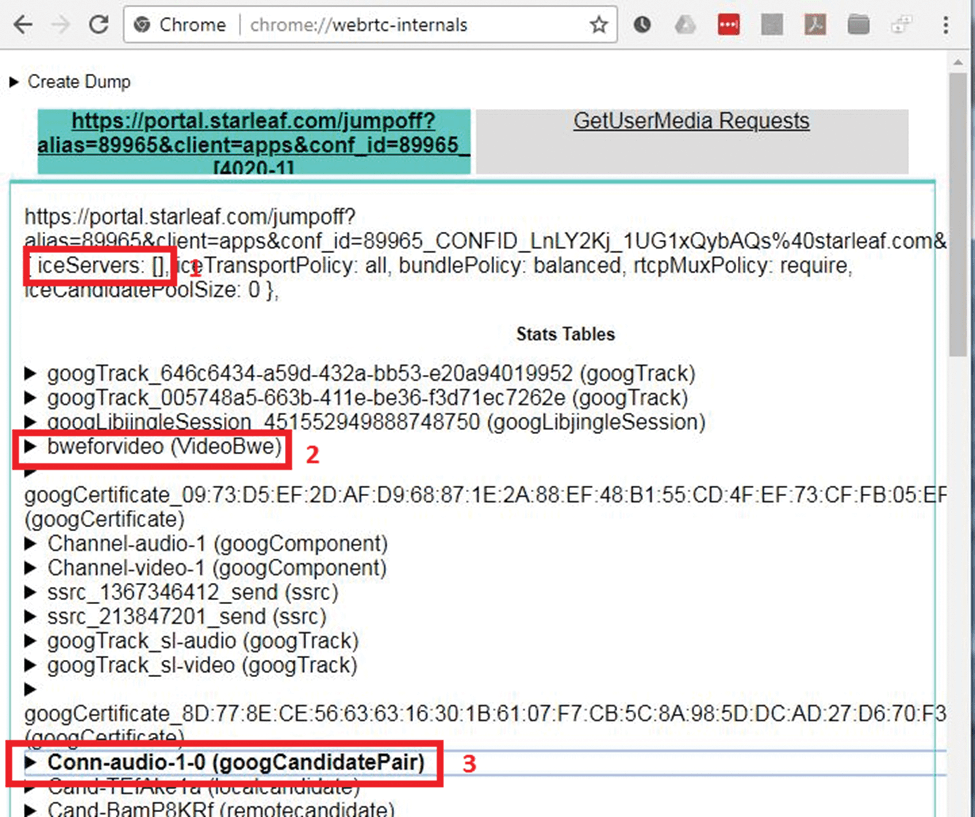

At this juncture, I will introduce Chrome's webrtc-internals page, a comprehensive WebRTC troubleshooting tool built right into the Chrome browser (which I hope you have realized by now is the go-to browser for the serious WebRTC practitioner). Opening a tab to chrome://webrtc-internals shows a real-time overview of all the WebRTC activity for that entire browser instance. If all you see is Create Dump, there is no activity. If you see WebRTC activity, a GetUserMedia Requests tab shows you all the active device access requests. For each current RTCPeerConnection, you'll see another tab that gives access to a bewildering array of information, most of which, fortunately, is not necessary for troubleshooting at the level of this article. Figure 9 shows an example of the webrtc-internals page from Chrome 64, with three points of interest highlighted: (1) parameters supplied by the app to RTCPeerConnection, which might include (although not in this example) the specific TURN servers to use; (2) a section that summarizes the results of the bandwidth estimation (BWE) process used by RTCWEB to estimate how much network bandwidth is available; and (3) detailed information about ICE candidate pairs that shows the IP addresses and ports being used for the media channels.

Discovery

A key piece of information that needs to be established during the signaling process is the IP address and port number on which the endpoint can receive a media stream from the remote endpoint (the media return address). This process as a whole is ICE, and discovery is the first part, in which each endpoint determines a list of IP addresses and port numbers (ICE candidates) that the remote endpoint might be able to use to send media back. In an ideal world, an ICE candidate would simply be the host's own private IP address and a free port of its own choosing, and in the simple scenario of two endpoints communicating across the same LAN, that is indeed what would be used. The chance that this will work is small but real, so WebRTC will include all of the device's IP addresses as ICE candidates. However, it is more likely that each client is behind a different NAT, and the remote endpoint will not be able to route media packets back to the local endpoint's private IP address.

The local endpoint needs a way to figure out what public IP address/port number it ought to give the remote endpoint. The correct answer to this problem depends, among other things, on whether the local Internet connection uses domestic-grade cone NAT (in which a single public IP address/port pair allows packets from any source to reach a specific inside host) or commercial-grade symmetric NAT (in which access to an internal host via a given IP address/port combination is restricted to the single public host to which the internal host initiated communication beforehand). This gets complicated, because an endpoint – especially a browser on an end-user's device – does not know the topology that exists between it and the public Internet. This problem is by no means unique to WebRTC and was solved a long time ago in the SIP/VoIP world by means of STUN and TURN servers. RTCPeerConnection takes full advantage of this same technology. See the "STUN and TURN" box for information on how it works.
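Each ICE candidate is exchanged as a line of text whose typ field states how the address was obtained: host for a local interface address, srflx for a public address discovered through STUN, and relay for an address allocated on a TURN server. The sketch below pulls the useful fields out of such a line. It handles only the common layout and is not a complete parser; the sample lines in the usage note use documentation addresses.

```typescript
type CandidateType = "host" | "srflx" | "prflx" | "relay";

interface ParsedCandidate {
  transport: string;
  address: string;
  port: number;
  type: CandidateType;
}

// Extract transport, address, port, and type from an ICE candidate line.
// Returns null when the line does not look like a candidate.
function parseIceCandidate(line: string): ParsedCandidate | null {
  // Accept both the SDP attribute form ("a=candidate:...") and the bare form.
  const body = line.replace(/^a=/, "");
  const match =
    /^candidate:\S+ \d+ (\S+) \d+ (\S+) (\d+) typ (host|srflx|prflx|relay)\b/.exec(body);
  if (match === null) {
    return null;
  }
  return {
    transport: match[1].toLowerCase(),
    address: match[2],
    port: Number(match[3]),
    type: match[4] as CandidateType,
  };
}

// Describe where a candidate's address came from, for troubleshooting notes.
function describeCandidate(candidate: ParsedCandidate): string {
  switch (candidate.type) {
    case "host":
      return "local interface address";
    case "srflx":
      return "public address discovered through STUN";
    case "prflx":
      return "address learned from the peer during connectivity checks";
    case "relay":
      return "address allocated on a TURN relay";
  }
}
```

Fed a line such as "candidate:842163049 1 udp 1677729535 203.0.113.7 46154 typ srflx raddr 192.168.1.10 rport 46154", the parser reports a server-reflexive UDP candidate at 203.0.113.7 port 46154. A session that produces only host candidates suggests that the STUN and TURN requests are not getting through.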

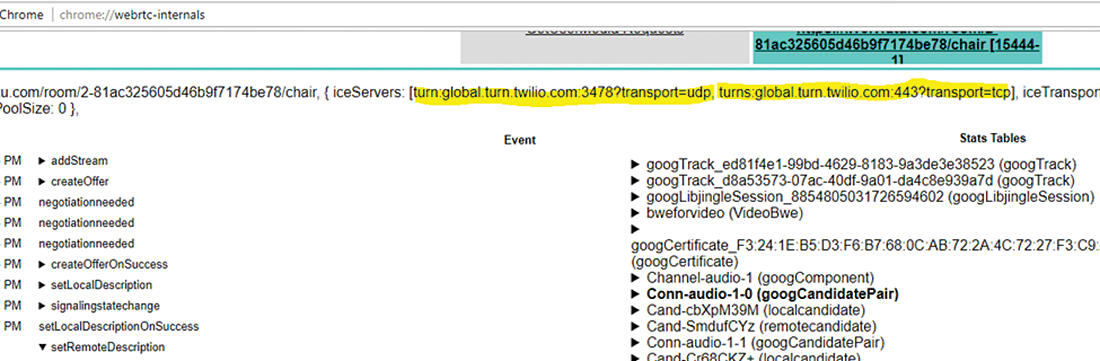

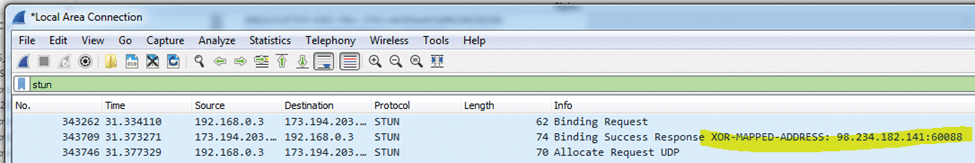

The discovery of reflexive and relay candidates yields potential points of failure because it relies on connections to external services on not-that-well-known ports. Allowing access to the standard STUN/TURN port 3478 (and its TLS equivalent 5349) will usually solve this problem. Some services (e.g., Google Hangouts) use other ports. The application server might tell RTCPeerConnection which STUN/TURN service(s) to connect to during the signaling process (Figure 12). Although the default transport for STUN requests is UDP, as with SIP, the request URL allows for a different transport to be specified. In Wireshark, the display filter stun reveals the STUN traffic (Figure 13).
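To translate a list of STUN/TURN server URLs into firewall requirements, a helper along the following lines can be used. It is a simplified sketch: it assumes the stun:, stuns:, turn:, and turns: URL forms with an optional port and an optional ?transport= parameter, applies the default ports 3478 and 5349 mentioned above, assumes UDP for the plain schemes and TCP for the TLS schemes when no transport is given, and does not handle bracketed IPv6 literals. The hostnames in the usage note are placeholders.

```typescript
interface IceServerEndpoint {
  scheme: "stun" | "stuns" | "turn" | "turns";
  host: string;
  port: number;
  transport: "udp" | "tcp";
}

// Derive host, port, and transport from a STUN/TURN server URL.
function parseIceServerUrl(url: string): IceServerEndpoint {
  const match =
    /^(stuns?|turns?):([^:?]+)(?::(\d+))?(?:\?transport=(udp|tcp))?$/.exec(url);
  if (match === null) {
    throw new Error("Unrecognized STUN/TURN URL: " + url);
  }
  const scheme = match[1] as IceServerEndpoint["scheme"];
  const tls = scheme === "stuns" || scheme === "turns";
  // No explicit port: fall back to 3478, or 5349 for the TLS variants.
  const port = match[3] !== undefined ? Number(match[3]) : tls ? 5349 : 3478;
  // No explicit transport: assume UDP, or TCP for the TLS variants.
  const transport =
    match[4] !== undefined ? (match[4] as "udp" | "tcp") : tls ? "tcp" : "udp";
  return { scheme, host: match[2], port, transport };
}
```

For instance, "stun:stun.example.org" resolves to UDP port 3478, whereas "turn:turn.example.org:443?transport=tcp" resolves to TCP port 443, which is the kind of configuration that a web proxy might intercept.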

Sometimes you will find that STUN/TURN traffic is being sent to standard ports (e.g., 80 and 443) but failing nonetheless. In this case, a web proxy is a common culprit; for example, the web proxy might be blocking the request for authentication reasons.

The webrtc-internals page also shows useful ICE information. In Figure 13, the app is requiring WebRTC to use its chosen TURN servers to forcibly route the media via the application's server (because this WebRTC app needs to make a recording of the conference and transcode the video codec for maximum inter-browser interoperability).
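One way for an app to force media through its TURN servers is the iceTransportPolicy setting in the configuration passed to RTCPeerConnection: with the value "relay", only relay candidates are used. Whether the app shown in the figure does exactly this is an assumption. The sketch below builds such a configuration object; the type and function names are illustrative.

```typescript
interface TurnServer {
  urls: string;
  username: string;
  credential: string;
}

interface RelayOnlyConfig {
  iceServers: TurnServer[];
  iceTransportPolicy: "relay";
}

// Build a configuration that restricts ICE to TURN relay candidates.
function relayOnlyConfig(servers: TurnServer[]): RelayOnlyConfig {
  if (servers.length === 0) {
    throw new Error("A relay-only policy needs at least one TURN server");
  }
  // Only turn: and turns: URLs can yield relay candidates.
  if (servers.some((server) => !/^turns?:/.test(server.urls))) {
    throw new Error("Relay-only configuration accepts only turn: or turns: URLs");
  }
  return { iceServers: servers, iceTransportPolicy: "relay" };
}
```

With this policy in place, a blocked TURN port means the call fails outright rather than falling back to a direct path, which makes the firewall checks described above all the more important.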

Network Bandwidth

The outcome of a successful signaling and discovery phase is an agreement between the endpoints as to what type of media to send to what IP address:port destination. The endpoints now start to transmit media tracks to each other, and if the ICE process has gone according to plan, that media should arrive at its destination within a few milliseconds of being transmitted. However, scope for user frustration still exists in the form of media quality problems. For a smooth conversation, media packets need to travel between endpoints with as little loss and delay as possible – any latency or jitter of more than about 150ms starts to become noticeable, and 300ms (in one direction) is regarded by IETF as the maximum acceptable amount.

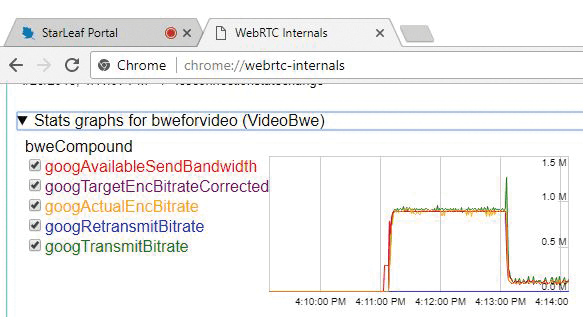

When starting to send a media track, WebRTC implementations use a BWE algorithm to probe rapidly upward to the highest possible bit rate that the network seems able to carry without incurring unacceptable levels of loss and delay, while also allowing a high initial bit rate so that the media starts off at a high quality. On an unconstrained network, and in the absence of any additional video resolution limits requested by getUserMedia, that rate will be approximately 2.5Mbps for a 1080p video camera or screen-share channel.
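The resolution limits mentioned above are expressed as constraints passed to getUserMedia(). The following sketch builds a constraints object that caps width, height, and frame rate using the max form of the constraint syntax. The type and function names are assumptions, and how much a given cap lowers the resulting bit rate depends on the browser and codec.

```typescript
interface VideoLimits {
  maxWidth: number;
  maxHeight: number;
  maxFrameRate: number;
}

interface CaptureConstraints {
  audio: boolean;
  video: {
    width: { max: number };
    height: { max: number };
    frameRate: { max: number };
  };
}

// Build getUserMedia() constraints that cap the captured video size and rate.
function cappedVideoConstraints(limits: VideoLimits): CaptureConstraints {
  return {
    audio: true,
    video: {
      width: { max: limits.maxWidth },
      height: { max: limits.maxHeight },
      frameRate: { max: limits.maxFrameRate },
    },
  };
}
```

In a browser, the result would be passed to navigator.mediaDevices.getUserMedia(); for example, limits of 1280 by 720 at 15 frames per second ask for a noticeably lighter stream than an unconstrained 1080p capture.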

When the media flow is established, the endpoints will then implement rate control by gathering statistics for packet delay and packet loss. Up to a point, steady delay will not cause the transmitted bit rate to drop significantly, but when the amount of delay varies significantly from one packet to the next (i.e., high jitter), the endpoints will start to reduce their transmitted bit rates rapidly to just a few hundred kilobits per second, resulting in blurry video (Figure 14a and b).

Packet loss of more than about 10% will cause the same reduction in resolution. Figure 15 clearly shows Chrome's response to the sudden onset of 10% packet loss. The green spike is an increase in bit rate caused by retransmissions, followed by a very large reduction in transmitted bit rate, as determined by its congestion control algorithm. The same effect is observable when high jitter is reported by the far end. WebRTC reacts in a very conservative way, drastically reducing the bit rate of its transmitted video streams when network congestion exceeds certain thresholds, for the sake of keeping the streams going consistently at a lower quality rather than allowing frozen images or video artifacts.
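The shape of this reaction can be approximated with a simple loss-based rule, loosely modeled on the loss-based controller described in the Google Congestion Control Internet-Draft: cut the rate when reported loss exceeds 10%, hold it between 2% and 10%, and probe upward by 5% below 2%. The sketch below is an illustration only. The floor and ceiling values are assumptions, and real browsers combine this with a delay-based estimator, so their actual behavior is more involved.

```typescript
// One step of a simplified loss-based send-rate update.
// lossFraction is the reported packet loss in the range 0 to 1.
function nextSendRate(
  currentBps: number,
  lossFraction: number,
  minBps: number = 150000,
  maxBps: number = 2500000
): number {
  let next = currentBps;
  if (lossFraction > 0.1) {
    // Heavy loss: back off in proportion to the loss.
    next = currentBps * (1 - 0.5 * lossFraction);
  } else if (lossFraction < 0.02) {
    // Negligible loss: probe upward gently.
    next = currentBps * 1.05;
  }
  // Between 2% and 10% loss the rate is held steady.
  return Math.round(Math.min(maxBps, Math.max(minBps, next)));
}
```

Applying the function repeatedly to a series of loss reports shows the asymmetry described above: the rate drops quickly under sustained heavy loss but recovers only 5% at a time once the loss clears.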

One consequence of the adoption of WebRTC on desktop and mobile devices is that every network those devices connect to, be it the main data network or the guest WiFi network, has to be able to supply the high bandwidth and low latency quality of service that real-time video communication needs. The traditional practice of connecting dedicated video conferencing equipment and VoIP phones to a dedicated VLAN, separate from the data VLAN used by people's desktops, is becoming irrelevant, and network administrators will need to bear this in mind when designing their networks and allocating bandwidth.

Identifying the underlying cause(s) of network packet loss is much more difficult than identifying the fact that it is occurring. Unlike a firewall problem, where the exact device whose configuration needs to be fixed is usually obvious, the network connection between two endpoints relies on many individual hops for each to behave perfectly. The culprit is frequently one of the "ends" of the connection – the browser's own network connection, which might be a weak WiFi signal; a slightly suspect cable that works just fine for downloading a web page, but falls apart under the bit rate demands of live high-definition video; or a heavily loaded Internet gateway full of cross-traffic. Before undertaking a more systematic troubleshooting process, you should focus initial troubleshooting efforts on these connections. However, eventually you will come to a point at which you need forensic identification of packet loss causes, and here is a process that can help:

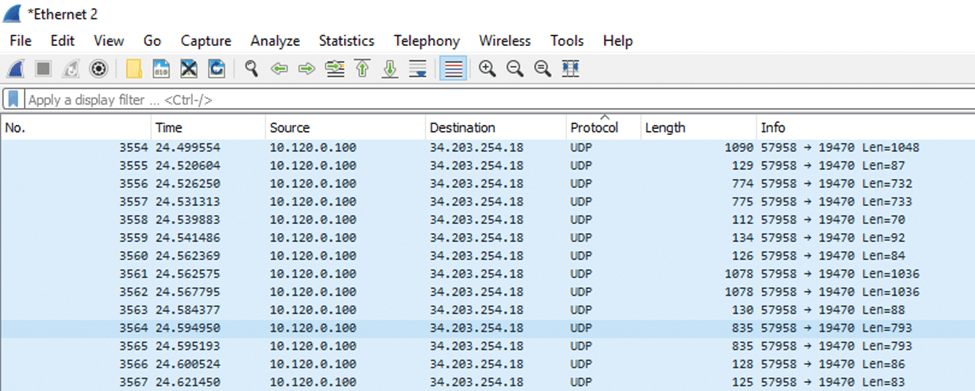

- Use the webrtc-internals page or a tool like Wireshark (Figure 16) to identify the IP address to which the browser is trying to send real-time media. The media will usually, but not always, travel in a UDP stream; the short packets in this example are audio and the long ones are camera video or screen share. Note that different-sized packets can be treated differently by routers, so your user, or their remote peer, might be experiencing a clean audio connection but terrible video.

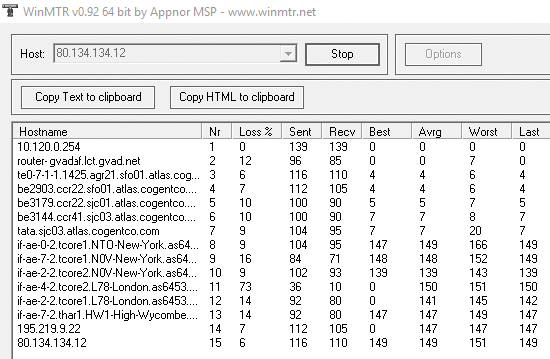

- Run a traceroute (MTR) tool with the host set to the public source/destination of the WebRTC media stream. These results need to be interpreted; for example, some individual hops will be configured to ignore all ICMP traffic anyway, resulting in 100% apparent packet loss. You are looking for levels of packet loss that start at a certain hop and then propagate all the way to the destination host. The hop at which the loss starts is the one that needs attention. In Figure 17, the loss starts at my Internet gateway, because I deliberately introduced it with a network emulation (netem) rule.
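The rule of thumb for reading MTR output (look for loss that starts at one hop and persists to the destination, and disregard isolated hops that simply ignore ICMP) can be expressed as a short function. The HopReport shape and the 1% threshold are assumptions chosen for illustration; the per-hop loss figures would be copied from the MTR report.

```typescript
interface HopReport {
  hop: number;
  host: string;
  lossPercent: number;
}

// Return the first hop from which loss persists all the way to the
// destination, or null when the final hop shows no meaningful loss.
function findLossOnset(
  hops: HopReport[],
  thresholdPercent: number = 1
): HopReport | null {
  let onset: HopReport | null = null;
  for (const hop of hops) {
    if (hop.lossPercent >= thresholdPercent) {
      if (onset === null) {
        onset = hop;
      }
    } else {
      // Loss did not carry past this hop, so earlier loss was only apparent.
      onset = null;
    }
  }
  return onset;
}
```

A hop that shows 100% loss in the middle of an otherwise clean path is skipped by this logic, because the clean hops after it reset the search.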

Of course, the problem could be at either end of the connection – in the sending browser's upload or the receiving browser's download – and the user experiencing the media quality problems is often not the user in whose network the packet loss originates. This need for a coordinated effort is one of the things that makes packet loss troubleshooting difficult. The bottom line is that, when many users on a network start using WebRTC apps that each try to transmit and receive multiple 2.5Mbps video streams, Internet connection upgrades are often the outcome.

WebRTC Test Tool

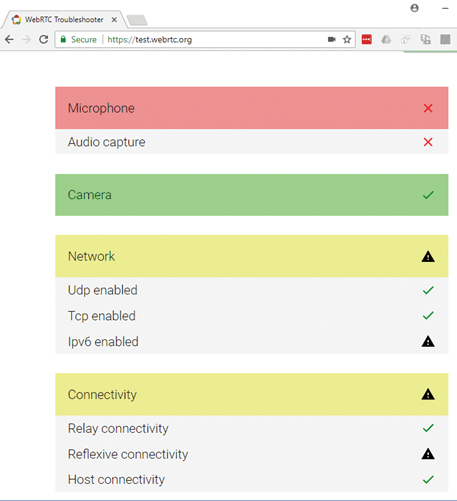

All of the main topics discussed here – device availability, browser capabilities, connection establishment and signaling, and network performance – can be neatly tested at a high level by a single tool on the WebRTC.org website [6]. WebRTC.org is the home of the WebRTC open source project, and this tool is a reliably up-to-date method of determining compatibility with the latest WebRTC capabilities. The tool runs through each of the main categories and reports on each of the problems it finds. Figure 18 shows an example of its output. If it highlights any problems on your users' browsers, the explanations here should help you isolate the source.

Conclusions

WebRTC currently accounts for only a small percentage of unified communications traffic, but it is continuing to grow because of its potential to bring high-quality, easily developed, and interoperable real-time voice, video, and data communication to all manner of applications by means of ubiquitous web browsers. However, users can still encounter frustrating connectivity challenges that deter them from using the technology. Luckily, these problems are usually much easier to solve than would be the case with legacy unified communications applications that tended to be highly proprietary and require a high level of expertise and certification to support. By understanding the connection process and the common failure points, you can help your users adopt this technology and reap its productivity benefits.

The ultimate winning technology for browser real-time communications might be found in one of the nascent technologies, such as Object Real-Time Communications (ORTC) or WebRTC NV (next version), not WebRTC as it currently exists. Nonetheless, the troubleshooting considerations discussed here will hold true for those standards, as well.