Effective debugging of Docker containers

Bug Hunt

Docker must have passed the peak of its hype. New versions no longer trigger the same kind of hustle and bustle in the community and no longer provoke a flood of specialist articles, not least because a considerable amount of interest has shifted away from Docker in recent months toward solutions that use Docker as a basis for larger setups – such as Kubernetes.

Docker is now mainstream. Today it is no longer unusual to use the container solution where you might have relied on virtual machines (VMs) in the past. In this respect, Docker is only one of many virtualization solutions. However, the number of users who deal with Docker in the production environment is growing. As a result, an increasing number of developers and admins who are unfamiliar with Docker initially have problems building and starting a Docker container.

In fact, many things can go wrong when building and operating a Docker container. If you approach a Docker problem with the mindset of an experienced kernel-based VM (KVM) admin, you will not get very far: Most things in Docker are fundamentally different from real VMs. Although you can log in to the latter and investigate a problem in the familiar shell environment, Docker offers its own interfaces for reading logfiles and displaying error messages, for example.

In this article, I describe in detail the options for debugging Docker and Docker containers. First, I introduce various tips to make Docker containers debugging-friendly right from the start.

A Debugging Mindset

Building your own containers makes sense, because prebuilt containers from Docker Hub expose you to the risk of a black box model. On the outside, the container often does what it is supposed to do – what is inside the container and how it has been built, however, remain unclear. Compliance issues already preclude this approach in many companies. If you source your containers on the web, you will also have a hard time debugging.

However, it is not difficult to build Docker containers by starting with the manufacturer's distribution image, as discussed in a previous article on Docker and GitHub [1]. In the end, it takes little more than an error-free Dockerfile containing the central instructions. If you follow this path and build your own containers, you can design them to be easy to debug from the beginning by observing several basic rules.

One Container per Application

First and foremost – and even experienced Docker administrators often disregard this logical recommendation: Put each application into its own Docker container instead of combining several applications into one large container.

The idea of using only one container for several applications probably still reflects the long-standing thinking of VM admins: KVMs produce a certain amount of overhead that multiplies when many small programs are spread over many individual VMs.

However, for containers, this factor is only of minor importance, because a container needs very little space on disk, and container images from vendors are so small that they hardly matter.

As compensation, accepting the disk space overhead offers considerable benefits in terms of debugging. For one thing, investigating a container in which only one application is running does not immediately affect your other applications. Moreover, a single container with one application is easier to build and replace than a conglomerate of several components.

Last but not least, Docker is by definition (because of the way Dockerfiles are constructed) predestined for one application per container. Because Docker containers usually do not have an init system like systemd, the admin usually calls the program to be started directly in the Dockerfile. That program receives virtual process ID (PID) 1 within the container; the container considers the program to be its own init process, so to speak.

Combining several services and programs into the container against your better judgement almost inevitably leads to problems. You then have to work with shell scripts in the background, which might achieve the desired effect but will definitely make debugging far more difficult.

Proper Container Startup

Launching Docker containers is a complex matter and of great importance with regard to debugging. Whether and how you can debug a container depends on how you call the desired application. Startup is a topic that regularly leads to despair when creating Docker containers, because in the worst case, the container crashes immediately after the docker run command.

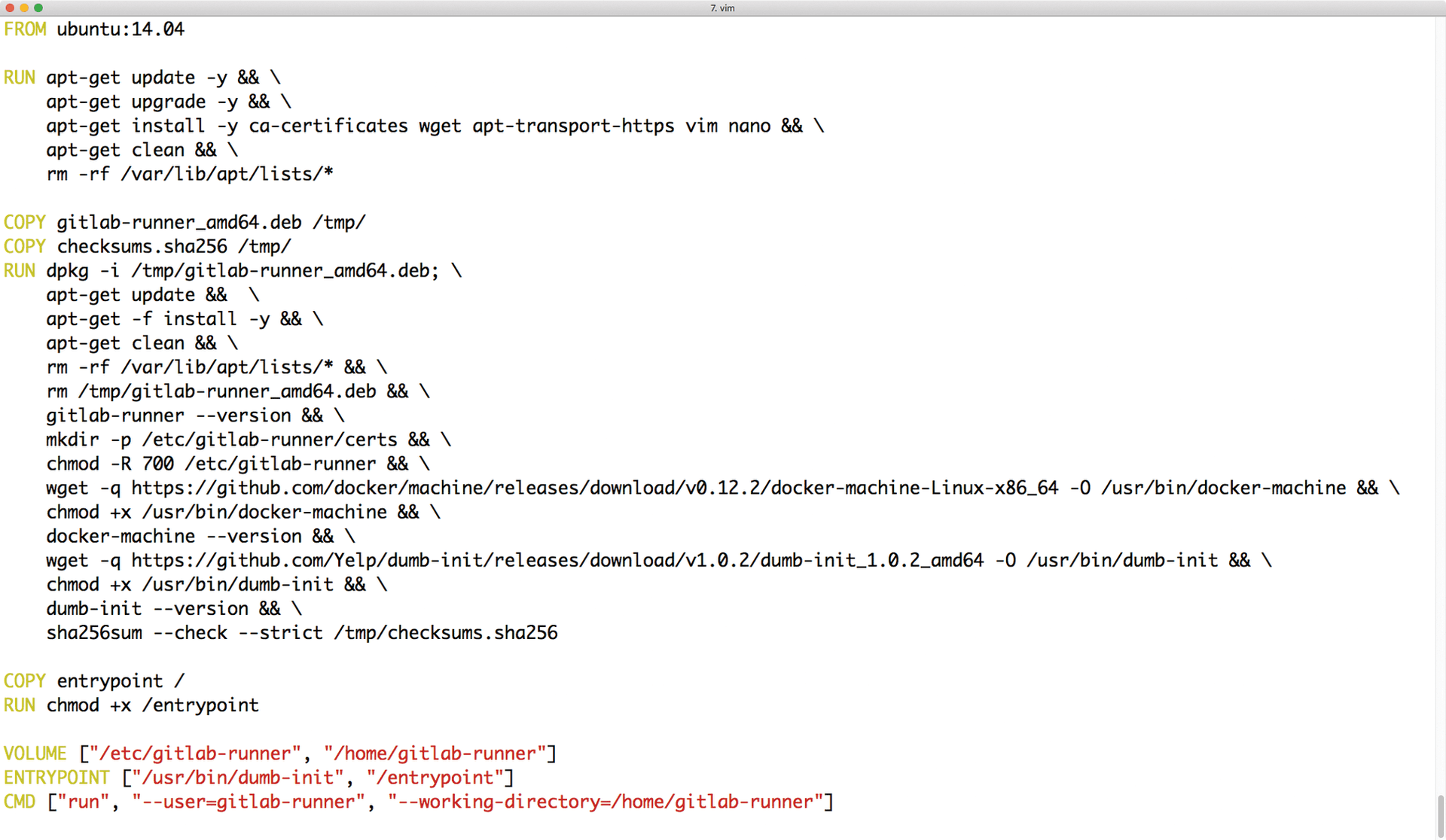

When starting a Docker container, two entries in the Dockerfile play a role: ENTRYPOINT and CMD. Many administrators don't realize the difference between these two at first. Within the container, the CMD is passed as a parameter directly to the program or script defined in ENTRYPOINT. If the container itself does not specify an ENTRYPOINT and the CMD is written in shell form, Docker runs the CMD through /bin/sh -c. If the CMD is /bin/ping and you do not define a separate ENTRYPOINT, the container therefore calls the /bin/sh -c /bin/ping command at startup (Figure 1).

Figure 1: ENTRYPOINT, as shown in GitLab's Docker containers, determines the init process within the container.

The two values can therefore also be used in combination: For example, it is possible and customary to define a program as the ENTRYPOINT and to supply it with parameters with CMD. The entry in ENTRYPOINT is then the process with virtual PID 1 within the container.
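To check which ENTRYPOINT and CMD an image actually carries, you can ask Docker directly. The following is a minimal sketch; the function name show_start_command and the image name in the example call are my own placeholders, not part of any image discussed here.

```shell
# Print the ENTRYPOINT and CMD stored in an image as JSON arrays.
# A value of "null" means the image does not set that entry.
show_start_command() {
    image=$1
    docker image inspect \
        --format 'ENTRYPOINT={{json .Config.Entrypoint}} CMD={{json .Config.Cmd}}' \
        "$image"
}

# Example call (hypothetical image name):
#   show_start_command my-ping-image
```

If ENTRYPOINT is null and CMD starts with /bin/sh -c, the image was built with a shell-form CMD.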

As already mentioned, ENTRYPOINT should ideally not be a shell script that starts multiple processes. If this cannot be avoided for some reason, all commands in that shell script, except for the last one, must either terminate cleanly or retire to the background with the use of &. The last command, however, must neither exit (not even with a return value of 0) nor disappear into the background, because the entire Docker container terminates as soon as it does.
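If a wrapper script is unavoidable, its structure could look like the following sketch. Everything in it is illustrative: the entrypoint function, the APP_STATE_DIR variable, and the sleep call that stands in for a background helper are assumptions, not taken from a real image.

```shell
# Sketch of an entrypoint wrapper; all names are hypothetical.
entrypoint() {
    # 1. One-off preparation that terminates cleanly before the service starts.
    mkdir -p "${APP_STATE_DIR:-/tmp/app-state}"

    # 2. A helper that retires to the background (sleep is only a placeholder).
    sleep 1 >/dev/null 2>&1 &

    # 3. The main program: exec replaces the shell with the program, so the
    #    program stays in the foreground and takes over the shell's PID.
    exec "$@"
}

# As the last line of a real entrypoint script:
#   entrypoint "$@"
```

Because of exec, the program passed in through CMD becomes PID 1 when the script itself is the container's ENTRYPOINT, and nothing after the exec line is ever executed.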

If Startup Goes Wrong

If you have taken all prescribed precautions and the container still crashes on startup, you have several ways to approach the problem. What most people don't know is that the docker run call at the command line, with which you start the container, also has an --entrypoint parameter, which you can use to overwrite the Dockerfile's ENTRYPOINT directly at the command line, for example with a shell such as /bin/sh.

Additionally, you will also want to specify the -it parameters and make sure the -d parameter is missing in the container call. The Docker container then starts with the shell as its program; in the next step, you can then enter the command defined as the actual Dockerfile's ENTRYPOINT.

However, you should make sure the command doesn't try to disappear into the background as a daemon. Once these conditions are met, it quickly becomes clear what the problem is. A change to the Dockerfile is a remedy.

Incidentally, the value of CMD also can be overwritten at the command line. To do this, simply append the desired value to the Docker command. This method is practical because it is difficult to define parameters when defining the ENTRYPOINT; instead, you can pass them in using CMD.
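Both overrides can be wrapped in small helper functions. This is a sketch under the assumption that the image contains /bin/sh; the function names debug_shell and run_with_cmd are made up for this example.

```shell
# Start a container with an interactive shell instead of the ENTRYPOINT
# from the Dockerfile. Without -d, the container stays attached to the terminal.
debug_shell() {
    image=$1
    docker run -it --entrypoint /bin/sh "$image"
}

# Keep the image's ENTRYPOINT, but replace CMD with the arguments that
# follow the image name.
run_with_cmd() {
    image=$1
    shift
    docker run -it "$image" "$@"
}

# Example calls (hypothetical image name):
#   debug_shell my-ping-image
#   run_with_cmd my-ping-image -c 3 127.0.0.1
```

Inside the shell started by debug_shell, you can then type the original ENTRYPOINT command by hand and watch what it prints before it fails.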

Program Output

A key issue when debugging applications in Docker containers is their output to stdout and stderr. If the program in the ENTRYPOINT starts with the parameters from CMD, you won't notice a thing outside of the container. On normal systems, reading logs is not a big challenge: They run a syslog daemon, which collects output from the running programs and writes it to various logfiles. However, a Docker container does not have a separate syslog daemon.

In Docker, logging therefore works in a fundamentally different way than in normal systems. Because there is no systemd – or in fact any init system at all – in Docker containers, applications in Docker containers are usually designed for operation in the foreground. This setup works well as long as you adhere to the "one application per container" principle; then, the logs usually end up automatically in the stdout standard output channel.

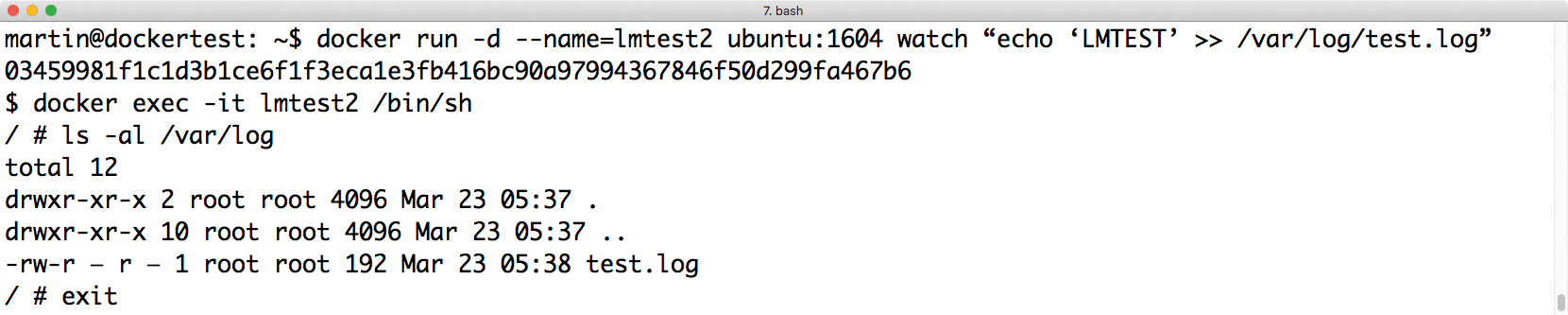

The interesting thing here is that Docker has a logs operation that displays, at the host's command line, everything a container has written to its default output channel. If the application inside the container uses this channel diligently, the output becomes visible on the host. The command is very easy to use:

docker logs test

displays the output to stdout for the test Docker container. If you want to follow the output continuously, insert -f between logs and test (or the container name of your choice). With this option, docker logs works in a very similar way to tail -f.

As an alternative to docker logs -f, you also have the docker attach command. It simply replicates the container's standard output channel in real time on the admin terminal and is therefore very similar to docker logs -f. In contrast to attach, docker logs can display its contents even if a container is not running, provided the stopped container itself has not been deleted with docker rm.

This property makes the command ideal for scenarios in which a container crashes immediately after docker run is called. The output of docker logs usually contains the reason; attach can only be used with a running container (Figure 2).
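For the crash-on-startup case, the lookup can be automated. The sketch below lists every exited container that still exists on the host and prints the tail of its output. The function name logs_of_exited and the default of 20 lines are assumptions chosen for this example.

```shell
# Show the last lines of output for every container that has exited
# but has not yet been removed with docker rm.
logs_of_exited() {
    lines=${1:-20}
    docker ps -a --filter status=exited --format '{{.Names}}' |
    while read -r name; do
        echo "== $name =="
        # Merge stderr into stdout so error messages are not lost in a pipe.
        docker logs --tail "$lines" "$name" 2>&1 </dev/null
    done
}

# Example call: show the last five lines per exited container
#   logs_of_exited 5
```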

Figure 2: The logs command outputs the contents of stdout, the default output channel of the container.

See What's Going On

The top tool is indispensable for many administrators: It displays the process table (i.e., a list of all processes currently running on the system, including their respective states). If you use Docker for containerization, you don't have to forgo such a process overview: docker top <Name> displays a snapshot of the processes running in the <Name> container at the command line.

If you follow the mantra of one process per container, the command's output will not be very extensive, but sometimes a container starts child processes or creates threads that can be observed with docker top. The docker ps command, despite its name, works one level higher: It lists the containers on the host along with their states, much as ps lists processes in the shell.
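Because docker top prints a single snapshot of the process list, a small loop helps if you want to watch the processes over time. This is only a sketch; the function name watch_top and its defaults (five rounds, two seconds apart) are my own choices.

```shell
# Print the process list of a container several times in a row.
watch_top() {
    name=$1
    interval=${2:-2}
    rounds=${3:-5}
    i=0
    while [ "$i" -lt "$rounds" ]; do
        docker top "$name"
        i=$((i + 1))
        # Wait between snapshots, but not after the last one.
        if [ "$i" -lt "$rounds" ]; then
            sleep "$interval"
        fi
    done
}

# Example call (container name as used in this article):
#   watch_top test 2 10
```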

Temporarily Stopping a Container

The following tip does not refer so much to the container's inner workings: Docker supports the pause and unpause commands. These commands let you pause running containers without stopping them completely or even deleting them. In everyday life, this can be useful if you need to debug one container and stop another to prevent it from sending further data to the container under investigation. It would take considerably more effort to stop the other container completely and then restart it. However, the combination of pause and unpause ensures that the application in the container simply continues to run at the end of the process as if nothing happened in the meantime.
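The pause and unpause pair lends itself to a wrapper that guarantees the paused container is resumed again. The following sketch assumes two hypothetical containers named producer and consumer; the function name with_paused is also invented for this example.

```shell
# Pause one container, run a debugging command, then resume the container,
# even if the debugging command fails.
with_paused() {
    other=$1
    shift
    docker pause "$other" || return 1
    "$@"
    status=$?
    docker unpause "$other"
    return "$status"
}

# Example call (hypothetical container names):
#   with_paused producer docker logs --tail 50 consumer
```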

Debugging Inside the Container

Even if a container launches successfully, things can still go wrong. If you have little experience with Docker, you may be confused. Unlike a real VM, you can't just SSH in to containers to see what's going on. But don't panic. Docker offers a solution that is probably its most powerful debugging tool: docker exec.

This command can best be compared with chroot at the command line. After calling docker exec <Name> <Command> at the command line, Docker runs the specified command within the container. At the system level, Docker calls the command within the namespaces that already exist for that container, such as its network and process namespaces.

If you append the -it parameters, docker exec also works interactively:

docker exec -it ping /bin/bash

This command calls a Bash shell inside the ping container, which you can use just like a normal shell. If you are looking for a way to "log in" to the running Docker container, you will find it in docker exec (Figure 3).

Figure 3: docker exec catapults you into a shell inside the container, where you can look around.

Inside the Glass House

Accordingly, you have the possibility to call all binaries available in the container from a shell started in this way. If something doesn't work, the usual suspects like ss or ip will help – provided they are included in the Docker image on which the container was built. If you build the containers yourself, you should pay attention to your basic configuration, because the Linux distributors' basic images do not include all the tools you are used to in your daily work.
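Before you rely on a tool inside a container, you can check from the host whether the image ships it. The sketch below assumes that the container at least contains a POSIX sh; the function names in_container and check_tools are hypothetical.

```shell
# Run a single command inside a running container.
in_container() {
    name=$1
    shift
    docker exec "$name" "$@"
}

# Report which of the given tools exist inside the container.
check_tools() {
    name=$1
    shift
    for tool in "$@"; do
        # command -v succeeds only if the tool is found in the container's PATH.
        if in_container "$name" sh -c 'command -v "$1"' sh "$tool" >/dev/null 2>&1; then
            echo "$tool: available"
        else
            echo "$tool: missing"
        fi
    done
}

# Example call (container name as used in this article):
#   check_tools ping ss ip ps
```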

Other Linux tools like ls or ps also work in the Docker container. However, you should not forget that you really only have one view inside the container: the view you are forced into by the various namespaces and security policies. If you want to debug components outside the container, you first need to leave it.

Also be aware that filesystem changes only affect the overlay image of the running Docker container. If you delete this container and restart it from the base image, any modifications are gone. If you find changes you want to keep, you will ideally also want to rebuild the container's base image.

In this context, it is important that a running container's main process cannot simply be restarted from within the container. After changing a configuration file, it can only be reloaded with SIGHUP if the tool supports the signal. Terminating the process with the plan to restart it afterward would immediately end the running shell as well, because the service is virtual PID 1 in the container. An init crash on a Linux system leads to a kernel panic. With Docker, the affected container just terminates immediately.
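From the host, the reload signal can be delivered with docker kill, which sends a signal of your choice to the container's main process. A minimal sketch follows; the function name reload_container is an assumption, and the service must handle SIGHUP for this to have any effect other than ending the container.

```shell
# Ask the main process (PID 1 in the container) to reload its configuration.
# Only useful if the service installs a SIGHUP handler.
reload_container() {
    name=$1
    docker kill --signal=SIGHUP "$name"
}

# Example call (hypothetical container name):
#   reload_container web
```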

Forensic Investigations

If you encounter content during the examination of a Docker container that you would like to look at in more detail, you are faced with a challenge: For good reason, the container's files are strictly separated from the host's files. However, docker supports a cp command that can copy files from running containers to the host. For example, if you suspect that malware has been distributed over a Docker-operated web server after a break-in, you can access these files and put them through in-depth forensics.
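Copying suspicious files out of a container might look like the following sketch. The function name collect_evidence, the default target directory, and the paths in the example call are placeholders.

```shell
# Copy a file or directory from a container to a directory on the host.
collect_evidence() {
    name=$1
    path=$2
    dest=${3:-./evidence}
    mkdir -p "$dest" || return 1
    # The source is written as <container>:<path inside the container>.
    docker cp "$name:$path" "$dest/"
}

# Example call (hypothetical container name and path):
#   collect_evidence web /var/www/html ./evidence-web
```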

In addition to the logs command mentioned before, docker stats is very useful. It continuously displays a container's metrics, such as resource consumption with regard to the CPU, RAM, or the network. Containers running amok can thus be quickly identified and withdrawn from circulation.
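To single out containers running amok, the output of docker stats can be filtered. The sketch below takes one snapshot and prints every container above a CPU threshold; the function name cpu_hogs and the default threshold of 80 percent are assumptions for this example.

```shell
# List containers whose CPU usage exceeds a threshold (in percent).
cpu_hogs() {
    limit=${1:-80}
    docker stats --no-stream --format '{{.Name}} {{.CPUPerc}}' |
    awk -v limit="$limit" '{
        cpu = $2
        sub(/%$/, "", cpu)            # strip the trailing percent sign
        if (cpu + 0 > limit + 0) print $1, $2
    }'
}

# Example call: show containers using more than 150 percent CPU
#   cpu_hogs 150
```

Note that the CPU value can exceed 100 percent on hosts with several cores.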

Container Inspection

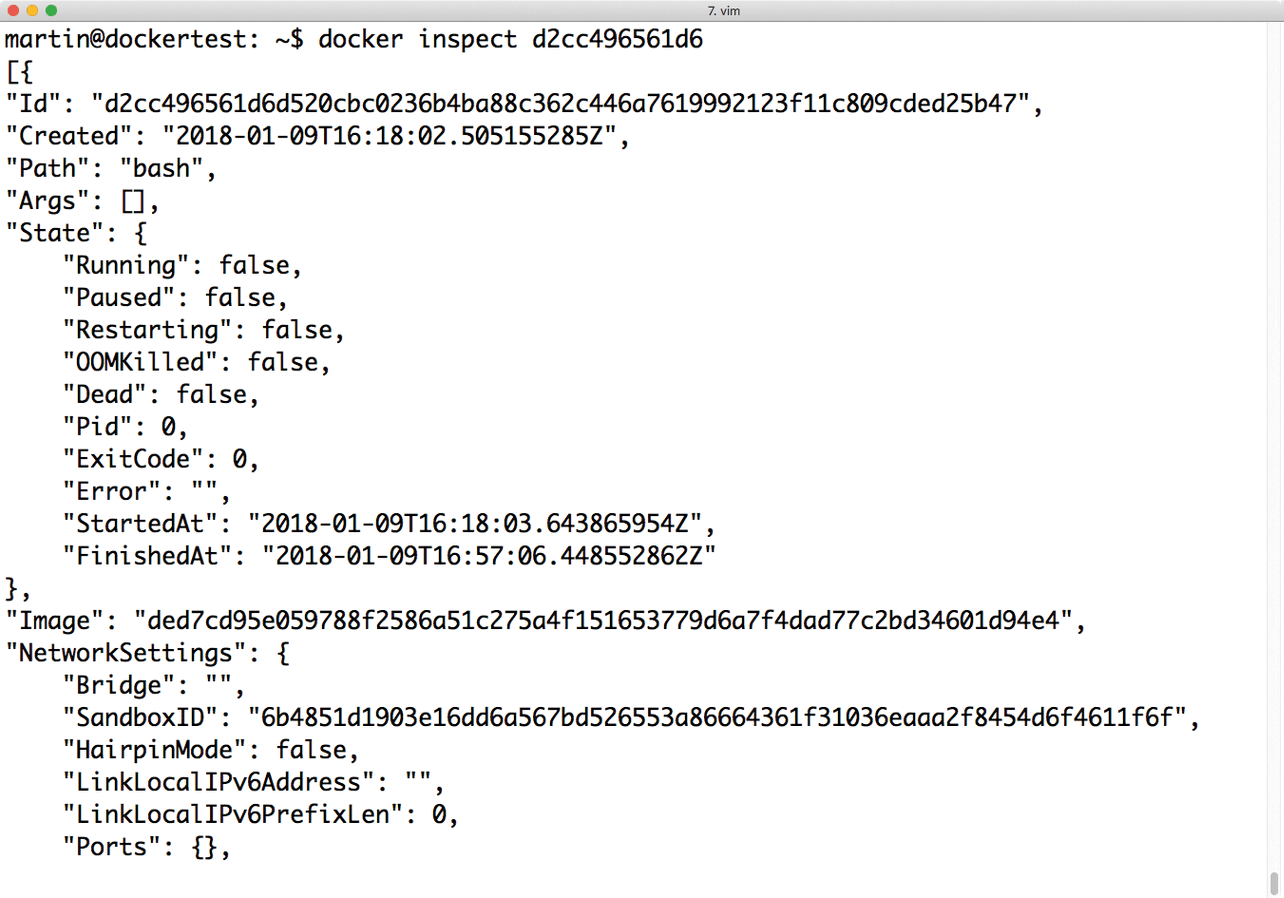

In addition to the many tricks and tips already discussed, Docker itself also offers various helpful information sources. Docker's inspect command is a very powerful tool, which tells you about almost every property of a container.

If you start a container by typing docker run, the docker command-line tool contacts the Docker daemon's API. The command for starting a container takes the form of a REST request in JSON format, which the client sends to the server. The server then processes the request and stores the container's configuration in a JSON file that it uses for internal management of the container.

The file also is updated if the container's state has changed (e.g., because you have connected a new Docker volume to it). The inspect command lets you display this JSON file's contents onscreen, thus opening up a reliable source of information.

Field Info

The State field entry provides information about the container status. If you have exposed ports for the container at startup, the ports are listed in NetworkSettings.Ports. Another practical feature is that the inspect output shows the container's currently connected volumes and their paths on the host filesystem.

The same applies to the container logfile. The Docker daemon logs the output to stdout for each container, so you can access it with the logs command. The container's JSON file stores the automatically generated path to the file with the output to stdout, so you can also access it directly.
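The fields mentioned here can be picked out of the inspect output individually with the --format option. The following sketch assumes the default json-file logging driver, because LogPath is empty otherwise; the function names container_field and container_summary are invented for this example.

```shell
# Print one field of a container's inspect output, selected by a Go template.
container_field() {
    docker inspect --format "$2" "$1"
}

# Summarize the fields that matter most during debugging.
container_summary() {
    name=$1
    echo "status:  $(container_field "$name" '{{.State.Status}}')"
    echo "ports:   $(container_field "$name" '{{json .NetworkSettings.Ports}}')"
    echo "mounts:  $(container_field "$name" '{{json .Mounts}}')"
    echo "logfile: $(container_field "$name" '{{.LogPath}}')"
}

# Example call (container name as used in this article):
#   container_summary test
```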

By far, however, the most important information in the inspect output is the environment that the container received from the Docker daemon at startup time. It is quite common to influence the container's configuration with the use of environment variables passed to docker run with the -e parameter. Inside the container, the entrypoint script or the application itself can then process these variables with the values that have been set accordingly.

If something fails to work when passing in the environment parameters, you can discover from the inspect output which variables were set for the container at startup (Figure 4).
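A quick way to verify the environment from the host is sketched below. The function names container_env and container_has_env, as well as the variable APP_MODE in the example call, are hypothetical.

```shell
# Print the environment a container was started with, one variable per line.
container_env() {
    name=$1
    docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' "$name"
}

# Succeed only if the given variable was set for the container.
container_has_env() {
    container_env "$1" | grep -q "^$2="
}

# Example call (hypothetical names):
#   container_has_env web APP_MODE || echo "APP_MODE was not passed in"
```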

Figure 4: The docker inspect command displays detailed information about the container, such as the connected networks or their current states.

Examining Layers

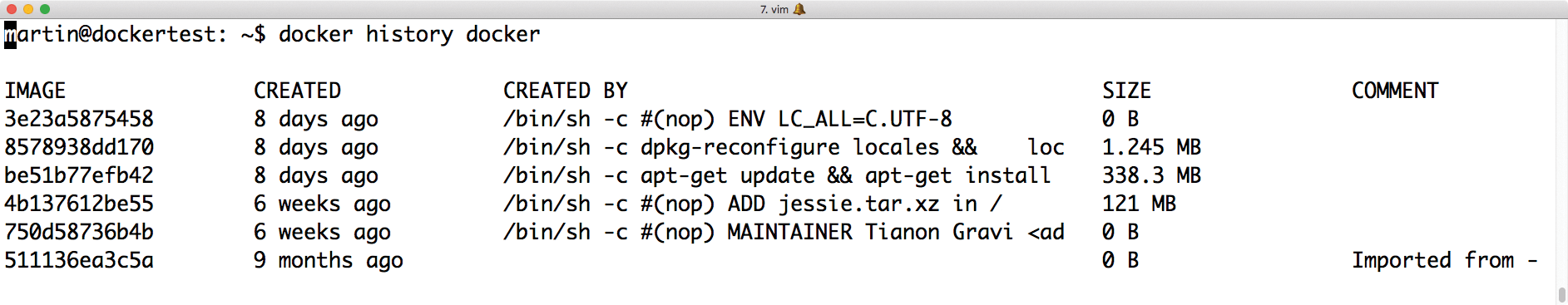

If you find yourself in the harrowing situation of needing to debug a setup that you did not build yourself and that is based on prebuilt Docker Hub containers, you face a real challenge. You often have to deal with a layer cake: Various users have changed and modified the original Docker Hub image so that it is almost impossible to trace who is responsible for what change and when it happened.

Docker itself offers a little help here: The docker history command can be used to display the changes an image has undergone during its lifetime, including any comments and corresponding notes – which might shed some light on the matter (Figure 5).
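By default, docker history truncates the command that created each layer. The following sketch prints the full commands together with creation time and size; the function name image_history and the image name in the example call are placeholders.

```shell
# Show every layer of an image with its creation time, size, and the
# untruncated instruction that created it.
image_history() {
    image=$1
    docker history --no-trunc \
        --format '{{.CreatedAt}}  {{.Size}}  {{.CreatedBy}}' "$image"
}

# Example call (hypothetical image name):
#   image_history some/prebuilt-image
```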

Figure 5: docker history reveals what changes have been made to a Docker image.