Continuous integration with Docker and GitLab

Assembly Line

Various articles have already explained why it is not a good idea to rely on prebuilt Docker images (e.g., from Docker Hub [1]). Many of the images found there are black boxes whose build process is completely opaque. You don't want that at the core of your infrastructure.

The good news: You can easily build your own Docker images on the basis of the official images from the various vendors. Practically every common Linux distribution, such as Ubuntu, Debian, and CentOS, offers official Docker images on Docker Hub, and their sources are freely available for anyone to download.

GitLab [2] proves extremely useful in this context: It comes with its own Docker registry and offers many tools for true continuous integration (CI). One example is pipelines: If you put tests in your Docker project's repository, GitLab rebuilds the Docker image and then automatically runs these tests every time you push a commit to the Git repository.

In this article, I present a complete Docker workflow based on GitLab in detail. Besides the necessary adjustments in GitLab, I describe the files required in the Git repository of a Docker project in GitLab.

Conventional Approach

The classic approach is to install the services needed on central servers (see the "Deep Infrastructure" box), provide them with the corresponding configuration files, and operate them there – possibly with Pacemaker or a similar resource manager for high availability. However, this arrangement is not particularly elegant: If you want to compensate for the failure of such a node quickly, you would first have to invest a great deal of time in automation with Ansible or other tools.

Moreover, you would have to follow the maintenance cycles of the distributor whose Linux product is running on your own servers. If an update goes wrong, in the worst case, the entire boot infrastructure is affected.

Doing It Differently

Another approach is to roll out services such as NTP or dhcpd as individual containers on a minimal Linux distribution (e.g., Container Linux [3], formerly CoreOS). The boot infrastructure then runs only a lean base system that downloads containers from a central registry during operation and simply runs them. To update a service, you deploy a new container for that program.

If you want to rebuild the boot infrastructure completely (e.g., because of important Container Linux updates), you install a fresh Container Linux, which automatically downloads all relevant containers on first boot and starts them again. Container Linux even provides Ignition [4], a provisioning framework that automates this first-boot configuration.

Of course, the containers must be prepared so that they already contain the required configuration and all relevant data. For updates, you also need to be able to rebuild a container at any time without much overhead. This is easier said than done: In fact, this workflow requires a comprehensive continuous integration and continuous delivery (CD) system that can reliably rebuild your Docker images.

Step 1: Docker Registry in GitLab

A working CI process for Docker containers in GitLab relies on two components. The GitLab CI system is a standard feature of GitLab, but it needs what is known as a Runner to perform the CI tasks. The second component is the Docker registry, which is the target of the CI process: The finished Docker image ends up there.

Step 1 en route to Docker CI with GitLab is to enable the Docker registry in GitLab. The following example assumes the use of the Community Edition of GitLab based on the GitLab Omnibus version. To enable the registry, you just need three lines in /etc/gitlab/gitlab.rb:

registry_external_url 'https://gitlab.example.com:5000'
registry_nginx['ssl_certificate'] = "</path/to/>certificate.pem"
registry_nginx['ssl_certificate_key'] = "</path/to/>certificate.key"

If the certificate and SSL key for GitLab are already stored in /etc/gitlab/ssl/<hostname>.crt and /etc/gitlab/ssl/<hostname>.key, you can skip the two Nginx path settings and keep only the first line. The example assumes that GitLab's Docker registry uses the same host name as GitLab itself, but a different port. The GitLab Docker registry is also secured by SSL out of the box.

Once gitlab.rb contains the required lines, regenerate the GitLab configuration with Chef, which ships with the Omnibus edition of GitLab, and restart GitLab:

sudo gitlab-ctl reconfigure
sudo gitlab-ctl restart

Using netstat -nltp, you can then check whether Nginx is listening on port 5000. On a host with Docker installed, you can also try

docker login gitlab.example.com:5000

to connect to GitLab's Docker registry.
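Besides netstat and docker login, a quick check with curl from any host can confirm that an actual registry, not just some service, answers on port 5000. The following sketch relies on the Docker Registry HTTP API V2, in which a GET request to /v2/ returns 200, or 401 if authentication is required. The URL is the example host name used above; the function name registry_answers is hypothetical.

```bash
# Sketch: check that the GitLab registry answers on its external URL.
# The default URL is the example host name from gitlab.rb (an assumption).
REGISTRY_URL="${REGISTRY_URL:-https://gitlab.example.com:5000}"

# registry_answers URL
# Returns 0 if GET URL/v2/ yields HTTP 200 (open) or 401 (authentication
# required), which is how a Docker Registry HTTP API V2 endpoint responds.
registry_answers() {
  local code
  # -s: silent, -o /dev/null: discard the body, -w: print only the status
  # code; curl prints 000 if no HTTP response arrived at all
  code=$(curl -s -o /dev/null --max-time 10 -w '%{http_code}' "$1/v2/")
  case "$code" in
    200|401) echo "registry at $1 answers (HTTP $code)"; return 0 ;;
    *)       echo "no registry at $1 (HTTP $code)" >&2; return 1 ;;
  esac
}
```

For example, registry_answers "$REGISTRY_URL" && docker login gitlab.example.com:5000 only attempts the login once the registry actually responds.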

Incidentally, in the default configuration, GitLab stores the Docker images in the /var/opt/gitlab/gitlab-rails/shared/registry/ directory. If you want to change this, you just need

gitlab_rails['registry_path'] = "</path/to/folder>"

in gitlab.rb, followed by regeneration of the GitLab configuration and a restart.

Step 2: Activate Runner

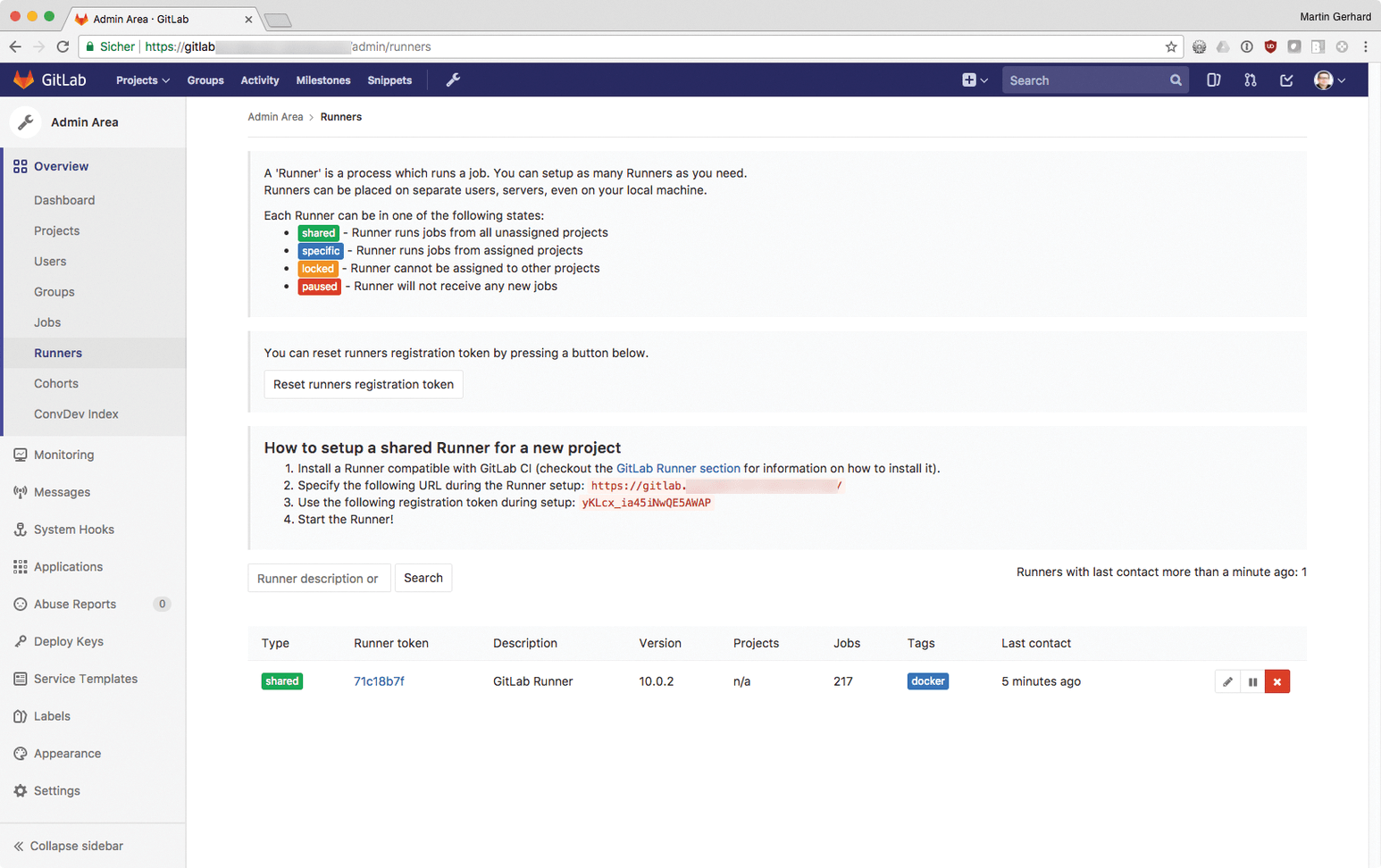

Without at least one Runner, nothing works in GitLab CI. A Runner is essentially an agent that runs on a system and waits for instructions from GitLab CI. It handles the entire CI process, including building the image and running the defined tests. If you haven't used GitLab CI before, you usually won't have an active Runner, so setting one up is the next step toward automating Docker containers.

The Runner can reside on the same host as GitLab itself, but it is more elegant to give the Runner its own system so that it does not have to share resources with other services. Additionally, Docker Community Edition must be installed on the Runner host for the docker command to work. As a first step, you need an authentication token for the new Runner: In the GitLab admin area, click Overview | Runners to find the corresponding token (Figure 1).

The GitLab developers provide a package installation script that can be found online for Debian-based [5] and RPM-based [6] systems (except openSUSE). If you are brave, download the script and execute it immediately with:

curl -L <script URL from [5] or [6]> | sudo bash

If you want to look into the script first, download it and execute it manually as root. At the end of the process, the gitlab-runner package is installed.

Finally, it is time to register the Runner in GitLab. To start the process, enter:

sudo gitlab-runner register

The wizard first asks for the GitLab URL and then for the token from the previous step. Enter the host name accordingly, answer the prompt for tags with docker, and answer the prompt about running untagged jobs with true.

Answer the question about locking the Runner to the current project with false. Finally, enter shell as the executor. A more complex setup would connect the GitLab Runner directly to Docker via the dedicated docker executor, but that is not necessary for this basic example.

At the end of the process, the new Runner appears in GitLab: A new entry can now be found where you previously retrieved the token. By the way, various parameters of the Runner can be changed here retroactively at any time. If something goes wrong during the initial setup of the Runner, the wrong configuration is not set in stone.
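If you set up Runners repeatedly, the wizard's answers can also be passed as command-line options. The following is a sketch, assuming a gitlab-runner version that still accepts registration tokens (newer GitLab releases deprecate them in favor of a different Runner creation workflow, so check the documentation for your version). The function name register_runner and the description string are hypothetical.

```bash
# Sketch: non-interactive equivalent of the wizard answers described above.
# register_runner URL TOKEN
register_runner() {
  local url="$1" token="$2"
  sudo gitlab-runner register \
    --non-interactive \
    --url "$url" \
    --registration-token "$token" \
    --description "docker-build-runner" \
    --tag-list "docker" \
    --run-untagged="true" \
    --locked="false" \
    --executor "shell"
}

# Example (token from Overview | Runners in the admin area):
# register_runner "https://gitlab.example.com" "<token>"
```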

Step 3: Activate GitLab CI for Project

The final step in GitLab preparation is to activate the CI framework for the sample project. To do this, first create a new project in GitLab and then navigate to its settings. There, click Settings | CI/CD and then Expand next to General Pipeline Settings to open the most important configuration settings. Set the Git strategy for pipelines value to git clone.

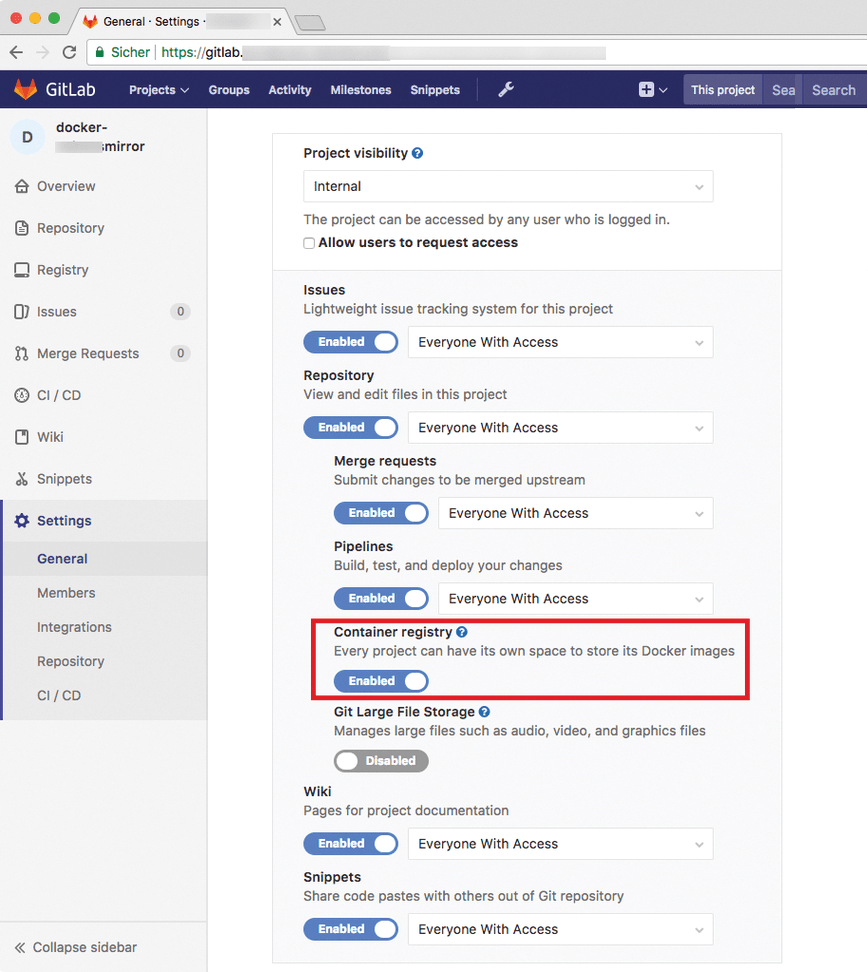

Next, go to Settings and click Expand next to Permissions to make the project settings visible; here, set the Container registry value to Enabled (Figure 2) so that the sample project can store its images in the GitLab registry. However, you still need a trigger to launch any CI automation, which is the subject of the last step.

Step 4: Define CI Pipelines

GitLab expects the CI configuration file, .gitlab-ci.yml, in the project's root directory. The principle of this YAML file is similar to that of a makefile: You define the parts (stages) of the CI process and stipulate which jobs belong to each stage. Because GitLab simply calls a shell to perform the individual steps, you can write the work of each stage as a shell script and merely reference that script in .gitlab-ci.yml. This arrangement keeps the YAML file itself easy to understand.

The names of individual jobs can be chosen freely, but the YAML hierarchy of GitLab CI has a few reserved keywords. Under stages, for example, GitLab expects a list of the project's CI stages. before_script and after_script define commands that GitLab runs before and after each job, and the variables keyword defines environment variables for the jobs. See the GitLab documentation [7] for details.

In the example, .gitlab-ci.yml uses the stages pre_cleanup, build, test, upload, and post_cleanup, which are listed in this order under stages. Each job is then defined according to the following pattern:

<name>:
  script: <command>
  stage: <section>
  tags:
    - docker

The name of a job can be, but does not have to be, identical to the section of the build process to which the job belongs. However, making it match contributes considerably to clarity. The complete build job could look like this:

build:
  script: "bash -x build.sh"
  stage: build
  tags:
    - docker

This entry in .gitlab-ci.yml causes GitLab CI to run bash -x build.sh whenever it processes the build stage. The actual commands for creating the Docker image (i.e., docker build) live in build.sh, which keeps .gitlab-ci.yml tidy. The environment of a job can also be defined dynamically, which enables further standardization.

For example, it makes sense to create a shell script named .gitlab-ci.config that exports the IMAGE, IMAGE_NAME, and VERSION environment variables. If a job sources this file with . ./.gitlab-ci.config as its first command, all further commands of this job have access to them; executing it as ./.gitlab-ci.config instead would set the variables only in a subshell. Because each job starts with a fresh shell, every job that needs the variables must source the file. Also important: A job's script directive can run multiple commands if you specify them as a YAML list.
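A minimal .gitlab-ci.config might look like the following sketch. The repository path infra/nginx is a hypothetical example; CI_REGISTRY_IMAGE and CI_COMMIT_SHORT_SHA are variables that current GitLab versions predefine in CI jobs, and the fallbacks only apply when the file is sourced outside CI.

```bash
# .gitlab-ci.config: sourced with ". ./.gitlab-ci.config", not executed,
# so the exports end up in the job's own shell.

# Image repository: GitLab sets CI_REGISTRY_IMAGE when the project registry
# is enabled; the fallback is a hypothetical path on the example registry
IMAGE_NAME="${CI_REGISTRY_IMAGE:-gitlab.example.com:5000/infra/nginx}"
# Version tag: short commit hash inside CI, "dev" everywhere else
VERSION="${CI_COMMIT_SHORT_SHA:-dev}"
# Complete image reference used by the stage scripts
IMAGE="${IMAGE_NAME}:${VERSION}"

# Export so that child processes such as "bash -x build.sh" inherit them
export IMAGE IMAGE_NAME VERSION
```

Because the variables are exported, a job whose script list first runs . ./.gitlab-ci.config and then bash -x build.sh hands them on to build.sh. Placing the sourcing line under before_script does this for every job at once, because GitLab runs before_script and script in the same shell.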

Similar to the build example, you can add jobs for the other stages in .gitlab-ci.yml; each stage gets its own shell script (e.g., pre_cleanup.sh, test.sh, upload.sh, and post_cleanup.sh). The remaining question is what these shell scripts should contain.

Working with Shell Scripts

Basically, each shell script should run exactly the commands needed for the build step of the same name. In the pre_cleanup stage, pre_cleanup.sh ensures that no Docker container with the same name as the one about to be built already exists on the Runner; otherwise, test.sh would be unable to start a new container under that name.

Similarly, pre_cleanup.sh ensures that a Docker image does not exist with the same name and version in the Runner's local image list; otherwise, the build would again fail.
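A pre_cleanup.sh along these lines could look like the following sketch. It assumes that IMAGE and IMAGE_NAME are exported by .gitlab-ci.config and that test.sh names its container <name>-ci-test, which is a hypothetical convention. Errors are deliberately ignored, because a missing container or image is the normal case here.

```bash
#!/bin/bash
# pre_cleanup.sh: remove leftovers of earlier pipeline runs from the Runner.
# IMAGE and IMAGE_NAME normally come from .gitlab-ci.config; the fallbacks
# and the "-ci-test" container name are hypothetical conventions.
IMAGE_NAME="${IMAGE_NAME:-gitlab.example.com:5000/infra/nginx}"
IMAGE="${IMAGE:-${IMAGE_NAME}:dev}"
CONTAINER="${IMAGE_NAME##*/}-ci-test"

# Remove a running or stopped container of that name (started by test.sh).
# "Nothing to remove" is not an error, so failures are ignored.
docker rm -f "$CONTAINER" >/dev/null 2>&1 || true

# Remove an image with the same name and tag from the local image list.
docker rmi -f "$IMAGE" >/dev/null 2>&1 || true

echo "pre_cleanup: $CONTAINER and $IMAGE removed (if they existed)"
```

post_cleanup.sh can run the same two docker commands after the upload stage to leave the Runner clean.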

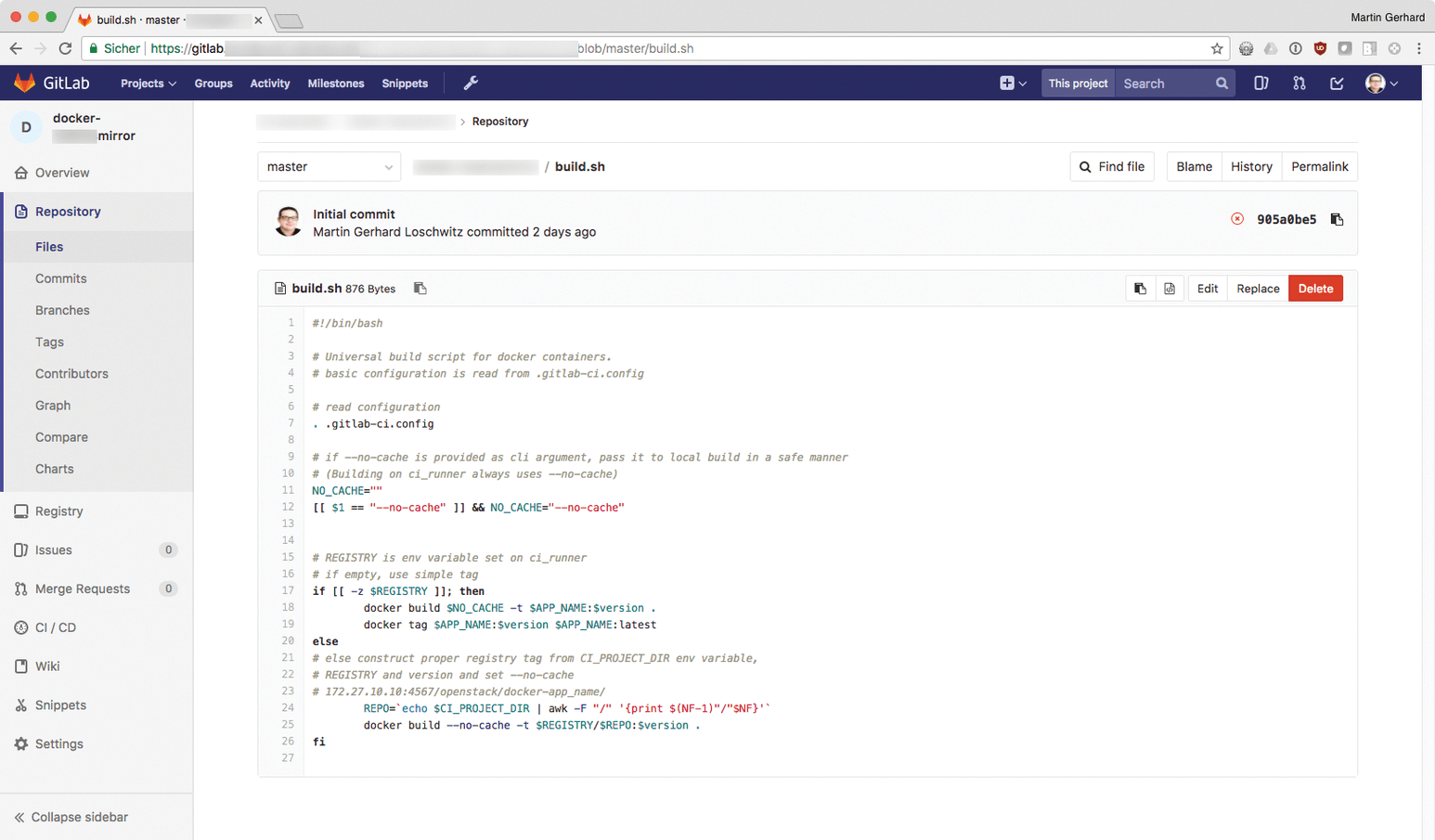

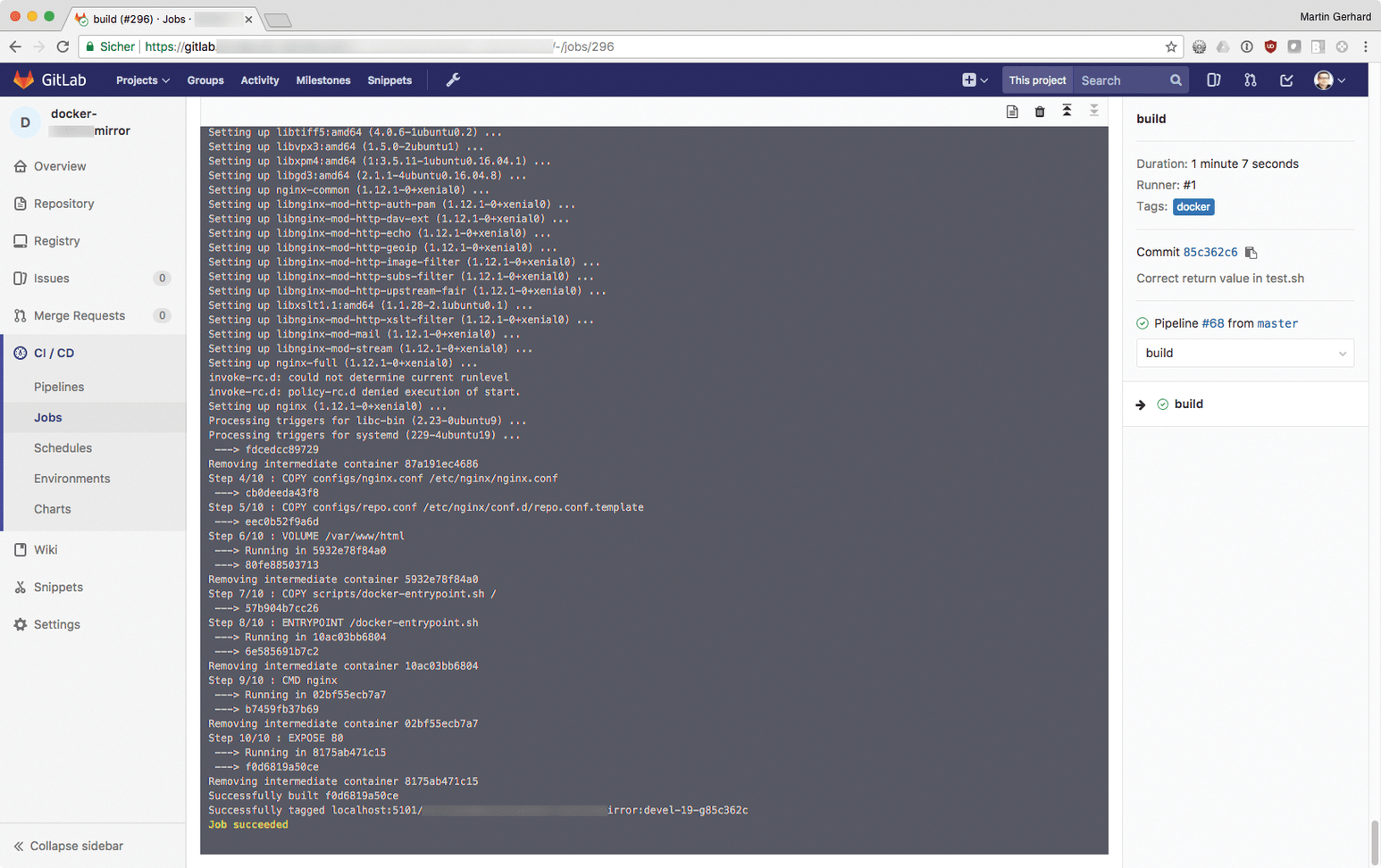

All commands that build the container belong in build.sh: first and foremost docker build, plus any other commands needed (Figure 3). The most interesting part is certainly test.sh. Here, you can define arbitrary tests in shell syntax to check whether the automatically built container really works as intended.
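The core of build.sh can be very short. The following sketch assumes that IMAGE is exported by .gitlab-ci.config and that the Dockerfile sits in the repository root; build_image is a hypothetical function name, and build.sh would simply end with a call to it so that its return status becomes the script's exit status.

```bash
# Core of a possible build.sh.
build_image() {
  # Abort with a clear message if .gitlab-ci.config was not sourced
  : "${IMAGE:?IMAGE is not set}"
  # Build from the Dockerfile in the current directory and tag the result
  # with name and version; --pull fetches a fresh copy of the base image
  # named in the Dockerfile's FROM line
  docker build --pull -t "$IMAGE" . || return 1
}
```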

build.sh example (Figure 3): This variant of the script builds the image and tags it.

For example, if the container is supposed to launch Nginx on ports 80 and 443, a for loop combined with netstat or ss could check whether Nginx actually opens a TCP socket within 30 seconds of the docker run call. Addresses from the loopback range (e.g., 127.127.127.127) are ideal for this test. The docker run call must of course be given appropriate parameters (e.g., an environment variable naming the address to listen on), and the container itself must be able to evaluate these environment variables.
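The Nginx check described above could be sketched as follows. Assumptions: the image serves ports 80 and 443, it evaluates a hypothetical LISTEN_ADDRESS variable to choose its bind address, the container name follows the hypothetical <name>-ci-test convention, and IMAGE/IMAGE_NAME come from .gitlab-ci.config. The container runs with host networking so that ss on the Runner sees Nginx's own sockets; with published ports (-p), Docker's proxy process could already be listening before Nginx is. Instead of a for loop over netstat output, the sketch polls ss once per second and gives each port up to 30 seconds.

```bash
# Sketch of test.sh: start the freshly built image and check that Nginx
# opens its ports on the loopback test address.
TEST_IP="127.127.127.127"

# wait_for_port IP PORT [SECONDS]: poll "ss -ltn" once per second until a
# listening TCP socket on IP:PORT appears; return 1 after SECONDS attempts.
wait_for_port() {
  local ip="$1" port="$2" timeout="${3:-30}" i=0
  while [ "$i" -lt "$timeout" ]; do
    # Column 4 of "ss -ltn" is the local address:port of each listener
    if ss -ltn | awk -v a="${ip}:${port}" '$4 == a { f = 1 } END { exit(f ? 0 : 1) }'; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  return 1
}

# run_tests: start the container, check both ports, clean up.
# Returns 1 on the first failed check, so test.sh (ending with run_tests)
# exits with status 1 and GitLab CI marks the job as failed.
run_tests() {
  local container="${IMAGE_NAME##*/}-ci-test" port
  # Host networking plus the hypothetical LISTEN_ADDRESS variable
  docker run -d --name "$container" --network host \
    -e LISTEN_ADDRESS="$TEST_IP" "$IMAGE" >/dev/null || return 1
  for port in 80 443; do
    if ! wait_for_port "$TEST_IP" "$port" 30; then
      echo "FAIL: nothing listens on ${TEST_IP}:${port}" >&2
      docker rm -f "$container" >/dev/null
      return 1
    fi
  done
  docker rm -f "$container" >/dev/null
  echo "OK: Nginx listens on ports 80 and 443"
}
```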

The exit behavior of the shell script is also very important: It must exit with status 1 as soon as it encounters an error. If all tests succeed, it should exit with status 0 at the end, so that GitLab CI can mark the test as successful. Next, upload.sh uploads the image to the Docker registry. Make sure you not only upload the image, but also give it an appropriate tag.
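For upload.sh, the following sketch logs in to the registry, sets an additional latest tag, and pushes both tags. It assumes GitLab CI's predefined CI_REGISTRY and CI_JOB_TOKEN variables (available to jobs when the project's registry is enabled) and IMAGE/IMAGE_NAME from .gitlab-ci.config. Whether you want a moving latest tag at all is a design decision; upload_image is a hypothetical name.

```bash
# Core of a possible upload.sh.
upload_image() {
  : "${IMAGE:?IMAGE is not set}" "${IMAGE_NAME:?IMAGE_NAME is not set}"
  # Log in with the job's temporary token (user name gitlab-ci-token);
  # --password-stdin keeps the token out of the process list
  printf '%s' "$CI_JOB_TOKEN" |
    docker login -u gitlab-ci-token --password-stdin "$CI_REGISTRY" || return 1
  # Tag the versioned image additionally as "latest"
  docker tag "$IMAGE" "${IMAGE_NAME}:latest" || return 1
  # Upload both tags to the registry
  docker push "$IMAGE" || return 1
  docker push "${IMAGE_NAME}:latest" || return 1
}
```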

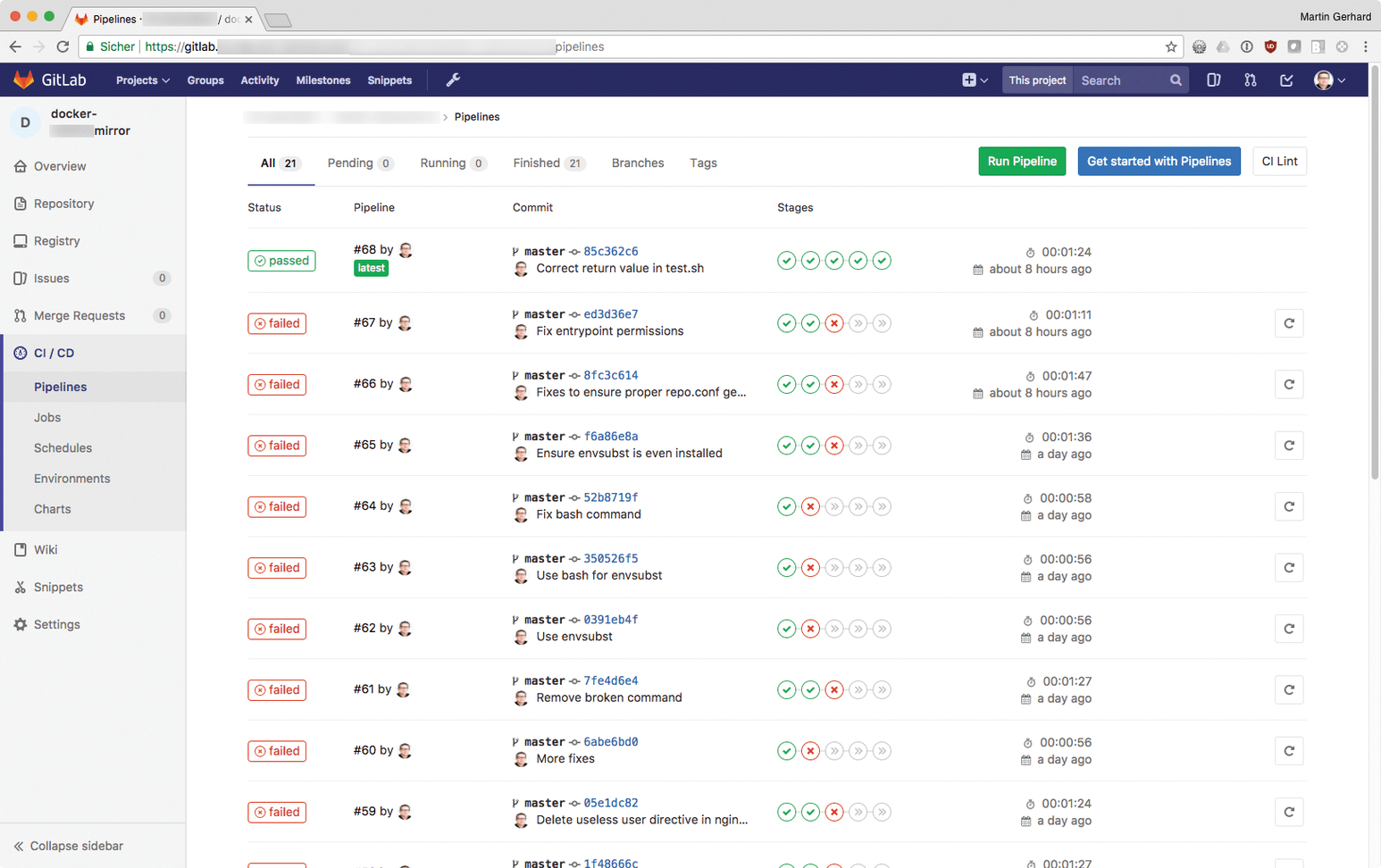

You can track GitLab CI/CD jobs live in the web interface by navigating to the respective project and clicking CI/CD. GitLab lists both the Pipelines (Figure 4) and the Jobs (Figure 5). If a test fails, GitLab also notifies you by email.

Where's the Beef?

If you've built Docker images before, you might be asking yourself where the Dockerfile got to. After all, it contains all the relevant statements for Docker build. Clearly, besides the various CI scripts, you still need all the other files that you would need without CI – first and foremost, the Dockerfile.

Conclusions

GitLab CI and its Docker integration prove to be a very practical GitLab add-on. If you use GitLab anyway and want to build Docker containers, you should take a close look at GitLab CI, especially if you do not want to distribute your apps with Docker but are looking for a very lean and efficient method to operate deep infrastructure components.