Persistent volumes for Docker containers

Solid Bodies

Docker guarantees the same environment on all target systems: If a Docker container runs for the author, it also runs for the user, and it can even be preconfigured accordingly. Although Docker containers might seem like a better alternative to the package management of current distributions (e.g., RPM and dpkg), the design assumptions underlying Docker and the containers it distributes differ fundamentally from those of classic virtualization. One big difference is that a Docker container does not have persistent storage out of the box: If you delete a container, all data contained in it is lost.

Fortunately, Docker offers a solution to this problem: A volume service can provide a container with persistent storage. The volume service itself is merely an API that relies on functions provided by the loaded Docker plugins. For many types of storage, plugins allow containers to be connected directly to a specific storage technology. In this article, I first explain the basic concept of persistent storage in Docker and why the detour through the volume service is necessary. Then I show how persistent storage can be used in Docker with the appropriate plugins in two types of environments, OpenStack and VMware, before looking at a direct connection to Ceph.

Planned Without Storage

The reason persistent storage is not automatically included with every Docker container goes back to a time long before Docker itself existed. The cloud is to blame: It declared permanent storage obsolete, because storage regularly poses a challenge in classic virtualization setups. If you compare classic virtualization and the cloud, it quickly becomes clear that two worlds collide here. A virtual machine (VM) in a classic environment rightly assumes that it resides on persistent storage, which allows the entire VM to be moved from one host to another. However, this requires redundant storage in the background on which the data is stored centrally. Local storage (e.g., on the hard drive of the virtualization server) does not allow the flexibility described.

Because of the complicated tinkering required in the background, persistent storage was a difficult concept in the cloud right from the start. Amazon, the industry leader, distinguishes between two storage types in its cloud: Ephemeral storage is volatile storage that belongs to a specific VM and only exists as long as the VM exists, whereas volumes are persistent and can be attached to and detached from VMs at will. As Amazon makes clear, ephemeral storage is the normal case. The basic assumption is that every VM in the cloud is fully automated anyway, so after a crash any VM can, at least in theory, be restored quickly. The sober conclusion is that applications simply have to accommodate this design: They need to store their data in a central database instead of locally.

Volume Service for Persistent Storage

Although Docker is not used exclusively in the cloud context, it has adopted some of the cloud's design maxims, including those regarding persistent storage. However, the idea of "cloud-ready" applications that do not contain their own data often cannot be reconciled with the everyday reality of sys admins. Even the greatest supporters of the concept must admit at some point that some situations simply need persistent storage. The databases in which applications are supposed to store their data, as mentioned above, are a prime example: Their data has to outlast a restart of the container or, if necessary, be attached to another container (e.g., as part of a database update).

The Docker developers solve the problem with their volume service: A volume is by definition a virtual hard drive that can be connected to any container and provides permanent storage. At the heart of the solution is the volume API: If you define persistent storage for a container, calling docker on the command line triggers a corresponding request to the volume API, which provides the desired storage and then connects it to the target container.
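To make the volume API more tangible, here is a minimal Python sketch of the plugin side of that conversation. In Docker's volume plugin protocol, Docker sends JSON requests via HTTP POST to endpoints such as /VolumeDriver.Create and /VolumeDriver.Mount, typically over a Unix socket under /run/docker/plugins. The plugin answers with JSON containing a mount point or an error string. The endpoint names follow that protocol, but everything else is a hypothetical toy: The back end is just one directory per volume in a temporary folder, which also illustrates why a purely local volume is not redundant. A real plugin would call Cinder, vSphere, or Ceph at these points, and the socket name toy.sock is invented.

```python
import json
import os
import shutil
import socketserver
import tempfile
from http.server import BaseHTTPRequestHandler

# Toy back end (hypothetical): one directory per volume on the local host.
# A real driver would create and attach Cinder, vSphere, or Ceph volumes here.
BASE_DIR = tempfile.mkdtemp(prefix="toy-volumes-")
VOLUMES = {}  # volume name -> host path


def handle(endpoint, req):
    """Answer one volume plugin call with the JSON body Docker expects."""
    name = req.get("Name", "")
    if endpoint == "/Plugin.Activate":
        return {"Implements": ["VolumeDriver"]}
    if endpoint == "/VolumeDriver.Capabilities":
        # "local": the volume exists on this host only, i.e., no redundancy.
        return {"Capabilities": {"Scope": "local"}}
    if endpoint == "/VolumeDriver.Create":
        if not name or os.sep in name:
            return {"Err": "invalid volume name"}
        path = os.path.join(BASE_DIR, name)
        os.makedirs(path, exist_ok=True)  # a real driver would honor req["Opts"]
        VOLUMES[name] = path
        return {"Err": ""}
    if endpoint == "/VolumeDriver.List":
        vols = [{"Name": n, "Mountpoint": p} for n, p in VOLUMES.items()]
        return {"Volumes": vols, "Err": ""}
    if name not in VOLUMES:
        return {"Err": f"no such volume: {name}"}
    if endpoint in ("/VolumeDriver.Mount", "/VolumeDriver.Path"):
        return {"Mountpoint": VOLUMES[name], "Err": ""}
    if endpoint == "/VolumeDriver.Get":
        return {"Volume": {"Name": name, "Mountpoint": VOLUMES[name]}, "Err": ""}
    if endpoint == "/VolumeDriver.Unmount":
        return {"Err": ""}
    if endpoint == "/VolumeDriver.Remove":
        shutil.rmtree(VOLUMES.pop(name))
        return {"Err": ""}
    return {"Err": f"unsupported endpoint: {endpoint}"}


class PluginHandler(BaseHTTPRequestHandler):
    """HTTP wrapper: Docker POSTs a JSON body to /<Endpoint>."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        req = json.loads(self.rfile.read(length) or b"{}")
        reply = json.dumps(handle(self.path, req)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/vnd.docker.plugins.v1+json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)

    def log_message(self, fmt, *args):
        pass  # Unix socket clients have no address to log


def serve(socket_path="/run/docker/plugins/toy.sock"):
    """Hypothetical entry point; writing to /run/docker/plugins needs root."""
    with socketserver.UnixStreamServer(socket_path, PluginHandler) as server:
        server.serve_forever()
```

Answering /VolumeDriver.Capabilities with the scope "local" tells Docker that the volume exists only on this host. A driver backed by shared, redundant storage would report "global" instead.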

Redundancy Is Essential

When dealing with persistent storage, Docker clearly must solve precisely the problems that have always played an important role in classic virtualization. Without redundancy at the storage level, for example, such a setup cannot operate reliably, because the failure of a single container node would mean that many customer setups would no longer function properly. In this constellation, the risk that the failure of individual systems hits precisely the critical points of customer setups, such as the databases, is simply too great.

The Docker developers have found a smart solution to the problem: The service that takes care of volumes for Docker containers can also provision storage locally and connect it to a container. In this case, Docker makes it clear that the volumes are not redundant; in other words, Docker does not tackle the problem of redundant volumes itself. Instead, the project points to external solutions: Various products on the market now offer persistent storage for clouds and deal with issues such as internal redundancy, Ceph being one of the best-known representatives. To enable the use of such storage services, the Docker volume service is coupled with the existing plugin system, so the plugin of an external solution can provide redundant volumes for Docker containers.

The Docker documentation also refers to "storage drivers," which govern how data is stored in the container itself, that is, in the container's root filesystem. The developers recommend keeping regular application data on volumes so that the container itself is not changed.

Connecting OpenStack and Docker

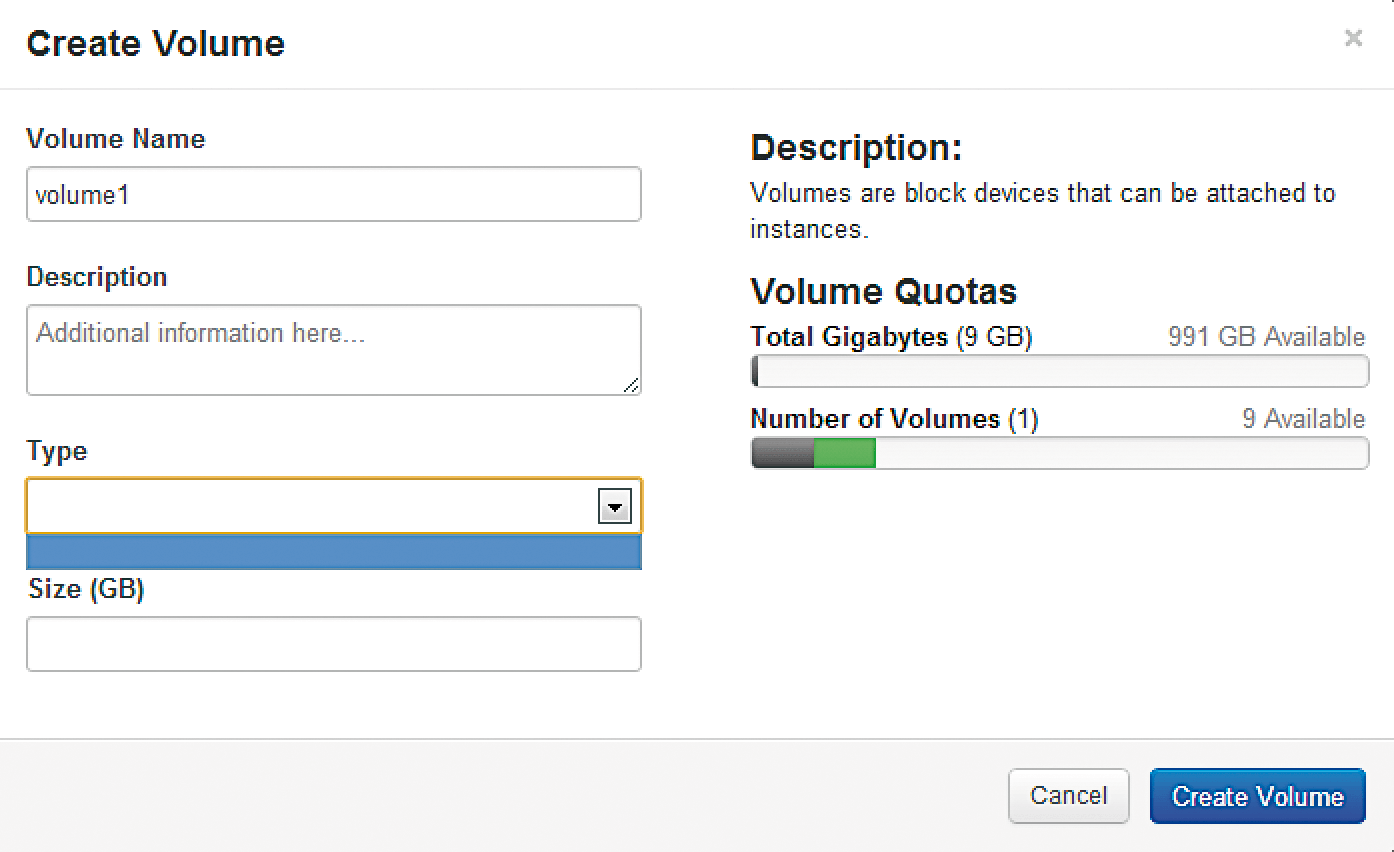

OpenStack is currently the marketing star among open source solutions for the public cloud: Anyone who starts building an OpenStack cloud today knows what they are getting into with this project, especially since OpenStack components are no longer only used as part of a complete OpenStack installation. In particular, the OpenStack network service Neutron and the storage service Cinder (Figure 1) have stepped out of the shadow of their parent project and become useful without a complete OpenStack. That's not the rule, though: Anyone who runs these services usually also deploys the rest of OpenStack to sell public cloud services to customers.

Companies that operate OpenStack as part of a public cloud installation regularly want to offer their customers Docker containers as well. However, OpenStack and Docker do not harmonize very well: Many of their design approaches clash, and the Docker driver for Nova, OpenStack's virtualization component, is now orphaned. Nevertheless, OpenStack and Docker can be combined: Nova does not necessarily have to manage the Docker containers, but if you already have a public cloud with OpenStack and its storage management, you can connect Docker directly to Cinder. This elegant solution saves you from having to build a second storage repository explicitly for Docker.

Kuryr Supports Containers

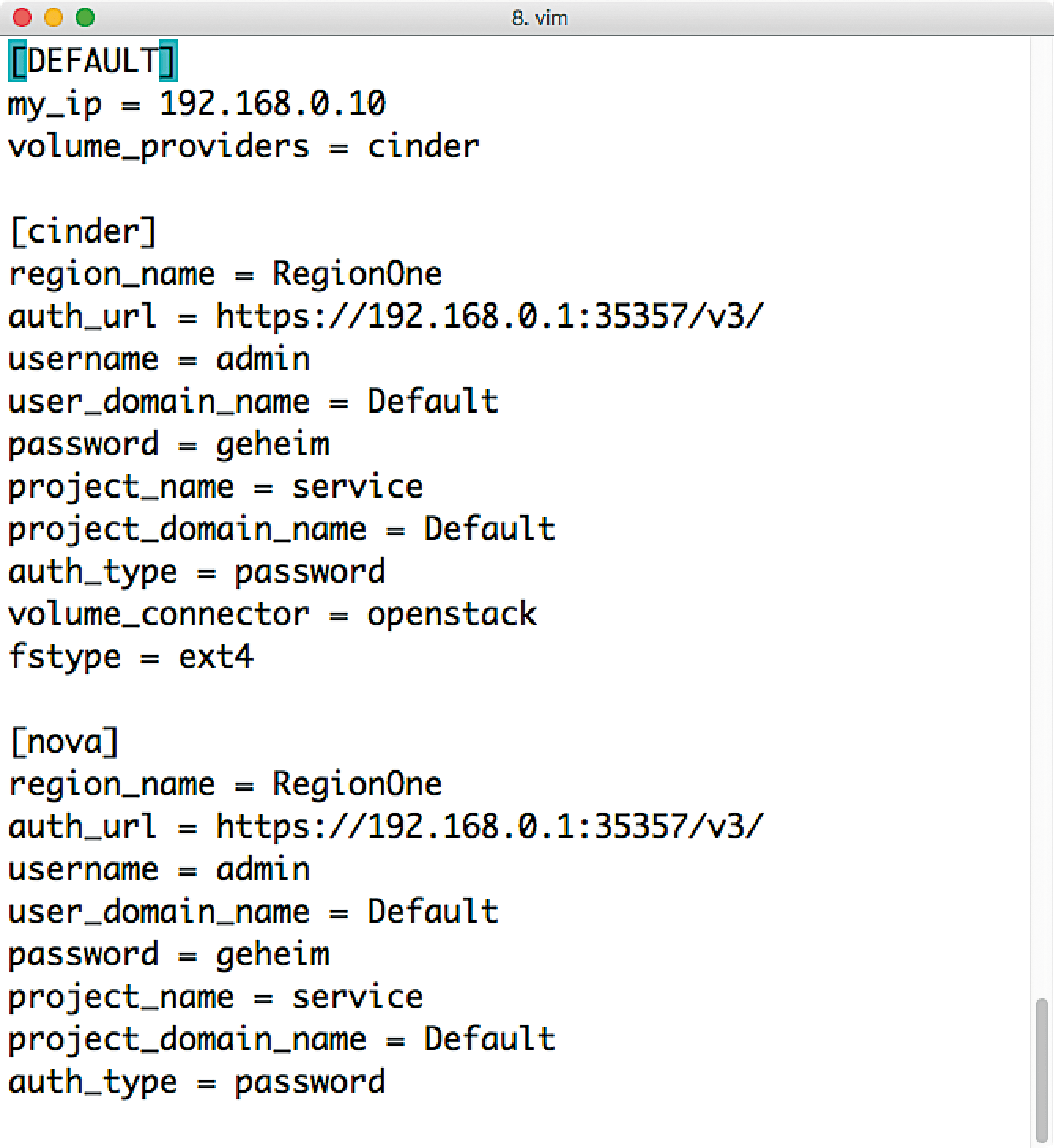

For some time, the OpenStack project has been developing a collection of tools under the name Kuryr that map Docker's storage and network model onto OpenStack. The "Fuxi" subproject, which is responsible for storage in Kuryr, is a separate service that implements the Docker volume API and thus acts as the direct counterpart of the Docker command-line client.

Basically, Fuxi acts as a translator: It translates the incoming commands of the Docker command-line client into API calls to the underlying OpenStack services, evaluates the return values, and connects the running container to the appropriate volume. For this to work, Fuxi consists of two components: the API service described above and the Fuxi plugin in Docker itself.

The Fuxi API service must run on every host on which Docker is to run containers, because the Fuxi plugin always connects to the API on its own server. Fuxi can also be used if you manage Docker with Kubernetes instead of using it directly. In this case, however, a third module is needed in addition to the Fuxi API and the Fuxi driver for Docker: the integration layer between Kubernetes and Fuxi. If it is missing, Docker can create volumes with Fuxi, but Kubernetes will not know anything about this storage.

Using Fuxi

Once the OpenStack admin has rolled out Fuxi to the Docker node (Figure 2) and provided it with an appropriate configuration (the tool's documentation provides valuable clues), corresponding volumes can be created on the command line:

$ docker volume create --driver fuxi --name it-admin-test --opt size=1 --opt fstype=ext4 --opt multiattach=true --opt volume_provider=cinder

The last parameter is especially important: In addition to Cinder, Fuxi can also create volumes with Manila, the OpenStack shared filesystem service (e.g., NFS shares). If you omit this parameter, the Fuxi driver for Docker simply refuses to create the volume.
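The translation step that Fuxi performs can be sketched roughly as follows. The Python function below takes the options from the docker volume create command above (Docker passes every --opt value as a string) and turns them into a request for the selected back end. It refuses to continue if volume_provider is missing or unknown. This is a hypothetical illustration, not Fuxi's code: The function name and the defaults (size 1, ext4) are invented, and the request bodies are only modeled on the general shape of Cinder's create-volume and Manila's create-share calls.

```python
def translate_create(name, opts):
    """Map 'docker volume create --driver fuxi' options to a back-end request.

    Hypothetical sketch: option names come from the command above; the
    request layouts are assumptions, not Fuxi's actual implementation.
    """
    provider = opts.get("volume_provider")
    if provider not in ("cinder", "manila"):
        # Without a known provider, there is no back end to ask for storage.
        raise ValueError("volume_provider must be 'cinder' or 'manila'")
    # Docker hands over every --opt value as a string, so convert explicitly.
    size_gb = int(opts.get("size", "1"))
    if provider == "cinder":
        request = {"volume": {
            "name": name,
            "size": size_gb,
            "multiattach": opts.get("multiattach", "false").lower() == "true",
        }}
    else:
        request = {"share": {"name": name, "size": size_gb, "share_proto": "NFS"}}
    # fstype is not part of the storage request: The host-side component would
    # use it to format the attached block device before mounting it.
    fstype = opts.get("fstype", "ext4")
    return provider, request, fstype
```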

Alternative to Fuxi

By the way, if you don't like Fuxi, you can turn to the alternative cinder-docker-driver [1]. In principle, it works much like Fuxi: A daemon on the Docker host acts as the translator between Cinder and Docker, and the corresponding Docker plugin consumes its output. This solution, written in Go, is not quite as complex as Fuxi and comes without Kubernetes support.

Containers in VMs

Recently, it has become increasingly fashionable to run Docker containers in VMs that in turn run on OpenStack. This nesting might seem strange at first glance, but there are good reasons to do it this way: You do not have to provide your own bare metal for your Docker containers but can directly use the infrastructure available in a cloud. Moreover, performance in a VM is not automatically worse than on real hardware. However, with regard to Docker, you should note one important point in this scenario: The available storage is managed by OpenStack, but Docker runs inside a VM, so it cannot access Cinder directly, and it does not need volume plugins either.

In this case, all storage management is done in OpenStack. For Docker, all that matters is that it sees the storage device attached to its host VM, which it can then integrate into containers with the normal volume driver. You do not get a high-availability problem in this scenario either: If the container data for Docker is on a Cinder volume, it is already implicitly redundant if the underlying storage offers this function. If the original VM with the Docker container fails, you can restart it on another host with the same data, and the service works as usual.

VMware Cloud Alternative

VMware has been active in the virtualization market for decades, so it's no wonder its software is so widespread. vSphere, a comprehensive virtualization solution, is particularly common in larger companies. However, the challenge that providers face with respect to Docker volumes in VMware is no different from OpenStack: Much like OpenStack, vSphere comes with its own storage management, vSphere Storage, which mounts storage in the background so that it can be used seamlessly in vSphere. A provider that wants to supply Docker containers with persistent storage has two options: Build separate Docker storage based on Ceph or connect Docker to the existing VMware storage.

To make the latter work, VMware provides the vSphere Docker Volume Service (vDVS), which comprises two parts: the ESX code running directly in VMware and the Docker plugin, which is officially certified by Docker and therefore available directly from the Docker Store. The ESX part is available as a download and can be integrated into ESX with just a few clicks. The GitHub page for the plugin [2] contains all the relevant information.

To use the service, you need at least ESXi 6.0 and Docker 1.12. VMware recommends Docker 1.13, or 17.03 if you want to use the "managed plugin" (the variant from the Docker Store). In that case, installing the plugin is simple once the VIB package has been installed in ESXi:

$ docker plugin install --grant-all-permissions --alias vsphere vmware/docker-volume-vsphere:latest

To then create a volume in vSphere storage, simply use the command:

$ docker volume create --driver=vsphere --name=it-admin-test -o size=10gb

The volume can then be connected to a Docker container from the command line as usual.

Integration with Ceph

OpenStack and VMware are virtualization solutions that also manage the available physical storage in the background. If a setup lacks such a management layer, an existing Ceph cluster can also be integrated directly into Docker's volume system. However, this doesn't work as smoothly as an administrator would like, because the official volume plugin from the vendor hasn't seen any updates for ages and simply doesn't work with current Docker versions. Free alternatives exist, but one of them is marked as "experimental," and another is orphaned as well. At the time of going to press, the rbd plugin by the developer Wetopi offered the best prospects: It works with current Docker versions and can also communicate with current Ceph versions.

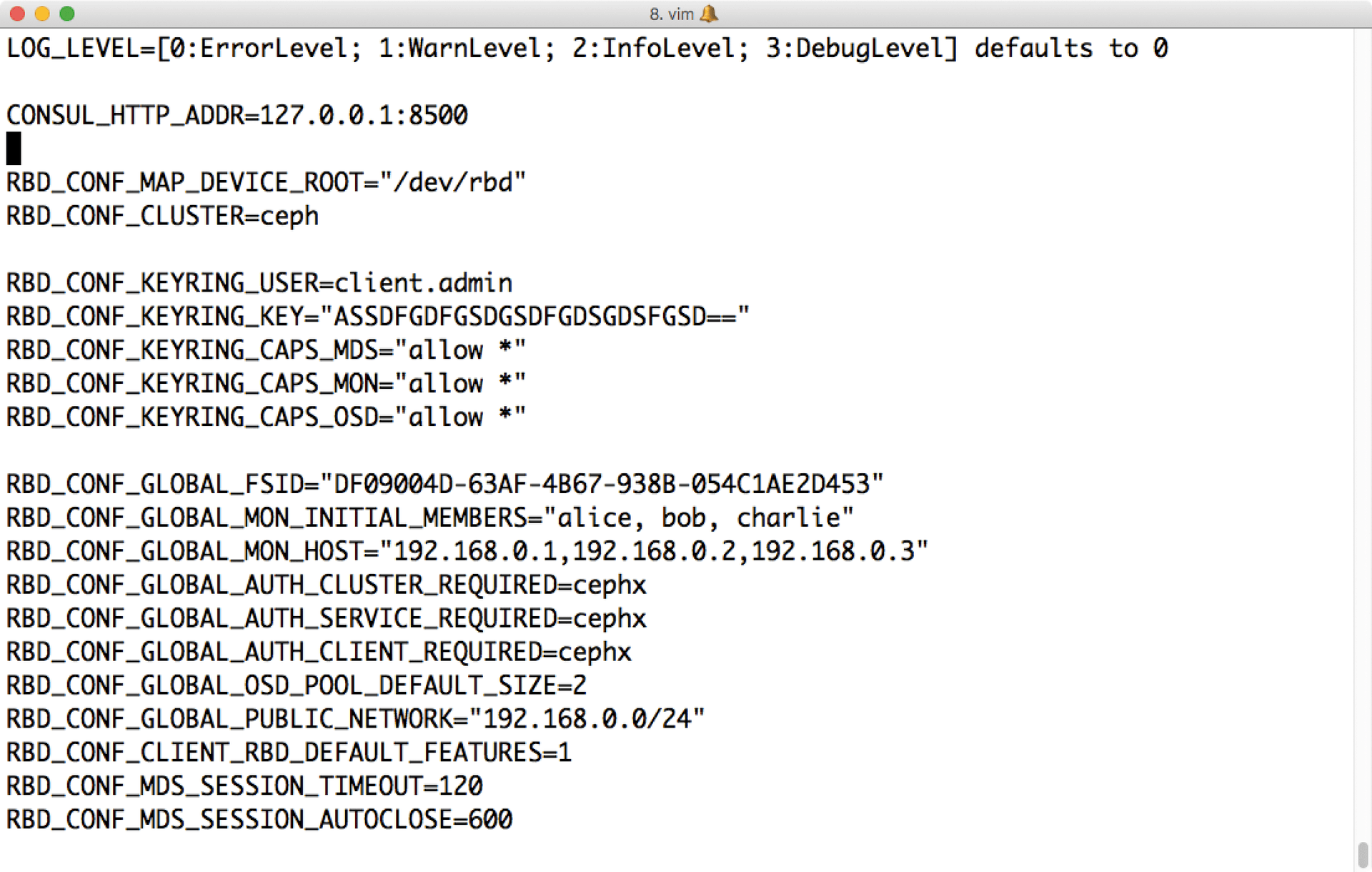

Please note that the plugin requires access to the Consul service discovery and configuration tool, because it stores its configuration data there, across host boundaries. The plugin author lists the parameters that can be stored in Consul on the plugin's Docker Hub page [3]. If the required parameters are unclear, the Docker administrator should consult an administrator of the running Ceph cluster.

As soon as Consul is running with the corresponding configuration (Figure 3) and a Ceph cluster is available in the setup, the plugin can be installed with the following command, assuming you use at least Docker 1.13.1:

$ docker plugin install wetopi/rbd --alias=wetopi/rbd LOG_LEVEL=1 RBD_CONF_KEYRING_USER=client.admin RBD_CONF_KEYRING_KEY="KEY"

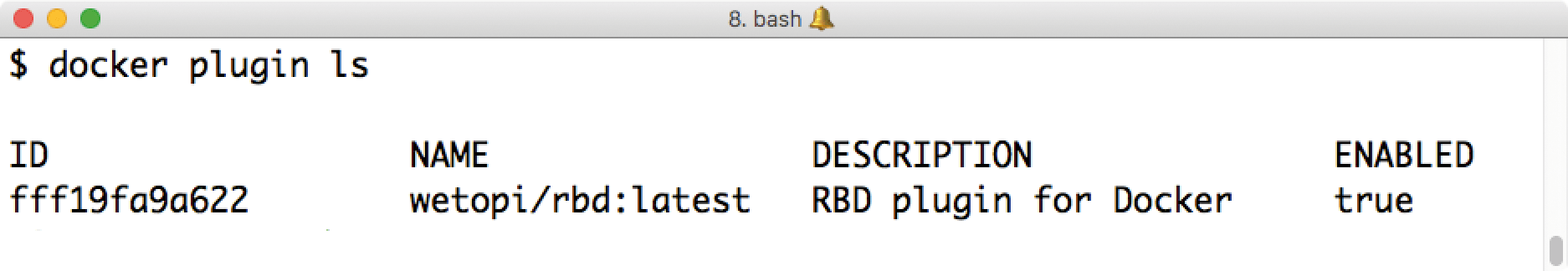

The values for RBD_CONF_KEYRING_USER and RBD_CONF_KEYRING_KEY are also available from the Ceph admin. Entering Docker plugin ls then shows whether the installation worked (Figure 4).

docker plugin ls command shows the currently loaded plugins. You can use several plugins at the same time.The following command finally creates a volume called it-admin-test, which can then be connected to a container:

$ docker volume create -d wetopi/rbd -o pool=rbd -o size=206 it-admin-test

The size value describes the volume size in megabytes; the pool value refers to the Ceph pool in which the new volume is created. If the Ceph admin provides another pool for Docker volumes, the value must be adjusted accordingly.
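The three plugins in this article do not express volume sizes the same way: The Fuxi example uses size=1, the vSphere example size=10gb, and the rbd plugin interprets size as megabytes. The following Python sketch converts one capacity in megabytes into each convention. Two of these units are assumptions to verify against each plugin's documentation: that Fuxi's size means whole gigabytes (as Cinder allocates them), and that vDVS accepts an mb suffix.

```python
def size_option(driver, megabytes):
    """Return the value for '-o size=...' that requests `megabytes` MB."""
    if driver == "fuxi":
        # Assumption: Fuxi passes size to Cinder, which allocates whole
        # gigabytes, so round up rather than end up with a smaller volume.
        return str(-(-megabytes // 1024))
    if driver == "vsphere":
        # Assumption: vDVS accepts a unit suffix, as in size=10gb above.
        return f"{megabytes}mb"
    if driver == "wetopi/rbd":
        # As described above: a plain number interpreted as megabytes.
        return str(megabytes)
    raise ValueError(f"unknown driver: {driver}")
```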

Docker Volumes in Everyday Life

The three paths described here, based on OpenStack, VMware, and Ceph, each end with the docker command that creates a volume. However, this is not the only task you have to complete when handling volumes. The most attractive volume is worth nothing if it is not connected to a container, as in the following example for a container running Nginx:

$ docker run -d -it --volume-driver wetopi/rbd --name=nginx --mount source=it-admin-test,destination=/usr/share/nginx/html,readonly nginx:latest

The command mounts the it-admin-test volume at /usr/share/nginx/html within the container. Note that if the volume is still empty, Docker automatically copies the content already present at that path in the image to the volume. The readonly option at the end of the --mount value ensures that the container can read, but not modify, the directory.

If you want to get rid of the volume at a later time, first stop and delete the container, and then delete the volume:

$ docker stop nginx
$ docker rm nginx
$ docker volume rm <name>

The example above also specifies the volume driver to be used. If you use Fuxi with OpenStack or the vSphere plugin with VMware instead, the corresponding name must be entered after --volume-driver. Some volume drivers also accept parameters when the volume is mounted: In the example, these would be appended to the --mount value, separated by commas.
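Docker treats the --mount value as a single comma-separated argument, so a stray space after a comma breaks the command. If you start containers from scripts, a small helper that assembles the value can prevent such mistakes. The following Python sketch is one hypothetical way to do it. The keys type, source, target, readonly, volume-driver, and volume-opt belong to Docker's documented --mount syntax; the helper names mount_spec and docker_run_args are invented.

```python
def mount_spec(source, target, readonly=False, driver=None, **driver_opts):
    """Build the value for 'docker run --mount' as one comma-separated string.

    Docker parses the value as key=value fields separated by commas, so the
    whole value must be one argument with no spaces after the commas.
    """
    parts = ["type=volume", f"source={source}", f"target={target}"]
    if readonly:
        parts.append("readonly")
    if driver:
        parts.append(f"volume-driver={driver}")
    for key, value in driver_opts.items():
        # Driver-specific options are passed as volume-opt=<key>=<value>.
        parts.append(f"volume-opt={key}={value}")
    return ",".join(parts)


def docker_run_args(name, image, mount):
    """Argument list for subprocess.run(); no shell quoting involved."""
    return ["docker", "run", "-d", f"--name={name}", "--mount", mount, image]
```

You can pass the list returned by docker_run_args() to subprocess.run(..., check=True) without going through a shell, so no additional quoting is needed.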

To find out about existing volumes, use docker volume ls to list the volumes currently created, and docker volume inspect <name> to output the details of a specific volume.

Conclusions

Containerized applications cannot do without persistent data storage. To connect to a storage back end, Docker offers the concept of storage volumes, so that OpenStack, vSphere, or Ceph storage can be used via the appropriate plugins, just like storage appliances from various manufacturers.