Detecting security threats with Apache Spot

On the Prowl

Every year, cybercrime causes damage estimated at $450 billion [1]. Average costs per incident have risen by around 200 percent over the past five years, with no end in sight. The Herjavec Group even predicts annual losses of several trillion dollars by 2021 [2].

Under the auspices of the Apache project, industry giants such as Accenture, Cloudera, Cloudwick, Dell, Intel, and McAfee have joined forces to tackle the problem with state-of-the-art technology. In particular, machine learning (ML) and the latest data analysis techniques are intended to improve the detection of potential risks, quantify possible data loss, and support the response to attacks. Apache Spot [3] uses big data and modern ML components to improve the detection and analysis of security problems. Apache Spot 1.0 has been available for download since August 2017, and you can easily install a demo version using a Docker container.

Detection of Unknown Threats

Traditional deterministic, predominantly signature-based threat detection methods often fail. Apache Spot, on the other hand, is a powerful aggregation tool that uses data from a variety of sources and a self-learning algorithm to search for suspicious patterns and network behavior. According to the Apache Spot team, several billion events per day can be analyzed in the environment, if the hardware allows it, which means the processing capacity is significantly greater than that of previous security information and event management (SIEM) systems. Whether the system processes data from networks, Internet applications, or Internet of Things (IoT) environments is irrelevant because of their identical technological bases. The most important tasks include identifying risky network traffic and unknown cyberthreats (almost in real time). Apache Spot also offers a modular and scalable architecture that can be customized to meet your needs.

Traditional deterministic approaches to intrusion detection cannot keep up with the amount of data transferred daily over the Internet and the ever more complex data schemas. The number of threat vectors resulting from mobility, social media, and virtualized cloud networks does not make things any easier. For example, it takes an average of 146 days (98 to 197 days, depending on the industry) to uncover data breaches [4]. This critical risk window needs to be closed.

Apache Spot uses an innovative approach to identify new and previously unknown threats. The system supports fraud detection with visualizations and, in particular, identifies compromised credentials and policy violations. Spot also offers compliance monitoring and makes network and endpoint behavior visible. Its strength lies in detecting malicious behavior patterns of zero-day threats, including undocumented ones.

Apache Spot collects network traffic data and DNS logfile records. The correlated data is then forwarded to a Hadoop cluster running on the Cloudera Enterprise Data Hub (Figure 1). The analysis tools and algorithms for ML contributed by Intel are used to detect, evaluate, and respond to suspicious actions. The process of reassessment is continuous and generates risk profiles that support the administrator in evaluating, researching, and conducting forensics. The decisive factor is the detection of previously unknown security problems on different levels. The use of intelligent learning mechanisms also promises to reduce false positives significantly.

![The Apache Spot architecture [5] shows how the system collects and evaluates traffic data from arbitrary sources and searches for anomalies and previously unknown attack patterns.](images/F1_ITA_1217_P04_01.png)

Ensuring Safety

In contrast to traditional security tools, Spot does not use historical information but makes its own way with monitored network data. According to Intel, network data is checked for hidden and deep-seated patterns that indicate possible dangers. The environment runs on Linux operating systems with Java support. Apache Spot is based on four core components:

- Parallel Data Ingestion Framework. The Spot system runs on a Cloudera Enterprise Data Hub and uses an optimized open source decoder to load network flow and DNS data into a Hadoop system. The decoded data is stored in several searchable formats.

- ML. Apache Spot uses a combination of Apache Spark, Kudu, and optimized C code to run scalable learning algorithms. The ML component filters suspicious traffic from normal traffic and characterizes the behavior of network traffic for optimization and further network analysis.

- Operative Analytics. This component includes noise filtering, whitelisting, and heuristics to identify the most likely patterns that indicate security threats, which reduces the number of false positives and non-threatening indicators.

- Interactive Visual Dashboard. Control and monitoring is via a web interface.
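The whitelisting and noise-filtering step of the operative analytics component can be illustrated with a minimal Python sketch. It is not Spot's actual code: the `ScoredEvent` type, the `triage` function, and the threshold parameters are hypothetical names chosen for illustration, and the sketch assumes that the ML stage has already attached a probability score to each event.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredEvent:
    """Hypothetical record: one network event plus its ML score."""
    src_ip: str
    dst_ip: str
    score: float  # estimated probability; lower means more unusual


def triage(events, whitelist, max_score, limit):
    """Drop whitelisted destinations and likely-normal events,
    then return the `limit` most unusual events, lowest score first."""
    candidates = [
        e for e in events
        if e.dst_ip not in whitelist and e.score <= max_score
    ]
    return sorted(candidates, key=lambda e: e.score)[:limit]
```

Filtering before ranking keeps known-good destinations from crowding out genuinely unusual events in the short list that an analyst reviews.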

Functionality is based on resource, security, and data management. As experience over the past few years has shown, signature-based and rule-based security solutions are no longer sufficient to protect against new cyberattacks. Apache Spot seemingly closes this gap and takes the discovery of suspicious connections and previously unrecognized attacks to a new level.

Data Collection and Intelligence

The quality of a security solution depends in particular on the underlying data, but generally speaking, more data does not necessarily mean more security. The core problem lies rather in data acquisition and evaluation. The Spot team not only promises service availability of almost 100 percent without data loss, but also faster and more scalable functionality. Data collection is handled by special collector daemons that run in the background and collect relevant information from filesystem paths. These collectors detect new files generated by network tools or previously created data and translate them into a human-readable format with the use of tshark and the NFDUMP tools.

After data transformation, the collectors store the data in the Hadoop distributed filesystem (HDFS) in the original format for forensic analysis and in Apache Hive in Avro and Parquet formats for SQL query accessibility. Files with a size of more than 1MB are forwarded to Kafka; smaller files are streamed via Kafka to Spark Streaming. Kafka stores the data transmitted by the collectors so that they are then available for analysis by Spot Workers, which read, analyze, and store the data in Hive tables. This procedure prepares the raw data for processing by the ML Engine algorithm.
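The way a collector notices new files in a monitored path can be sketched in a few lines of Python. This is an illustrative polling approach, not necessarily how Spot's collectors are implemented; the function name `find_new_files` and the `seen` set are assumptions made for this example.

```python
import os


def find_new_files(directory, seen):
    """Return paths of regular files in `directory` that have not been
    reported before, and remember them in the `seen` set."""
    new_paths = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        if os.path.isfile(path) and path not in seen:
            seen.add(path)
            new_paths.append(path)
    return new_paths
```

A daemon would call this function in a loop and hand each returned path to the decoding step (tshark or NFDUMP in Spot's case) before storing the result.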

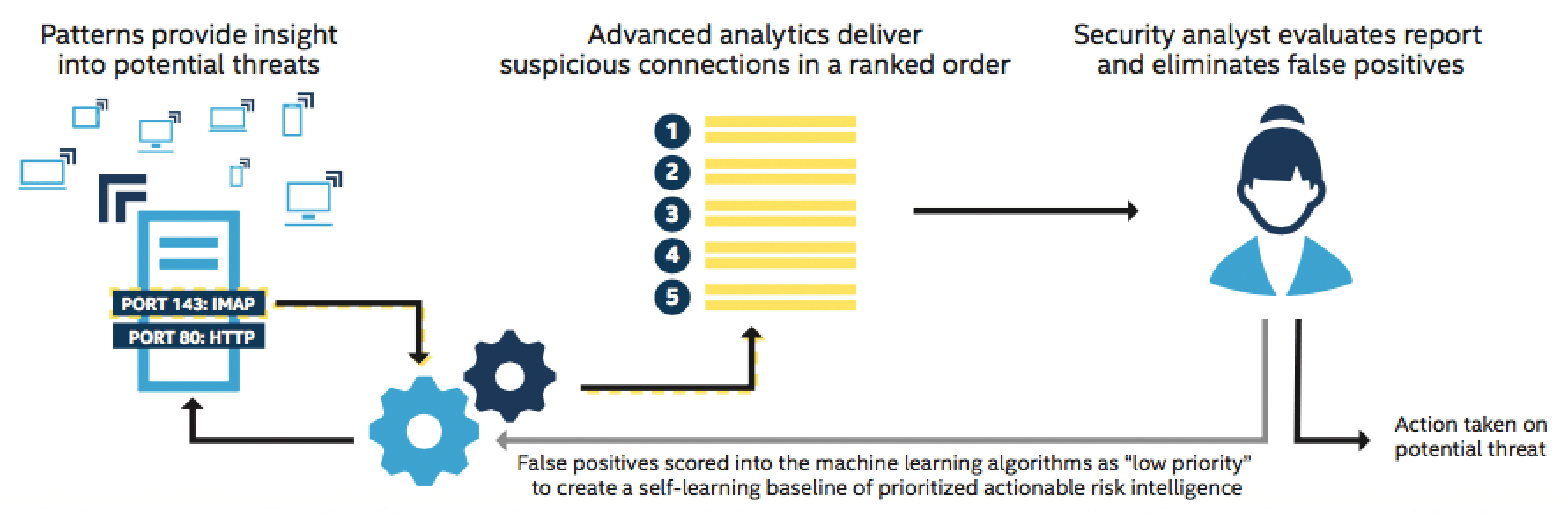

Apache Spot's ML component includes routines to analyze suspicious connections. Latent Dirichlet allocation (LDA), a three-tier Bayesian model originally developed to discover hidden semantic structures in a collection of documents, is applied by Spot to network traffic. The network log entries are converted into words by aggregation and discretization; IP addresses then play the role of documents, the words derived from log entries are their content, and the topics correspond to profiles of network activity. Spot assigns an estimated probability to each network log entry and flags the events with the lowest probabilities as "suspicious" for further analysis (Figure 2).
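The two ideas in this paragraph, turning log entries into words and scoring each entry with a topic model, can be made concrete with a small Python sketch. The bin edges, the word format, and the function names are assumptions for illustration only; Spot's real word-creation rules and model training are more elaborate. The scoring function assumes an already trained model and computes the usual LDA mixture, p(word | IP) = Σ p(topic | IP) · p(word | topic).

```python
from bisect import bisect_right

# Hypothetical bin edges (in bytes) used to discretize transfer volume.
BYTE_EDGES = [1_000, 100_000, 10_000_000]


def flow_to_word(port, hour, n_bytes):
    """Collapse one flow record into a discrete 'word'."""
    byte_bin = bisect_right(BYTE_EDGES, n_bytes)  # 0..3
    time_bin = hour // 6                          # four six-hour slots per day
    return f"{port}_{time_bin}_{byte_bin}"


def event_probability(ip_topic_mix, topic_word_probs, word):
    """Sum over topics of p(topic | ip) * p(word | topic).
    Words a topic has never seen contribute probability 0."""
    return sum(
        p_topic * topic_word_probs[topic].get(word, 0.0)
        for topic, p_topic in ip_topic_mix.items()
    )
```

An entry whose word receives a very low probability under the topic mix of its IP address is atypical for that address, which is exactly what makes it a candidate for the "suspicious" list.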

Apache Spot has a number of analysis functions that can be used to identify suspicious or unlikely network events on the monitored network. The determined events are subject to further investigations. For this purpose, the system uses anomaly detection to ascertain typical and atypical network behavior and to generate a behavior model for each IP address from the results.

Content analysis is based on ML technologies and can analyze NetFlow, DNS, and HTTP proxy logfiles. In Apache Spot terminology, logfile entries are called "network events." Spot analyzes suspicious content with the help of natural language processing, extracts the relevant information from log entries, and uses it to build a "topic model."

All Threats at a Glance

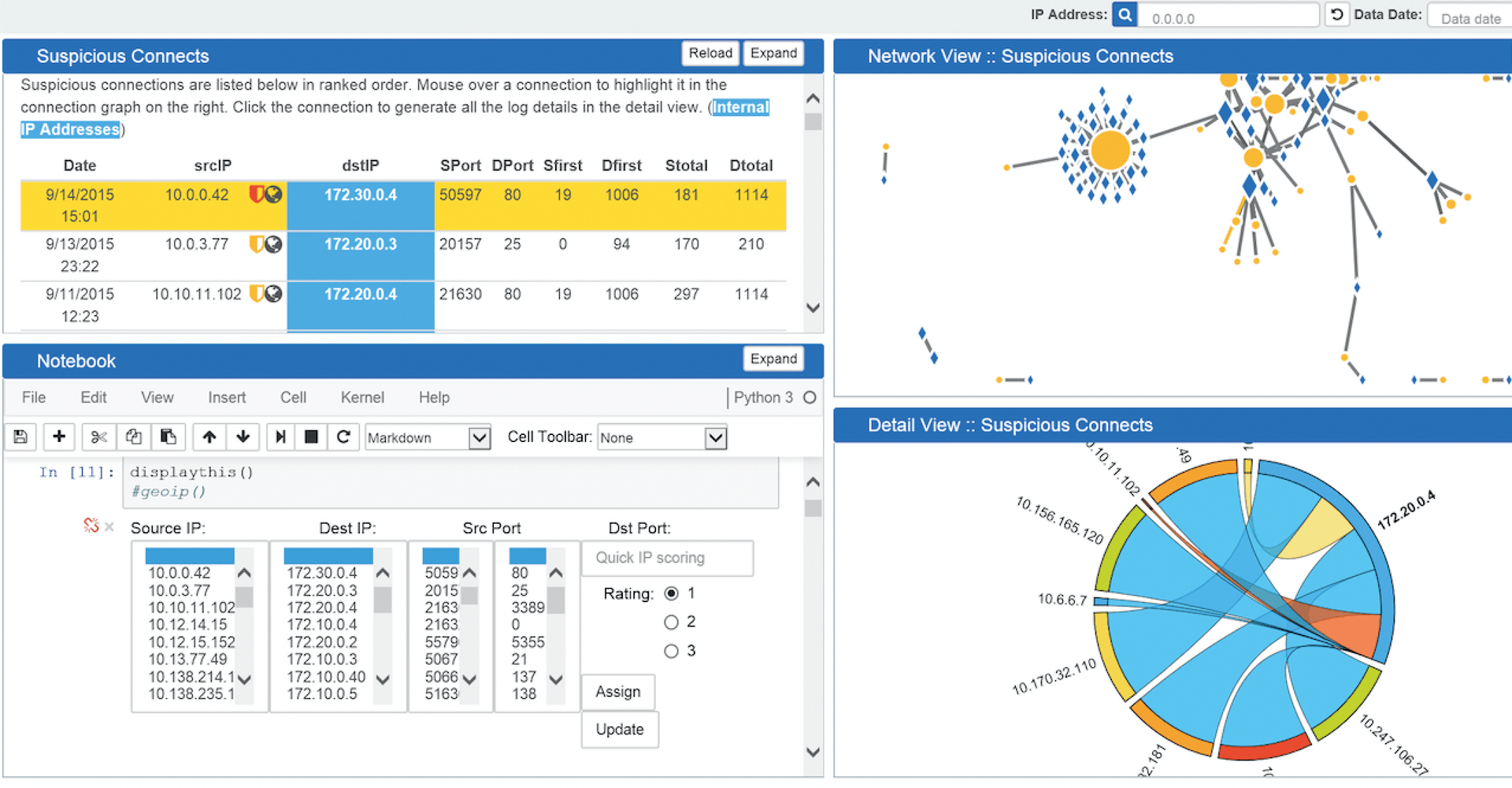

Apache Spot has extensive visualization tools that allow further analysis of suspicious activities detected by the ML algorithm. The web-based interface displays a list of suspicious network activities detected by Apache Spot as potential security threats under Suspicious. The overview displays different information for each critical event, such as the time, IP address, rating, type of traffic, port, and protocol.

The starting point of the visualization is the dashboard, which presents a summary of the network infrastructure to be monitored and shows the number of networks, endpoints, and critical events. The dashboard also lists the most critical user, endpoint, and network threats. The assessment of the status is visualized by corresponding status information (e.g., Very High, High, Medium), together with a corresponding color. In the Network menu, Apache Spot displays the network structure in a separate Network View (Figure 3). In addition to a graphical representation of the traffic over a defined time period, a tabular overview lists the busiest systems. A visual map gives you a bird's eye view of the infrastructure.

Depending on the information requirements and user type, you can switch between simple and expert modes. In the Notebook panel, you can determine the risk configuration for each connection. If you switch from simple to expert mode, you can adjust the criteria by which the data is filtered and discarded. Possible filters include source and destination IPs and ports and a rating. In this way, you can filter out exactly the information that matters to you. For each event, you can call up additional information in the Details View, such as the type of service and the router and interfaces used.

Threat investigation takes place in the Threat Investigation tab and is the final step of the analysis before Apache Spot displays the Storyboard. At this point, security analysts can perform a custom scan for a given threat. The Storyboard provides you with overviews of incident progression, impact analysis, and geographic location, along with an event timeline. The Ingest Summary presents a visualization of the events on the timeline.

Data Model for Universal Threat Detection

Most companies already have threat detection systems, with an intrusion detection system here, a threat detection and response solution there, and other tools in other network segments. This haphazard approach creates a problem that makes it more difficult to detect security problems: mountains of incompatible or duplicated data. What is needed is a uniform data format for threat detection.

Apache Spot therefore uses the Open Data Model (ODM), which bundles all security-relevant data (e.g., events, users, networks, and endpoints) in a single view. A consolidated view makes the relevant contexts visible at the event level. Another advantage is that the ODM allows sharing and reuse of threat detection models, algorithms, and analyses.

The ODM has a common taxonomy for describing security data used to detect threats, with schemas, data structures, file formats, and configurations in the underlying Hadoop platform for collection, storage, and analysis. The Spot system defines the relationships between the different security data types and links the log data to user, network, and endpoint identity data.
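The idea of linking event data to identity context can be illustrated with a short Python sketch. The classes and field names below are hypothetical and far simpler than the actual ODM schemas; the sketch only shows the principle of joining an event with endpoint and user information by IP address.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical endpoint identity record."""
    ip: str
    hostname: str
    owner: str  # user associated with the endpoint


@dataclass(frozen=True)
class Event:
    """Hypothetical event record from one of the log sources."""
    timestamp: str
    src_ip: str
    source: str  # for example "dns", "flow", or "proxy"


@dataclass(frozen=True)
class EnrichedEvent:
    event: Event
    endpoint: Optional[Endpoint]  # None if the source IP is unknown


def enrich(event, endpoints_by_ip):
    """Attach endpoint context to an event by looking up its source IP."""
    return EnrichedEvent(event, endpoints_by_ip.get(event.src_ip))
```

With a consolidated record of this kind, an analyst sees at the event level which machine and which user are involved, without having to consult a second tool.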

The Apache Spot ODM is particularly useful for out-of-the-box analysis to detect potential threats in DNS, flow, and proxy data. In addition to the standard analyses created in Apache Spot, you can create custom analyses according to your specifications. In the future, third-party plugins are conceivable that analyze the data that is collected and consolidated by Spot. The development team hopes to see significant contributions from the Apache Spot community to the development of threat detection models, algorithms, pipelines, visualizations, and analytics on the basis of the data model.

Quick Start in Spot

With the release of Apache Spot 1.0 and the ensuing assessment of its production capability, a mature version is available for evaluation. Because Apache Spot integrates with any infrastructure, no changes to the existing environment are required. However, commissioning is not trivial. To get a first impression, you can also install a demo system. All you need is a Docker installation; then, execute the container:

docker run -it -p 8889:8889 apachespot/spot-demo

To access the web interface, go to http://localhost:8889/files/ui/flow/suspicious.html#date=YYYY-MM-DD. For example, if you use 2016-07-08 at the end of the URL, you end up in the overview of suspicious network events on July 8, 2016, where you can conduct a detailed investigation with Spot.

Alternatively, a manual installation is possible. At the core of Apache Spot is a Hadoop system: HDFS, Hive, Impala, Kafka, Spark, YARN, and ZooKeeper must be present before installation. Once you have set up the required users, you can download the Spot code. After unpacking, adapt the spot.conf Spot configuration file, which is located in the /home/safety/incubator-spot/spot-setup directory.
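Returning to the demo container: the date at the end of the demo URL selects the day whose suspicious events are displayed. A small Python helper can build the URL for any date. The function name `suspicious_flow_url` is made up for this example; the host, port, and path are taken from the demo URL quoted in the text.

```python
from datetime import date


def suspicious_flow_url(day, host="localhost", port=8889):
    """Build the demo URL for the suspicious flow view of a given day."""
    return (
        f"http://{host}:{port}/files/ui/flow/suspicious.html"
        f"#date={day.isoformat()}"
    )


print(suspicious_flow_url(date(2016, 7, 8)))
```

Because `date.isoformat()` always produces the form YYYY-MM-DD, the helper avoids formatting mistakes in the date fragment.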

Using keys such as NODES and DSOURCES, you determine the network nodes and data directories to be monitored. Use the next steps to install the ingest and ML components. The analytical tasks are handled by the spot-oa module. The final step is the installation of the UI component. The Spot Dashboard can then be accessed.

Conclusions

Without question, Apache Spot has what it takes to become a classic. At first glance, the environment seems to offer everything that IT managers expect from a modern security solution. The fact that Spot is already being used in production at eBay and some other large companies gives hope that the high expectations will be fulfilled in practice. However, it is still too early for a final evaluation.