Dialing up security for Docker containers

Container Security

Container systems like Docker are powerful tools for system administrators, but Docker poses some security issues you won't face in a conventional virtual machine (VM) environment. For example, containers share the host's kernel and have direct access to directories such as /proc, /dev, or /sys, which increases the risk of intrusion. This article offers some tips on how you can enhance the security of your Docker environment.

Docker Daemon

Under the hood, containers are fundamentally different from VMs. Instead of a hypervisor, Linux containers rely on the various namespace functions that are part of the Linux kernel itself.

Starting a container is essentially nothing more than rolling out an image to the host's filesystem and creating multiple namespaces. The Docker daemon, dockerd, is responsible for this process. Because dockerd runs with root privileges, it is only logical that it features as an attack vector in many threat scenarios.

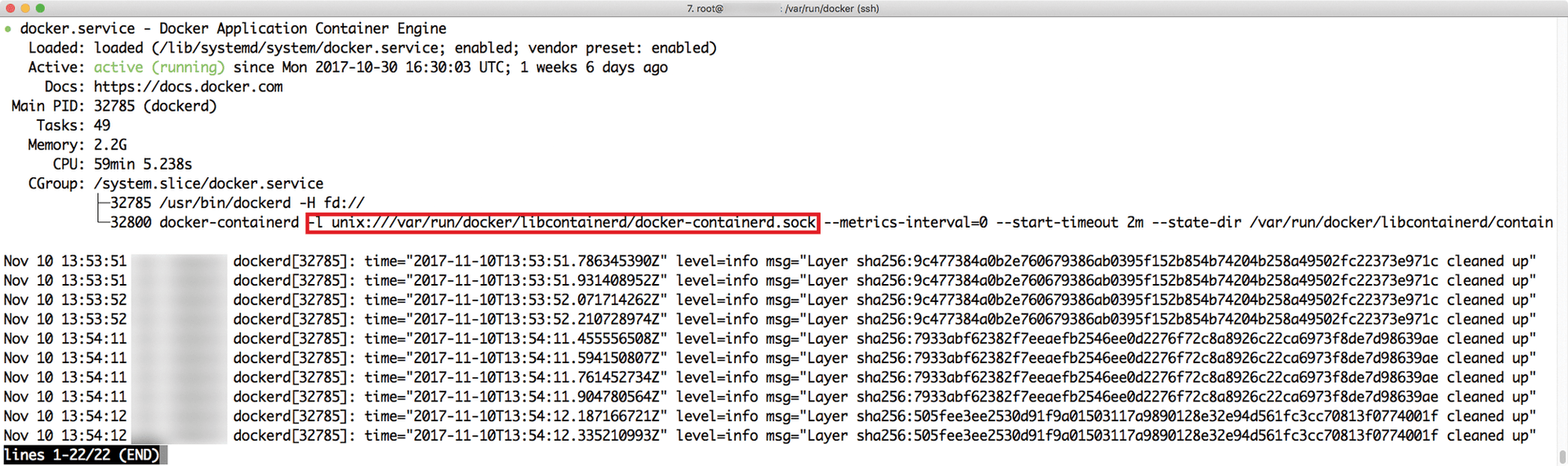

In its default configuration, the Docker daemon has several security weaknesses. For example, the daemon communicates with the Docker command-line tool via a Unix socket (Figure 1). If necessary, you can also have it listen on a TCP socket for access via the network.

The problem is that plain HTTP access over the network is neither encrypted nor authenticated, and anyone who can reach the API can effectively control the host as root. If you want security at the Docker daemon level, one option is to use TLS with client certificates.

To set up TLS with Docker, open the Docker daemon's configuration file (/etc/docker/daemon.json on Linux), enable tlsverify, and set tlscacert, tlscert, and tlskey to the CA certificate, the server certificate, and the server key, respectively [1]. On the client, store the CA certificate and the client certificate and key in the ~/.docker folder as ca.pem, cert.pem, and key.pem (alternatively, the --tlscacert, --tlscert, and --tlskey options point the client to other files), set the environment variable DOCKER_TLS_VERIFY to 1, and point DOCKER_HOST to the daemon's TCP address. If you then call the docker command at the command line, Docker automatically uses the certificates to log on to the server.
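The following Python sketch assembles both halves of this configuration: a daemon.json fragment for the server and the environment variables for the client. The certificate directory /etc/docker/certs, the file names, and the host name are assumptions; port 2376 is the conventional port for TLS-protected Docker access. On systemd-based distributions, the daemon's unit file often already passes -H, in which case an additional hosts entry in daemon.json makes dockerd refuse to start, so keep only one of the two.

```python
import json
from pathlib import Path
from typing import Optional

# Assumed location of the CA certificate and the server's certificate/key.
SERVER_CERT_DIR = "/etc/docker/certs"


def daemon_tls_config(cert_dir: str = SERVER_CERT_DIR, port: int = 2376) -> dict:
    """Return a daemon.json fragment that enables TLS with client verification."""
    return {
        # Only accept clients whose certificate is signed by our CA.
        "tlsverify": True,
        "tlscacert": f"{cert_dir}/ca.pem",
        "tlscert": f"{cert_dir}/server-cert.pem",
        "tlskey": f"{cert_dir}/server-key.pem",
        # Keep the local Unix socket and add a TLS-protected TCP listener.
        "hosts": ["unix:///var/run/docker.sock", f"tcp://0.0.0.0:{port}"],
    }


def client_tls_env(host: str, port: int = 2376, cert_path: Optional[str] = None) -> dict:
    """Return the environment variables that make the docker CLI use TLS."""
    return {
        "DOCKER_HOST": f"tcp://{host}:{port}",
        "DOCKER_TLS_VERIFY": "1",
        # The CLI expects ca.pem, cert.pem, and key.pem in this directory.
        "DOCKER_CERT_PATH": cert_path or str(Path.home() / ".docker"),
    }


if __name__ == "__main__":
    # Print the fragment, e.g. to merge into /etc/docker/daemon.json.
    print(json.dumps(daemon_tls_config(), indent=2))
```

Binding to 0.0.0.0 exposes the API on every interface; restricting the TCP listener to a single management interface address narrows the attack surface further.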

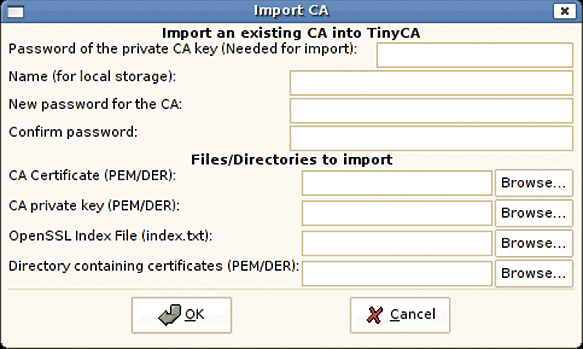

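For this approach, you need a certificate authority (CA) that issues the server and client certificates. Because the daemon accepts any client certificate signed by the CA named in tlscacert, operating your own CA for this purpose is the safer choice compared with buying certificates. Tools like TinyCA [2] help make this task a little less painful (Figure 2).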

Privileges System

A seasoned admin keeps the amount of work performed with root privileges to the absolute minimum. With Docker containers, the root account is just as dangerous: Because a container is not a complete VM, user management inside and outside the container is not completely separate; by default, root in a container has the same UID 0 as root on the host. An admin who starts a container with root privileges therefore has to contend with a whole bunch of far-reaching authorizations.

Docker itself comes with several features to keep the privilege level from becoming a problem. When a container starts, Docker creates several kernel-level namespaces in the background, including one for the network connections and one for the processes within the container.

Applications within the container therefore have no way to get information about the processes outside the container, provided that there is no vulnerability in the Linux kernel's namespace implementation. Docker also creates control groups for new containers. Control groups limit the resources a container can consume so that, for example, a single container cannot use all the CPU power available on the host.
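On Linux, each process's namespaces appear as symbolic links under /proc/<pid>/ns, and in practice two processes share a namespace when those links resolve to the same identifier. The Python sketch below uses this to check which namespaces a containerized process shares with the current process. The helper names are hypothetical, and reading another process's links usually requires root.

```python
import os

# Namespace types Docker creates for each container by default (among others).
NAMESPACES = ("pid", "net", "mnt", "uts", "ipc")


def namespace_ids(pid: str = "self") -> dict:
    """Map namespace type to an identifier such as 'net:[4026531840]'."""
    ids = {}
    for ns in NAMESPACES:
        try:
            ids[ns] = os.readlink(f"/proc/{pid}/ns/{ns}")
        except OSError:
            # Not Linux, process gone, or insufficient permissions.
            ids[ns] = None
    return ids


def shared_namespaces(pid_a: str, pid_b: str) -> list:
    """Return the namespace types that both processes demonstrably share."""
    a, b = namespace_ids(pid_a), namespace_ids(pid_b)
    return [ns for ns in NAMESPACES if a[ns] is not None and a[ns] == b[ns]]
```

The host PID of a container's main process can be obtained with docker inspect --format '{{.State.Pid}}' followed by the container name. For a normally started container, comparing it with "self" on the host should yield an empty list, whereas a container started with --network host would share the net namespace.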

Because processes in a container run directly on the host kernel and need access to central directories such as /sys, the Docker developers have adopted the concept of Linux capabilities, which split root's all-powerful privileges into individual units. By default, root inside a Docker container receives only a limited subset of these capabilities, unless the admin chooses otherwise. For example, SYS_CHROOT, which allows the chroot() function to be executed in a container, is part of the default set, whereas capabilities such as SYS_RAWIO, which grants direct access to storage devices, are withheld.

With the --cap-add and --cap-drop options, admins can grant each container only the privileges it actually needs. For example, if you drop all capabilities with --cap-drop ALL and the container still needs to use a privileged port (one with a number lower than 1024), you simply add back the NET_BIND_SERVICE capability.
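A minimal Python sketch of this least-privilege pattern: drop every capability, then add back only the ones the workload needs. The image name example/web-server is hypothetical. Docker's default set already contains NET_BIND_SERVICE, so adding it explicitly only matters once you start from --cap-drop ALL; real images, especially those that switch users at startup, may need further capabilities such as SETUID and SETGID.

```python
import subprocess
from typing import Iterable


def run_args(image: str, add_caps: Iterable[str] = (), drop_all: bool = True) -> list:
    """Build a `docker run` command that grants only the listed capabilities."""
    args = ["docker", "run", "--rm"]
    if drop_all:
        # Start from an empty capability set instead of Docker's default set.
        args += ["--cap-drop", "ALL"]
    for cap in add_caps:
        # Add back exactly what the workload needs, e.g. NET_BIND_SERVICE.
        args += ["--cap-add", cap]
    args.append(image)
    return args


def run_minimal(image: str, add_caps: Iterable[str] = ()) -> None:
    """Start the container; raises CalledProcessError if docker fails."""
    subprocess.run(run_args(image, add_caps), check=True)
```

Building the command as a list rather than a shell string avoids quoting problems and makes the granted capabilities easy to review in code.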

Another approach recommended in various documents is the --privileged switch. A container started in privileged mode can do virtually everything that root is allowed to do on the host system. Whereas most admins heed the truism about avoiding work as root, many follow this recommendation without question and give the container comprehensive permissions, although it probably doesn't need them at all.

One of the most important recommendations for safe Docker operation is to start containers in privileged mode only in exceptional cases and always check carefully whether it is really necessary. A privileged container running amok can cause damage up to and including taking down the host.
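To check existing deployments, the Python sketch below lists running containers that were started in privileged mode or with extra capabilities. It relies on the JSON that docker inspect prints, where HostConfig.Privileged and HostConfig.CapAdd record these settings; the function names are hypothetical.

```python
import json
import subprocess


def risky_settings(inspect_json: str) -> dict:
    """Map container name to privilege concerns found in `docker inspect` output."""
    findings = {}
    for container in json.loads(inspect_json):
        host_cfg = container.get("HostConfig") or {}
        concerns = []
        if host_cfg.get("Privileged"):
            concerns.append("privileged mode")
        # CapAdd is null in the JSON when no capabilities were added.
        for cap in host_cfg.get("CapAdd") or []:
            concerns.append(f"added capability {cap}")
        if concerns:
            # Docker reports names with a leading slash, e.g. "/web".
            findings[container.get("Name", "?").lstrip("/")] = concerns
    return findings


def inspect_running() -> str:
    """Return `docker inspect` JSON for all running containers."""
    ids = subprocess.run(
        ["docker", "ps", "-q"], capture_output=True, text=True, check=True
    ).stdout.split()
    if not ids:
        return "[]"
    return subprocess.run(
        ["docker", "inspect", *ids], capture_output=True, text=True, check=True
    ).stdout
```

Calling risky_settings(inspect_running()) on a host returns an empty dictionary if no running container uses privileged mode or added capabilities.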

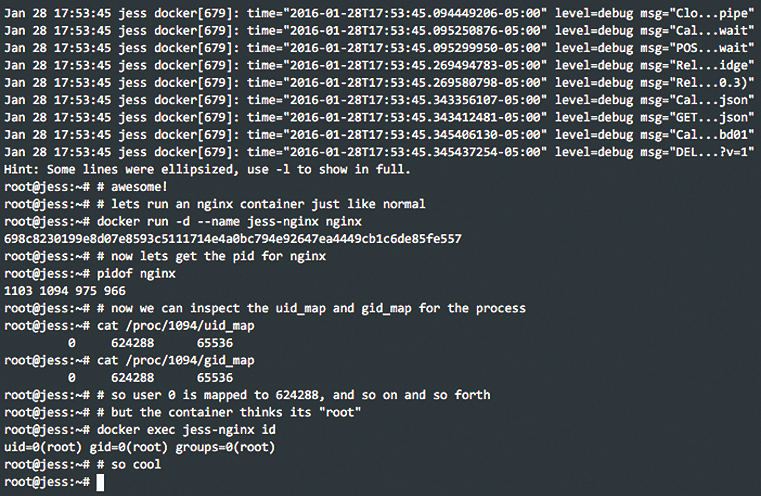

Since version 1.10, Docker also supports separate user namespaces for containers, which isolates user administration within the container from the host. Start the Docker daemon with the --userns-remap parameter to enable this feature. The container then runs in a user namespace that maps root inside the container to an unprivileged UID on the host, although the service running in the container still believes it has root privileges (Figure 3).
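With --userns-remap=default (or the equivalent "userns-remap": "default" entry in daemon.json), Docker creates a user named dockremap whose subordinate UID range is recorded in /etc/subuid; container UID n then becomes host UID start + n. The Python sketch below performs that arithmetic. The sample range 165536:65536 is only an illustration, and the sketch assumes a single subordinate range per user.

```python
def parse_subid(text: str, user: str) -> tuple:
    """Return (start, count) for `user` from /etc/subuid-style text."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, start, count = line.split(":")
        if name == user:
            return int(start), int(count)
    raise KeyError(f"no subordinate range for {user}")


def host_uid(container_uid: int, start: int, count: int) -> int:
    """Translate a UID inside the container to the UID the host sees."""
    if not 0 <= container_uid < count:
        raise ValueError("UID outside the remapped range")
    return start + container_uid


if __name__ == "__main__":
    sample = "dockremap:165536:65536\n"  # illustrative /etc/subuid entry
    start, count = parse_subid(sample, "dockremap")
    print(host_uid(0, start, count))  # container root -> 165536 on the host
```

A process that escapes such a container therefore lands on the host as an ordinary, unprivileged UID rather than as root.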

Seccomp

Beyond Docker's own features, other tools also help with Docker security. One example is seccomp, a function of the Linux kernel whose filter mode, seccomp-bpf, was developed at Google with the Chrome browser's sandbox in mind. Seccomp is designed to limit the permitted system calls (syscalls) to the absolute minimum necessary.

Syscalls are the functions through which programs request services from the operating system kernel. The most common system calls in Linux are those for working with files in filesystems, namely open(), read(), and write(). However, the kernel also supports system calls that deeply affect the running system, such as clock_settime(), which can change the system time; mount() is also a system call.

Seccomp lets you define profiles that determine which system calls a program has access to [3]. Normally, a program restricts itself by installing a profile with the seccomp() syscall; with Docker, the container runtime installs the profile before the containerized process starts, so the application does not have to support seccomp itself. If a restricted process attempts a syscall that the profile does not allow, the kernel takes the action the profile specifies: In seccomp's original strict mode, it sends the SIGKILL signal without further ado; Docker's default profile instead makes the call fail with a permission error.

Seccomp functionality acts as an extension of the Linux capability system. Not all operations that an admin might want to stop a Docker container from doing can be covered by capabilities; seccomp jumps into the breach, providing a way to restrict operations that capabilities can't control. Since version 1.10, Docker can apply a separate seccomp profile to each container and uses a default profile that blocks several dozen syscalls if you don't specify one.
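Docker's default seccomp profile is an allow list: its defaultAction is SCMP_ACT_ERRNO, and rules with the action SCMP_ACT_ALLOW name the permitted syscalls. Because a profile passed with docker run --security-opt seccomp=<file> replaces the default rather than extending it, a reasonable approach is to start from a copy of the default profile (published in the Moby source tree) and remove the syscalls you don't want. The Python sketch below does that; the file names and the choice of denied syscalls are assumptions.

```python
import json


def tighten_profile(profile: dict, denied) -> dict:
    """Remove `denied` syscalls from every allow rule of an allow-list profile.

    Syscalls that no allow rule names any longer fall through to the
    profile's defaultAction, i.e. they fail with an error.
    """
    if profile.get("defaultAction") != "SCMP_ACT_ERRNO":
        raise ValueError("expected an allow-list profile (defaultAction SCMP_ACT_ERRNO)")
    denied = set(denied)
    rules = []
    for rule in profile.get("syscalls", []):
        if rule.get("action") == "SCMP_ACT_ALLOW" and "names" in rule:
            names = [n for n in rule["names"] if n not in denied]
            if not names:
                continue  # the rule would match nothing; drop it
            rule = {**rule, "names": names}  # copy, leave the input untouched
        rules.append(rule)
    return {**profile, "syscalls": rules}


def write_tightened(src: str, dst: str, denied) -> None:
    """Read a profile from `src`, tighten it, and save it to `dst`."""
    with open(src) as f:
        profile = json.load(f)
    with open(dst, "w") as f:
        json.dump(tighten_profile(profile, denied), f, indent=2)
```

For example, write_tightened("default.json", "tight.json", ["mount", "clock_settime"]) produces a profile that can be activated with docker run --security-opt seccomp=tight.json followed by the image name. Removing entries from an allow list keeps everything the default profile already blocks, which a hand-written deny list would silently give up.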

Mandatory Access Control

Few admins will admit to liking Mandatory Access Control (MAC), but no discussion of container security is complete without at least mentioning the MAC option.

Under MAC, a centrally defined policy that users and programs cannot override determines which resources each of them may access; ideally, each user or program has access only to the resources it actually needs. The most prominent implementations of MAC for Linux are SELinux and AppArmor, and neither of these options is especially popular. Many manuals and how-tos provide instructions for switching SELinux to Permissive mode or deactivating AppArmor right from the outset. Many people don't like to work with MAC, because it sometimes prevents access to files or functions for reasons that are initially difficult to understand.

However, it does not make sense to remove SELinux or AppArmor from the setup right from the start [4]. Docker has gone to great lengths to make working with SELinux (and AppArmor on Ubuntu systems) as convenient as possible. If you run Docker containers on a host, you should never switch off SELinux or AppArmor completely.

If SELinux or AppArmor block too much, study and customize the profiles instead of killing the entire service. Because the isolation between the host and container in Docker is weaker than in a conventional VM, MAC is a great help for container environments.
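Before tuning profiles, it helps to know which MAC system is actually active. The Python sketch below reads the standard kernel interfaces for SELinux and AppArmor; docker info also lists the active mechanisms under Security Options. The function names are hypothetical, and the sketch only reports the state without changing it.

```python
from pathlib import Path

# Kernel interfaces that report the MAC state (standard sysfs paths).
SELINUX_ENFORCE = Path("/sys/fs/selinux/enforce")
APPARMOR_ENABLED = Path("/sys/module/apparmor/parameters/enabled")


def selinux_mode(enforce_text: str) -> str:
    """Interpret the contents of /sys/fs/selinux/enforce ("1" or "0")."""
    return "enforcing" if enforce_text.strip() == "1" else "permissive"


def apparmor_state(enabled_text: str) -> str:
    """Interpret the contents of /sys/module/apparmor/parameters/enabled ("Y" or "N")."""
    return "enabled" if enabled_text.strip() == "Y" else "disabled"


def mac_status() -> dict:
    """Report which MAC system is active on this host, if any."""
    status = {"selinux": "not present", "apparmor": "not present"}
    try:
        status["selinux"] = selinux_mode(SELINUX_ENFORCE.read_text())
    except OSError:
        pass  # SELinux not loaded or not readable
    try:
        status["apparmor"] = apparmor_state(APPARMOR_ENABLED.read_text())
    except OSError:
        pass  # AppArmor module not loaded or not readable
    return status


if __name__ == "__main__":
    print(mac_status())
```

A check like this fits well into provisioning scripts, so that a host where someone has switched MAC off is noticed before containers are deployed on it.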

Even More Security with Docker Enterprise Edition

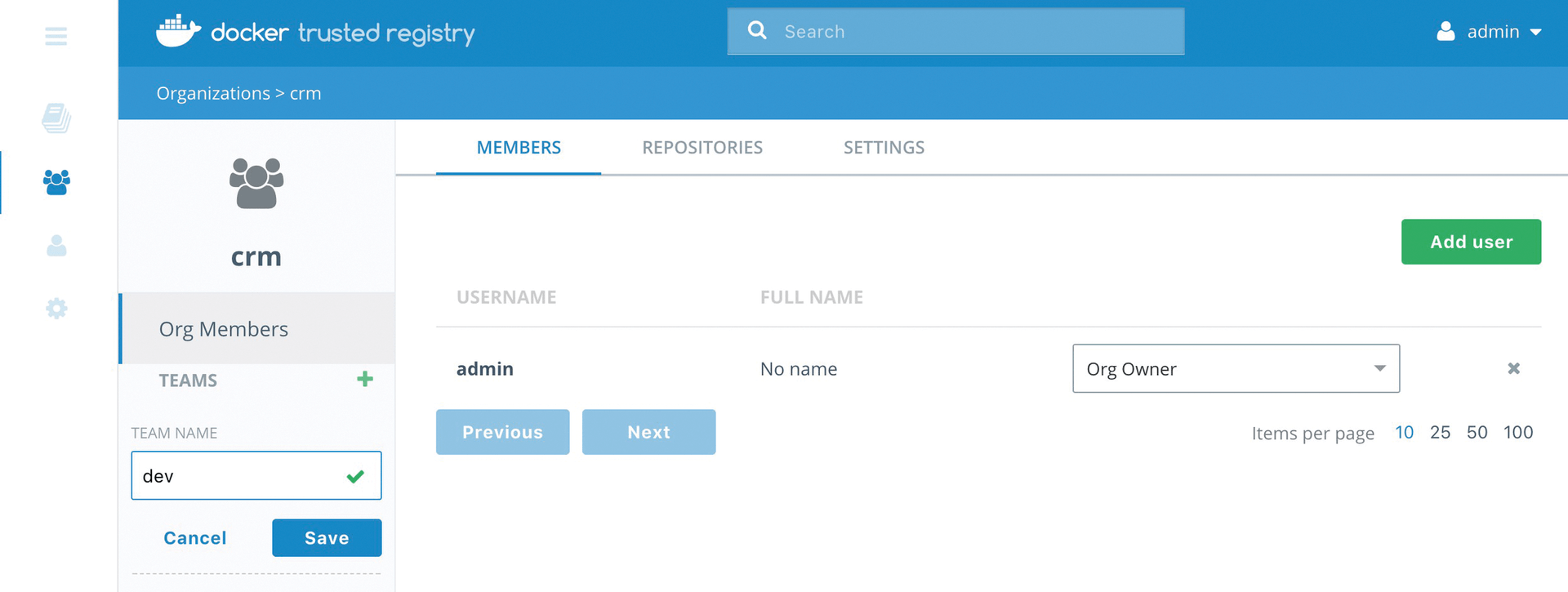

If you use Docker on a large scale, you might wish to consider buying the Docker Enterprise Edition (Figure 4). The Enterprise Edition comes with several additional features that are missing in the Community Edition. The most prominent addition is probably the Role-based Access Control (RBAC) system.

RBAC makes it possible to connect Docker to an existing user directory, such as LDAP. On the basis of defined roles, it is then possible to determine in a granular manner who can do what in Docker. This option completely decouples Docker user management from the system user management. The Enterprise Edition also comes with some graphical management tools that allow easy configuration for various security functions.

Know the Source

Docker Hub is the largest online exchange for Docker images. If you want to provide a container for the community on the Docker Hub, it's not difficult: First register, then complete some technical details, and then you can start.

Previous articles in ADMIN have already pointed to the inherent dangers of this system several times. Many Docker containers are black boxes, which means that you have to trust that the container creator had noble intentions. Image providers sometimes upload the Dockerfile on which an image is based to GitHub, thus allowing easy inspection by potential users. Docker Hub, however, has no way to verify that the uploaded image actually matches the referenced Dockerfile.

If you create your own Docker container, be sure to base it on an official base image provided by a Linux distribution.

If you develop your own containers, you also have to think about how to keep them secure in the future: If a security update appears for libraries or programs within the container, the easiest solution is to rebuild the image from scratch using a Dockerfile that pulls in the updated package. This approach presupposes that you operate a complete continuous integration environment for your own containers, such as an environment based on GitLab [5].
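In such a pipeline, the rebuild step itself can be as simple as the following Python sketch. The --pull option makes Docker check for a newer version of the base image, and --no-cache forces every Dockerfile instruction to run again, so package installations pick up the updates. The tag name used in the usage example is an assumption.

```python
import subprocess


def rebuild_command(tag: str, context: str = ".") -> list:
    """docker build invocation that ignores stale layers and base images."""
    return [
        "docker", "build",
        "--pull",      # always check the registry for a newer base image
        "--no-cache",  # re-run every instruction, e.g. package updates
        "-t", tag,
        context,
    ]


def rebuild(tag: str, context: str = ".") -> None:
    """Run the build; a failed build raises CalledProcessError and fails the CI job."""
    subprocess.run(rebuild_command(tag, context), check=True)
```

For example, rebuild("registry.example.com/app:latest") run from the directory containing the Dockerfile produces a fresh image that a scheduled CI job can then push.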

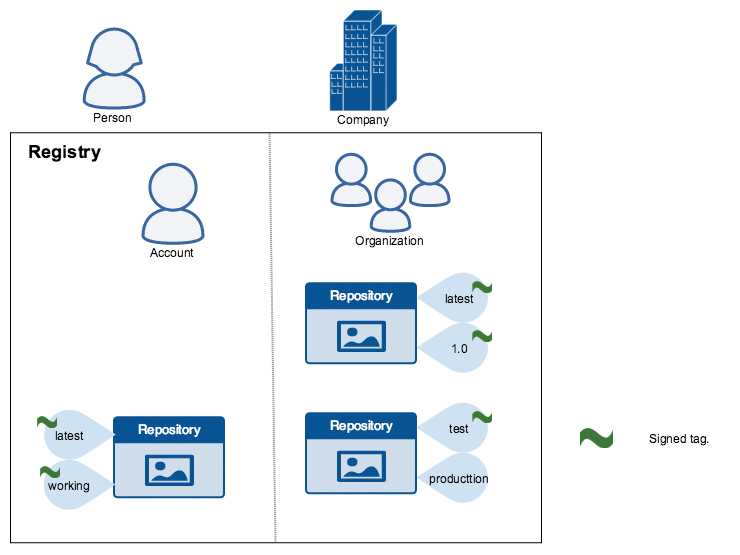

Another important security tool is Docker's Content Trust feature: When it is enabled, the client only downloads images from a registry if their tags are digitally signed. Docker's registry protocol lets you tag individual images (Figure 5), and Docker's signing function is based on these tags. Using the docker command, you create the necessary keys only once and can then choose whether or not to digitally sign a tag when tagging images.

If you use the signature function, you enable the signature check for docker pull on the target host by setting the DOCKER_CONTENT_TRUST environment variable to 1. If communication takes place via HTTPS, which is normal for Docker's registry function, the client running on the target host can safely assume that an image loaded from the registry using a signed tag is actually the image uploaded by the key owner, and that the image is unchanged. Even if you don't run your own registry, this function is very practical, because it also works with remote registry services that support Content Trust, such as Docker Hub.
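On the client side, signature verification hinges on the DOCKER_CONTENT_TRUST environment variable: With it set to 1, docker pull refuses tags that carry no valid signature, and docker push signs the tags it pushes. The Python sketch below wraps a pull in that setting; the function names are hypothetical, and the image name you pass in is up to you.

```python
import os
import subprocess
from typing import Mapping, Optional


def content_trust_env(base: Optional[Mapping[str, str]] = None) -> dict:
    """Copy the environment and switch on Docker Content Trust."""
    env = dict(os.environ if base is None else base)
    env["DOCKER_CONTENT_TRUST"] = "1"
    return env


def trusted_pull(image: str) -> None:
    """Pull `image` only if its tag is signed; raises CalledProcessError otherwise."""
    subprocess.run(["docker", "pull", image], env=content_trust_env(), check=True)
```

Setting the variable per invocation, as here, leaves the rest of the shell session unchanged; exporting it globally on production hosts is the stricter alternative.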

Conclusions

In theory, Docker containers offer weaker isolation than VMs running on hypervisors such as KVM.

Steps such as using TLS and MAC can help to lock down the Docker environment. Seccomp is also recommended if containers of several customers run on the same system.

Admins should also pay attention to permissions: Container documentation sometimes states that privileged mode is required. Similar to the unspeakable wget -O - URL | sudo bash constructs found all over the web, this privileged mode requirement is often a sign of bad container design rather than technical necessity.

Last but not least, be sure you are using a reliable container image. It may be tempting to download and launch any old image from the Docker Hub, but you are running a risk if the image is out of date or if you can't verify exactly what is actually inside the image file.

Develop a suitable continuous integration/continuous delivery workflow and use it to build containers for your own requirements. At the very least, admins should only use images that come from trusted sources. In addition to increased security, this operating concept has the side effect of making container operations easier and friendlier.

If you spend some time and energy on addressing the security issues, you'll find that you can operate Docker containers quite securely.