Automated orchestration of a horizontally scalable build pipeline

Nonstop

With the information I provide in this article, you can deploy and test a Jenkins-based horizontally scalable build pipeline using the technologies shown in the "Technology List" box.

A Jenkins tutorial is outside the scope of this article, and ample resources and tutorials can be found online. In short, Jenkins is a tool for continuous integration of software projects. For example, a development team commits code changes to a central repository. On submission (e.g., git push), Jenkins takes over: it builds the software automatically, checks that it compiles (sending notifications and error messages if it does not), and otherwise continues with the next phase, often automated unit test runs.

A Jenkins system comprises a "master" machine and an arbitrary number of "slave" machines. The slaves are used to offload build jobs from the master. Such a configuration helps you avoid the need to upgrade a single master machine's hardware resources when the build load of a project increases.

Naturally, software projects tend to increase in size, in terms of codebase and number of developers, and a project can grow unexpectedly fast, straining the existing build system and process such that an IT team then needs to take time out to expand a server's CPU or RAM resources (i.e., vertical scaling). The solution demonstrated in this article avoids vertical scaling headaches, instead allowing horizontal scalability with arbitrary Jenkins slave machines spawned inside their own Docker containers.

An Ansible tutorial is also outside the scope of this article, but again, plenty of tutorial resources on Ansible can be found online [1]. In short, Ansible is an automated deployment tool that can bootstrap servers or hosts at the application level with ssh, scp, and sftp. For example, Ansible could be used to install Python automatically on 20 virtual machines (VMs) or servers. Alternatives to Ansible include Chef and Puppet, but Ansible is popular because of its simplicity and reliability.

Deployment with VirtualBox and Vagrant makes it very straightforward to try out and test the technologies in a local environment such as a laptop. The laptop or desktop needs a minimum of 8GB of RAM to run the two VMs in this example. VirtualBox networking can be quite challenging, so getting this setup to work will give you confidence that a cloud or data center deployment will work without major issues.

You might wonder why Docker Swarm has been chosen as the container orchestration technology, as opposed to the currently popular Kubernetes. I found that Docker Swarm worked out of the box with the VirtualBox network configuration used here: one host-only adapter and one NAT adapter for each of the two VMs.

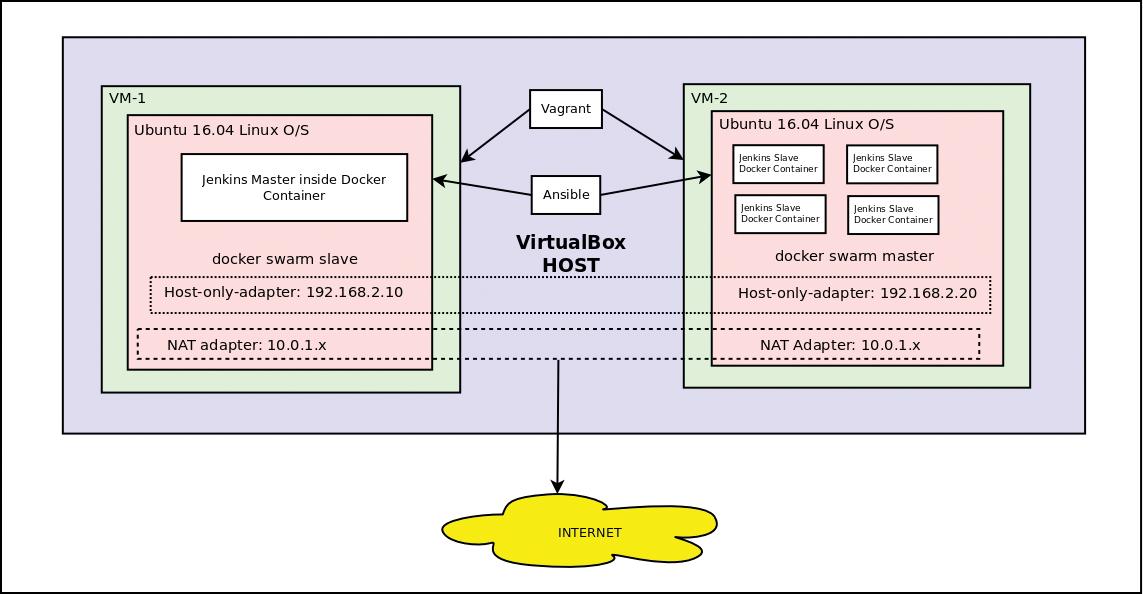

The example can be deployed automatically using Vagrant and Ansible, ending with the architecture illustrated in Figure 1. The arrows from the Ansible and Vagrant boxes illustrate how Vagrant controls the VM infrastructure, whereas Ansible controls what happens inside the operating system (in this case, Ubuntu Linux 16.04). For a cloud deployment, the Vagrant code can easily be ported to an infrastructure-as-code tool such as Terraform or AWS CloudFormation that provisions resources on an infrastructure-as-a-service (IaaS) cloud.

The complete deployment is free to download on GitHub [2]. VirtualBox, Vagrant, and Ansible versions should be the same as those shown in the "Technology List" box. Note that Docker Swarm does not have to be installed, because it is included with Docker by default, which is another advantage of using Docker Swarm over Kubernetes (see the "Kubernetes" box).

Once the GitHub repository has been cloned to a local directory on a Linux machine (it's theoretically possible with Windows but is untested!), you will need to change into the root directory of the downloaded Git repository and type the command:

vagrant up

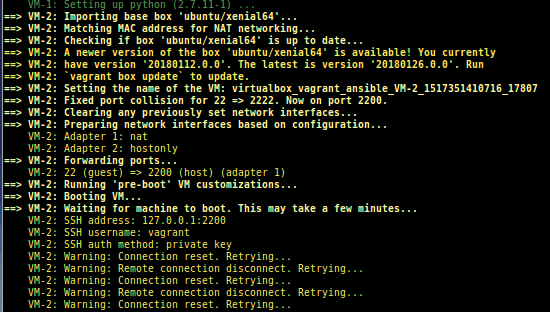

You can study the Vagrantfile in the same directory to find out exactly what it does, and you can modify it if desired (e.g., to add a third VM). The file essentially bootstraps two VMs, each with one host-only network adapter and one NAT adapter (refer to Figure 1 for the IP address subnets), and then runs a script named bootstrap.sh, which installs Python on each VM (Figure 2). Python must be installed at this stage, because Ansible needs it on the target hosts to run its modules.
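If you add a third VM, its host-only address must fit the same subnet. The Python sketch below assumes a 192.168.2.0/24 host-only subnet and a numbering convention in which VM-n gets host address 10 × n. Both are inferred only from the two addresses used in this article (192.168.2.10 for VM-1 and 192.168.2.20 for VM-2), and the function name vm_ip is hypothetical, not part of the repository.

```python
import ipaddress


def vm_ip(n: int, subnet: str = "192.168.2.0/24", step: int = 10) -> str:
    """Return the host-only IP for VM-n under the ASSUMED 'step x n' convention.

    Raises ValueError if the address is not a usable host in the subnet
    (outside the network, or equal to the network or broadcast address).
    """
    net = ipaddress.ip_network(subnet)
    addr = net.network_address + step * n
    if addr not in net or addr in (net.network_address, net.broadcast_address):
        raise ValueError(f"VM-{n} would get {addr}, which is not a usable host in {net}")
    return str(addr)


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(f"VM-{n}: {vm_ip(n)}")
```

Under these assumptions, a third VM would get 192.168.2.30, and the check catches numbering schemes that would run past the end of the subnet.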

Similarly, you would install the Puppet or Chef client/daemon at this stage for a Chef or Puppet deployment. If using AWS, for example, Python could be installed using CloudFormation or Terraform before initiating an Ansible deployment run.

Deployment by Ansible

An automated Ansible deployment to the running Ubuntu 16.04 VMs is efficient and flexible. A tutorial or beginner's guide to Ansible is outside the scope of this article, but one can be found on the Ansible website [4] for the interested reader.

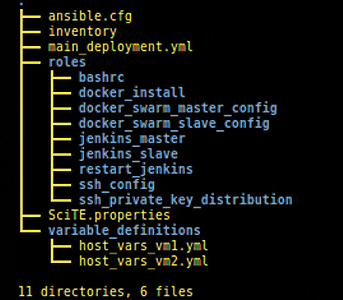

An Ansible deployment is organized into "playbooks," which can themselves be categorized using Ansible "roles." Roles are useful for organizing Ansible playbook runs into sections. The top-level directory tree of this Ansible deployment is shown in Figure 3 (ignore the SciTE properties file; this is just an artifact of using the SciTE editor):

- ansible.cfg. A pointer to the inventory file, which contains labels and domain names or IP addresses. In this case, these are the IP addresses of the two VMs used in the deployment (i.e., for the VirtualBox host-only adapter interfaces used for internal routing between the VMs).
- main_deployment.yml. The main driver of the Ansible playbook run. This YML file calls the lower-level role playbooks organized under the roles directory.
- variable_definitions. A directory containing host variables for each of the two VMs, host_vars_vm1.yml and host_vars_vm2.yml. These files contain (for each VM) a path to the private SSH key generated by Vagrant, a local directory path prefix (which needs to be modified to point to your installation directory), and the home directory and username of the account running inside each of the VMs.
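The inventory file that ansible.cfg points to can use Ansible's INI format: a [group] label followed by one host per line. As a minimal sketch, the Python function below generates such a file from a mapping of labels to IP addresses. The group names jenkins_master and jenkins_slaves are assumptions for illustration, not necessarily the labels used in the repository.

```python
def render_inventory(groups: dict[str, list[str]]) -> str:
    """Render an Ansible INI-style inventory: one [group] header per label,
    followed by one host (IP address or domain name) per line."""
    sections = []
    for group, hosts in groups.items():
        sections.append("\n".join([f"[{group}]", *hosts]))
    return "\n\n".join(sections) + "\n"


if __name__ == "__main__":
    # Hypothetical group labels; the IPs are the VMs' host-only adapters.
    print(render_inventory({
        "jenkins_master": ["192.168.2.10"],   # VM-1
        "jenkins_slaves": ["192.168.2.20"],   # VM-2
    }), end="")
```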

During the following summary description of each role within the roles directory relating to Docker, Docker Swarm, Jenkins, and the Jenkins master and slave bootstrapping and configuration (along with important shell commands that feature in the playbooks), you should refer back to Figure 1 frequently to help you fully understand the final target architecture that is built with Vagrant and Ansible.

The bashrc, ssh_config, and ssh_private_key_distribution roles configure the .bashrc files and /etc/ssh/sshd_config and distribute private keys to the VMs, so that the VMs can talk to each other using the same private keys Vagrant generated for host access to them. (I do this for simplicity and convenience, but you might want to make the key distribution more secure, for example, by using Ansible Vault to store secrets.)

Listing 1 shows two Ansible tasks from ssh_private_key_distribution: the first distributes Vagrant's VM-2 private SSH key to a VM, and the second adds that key as an IdentityFile entry to /etc/ssh/ssh_config.

Listing 1: ssh_private_key_distribution

- name: distribute VM-2 private ssh key to VM
  copy:
    src: ../../.vagrant/machines/VM-2/virtualbox/private_key
    dest: "{{ home_dir }}/.ssh/vm-2_priv_ssh_key"
    owner: "{{ username }}"
    group: "{{ username }}"
    mode: 0600

- name: add private ssh keys to /etc/ssh/ssh_config in all VMs
  become: yes
  lineinfile:
    dest: "/etc/ssh/ssh_config"
    line: "IdentityFile ~/.ssh/vm-2_priv_ssh_key"
    regexp: "vm-2_priv_ssh_key"
    state: present
    insertafter: EOF
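The lineinfile task is idempotent: rerunning the playbook does not append a second IdentityFile line, because the regexp finds the existing entry and replaces it instead. The Python sketch below models that behavior in simplified form (it ignores options such as backrefs and insertbefore). ensure_line is a hypothetical helper for illustration, not part of Ansible's API.

```python
import re


def ensure_line(lines: list[str], line: str, regexp: str) -> list[str]:
    """Simplified model of lineinfile with state=present and insertafter=EOF.

    If `regexp` matches any existing line (re.search semantics), the LAST
    matching line is replaced by `line`; otherwise `line` is appended.
    Applying it twice gives the same result as applying it once.
    """
    pattern = re.compile(regexp)
    result = list(lines)
    matches = [i for i, existing in enumerate(result) if pattern.search(existing)]
    if matches:
        result[matches[-1]] = line
    else:
        result.append(line)
    return result


if __name__ == "__main__":
    ssh_config = ["Host *", "    SendEnv LANG LC_*"]
    entry = "IdentityFile ~/.ssh/vm-2_priv_ssh_key"
    once = ensure_line(ssh_config, entry, "vm-2_priv_ssh_key")
    twice = ensure_line(once, entry, "vm-2_priv_ssh_key")
    print(once == twice)  # True: the second run changes nothing
```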

The docker_install role installs Docker Community Edition on each VM by running a series of Ansible tasks that follow the installation instructions in the official Docker Community Edition documentation. Readers who want to try this deployment on Red Hat-based systems will need to port the Ubuntu-specific tasks, such as the apt repository task in Listing 2.

Listing 2: Ubuntu-Specific apt Repository Task

- name: add correct apt repo
  become: yes
  apt_repository:
    repo: deb [arch=amd64] https://download.docker.com/linux/ubuntu {{lsb_release_output.stdout}} stable
    state: present
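In Listing 2, lsb_release_output is presumably a variable registered by an earlier task that runs lsb_release -cs, which prints the Ubuntu release codename (xenial on Ubuntu 16.04). That earlier task is an assumption, because it is not shown here. The Python sketch below builds the same repository line, which makes it easy to see what the templated value expands to; both function names are hypothetical.

```python
import subprocess


def docker_apt_repo(codename: str, arch: str = "amd64") -> str:
    """Build the Docker CE apt source line from Listing 2 for an Ubuntu codename."""
    return f"deb [arch={arch}] https://download.docker.com/linux/ubuntu {codename} stable"


def ubuntu_codename() -> str:
    """Return the codename printed by `lsb_release -cs` (Ubuntu/Debian hosts only)."""
    out = subprocess.run(["lsb_release", "-cs"], capture_output=True, text=True, check=True)
    return out.stdout.strip()


if __name__ == "__main__":
    # Uses a fixed codename so the example also runs off Ubuntu.
    print(docker_apt_repo("xenial"))
```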

The jenkins_master, jenkins_slave, and restart_jenkins roles contain playbooks used to install, set up, and configure a Jenkins master and Jenkins slaves across the VMs.

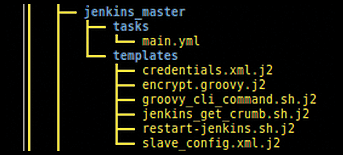

An example of the Ansible directory tree structure for the jenkins_master role is shown in Figure 4. Under this role, a tasks directory contains main.yml, and a templates directory contains Jinja2 templates for use with Ansible. (A discussion of Jinja2 Ansible templates is outside the scope of this article.) The templates are used to copy files from the source (host) to the target (VM), and the Ansible variables inside them, written as {{ ... }}, are replaced by specific values during the transfer. This feature really starts to demonstrate the power of Ansible as an automated deployment tool.
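To illustrate just the substitution step, here is a minimal Python sketch that replaces {{ name }} and dotted {{ name.attr }} placeholders with values from a dictionary. It is not Jinja2 (there are no filters, conditionals, or loops), and render is a hypothetical helper. Like Ansible with its default settings, it fails on an undefined variable rather than silently leaving the placeholder in place.

```python
import re

# Matches {{ name }} or {{ name.attr.subattr }}, with optional inner whitespace.
_PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][\w.]*)\s*\}\}")


def render(template: str, variables: dict) -> str:
    """Replace each placeholder with its value; raise KeyError if undefined."""

    def lookup(match: re.Match) -> str:
        value = variables
        for part in match.group(1).split("."):
            value = value[part]  # KeyError signals an undefined variable
        return str(value)

    return _PLACEHOLDER.sub(lookup, template)


if __name__ == "__main__":
    print(render('dest: "{{ home_dir }}/.ssh/vm-2_priv_ssh_key"', {"home_dir": "/home/vagrant"}))
```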

jenkins_master role files. The jenkins_master role bootstraps Jenkins to a basic level so that the remaining configuration can be automated. First, it clones a baseline Jenkins home directory and pulls the Jenkins master and slave Docker images:

git clone https://github.com/blissnd/jenkins_home.git {{ home_dir }}/jenkins_home

docker pull jenkins/jenkins

docker pull jenkinsci/ssh-slave

Some SSH key administration is then carried out before launching the Jenkins master container:

docker run -p 8080:8080 --rm -d -v /home/vagrant/jenkins_home:/var/jenkins_home jenkins/jenkins
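The docker run command above hardcodes /home/vagrant, whereas the clone target is {{ home_dir }}/jenkins_home. The Python sketch below (the function name is hypothetical) derives the same argument list from the home directory, so both paths stay in sync if the VM username changes. Passing such a list to subprocess.run, rather than a single shell string, also avoids quoting problems.

```python
import shlex


def jenkins_master_run_args(home_dir: str, host_port: int = 8080,
                            image: str = "jenkins/jenkins") -> list[str]:
    """Build the `docker run` arguments for the Jenkins master container.

    -p publishes the Jenkins web UI, --rm removes the container when it stops,
    -d runs it detached, and -v mounts the cloned jenkins_home as the
    container's Jenkins home directory.
    """
    return [
        "docker", "run",
        "-p", f"{host_port}:8080",
        "--rm", "-d",
        "-v", f"{home_dir}/jenkins_home:/var/jenkins_home",
        image,
    ]


if __name__ == "__main__":
    # Print the command for inspection; pass the list to subprocess.run to execute it.
    print(shlex.join(jenkins_master_run_args("/home/vagrant")))
```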

At this point, script templates are copied across with Ansible variables substituted and are then run on the target VM. These scripts get a command-line token from the (now running) Jenkins server and run a Groovy script over the command line that encrypts the private SSH key into a form the Jenkins master can use to communicate with the Jenkins slaves. (See the "Bootstrapping Jenkins" section for an explanation of why it works this way.)

Finally, the credentials template is copied (now containing the correct SSH keys), and Jenkins is restarted.

The docker_swarm_master_config and docker_swarm_slave_config roles are responsible for configuring Docker Swarm on each of the VMs.

Ansible is an excellent choice for accomplishing this task in a fully automated way. The swarm is initialized on VM-2. Note that the master and slave roles are reversed for Docker Swarm: VM-1 runs the Jenkins master but is itself a Docker swarm slave (a worker, in Docker's terminology), whereas VM-2 runs Jenkins slaves but is itself the Docker swarm master (a manager). The following command initializes the swarm:

docker swarm init --advertise-addr 192.168.2.20

Notice that the IP address given is that of VM-2's VirtualBox host-only adapter. The next steps in the playbook extract the Docker swarm security token, perform some error checking, and run a join command so that the Docker swarm master also joins the swarm. A commented-out task at the end of the playbook's .yml file shows how to promote a Docker swarm node to a swarm master.
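Docker prints the worker join command when the swarm is initialized, and docker swarm join-token -q worker prints only the worker token. As a sketch of the extraction step (not the playbook's actual tasks), the Python below pulls the token out of either form of output and rebuilds the join command for the manager's address; 2377 is Docker Swarm's default management port. The function names and the sample token are hypothetical.

```python
import re

# Swarm join tokens start with "SWMTKN-" (e.g., SWMTKN-1-<hash>-<secret>).
_TOKEN = re.compile(r"SWMTKN-[0-9A-Za-z-]+")


def extract_token(output: str) -> str:
    """Return the first swarm join token found in command output."""
    match = _TOKEN.search(output)
    if match is None:
        raise ValueError("no swarm join token found in output")
    return match.group(0)


def join_command(token: str, manager_ip: str, port: int = 2377) -> str:
    """Build the command a worker node runs to join the swarm."""
    return f"docker swarm join --token {token} {manager_ip}:{port}"


if __name__ == "__main__":
    # Hypothetical sample output; real tokens are much longer.
    sample = "    docker swarm join --token SWMTKN-1-abc123-def456 192.168.2.20:2377\n"
    print(join_command(extract_token(sample), "192.168.2.20"))
```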

The docker_swarm_slave_config role contains only one task, which simply adds the slave to the swarm. A close look at it reveals how to access Ansible host variables that were set on a different host (here, the swarm master at 192.168.2.20):

shell: "{{ hostvars['192.168.2.20']['docker_swarm_join_command'] }}"

After successfully running the vagrant up command to bring up the VMs and install Python on them, you can run the complete deployment by changing into the Ansible directory and running ansible-playbook:

cd Ansible
ansible-playbook main_deployment.yml

Avoiding SSH Problems

To prevent SSH problems when rerunning Ansible (for example, after the VMs have been re-created and present new host keys), it might be necessary to delete the ~/.ssh/known_hosts file:

rm ~/.ssh/known_hosts

This might be the only solution to SSH problems once all other causes, such as incorrect keys, have been ruled out.
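Deleting known_hosts discards the host keys of every machine you have connected to, not just those of the two VMs. A more targeted alternative is ssh-keygen -R 192.168.2.10 (and likewise for 192.168.2.20), which removes only the keys for the given host. The Python sketch below does the same for plain-text entries. It is a simplified illustration that deliberately leaves hashed entries (lines starting with |1|) and @cert-authority/@revoked marker lines untouched, and prune_known_hosts is a hypothetical helper.

```python
def prune_known_hosts(lines: list[str], hosts: set[str]) -> list[str]:
    """Drop known_hosts lines whose host field names any of `hosts`.

    Handles comma-separated host lists and the [host]:port form. Blank lines,
    comments, hashed entries (|1|...), and marker lines (@...) are kept as-is.
    """
    kept = []
    for line in lines:
        fields = line.split()
        if not fields or fields[0].startswith(("#", "|", "@")):
            kept.append(line)
            continue
        # "[192.168.2.20]:22" -> "192.168.2.20"; "host1,host2" -> {"host1", "host2"}
        names = {name.split("]")[0].lstrip("[") for name in fields[0].split(",")}
        if names.isdisjoint(hosts):
            kept.append(line)
    return kept


if __name__ == "__main__":
    sample = ["192.168.2.10 ssh-ed25519 AAAA1", "example.com ssh-rsa AAAA3"]
    print(prune_known_hosts(sample, {"192.168.2.10", "192.168.2.20"}))
```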

Ansible Modules and the Shell

The attentive reader might notice that many shell commands have been used in the playbooks, as opposed to Ansible modules. Shell commands have been used intentionally for the purposes of this exercise to demonstrate the commands behind the modules. This approach aids in the learning process with regard to shell commands and is useful for readers who might want to try some of the steps manually, without the aid of Ansible.

Bootstrapping Jenkins

At this stage, it is worth mentioning an important point regarding bootstrapping of the Jenkins master Docker container. To handle the Jenkins command-line and API commands used later in the deployment, a small group of Jenkins plugins has to be installed from the outset. Normally, this step happens when Jenkins is first launched: Jenkins requests the initial token/key for activation, offers to install basic plugins, and sets up an initial user account.

To accomplish this in an automated way, a baseline Jenkins configuration is cloned automatically from the jenkins_home GitHub repository at https://github.com/blissnd/jenkins_home. The corresponding Ansible playbook executes the git clone command near the beginning of the Jenkins container bootstrapping process.

You do not have to clone this repository manually! It will be done automatically during the Ansible playbook runs. The above information and URL are given purely for information.

One point of potential confusion that is worth highlighting at this stage is the somewhat complex configuration of the SSH keys used by the Jenkins master to communicate with and bootstrap the Jenkins slaves. Jenkins has a quirk regarding storage of SSH keys.

Although the SSH slave configuration GUI appears to allow use of an SSH private key file, and even presents the option to point to an existing .ssh directory, this does not appear to work as intended, at least not in the Jenkins Docker image used here. Most users will likely paste the SSH key's ASCII text directly, which works fine and avoids the issue during manual deployments. Unfortunately, this option is not available in the automated Ansible process, which requires a programmatic or scripted solution through the command-line interface or REST API.

After some investigation, it turned out that Jenkins encrypts the SSH key, possibly with its own master key, and then stores that result inside the credentials.xml file in the Jenkins home directory. Therefore, for automated deployment of the Jenkins Docker slaves, a method had to be found (1) to use the command-line interface to get the Jenkins token for use in API calls and (2) to pass in a Groovy script to encrypt the private SSH key before storing it in credentials.xml.
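Once Jenkins has produced the encrypted string, it still has to be placed into credentials.xml. The playbook does this with a Jinja2 template; as an alternative sketch, the Python below sets the value with the standard library's XML parser. The element path privateKeySource/privateKey reflects the layout the SSH Credentials plugin typically uses for directly entered keys, but treat it as an assumption and check it against your own credentials.xml. set_private_key is a hypothetical helper, and the encryption itself must still be done by Jenkins.

```python
import xml.etree.ElementTree as ET


def set_private_key(credentials_xml: str, encrypted_key: str) -> str:
    """Return credentials_xml with every privateKeySource/privateKey element
    (ASSUMED element path) set to encrypted_key.

    The <?xml ...?> declaration is stripped before parsing (Jenkins writes
    version='1.1', which not every parser accepts) and is not re-emitted;
    XML comments are dropped as well.
    """
    body = credentials_xml.lstrip()
    if body.startswith("<?xml"):
        body = body[body.index("?>") + 2:].lstrip()
    root = ET.fromstring(body)
    nodes = root.findall(".//privateKeySource/privateKey")
    if not nodes:
        raise ValueError("no privateKeySource/privateKey element found")
    for node in nodes:
        node.text = encrypted_key
    return ET.tostring(root, encoding="unicode")
```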

Putting It All Together

Because of the many hard-to-anticipate factors, such as timeouts and potential SSH problems, you might have to run

ansible-playbook main_deployment.yml

several times before it runs successfully through to the end. Normally, running the same Ansible deployment again will resolve such problems and allow it to move on.

Note: Do not rerun just because you see errors in red; some of them can safely be ignored. If the Ansible run continues and reaches the end, everything should be running fine, even if errors in red appear along the way.
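If you prefer not to rerun the playbook by hand, a small wrapper can retry it a fixed number of times. The Python sketch below relies only on ansible-playbook's exit status: it stops at the first run that exits with 0 and gives up after a configurable number of attempts. Errors on tasks that are set to ignore failures are shown in red but do not make the run fail, which fits the note above. run_until_success and its runner parameter are hypothetical; the runner is injectable so the retry logic can be tested without Ansible.

```python
import subprocess
from typing import Callable, Optional, Sequence


def run_until_success(cmd: Sequence[str], attempts: int = 3,
                      runner: Optional[Callable[[Sequence[str]], int]] = None) -> int:
    """Run cmd until it exits with status 0; return the number of attempts used.

    By default, the command is executed with subprocess.run and its return
    code is inspected. Raises RuntimeError if every attempt fails.
    """
    run = runner or (lambda c: subprocess.run(list(c)).returncode)
    for attempt in range(1, attempts + 1):
        if run(cmd) == 0:
            return attempt
        print(f"attempt {attempt} of {attempts} failed")
    raise RuntimeError(f"{' '.join(cmd)} failed {attempts} times")
```

For example, run_until_success(["ansible-playbook", "main_deployment.yml"], attempts=3), run from the Ansible directory, retries the deployment up to three times.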

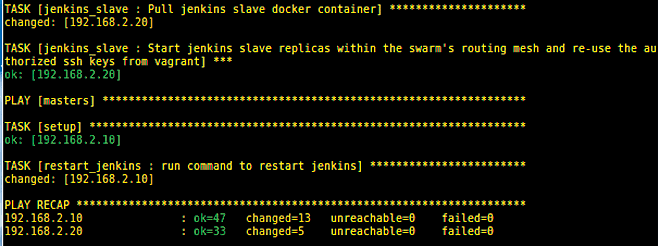

At the end of the final (successful) run, you should see a result as in Figure 5. Now point your host browser to http://192.168.2.10:8080/, which should be available both from outside the Docker container and outside the VM (i.e., from the host machine).
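Jenkins can take a while to start inside its container, so the URL might not respond immediately after the playbook finishes. The Python sketch below polls a URL until the server answers. wait_for_http is a hypothetical helper, and treating HTTP 503 as "still starting" is an assumption about how Jenkins behaves during startup; any other status, such as 403 on a login-protected page, counts as up.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 300.0, interval: float = 5.0) -> int:
    """Poll url until the web server answers; return the HTTP status code.

    Connection errors and HTTP 503 (ASSUMED to mean Jenkins is still starting)
    are retried every `interval` seconds until `timeout` seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                return response.status
        except urllib.error.HTTPError as err:  # must precede OSError (a base class)
            if err.code != 503:
                return err.code  # server is answering, e.g., 403 before login
        except OSError:
            pass  # URLError, connection refused, socket timeout: not reachable yet
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{url} did not respond within {timeout} seconds")
        time.sleep(interval)
```

For example, wait_for_http("http://192.168.2.10:8080/") returns as soon as Jenkins is reachable from the host machine.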

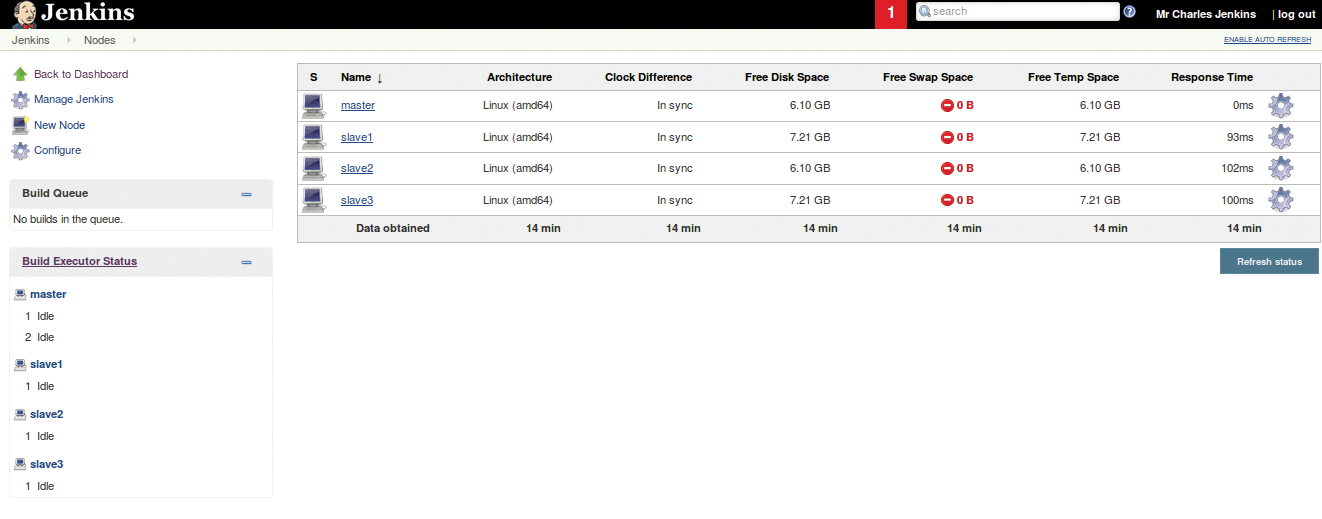

You should see the Jenkins login screen, where you can log in with username jenkins and password jenkins. Clicking on Build Executor Status on the left should show the master and three slave machines up and running (Figure 6).

The red warnings about zero available swap space can be ignored if you use SSD storage. With conventional hard drives, you can simply add swap space to each of the VMs if desired, which makes the swap space warnings disappear from the page [5].

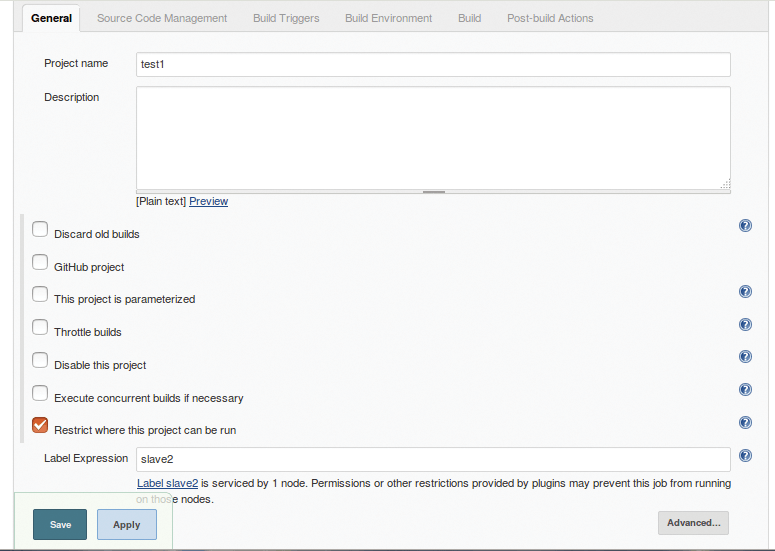

A default test job is included on the main Jenkins page as an example. Click on the job (test1) and select Configure on the left. You will see that this job is set to run on the slave machine named slave2 (Figure 7) by means of the Restrict where this project can be run option shown in the screenshot.

Now go back to the main Jenkins page (http://192.168.2.10:8080/). Click on the job test1 and then Build Now on the left to see the job running (a new blue circle appears on the left). Click on this topmost blue circle and then on Console Output on the left. You will see the job has run successfully on slave2 (Listing 3).

Listing 3: Console Output

Started by user Mr Charles Jenkins
Building remotely on slave2 in workspace /home/jenkins/workspace/test1
[test1] $ /bin/sh -xe /tmp/jenkins5029989702823914095.sh
+ echo hello
hello
Finished: SUCCESS
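When checking many builds, reading the console output by eye gets tedious. As a small illustration, the Python below extracts the node name and the build result from console text like Listing 3; the console text of a build can be fetched from its consoleText URL (for example, http://192.168.2.10:8080/job/test1/lastBuild/consoleText). The regular expressions are based only on the lines shown in Listing 3, so other Jenkins versions or plugins might format them differently, and summarize_console is a hypothetical helper.

```python
import re
from typing import Optional


def summarize_console(text: str) -> tuple[Optional[str], Optional[str]]:
    """Return (node, result) parsed from Jenkins console output.

    node is the agent named in "Building remotely on <node>" and result is the
    word after "Finished:"; each is None if the corresponding line is absent.
    """
    node = re.search(r"Building remotely on (\S+)", text)
    result = re.search(r"Finished: (\w+)", text)
    return (node.group(1) if node else None, result.group(1) if result else None)
```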

Now if you ssh to VM-2 with Vagrant (which must be done from the virtualbox_vagrant_ansible directory),

vagrant ssh VM-2

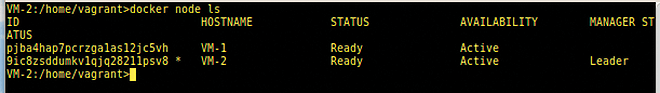

the following command will show that both VMs are active within the Docker swarm (Figure 8):

docker node ls

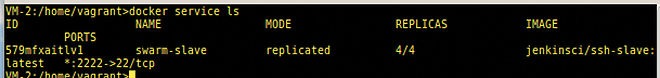

Similarly, the following command shows the four slave replicas within the Docker swarm (Figure 9):

docker service ls
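For scripted checks, docker service ls accepts a --format option with Go template placeholders such as {{.Name}} and {{.Replicas}}. The Python sketch below parses output produced with --format '{{.Name}} {{.Replicas}}' and reports whether every service is running all of its desired replicas. The function names and the service name jenkins_slave in the test data are hypothetical.

```python
def parse_replicas(output: str) -> dict[str, tuple[int, int]]:
    """Map service name -> (running, desired) from `docker service ls`
    output formatted as '{{.Name}} {{.Replicas}}'."""
    status = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        running, desired = fields[1].split("/")
        status[fields[0]] = (int(running), int(desired))
    return status


def all_converged(status: dict[str, tuple[int, int]]) -> bool:
    """True if every service runs as many replicas as it requests."""
    return all(running == desired for running, desired in status.values())
```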

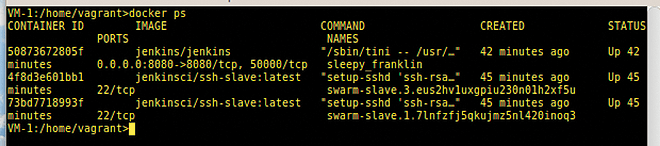

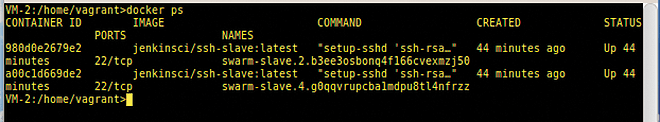

If you ssh to each VM and run docker ps, you will see the Jenkins slave Docker containers running across all of the active nodes in the Docker swarm (Figures 10 and 11).

Note that the VM-1 Docker process list includes the master Jenkins container. Other than that, Docker Swarm has distributed the Jenkins slave containers uniformly over the active VM nodes.
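By default, the swarm scheduler spreads a service's tasks across the available nodes, favoring nodes that are running fewer tasks. The Python sketch below is a deliberately simplified model of that idea. It ignores resource reservations, placement constraints, and node availability, which the real scheduler takes into account, and it does not count the Jenkins master, which was started with a plain docker run rather than as a swarm service. spread is a hypothetical function name.

```python
from collections import Counter


def spread(replicas: int, nodes: list[str]) -> list[str]:
    """Assign each replica to the node currently running the fewest tasks.

    Ties go to the node listed first, so the placement is deterministic.
    """
    load = Counter({node: 0 for node in nodes})
    placement = []
    for _ in range(replicas):
        target = min(nodes, key=lambda node: load[node])
        load[target] += 1
        placement.append(target)
    return placement


if __name__ == "__main__":
    placement = spread(4, ["VM-1", "VM-2"])
    print(placement)           # ['VM-1', 'VM-2', 'VM-1', 'VM-2']
    print(Counter(placement))  # two replicas per VM
```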

Conclusion

Jenkins empowers a continuous integration process and can even assist with continuous delivery to a test team or customer. The example in this article was deployed automatically using Vagrant and Ansible and shows how to achieve horizontal scalability with arbitrary Jenkins slave machines spawned inside their own Docker containers.