Monitoring and service discovery with Consul

Staying on Top

The cloud is rightly considered one of the most significant developments in IT in recent years: It clearly divides the industry into two groups – service providers and users – each of which has specific requirements.

One requirement concerns monitoring: Conventional monitoring in a cloud makes neither the providers of the large platforms nor their users happy, because what makes the cloud special is that it serves up resources dynamically. If the user needs a large amount of power at the moment, they book a corresponding number of virtual machines (VMs). If they only need a fraction of these resources later on, they return the redundant capacity to the cloud provider's pool.

The pool, however, must be monitored very carefully by the provider. The provider needs to know at all times how many resources can still be distributed to users – and when it's time to scale up the platform by adding more hardware.

A Different Kind of Monitoring

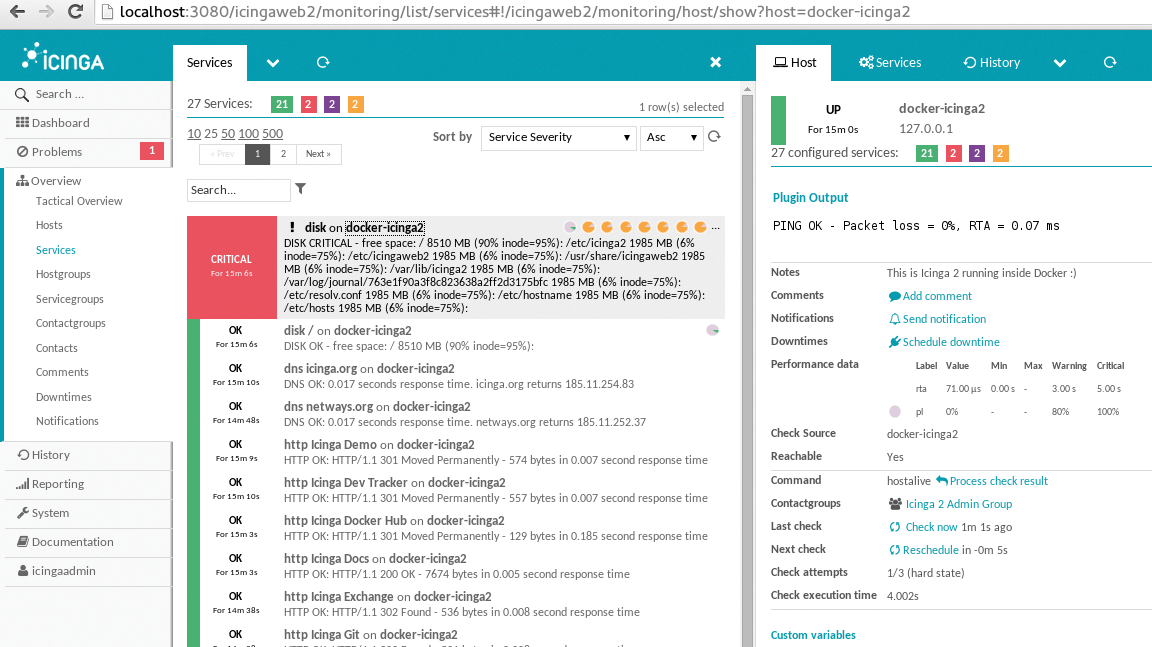

From the user's and the provider's point of view, traditional monitoring approaches are of limited suitability for monitoring cloud platforms. Their view of the world is usually binary: Either a system or a service works as required, so that the corresponding entry on the monitoring system is green, or not, in which case the entry is red, and an escalation spiral is set in motion (Figure 1). If necessary, admins are dragged from their beds.

This principle is not enough in clouds. Monitoring also means that the provider regularly receives information about the utilization of the platform from the monitoring system, so the platform can be expanded when necessary. That expansion has to be easy on the monitoring side, too, which is not the case with many classic monitoring systems: If you install 200 nodes, you do not want to spend hours adding the new hosts to your monitoring system manually. Instead, some kind of automatic detection is required.

Monitoring requirements are changing even more radically from the user's perspective: Users not only want to know whether their service is still available in principle, but also how high the current load is. Once the point is reached where the existing web server VMs are fighting a losing battle, the cloud platform ideally starts additional VMs and integrates them seamlessly into the existing virtual environment. If the load decreases again, the cloud ideally shuts down VMs that are no longer needed, so the customer does not incur unnecessary costs.

Additionally, the failure of a single component within a virtual cloud environment should not affect its functionality. If the setup is built correctly, it survives the failure without problems. Monitoring in the cloud should only sound an alert if a real problem has occurred that restricts the functionality of the environment. The ability to adapt appropriately to change is key in these cases.

Against this background, it is interesting that monitoring in clouds also differs in other respects: If you only want to monitor systems, you build the monitoring instance as an external component, independent of the setup itself. In the cloud, however, the results of monitoring operations immediately change the setup (e.g., with the addition or removal of VMs). The classical view of conventional monitoring obviously no longer works in such environments; in fact, clouds sometimes radically redefine monitoring.

How does the provider design its cloud monitoring to be flexible and dynamic? How do customers monitor their setups so that they scale horizontally automatically, or at least semi-automatically? These are the questions I investigate in this article, with the focus on Consul [1], which itself provides monitoring functions but can also be coupled well with solutions such as Prometheus [2].

What Works and What Doesn't

To begin, you should realize what can be achieved in terms of automatic scalability. Cloud providers have an easier job, because clouds usually only scale horizontally and never shrink. Once the admin has added a node to the setup, they can usually assume that the node will remain in the setup permanently. If the active monitoring system sounds an alarm in the event of a system failure, the provider's point of view is simple: it is almost always an error when entire servers disappear.

From the user's perspective, however, it can be an absolutely legitimate scenario for VMs that were previously part of the setup to disappear – that is, when the cloud reduces the setup because the current load is low. If monitoring alerts in such a case, it is a genuine false alarm. What applies to the provider is, of course, also true for the cloud user. If the cloud adds new VMs to the existing system, these should ideally also be included automatically in the existing monitoring.

Good Luck, Bad Luck

From the cloud providers' point of view, the good news is that a whole range of different monitoring solutions now exist that enable the automatic detection of new nodes through auto-discovery. It looks much less rosy from the users' point of view, because monitoring designed for use within clouds is almost always tied to a specific platform and is usually an integral part of it.

Take Amazon, for example. Here, a separate service ensures that virtual setups expand and contract as needed; the provider markets the corresponding functionality under the name Auto Scaling [3]. However, it is practically impossible to use it outside of Amazon Web Services (AWS). The monitoring involved is specifically adapted to AWS and is an implicit part of Auto Scaling.

Similarly, OpenStack [4] has a separate service called Senlin [5] for automatic scaling, which, however, has not achieved widespread distribution to date. If you build your setup for OpenStack and Senlin, you can hardly put it to meaningful use in a non-OpenStack environment.

There is a good reason for exclusive automatic scaling: If a cloud scales a setup, an existing monitoring system must communicate intensively with the APIs of that cloud to obtain the necessary information about the desired state of the components. This is the only way to find out whether the actual state matches the target state or whether VMs that should be active are missing in the setup. This task can be handled much better if the components involved are part of the cloud itself.

Smart Monitoring with Consul

Consul helps users who want to monitor cloud environments independent of the tools available in a specific installation. Consul can also be used on bare metal for comprehensive monitoring, making it a kind of miracle weapon for modern, flexible environments.

Some admins might recall Consul from HashiCorp. The program, written in Go, was introduced by the vendor as early as 2014 and was initially intended primarily for operation in clouds. That Consul is also very useful for various tasks on physical servers only gradually became apparent over the years.

HashiCorp itself markets Consul as a tool for three central tasks in distributed environments:

- Service Discovery: Consul can act as a central interface between servers and clients. Servers register their services with Consul, which keeps them in a list. Clients request that list when required and find out, for example, at what address their server can currently be reached.

- Health Checking: Consul automatically checks whether the services that have registered with it are still working. In this way, Consul avoids telling a client the address of a MySQL database that is currently inactive.

- Key Value (KV) Store: Consul also maintains a configuration database according to the key-value principle to which clients can add arbitrary entries. Because Consul is a cluster service, of which an instance will ideally be running on every system in the setup, all information of the KV store is available at any time on all systems of the platform.
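As a rough illustration of the health checking and KV store tasks, the following sketch wraps Consul's HTTP API in a few shell functions. It assumes a local agent on Consul's default HTTP port 8500; the variable CONSUL_URL, the function names, and the example keys and service names are made up for this illustration.

```shell
#!/bin/sh
# Sketch only: CONSUL_URL, the function names, and all keys are illustrative assumptions.
CONSUL_URL="${CONSUL_URL:-http://127.0.0.1:8500}"

# Build the URL of a Consul HTTP API endpoint below /v1/.
consul_endpoint() {
    printf '%s/v1/%s\n' "$CONSUL_URL" "$1"
}

# Service discovery plus health checking:
# list only those instances of a service whose checks are passing.
healthy_instances() {
    curl -s "$(consul_endpoint "health/service/$1")?passing"
}

# KV store: write a value under a key ...
kv_put() {
    curl -s -X PUT --data "$2" "$(consul_endpoint "kv/$1")"
}

# ... and read it back as plain text.
kv_get() {
    curl -s "$(consul_endpoint "kv/$1")?raw"
}
```

With a running agent, `healthy_instances mysql` would return the healthy MySQL instances as JSON, and `kv_put config/db/max_connections 200` followed by `kv_get config/db/max_connections` would store and retrieve a setting.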

Consul offers several architectural advantages: It contains a cluster consensus algorithm (Raft) that enforces a quorum and thus prevents split-brain situations from arising in the first place, which is particularly important for the KV store.

Consul is also extremely lightweight. The program ships as a single Go binary, has no dependencies, and only needs a configuration file. Therefore, Consul is easy to install on older systems where current versions of Python or Ruby are not available. Moreover, Consul is very frugal when it comes to resource requirements.

Consul's biggest advantage is undoubtedly that it is designed to work with other programs out of the box. A huge amount of information can be retrieved from Consul via a RESTful interface. As a hook back to the old world, Consul also offers the option of generating configuration files from information in its own service directory.

In fact, Consul makes it possible to implement the new type of monitoring that is necessary in dynamic environments. Two examples illustrate this: monitoring hardware and monitoring dynamic environments.

Conventional Monitoring

When working with real hardware, one of the most interesting questions is how the admin integrates a large number of new servers into the existing monitoring system as fast as possible. Because clouds are extremely dynamic, it is not unusual for 500 new servers to be integrated into a setup within a week.

Classical approaches like Icinga or Nagios have a difficult time keeping up; typically, the administrator has to help out with an automation solution such as Ansible or Puppet. Consul does this work for the admin by cleverly combining the functions of the software.

Consul on All Systems

To use Consul in physical setups, you first need to roll out the service on all participating systems, which is best done with the help of an automation solution such as Ansible. Consul has two different operating modes: server and agent (Consul's own documentation calls the latter client mode). In everyday operation, the two modes differ primarily in that the servers form the quorum and execute the consensus algorithm, whereas the agents primarily take care of themselves. Clouds almost always contain a category of servers that operate many cloud services as controllers; these are also ideal for the server role in Consul. The normal compute nodes, on the other hand, only work as agents.

Simply rolling out Consul on all systems results in a comprehensive database of all available cluster nodes. Where to go from there is also a question of the admin's personal preferences. The simplest option is to have Consul check whether or not certain services are available.
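The two operating modes could be configured roughly as in the following sketch, which writes one configuration file for the controllers and one for the compute nodes. The host names controller1 to controller3, the data center name, the data directory, and the output directory are assumptions for illustration; in practice, your automation solution would place the matching file in Consul's configuration directory.

```shell
#!/bin/sh
# Sketch only: host names, data center name, and directories are illustrative assumptions.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Controllers: take part in the consensus and wait for three servers
# before electing a leader.
cat > "$OUT_DIR/server.json" <<'EOF'
{
  "server": true,
  "bootstrap_expect": 3,
  "datacenter": "dc1",
  "data_dir": "/var/lib/consul",
  "retry_join": ["controller1", "controller2", "controller3"]
}
EOF

# Compute nodes: plain agents that join the cluster through the controllers.
cat > "$OUT_DIR/client.json" <<'EOF'
{
  "server": false,
  "datacenter": "dc1",
  "data_dir": "/var/lib/consul",
  "retry_join": ["controller1", "controller2", "controller3"]
}
EOF

echo "wrote $OUT_DIR/server.json and $OUT_DIR/client.json"
```

After copying the appropriate file to /etc/consul.d/, the agent can be started with `consul agent -config-dir=/etc/consul.d`.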

Case 1: Service Monitoring

From the admin's point of view, it is not complicated to integrate a service into the Consul service database. A simple command to the Consul API is sufficient. Alternatively, services can be stored directly on the hosts in the form of configuration files for Consul. If you want to tell Consul that Keystone, the OpenStack authentication component, is running on port 5000 on the controllers, this shell command will do the trick:

echo '{"service": {"name": "keystone", "tags": ["auth"], "port": 5000}}' | sudo tee /etc/consul.d/keystone.json

Afterward, you just need to restart the Consul agent on the respective host, and Consul knows the Keystone service for this host. Clients can then discover the registered services either with a DNS request or by asking Consul directly with a RESTful request.
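Both lookup paths might be exercised as in the following sketch. It assumes a local agent with Consul's default ports (8600 for DNS, 8500 for HTTP) and the default consul DNS domain; the function names are made up for this example.

```shell
#!/bin/sh
# Sketch only: assumes a local agent with default ports and the default "consul" domain.

# DNS name under which Consul publishes a registered service.
service_dns_name() {
    printf '%s.service.consul\n' "$1"
}

# Ask the agent's DNS interface; SRV records carry the port as well as the host.
lookup_dns() {
    dig +short @127.0.0.1 -p 8600 "$(service_dns_name "$1")" SRV
}

# Ask the HTTP API for the same catalog entry as JSON.
lookup_http() {
    curl -s "http://127.0.0.1:8500/v1/catalog/service/$1"
}
```

On a host with a running agent, `lookup_dns keystone` and `lookup_http keystone` should both report the controllers on which Keystone was registered.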

If you want to add monitoring to this configuration, you have several options: an HTTP-based check, a check in which Consul calls arbitrary external scripts, and a simple connection test in the style of a classic ping, wherein Consul checks over TCP that a service responds. Again, you can add the checks either via a REST request or a JSON configuration on the respective system.

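For the Keystone process described above, this might look like the following command. Note that Consul 1.0 and later expect the command as an args array rather than the older script field, and the agent must have script checks enabled (enable_script_checks or enable_local_script_checks):

echo '{"service": {"name": "keystone", "tags": ["auth"], "port": 5000, "check": {"args": ["sh", "-c", "curl localhost:5000 > /dev/null 2>&1"], "interval": "10s"}}}' | sudo tee /etc/consul.d/keystone.json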

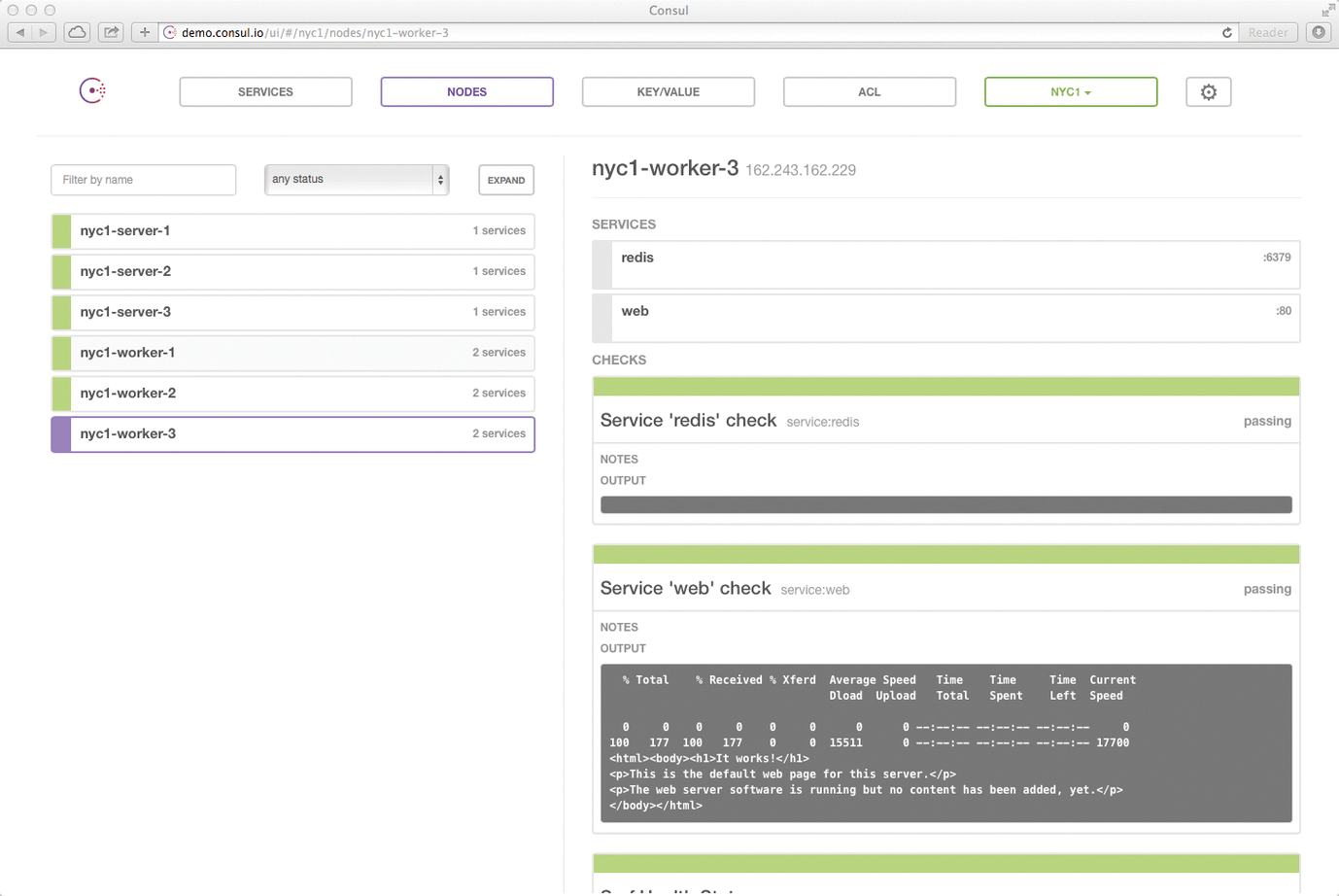

Assuming Keystone listens on localhost:5000, the described check would attempt to open the Keystone homepage every 10 seconds with an HTTP request. Consul offers its own web interface (Figure 2) that lists the results of the monitoring queries. It also offers the possibility of defining alerts. Consul then escalates according to the established rules, including the ability to send email.
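As an alternative to the script-based check above, Consul's built-in HTTP and TCP checks could be sketched as follows. The /v3 path (assumed here to return a 2xx status), the timeout, and the output directory are assumptions for illustration.

```shell
#!/bin/sh
# Sketch only: the /v3 path, the timeout, and the output directory are assumptions.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# HTTP check: Consul itself requests the URL; a 2xx response counts as passing.
cat > "$OUT_DIR/keystone-http.json" <<'EOF'
{"service": {"name": "keystone", "tags": ["auth"], "port": 5000,
  "check": {"http": "http://localhost:5000/v3", "interval": "10s", "timeout": "2s"}}}
EOF

# TCP check: passes as soon as a connection to the port can be opened.
cat > "$OUT_DIR/keystone-tcp.json" <<'EOF'
{"service": {"name": "keystone", "tags": ["auth"], "port": 5000,
  "check": {"tcp": "localhost:5000", "interval": "10s", "timeout": "2s"}}}
EOF

echo "wrote check definitions to $OUT_DIR"
```

Because both files define the same service, only one of them would be copied to /etc/consul.d/ on a given host. Unlike the script variant, neither check needs curl or a shell on the host.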

Case 2: Connecting with Prometheus

One advantage of the described monitoring solution with Consul alone is that it manages without heavyweight monitoring systems like Icinga or Prometheus. On the other hand, at the hardware level, these systems usually exist in some form anyway; typically, they were present before Consul came into the picture. Therefore, Consul should not be regarded as competition to the existing monitoring systems, but as an extension of the current setup.

Prometheus – the king of the hill for monitoring, alerting, and trending – can obtain the list of hosts to be monitored directly from Consul, because the current list of all hosts is always available there. At the same time, Prometheus, with its various exporters, now offers much more granular monitoring than would be possible with Consul alone. Especially with bare metal, Consul is often not the best choice; for example, monitoring SMART messages from your storage devices cannot be achieved reliably with Consul. Prometheus, on the other hand, offers a SMART exporter.

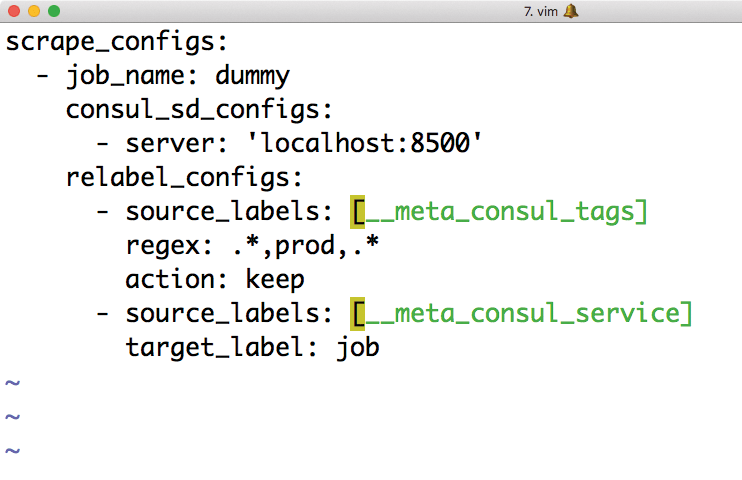

The Prometheus developers are aware that the combination of Prometheus and Consul is attractive. In fact, Prometheus now comes with an interface out of the box to dock to an existing Consul cluster and obtain the list of existing hosts and their services (Figure 3).

The rest is just the daily grind: If the admin rolls out Consul along with the Prometheus exporters for collecting metrics on the affected hosts and registers these as local services in Consul, Prometheus receives all the relevant data from Consul and can query all exporters there directly.
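A minimal sketch of this interplay follows: one file registers an exporter as a local Consul service, and the other tells Prometheus to take its scrape targets from the Consul catalog. The service name node-exporter, port 9100, the job name, and the file names are assumptions for illustration.

```shell
#!/bin/sh
# Sketch only: service name, port, job name, and file names are assumptions.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Consul side: register the locally running exporter as a service.
cat > "$OUT_DIR/node-exporter.json" <<'EOF'
{"service": {"name": "node-exporter", "tags": ["metrics"], "port": 9100}}
EOF

# Prometheus side: obtain the scrape targets from the Consul catalog.
cat > "$OUT_DIR/prometheus.yml" <<'EOF'
scrape_configs:
  - job_name: consul-exporters
    consul_sd_configs:
      - server: "localhost:8500"
        services: ["node-exporter"]
    relabel_configs:
      # Use the Consul node name as the instance label.
      - source_labels: [__meta_consul_node]
        target_label: instance
EOF

echo "wrote $OUT_DIR/node-exporter.json and $OUT_DIR/prometheus.yml"
```

Every host on which the service definition is rolled out then shows up as a scrape target without any further change to the Prometheus configuration.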

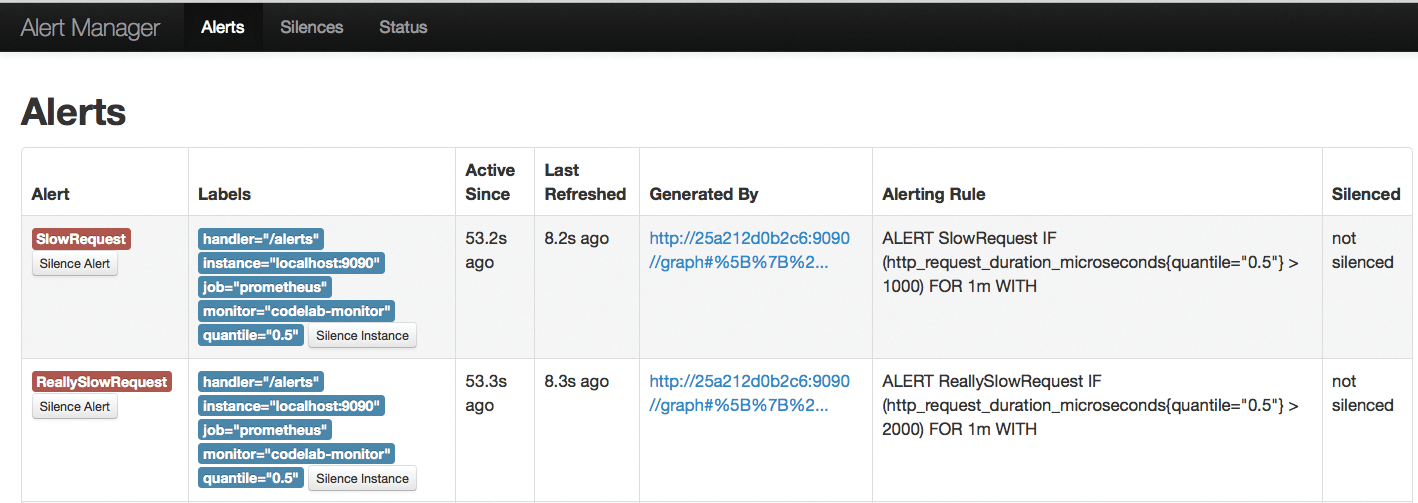

At this point, you would handle alerting exclusively via Prometheus; if the Ping metrics for a host are missing, Prometheus can sound the alert (Figure 4). The combination of automation, Consul, and Prometheus allows fully automated monitoring in this scenario, because once you have automatically rolled out Consul on new hosts with all exporters and the necessary service definitions, the host is a valid monitoring target for Prometheus.
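An alerting rule for this case might look like the following sketch, which writes a Prometheus rule file that fires when a target has failed its scrapes for five minutes. The job label consul-exporters, the alert name, the severity label, and the five-minute window are hypothetical and would need to match your own scrape configuration.

```shell
#!/bin/sh
# Sketch only: job label, alert name, severity, and time window are assumptions.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# The quoted heredoc delimiter keeps the shell from expanding $labels.
cat > "$OUT_DIR/host-alerts.yml" <<'EOF'
groups:
  - name: hosts
    rules:
      - alert: ExporterDown
        # "up" is set to 0 by Prometheus whenever a scrape fails.
        expr: up{job="consul-exporters"} == 0
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "{{ $labels.instance }} has not answered scrapes for 5 minutes"
EOF

echo "wrote $OUT_DIR/host-alerts.yml"
```

The file would be referenced from the rule_files section of the Prometheus configuration.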

If you prefer to use a more classic monitoring system than Prometheus, you still have options: With its template function, Consul can create files on the local filesystem that contain details from the Consul host and service database. Details can be found in the instructions on the HashiCorp GitHub site [6]. A bridge between Icinga, Zabbix, or other monitoring systems and Consul will also work.
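As a sketch of the template approach, the following snippet writes a consul-template input file that renders one Nagios-style host object per node known to Consul. The generic-host template name, the file names, and the render_hosts helper are assumptions for illustration.

```shell
#!/bin/sh
# Sketch only: file names, the generic-host template, and render_hosts are assumptions.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# One host object per node in the Consul catalog.
cat > "$OUT_DIR/hosts.cfg.ctmpl" <<'EOF'
{{ range nodes }}
define host {
    use        generic-host
    host_name  {{ .Node }}
    address    {{ .Address }}
}
{{ end }}
EOF

# Render the template once against the local Consul agent and exit.
render_hosts() {
    consul-template -template "$OUT_DIR/hosts.cfg.ctmpl:$OUT_DIR/hosts.cfg" -once
}
```

Calling `render_hosts` on a host with a running agent would produce hosts.cfg; without the -once flag, consul-template keeps running and rewrites the file whenever the node list changes.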

Consul in the Cloud

Consul shows its various strengths even better when used as a component of VMs in the cloud, because it was originally developed for this use case. As already mentioned, the monitoring standards in virtual setups are different from those for bare metal, because the focus is always on the availability of a specific service to the outside world. The primary goal of the administrator is to integrate Consul with cloud-integrated load balancers. Several examples illustrate this case.

First, the automatic service detection included in Consul plays a prominent role. If you build your virtual setup in such a way that the IP address of the usable database is always stored in Consul, you can automatically let your clients talk to this IP.

Consul's DNS back end shows its strengths, as well: The individual VMs within the cloud use Consul as the primary service for DNS queries, so the admin simply stores the value for accessing the required database in Consul as the target host for all database queries.
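One way to route DNS queries to Consul inside a VM is sketched below. It assumes, purely for illustration, that dnsmasq acts as the local resolver and that the Consul agent serves DNS on its default port 8600; the file name is made up.

```shell
#!/bin/sh
# Sketch only: assumes dnsmasq as the local resolver and Consul DNS on port 8600.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

cat > "$OUT_DIR/10-consul.conf" <<'EOF'
# Forward every lookup below the .consul domain to the local Consul agent.
server=/consul/127.0.0.1#8600
EOF

echo "wrote $OUT_DIR/10-consul.conf"
```

With this file in /etc/dnsmasq.d/, a client could simply connect to a name such as mariadb.service.consul (assuming the database was registered under the service name mariadb) and would always reach an instance that Consul considers healthy.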

When setting up a virtual environment, however, this scenario means extra work. In the beginning, you have to think the setup through and then implement it accordingly (e.g., when creating a template for the cloud orchestration solution). This includes providing the database service in Consul with appropriate service checks right from the start and building the database in the background so that several front ends are available (e.g., with MariaDB and Galera).

Integrating Consul with External Services

Even if you have configured your virtual Consul-based environment perfectly for high availability and secured it against failure, you still have one problem left to solve: seamless scalability. Although seamless scalability is not impossible, achieving it might require you to rethink various points.

Many clouds offer Load Balancing as a Service (LBaaS). On the one hand, this is practical because the cloud architect no longer has to worry about the load balancer; instead, the cloud provisions it completely automatically. On the other hand, the load balancer system in such a setup is usually hidden from the eyes of the user and can only be configured via an API, although it is usually just a normal Linux VM under the hood.

If you want to use Consul to build an automatically scalable and monitored setup, it makes sense to take the somewhat painstaking path of building your load balancer as a separate VM (e.g., with HAProxy). The part that automatically starts new VMs when the system load is correspondingly high remains in the cloud.

If the newly started VMs automatically become part of the Consul cluster with the additional web front ends, then HAProxy can be built using the Consul template function so that it automatically transfers new VMs into the load balancer, as described in detail online [7].
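The HAProxy part of such a setup might be sketched as follows. The service name web, the frontend and backend names, the timeouts, and the reload command are assumptions for illustration; the template relies on consul-template's service function, which by default returns only instances with passing health checks.

```shell
#!/bin/sh
# Sketch only: service name "web", section names, timeouts, and reload command are assumptions.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# HAProxy configuration template: one server line per healthy "web" instance.
cat > "$OUT_DIR/haproxy.cfg.ctmpl" <<'EOF'
defaults
    mode http
    timeout connect 5s
    timeout client  30s
    timeout server  30s

frontend www
    bind *:80
    default_backend web

backend web
    balance roundrobin{{ range service "web" }}
    server {{ .Node }} {{ .Address }}:{{ .Port }} check{{ end }}
EOF

# Keep the configuration in sync with Consul and reload HAProxy after each change.
run_template() {
    consul-template \
        -template "$OUT_DIR/haproxy.cfg.ctmpl:$OUT_DIR/haproxy.cfg:systemctl reload haproxy"
}
```

When the cloud starts an additional web VM that registers itself with Consul, consul-template rewrites haproxy.cfg and triggers the reload, so the new back end receives traffic without manual intervention.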

The big advantage of such a solution is now obvious: A setup based on this principle can be used in virtually any cloud, because it does not depend on cloud-specific functions.

Conclusions

Monitoring in the cloud works differently from conventional monitoring, at least with regard to VMs. In the case of bare metal, things haven't changed that much: The admin still wants to know when individual servers fail, to take quick countermeasures. The combination of Consul, Prometheus, and Grafana offers ideal conditions (Figure 5). In the virtual environment, however, the focus has shifted to the availability of services rather than of individual systems.

Consul is good for both scenarios: At the data center, it supports automated monitoring of huge networks of systems with manageable overhead. In virtual environments, Consul is the link between the VMs running services and the users accessing those services.