Efficiently planning and expanding the capacities of a cloud

Cloud Nine

Planning used to be a tedious business for data center operators: A long time passed between a customer's initial idea and the point at which the servers were in the rack and productive. Today the cloud has shortened this time span drastically.

The cloud provider must control the infrastructure in the data center to the extent that the platform can expand easily and quickly (i.e., hyperscalability), without major upheavals and their associated costs. Even before installing the first server, you would do well to think about various scalability factors, taking into account the way in which customers will want to use the cloud resources and your ability to enable capacity expansion effectively and prudently.

Although the question of how the available capacity in the data center can be used as efficiently as possible is important, another critical question is how cloud admins collect and interpret metric data from their platform to identify the need for additional resources at an early stage.

In this article, I examine the ingredients for efficient capacity planning in the cloud and explain how best to implement them in day-to-day operations. The appropriate hardware makes horizontal scalability easy, and the correct software allows metering and automation.

Farsighted Action

Cloud environments reduce administrative overhead to such an extent that the operator can and must provide within seconds what formerly involved weeks of lead time. The cloud provider must promise its customers access to virtually unlimited resources, which in turn means a huge amount of effort, both operationally and financially. This scenario only works if the provider keeps a certain buffer of reserve hardware at the data center. However, providers cannot know which customer will want to use which resources, or when. The question of how cloud capacities can be managed and planned is therefore one of the most important for cloud providers.

New Networking

Networking plays a central role. In the classic setup, each environment is planned carefully, and its scalability limits are fixed right from the start. Anyone planning a web server setup, for example, expects to have to replace the entire platform after five years anyway, because the guarantee on the installed servers will expire. The admins plan these installations with a fixed maximum size and design the necessary infrastructure, such as the network, to match.

Cloud admins don't have this luxury. A cloud must be able to grow massively within a day or week, even beyond the limits of the original planning. Besides, a cloud has no expiration date: Because cloud software can usually scale horizontally seamlessly, old servers can be replaced continuously by new ones.

If you look at existing networks in conventional setups, you usually come across a classic tree or hub-and-spoke structure, wherein one or (with a view to reliability) two switches are connected by appropriately fast lines to other switches, to which the nodes of the setup are then attached. This network design is hardly suitable for scale-out installations, because the farther the admin expands the tree structure downward, the less bandwidth reaches the individual nodes.

Better Scaling

If you are planning a cloud, you will want to rely on a Layer 3 leaf-spine architecture from the start because of its scalability. This layout differs from the classic approach primarily in that the switches are dumb packet forwarders without their own management functions.

Physically, the network is divided into layers: The leaf switches are installed in each rack and connect the servers there. Each leaf switch is connected to the spine switches, which form the backbone of the fabric, via an arbitrary number of paths. The connection to the outside world is established through routers.

In this scenario, packets no longer find their way through the network according to Layer 2 protocols but are routed between hosts on the basis of the Border Gateway Protocol (BGP). Each switch functions as a BGP router, and each host also speaks BGP.

If horizontal scaling is required, all levels of the setup can easily be extended by adding new switches, even during operation. New nodes simply use BGP to inform others of the route via which they can be reached. This routing of the switches means that even hosts that are not on the same network can communicate with each other without problem. Therefore, if you want to roll out 30 new racks in your cloud ad hoc, you can do so. In contrast, classical network designs quickly reach their limits.

However, BGP can currently only run on switches that offer the feature as an expensive part of their own firmware – or that support Cumulus Linux. Mellanox sets a good example by offering future-proof 100Gb switches with Cumulus support. (See a previous ADMIN article [1] about the Layer 3 principle for more information.)

CPU and RAM

Capacity planning for CPU and RAM resources puts the admin under fire from a completely different angle. First, overcommitting these two assets is an extremely sensitive issue. In the case of RAM, overcommitting is ruled out from the outset: KVM offers this option, but under no circumstances do you want the out-of-memory killer of the Linux kernel to take down virtual machines (VMs) in the cloud because the host has run out of RAM. Overcommitting available CPUs works better, but don't overdo it: A single level of overcommitting, that is, two virtual CPUs per hardware thread, is usually the best option.

The relationship between CPU and RAM is of much greater importance for capacity planning in the cloud. If you look at the offer for most clouds, you will notice that a fixed number of CPUs are often assigned a certain amount of RAM. For example, if you commit a virtual CPU, you have to add at least 4GB of RAM, because it is the smallest available option.

Cloud providers have not switched to this system because they want to annoy their customers. Rather, the aim is to keep waste as low as possible on the supplier side. For example, a state-of-the-art server with two Xeon E5-2690 processors offers a total of 14 CPU cores and 28 threads. Systems like this are usually equipped with 256 or 512GB of RAM. As a result of overcommitting CPU resources, a total of 56 virtual CPU cores are available, of which the provider normally passes 50 on to its customers.

If you assume the ratio of CPU to RAM provided through corresponding hardware profiles is 1:4, a customer using 12 virtual CPU cores must add at least 48GB of memory. If four of these VMs are running on the system, they occupy 48 of the 50 available virtual CPUs, and only a small remainder of CPU and RAM is left to be divided between any smaller profiles.

If you do not follow the 1:4 rule of thumb and offer an arbitrary ratio instead, a customer with especially CPU-heavy tasks could commit a VM that needs 32 virtual CPU cores but only 16GB of RAM. If more customers of this type end up on the same system, most of its RAM would simply sit idle: It would be virtually impossible for the cloud to start additional VMs there because of the lack of available virtual CPUs.
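The effect of the ratio on waste can be checked with a few lines of arithmetic. The following Python sketch fills one host with identical VMs and reports what is left over; the host figures (50 sellable vCPUs, 256GB of RAM) are taken from the example above, whereas the `Flavor` class, the `pack` function, and the flavor names are purely illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flavor:
    """Hypothetical hardware profile offered to customers."""
    name: str
    vcpus: int
    ram_gb: int


def pack(host_vcpus, host_ram_gb, flavor):
    """Return (vm_count, leftover_vcpus, leftover_ram_gb) when the host
    is filled with identical VMs of the given flavor."""
    count = min(host_vcpus // flavor.vcpus, host_ram_gb // flavor.ram_gb)
    return (
        count,
        host_vcpus - count * flavor.vcpus,
        host_ram_gb - count * flavor.ram_gb,
    )


# Host figures from the example in the text.
HOST_VCPUS, HOST_RAM_GB = 50, 256

balanced = Flavor("balanced-1:4", vcpus=12, ram_gb=48)
cpu_heavy = Flavor("cpu-heavy", vcpus=32, ram_gb=16)

for flavor in (balanced, cpu_heavy):
    count, cpus_left, ram_left = pack(HOST_VCPUS, HOST_RAM_GB, flavor)
    print(f"{flavor.name}: {count} VMs, "
          f"{cpus_left} vCPUs and {ram_left}GB of RAM left over")
```

With the CPU-heavy profile, a single VM fits, a second one of the same size does not, and 240GB of RAM remains that can only be sold to VMs sharing the 18 remaining vCPUs.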

The cloud provider has to keep this waste as low as possible; otherwise, data center capital is tied up. The ratio of 1:4 for CPU and RAM is appropriate in most cases, but it is not carved in stone. If you can foresee for your own cloud that other workloads will occur regularly, you can of course adjust the values accordingly. Ultimately, you should order hardware to suit the foreseeable workload.

Collecting Data

If the cloud is well planned and built, you can move on to the trickiest part of capacity planning in the cloud – gazing into your crystal ball to identify the need for new hardware at an early stage. From a serious point of view, it is impossible to predict every resource requirement in good time. A customer that rents a platform overnight and needs 20,000 virtual CPUs in the short term would cause most cloud providers on the planet to break a sweat, at least for a short time.

However, such events are the exception rather than the rule, and the growth of a cloud can at least be roughly predicted if the admin collects the appropriate metrics and evaluates them adequately. You should ask: Which tools are available? Which metrics should responsible planners collect? How do you evaluate the data?
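As a rough illustration of what "roughly predicted" can mean, the following Python sketch fits a straight line to past usage and estimates when a threshold will be crossed. All numbers, the function names, and the choice of a linear trend are assumptions for the sake of the example; real growth is rarely this regular, so the result is a first estimate at best.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def day_of_crossing(threshold, days, used):
    """Estimate the day on which usage reaches the threshold.
    Returns None if usage is flat or shrinking."""
    slope, intercept = linear_fit(days, used)
    if slope <= 0:
        return None
    return (threshold - intercept) / slope


# Hypothetical weekly samples of vCPUs in use.
days = [0, 7, 14, 21, 28]
vcpus_used = [4000, 4140, 4280, 4420, 4560]

# Hypothetical platform: 8,000 sellable vCPUs, reorder at 75 percent usage.
crossing = day_of_crossing(0.75 * 8000, days, vcpus_used)
print(f"75 percent usage expected around day {crossing:.0f}")
```

Subtracting the expected lead time for ordering and installing hardware from the estimated day gives a first idea of when the order has to go out.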

Collecting metrics works differently in clouds than in conventional setups. For example, given a cloud of 2,000 physical nodes, with 200 values per system to be collected every 15 seconds, you would net 1,600,000 measured values per minute, which is a significant volume.
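The figure is easy to reproduce and to adapt to your own platform. A minimal sketch, with a hypothetical function name:

```python
def samples_per_minute(nodes, values_per_node, interval_s):
    """Number of measured values the metrics store has to ingest per minute."""
    return nodes * values_per_node * 60 // interval_s


per_minute = samples_per_minute(nodes=2000, values_per_node=200, interval_s=15)
per_day = per_minute * 60 * 24
print(f"{per_minute:,} values per minute, {per_day:,} per day")
```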

Metering solutions such as PNP4Nagios attached to monitoring systems can hardly cope with such amounts of data, especially if classical databases such as MySQL are responsible for storing the metric data in the background.

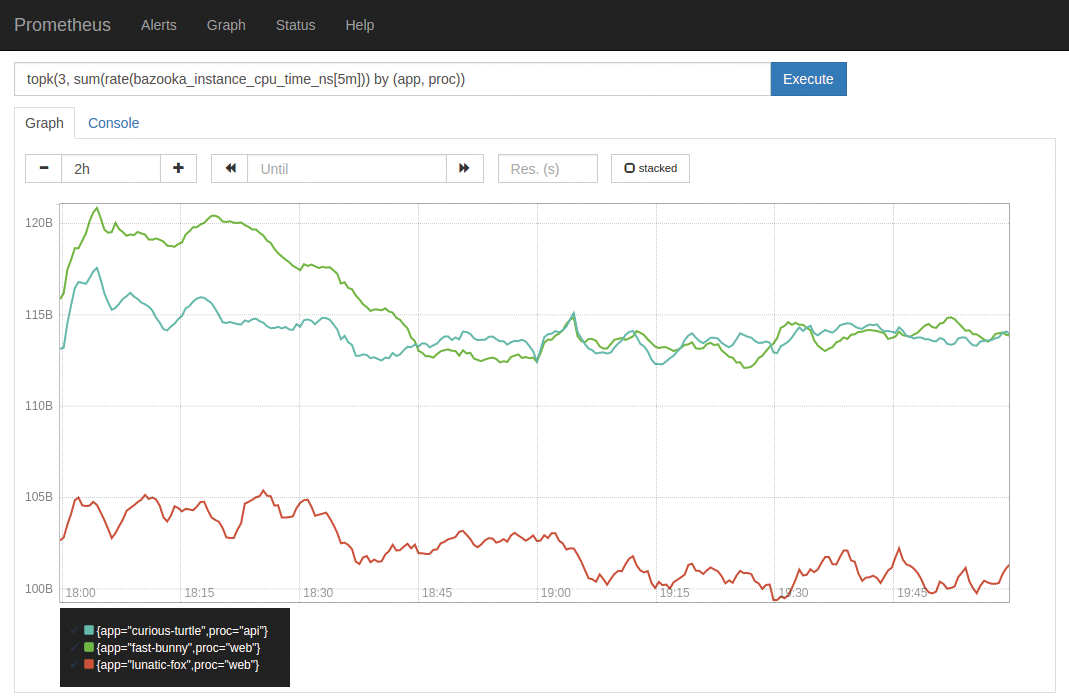

Solutions like Prometheus or InfluxDB, whose design is based on the time series database (TSDB) concept, work far better. Monitoring can also be treated as a byproduct of data collection. For example, if the number of Apache processes on a host is zero, the metric system can be configured to trigger an alarm. Both Prometheus and InfluxDB offer this function.

The Right Stuff

If you rely on Prometheus or InfluxDB for monitoring and trending, the next challenge is just around the corner: What data should you collect? First the good news: Both Prometheus and InfluxDB offer a monitoring agent (Node Exporter and Telegraf, respectively) that regularly reads the basic vital values of a system, even in the standard configuration. These tools allow you to collect values such as CPU load, RAM usage, and network traffic without additional overhead.

In most cases, you can find additional agents for the cloud: An OpenStack exporter for Prometheus [2], for example, docks onto the various OpenStack API interfaces and collects known OpenStack data (e.g., the total number of virtual CPUs in use), which also finds its way into the central data vault.

Therefore, if you rely on TSDB-based monitoring, you can collect the values required for metering with both Prometheus and InfluxDB without much additional overhead (Figure 1). Cloud-specific metrics are read by corresponding agents; one of the most important parameters is the time it takes the cloud APIs to respond.

The prettiest data treasure trove is of no use if the information it contains is not evaluated meaningfully. Out of habit, many admins reach for the average: If API requests are processed in 50ms on average, the situation appears quite satisfactory.

However, this assumption is based on the mistaken belief that the use of the cloud is distributed evenly over 24 hours. Experience has shown that this is not the case. Activity during the day is usually much higher on a platform than at night. If API requests are processed very quickly at night but take forever during the day, the end result can still be a satisfactory average, but one that is completely useless for explaining the experience of the daytime user.

Similarly, if a particularly large number of CPUs (or a large amount of RAM or network capacity) is free at night, but the platform is running at full capacity during the day, customers who want to start VMs during the day do not benefit. If enough bandwidth is available at night, but the network is completely saturated during the day, the frustration on the customer side increases.
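A tiny Python sketch with made-up numbers shows how the average hides the problem. The hourly latency values are hypothetical and chosen so that the 24-hour mean comes out at the 50ms mentioned above.

```python
from statistics import mean

# Hypothetical hourly API latencies in ms:
# 12 quiet night hours and 12 busy daytime hours.
night = [10] * 12
day = [90] * 12

print(f"24-hour mean: {mean(night + day):.0f}ms")
print(f"night mean:   {mean(night):.0f}ms")
print(f"day mean:     {mean(day):.0f}ms")
```

The overall mean looks acceptable, although every daytime request in this example takes almost twice as long.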

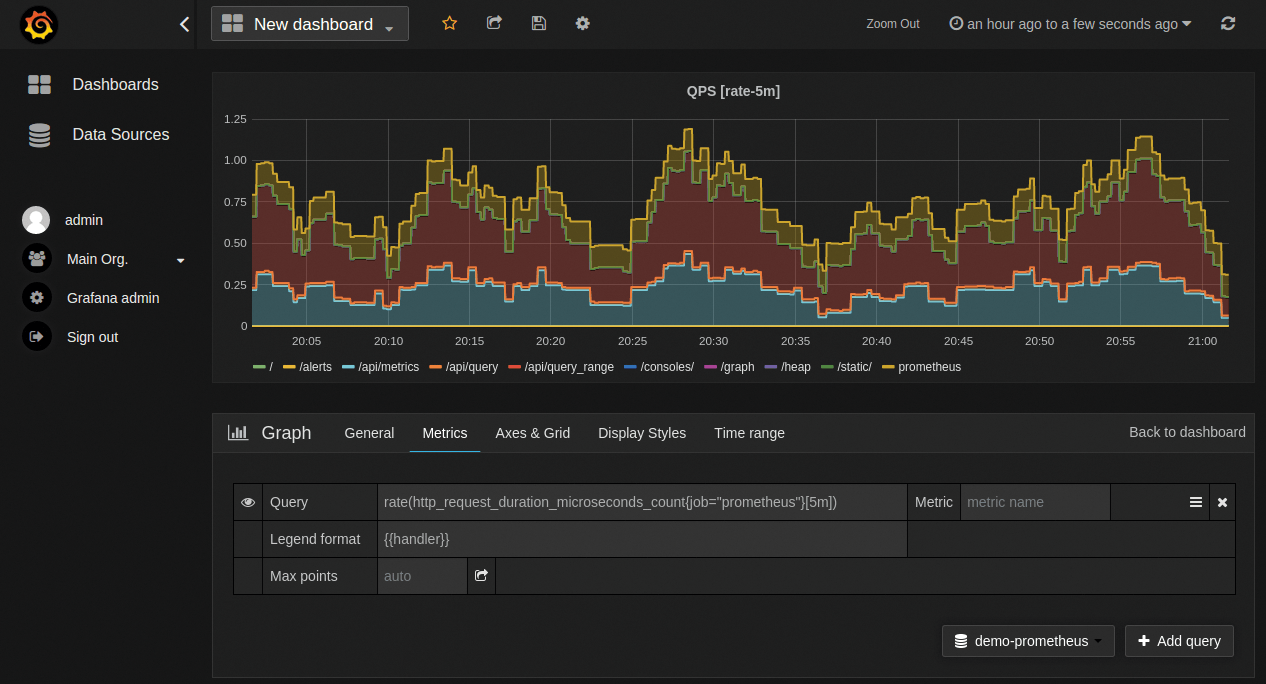

Working with Percentiles

Percentiles are much more helpful in capacity planning than the average. For example, the 99th percentile of the time required to process an API request is much more meaningful than an average value, because it tells you that 99 percent of all requests completed within that time or faster. Monitoring solutions such as Prometheus and InfluxDB offer a function to calculate the percentiles of a dataset.

Strictly speaking, the median is also a percentile: the 50th percentile of the total frequency distribution. Percentiles work like this: First, you create a table of all values for a specific event. For example, if you are interested in the time required to process an API request and you have 100 measured values for this parameter, you would create a table with all 100 values in ascending order. If you want the 90th percentile, you take the first 90 percent of the entries and, of those, the highest value. That's the 90th percentile. This works analogously with all percentiles. Accordingly, you can tune the platform such that this value improves.
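The procedure just described (often called the nearest-rank method) can be sketched in a few lines of Python. The function name and the sample data are assumptions for illustration; the built-in functions of a monitoring system may interpolate between values and can therefore return slightly different results.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile: sort ascending, take the first p percent
    of the entries, and return the highest value among them."""
    if not values:
        raise ValueError("no values given")
    if not 0 < p <= 100:
        raise ValueError("p must be greater than 0 and at most 100")
    ordered = sorted(values)
    # 1-based position of the last entry within the first p percent
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical sample: 100 API response times from 1ms to 100ms.
latencies_ms = list(range(1, 101))

for p in (50, 90, 99):
    print(f"{p}th percentile: {percentile(latencies_ms, p)}ms")
```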

By the way, Grafana [3] is recommended for displaying the values from Prometheus and InfluxDB; Grafana can run queries directly on the database and thus also use the built-in percentile function (Figure 2).

Indirectly, percentiles also play a role in capacity planning. On the basis of the percentiles for certain factors (e.g., the average bandwidth available to a VM, the time taken to process API calls, or available RAM), you can see the extent to which the cloud is already running at full capacity. Everyone can define their own minimum and maximum values; however, it has proved advantageous to have at least 25 percent of CPU and RAM capacity in reserve to serve major customers.
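A reserve rule of this kind is simple to express in code. The following sketch uses the 25 percent figure from the text as its default; the function names and the usage numbers are hypothetical, and in practice the "used" values would come from a high percentile of daytime usage rather than from an average.

```python
def reserve_ratio(total, used):
    """Fraction of the capacity that is still free."""
    return (total - used) / total


def needs_expansion(total, used, min_reserve=0.25):
    """True when the free share has dropped below the desired reserve."""
    return reserve_ratio(total, used) < min_reserve


# Hypothetical platform totals and daytime peak usage.
resources = {
    "vcpus": (5000, 4100),
    "ram_gb": (25600, 17900),
}

for name, (total, used) in resources.items():
    verdict = "order hardware" if needs_expansion(total, used) else "ok"
    print(f"{name}: {reserve_ratio(total, used):.0%} free -> {verdict}")
```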

Achieving Hyperscalability

The third and final aspect of capacity planning is how well a data center can scale. From the administrator's point of view, it does not help if you have recognized in good time that you need more hardware but are then unable to make the hardware available within an acceptable period of time.

Adding resources to a cloud is usually a multiphase process. Phase 0 is the formulated need (i.e., determining that more capacity is necessary and to what extent). Next, you determine the hardware: On the basis of the questions that play a role in capacity management, you then define which servers you need and in what configurations before ordering them. Even here you can expect considerable delays, especially in larger companies, because when it comes to hundreds of thousands or millions of dollars, pounds, or euros, long approval chains mostly have to be observed.

As the admin, you only get back into the driver's seat after the desired hardware has arrived. At this point, it must be clear which server is to be installed where and in which rack so that the responsible people at the data center can start installing the servers and the corresponding cabling immediately.

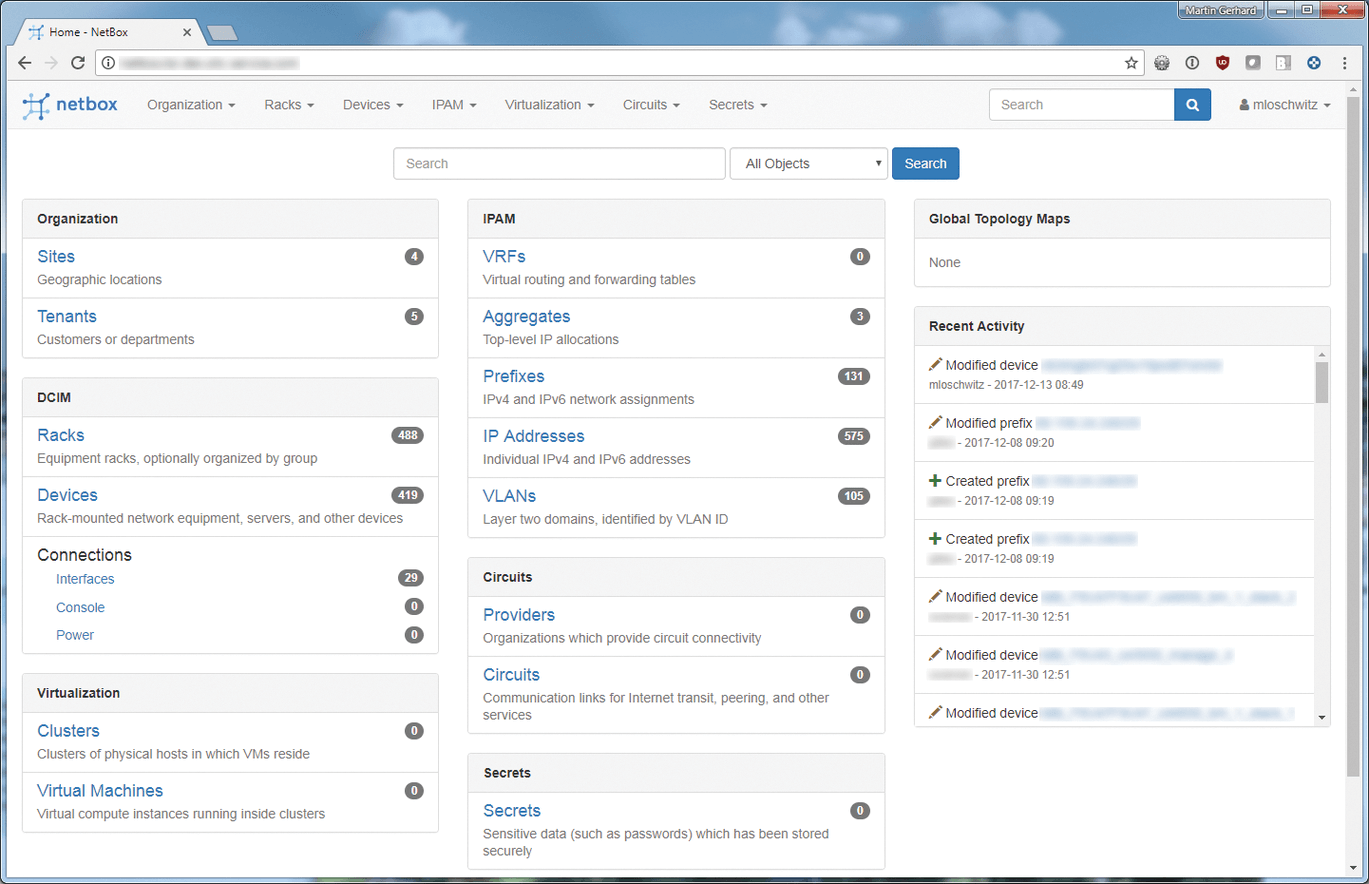

Of course, topics such as redundancy and the availability of electricity also play an important role. Such a setup can be planned and designed particularly efficiently if you have a tool at your disposal that combines Data Center Infrastructure Management (DCIM) and IP Address Management (IPAM): From this central source of knowledge, data center personnel can extract all the information they need when they need it. NetBox [4] is an example of a solution that fits this requirement very well. In fact, it was developed for exactly this purpose (Figure 3).

Essential Automation

After the installation of additional hardware in the rack, it's time to install the operating system and cloud solution on the new systems. This process shows how clearly a conventional setup differs from a modern cloud: In the old world, the operating system was usually installed manually, because the effort was nothing compared with the effort that developing a completely automated installation routine would have meant.

In the cloud, however, this approach obviously no longer works. Extending a platform can easily mean 300 or 400 new servers at the same time, so a manual installation would be extremely tedious and error prone. In clouds, therefore, the goal is to press an On button and have all subsequent work completely automated. Ultimately, the requirement is that the new server automatically becomes a member of the existing cloud network, and the cloud solution you use automatically starts placing new customer VMs on it.

Correct Infrastructure Planning

To make the concept work, some preliminary work is necessary right at the beginning of the cloud construction process, including providing the central infrastructure components that are necessary for the automatic installation of nodes. Typical services that belong to this boot infrastructure are NTP, DHCP, TFTP, HTTP (for providing a local package mirror of your own standard distribution), and DNS for local name resolution. DHCP might make you frown, but experience shows that it is practical in large setups because, in an emergency, it allows you to reconfigure the entire network without changing each host individually.

With NetBox, you can use a trick: By definition, NetBox has at least one IP address for each device on the platform; otherwise, the IPAM component of the platform would be useless. Because NetBox has its own API and also provides a Python library for direct access to the API, NetBox and DHCP can be connected easily. A script based on Pynetbox [5] retrieves a list of all network interfaces, including their MAC addresses and the corresponding IP addresses, from NetBox and generates a configuration file for the DHCP daemon, dhcpd.
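Such a script could look roughly like the following sketch. The `render_dhcpd_hosts` function only turns a list of (name, MAC, IP) tuples into host declarations for dhcpd and works without NetBox. The `fetch_records` function shows one possible way to obtain that list with Pynetbox; the attribute and filter names used there are assumptions that depend on the NetBox version and should be checked against your own installation, as should the naming scheme for the host entries.

```python
import re


def render_dhcpd_hosts(records):
    """Render dhcpd host declarations from (name, mac, ip) tuples."""
    stanzas = []
    for name, mac, ip in sorted(records):
        # Keep the label free of characters such as "/" in interface names.
        label = re.sub(r"[^A-Za-z0-9_-]", "-", name)
        stanzas.append(
            f"host {label} {{\n"
            f"  hardware ethernet {mac.lower()};\n"
            f"  fixed-address {ip};\n"
            f"}}\n"
        )
    return "\n".join(stanzas)


def fetch_records(url, token):
    """Collect (name, mac, ip) tuples from NetBox.

    Assumption: field and filter names as used here match the NetBox
    release in use; adjust them if your API differs.
    """
    import pynetbox  # imported here so the renderer works without it

    nb = pynetbox.api(url, token=token)
    records = []
    for iface in nb.dcim.interfaces.all():
        if not iface.mac_address:
            continue
        for ip in nb.ipam.ip_addresses.filter(interface_id=iface.id):
            # NetBox stores addresses in CIDR notation, e.g. 10.0.0.11/24.
            records.append((
                f"{iface.device.name}-{iface.name}",
                str(iface.mac_address),
                str(ip.address).split("/")[0],
            ))
    return records


# Hypothetical sample data instead of a live NetBox query.
example = [("node01-eth0", "AA:BB:CC:00:00:01", "10.0.0.11")]
print(render_dhcpd_hosts(example))
```

The sketch creates one host entry per address; an interface with several addresses would need a more careful naming scheme, because dhcpd expects unique host names.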

In the end, you have a pseudo-DHCP solution: Every device gets its IP addresses via DHCP, but because the configuration of the DHCP server is based on static data from NetBox, the IP is not dynamic.

Automating the Installation

NetBox is also great for automating the installation of the operating system: If you want to generate host-specific Kickstart files, you can also do so based on information from NetBox, which is easily available via Pynetbox.
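The same data can feed a template for host-specific Kickstart files. The following sketch is deliberately incomplete: It renders only a few network-related lines, and a real Kickstart file needs further sections (partitioning, root password, packages) that depend on your distribution. The template, the function name, and the mirror URL are hypothetical, and the host name and MAC address would come from NetBox in practice.

```python
from string import Template

# Minimal Kickstart fragment; not a complete installation profile.
KICKSTART = Template("""\
url --url=$mirror
network --bootproto=dhcp --device=$mac --hostname=$hostname
reboot
""")


def render_kickstart(hostname, mac, mirror):
    """Fill the Kickstart fragment with host-specific values."""
    return KICKSTART.substitute(hostname=hostname, mac=mac, mirror=mirror)


# Hypothetical values for one new server.
print(render_kickstart(
    hostname="node01",
    mac="aa:bb:cc:00:00:01",
    mirror="http://mirror.example.internal/os/",
))
```

Served over HTTP by the boot infrastructure, one such file per host lets the installer pick up its individual settings without manual intervention.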

By cleverly combining features that many distributions have been offering for years and building the necessary infrastructure, you create a working automation environment that makes the simultaneous installation of hundreds of servers child's play. All the work that can be automated is handled automatically by the platform.

Even this scenario has potential for optimization: For example, if you can obtain information about new servers from the manufacturer in advance, you can integrate them automatically into NetBox via Pynetbox – provided the details are available in machine-readable form. The fewer manual steps required to transform new sheet metal into functioning servers for the cloud, the more cost-effective it is to go live – and the closer you get to the state of hyperscalability. If you want to scale clouds, you can't avoid following this path; otherwise, efficient capacity planning for a cloud is virtually impossible.