OpenShift by Red Hat continues to evolve

Live Cell Therapy

My last look at OpenShift [1] was mid-year 2017. The sobering result of a close inspection: The product, which Red Hat was pushing like a reinvention of the wheel, was actually no more than a commercial distribution of the Kubernetes container orchestrator [2].

However, things have changed. In version 3.0, Red Hat shifted the product onto the new Kubernetes underpinnings and has since made continuous improvements in many parts of the software. What features will administrators running an OpenShift cloud benefit from most? What is the greatest benefit for users? From which innovations will developers reap the greatest benefits? I'll answer those questions in this article.

New Brooms

Since version 3.4, Red Hat has noticeably changed the names of the individual products in the OpenShift series. OpenShift serves as an umbrella term for several products with different versions. OpenShift Origin is the original open source product on which the commercial variants of OpenShift are based. The open source version is of interest if you don't want to pay Red Hat; in exchange, you do not receive any support.

If you need support, you can choose between Red Hat's public OpenShift installation, a kind of public cloud that Red Hat hosts on behalf of its customers, and a private OpenShift installation in your own data center, previously called OpenShift Enterprise but now renamed OpenShift Container Platform.

However, Red Hat is quick to point out that nothing has changed except the product names. The motivation for the renaming is therefore likely to be that Red Hat wants to mesh OpenShift more meaningfully with other components in its portfolio, including improved integration with CloudForms, which I discuss later in this article.

Kubernetes and Docker Updates

Because the OpenShift release cycle is quite short, Red Hat keeps pace with the development of Kubernetes and Docker [3]. The resulting innovations are not immediately visible to users, because the purpose of OpenShift is to offer an integrated product with a GUI and all the modern conveniences. As in the past, OpenShift runs almost exclusively on Red Hat Enterprise Linux (RHEL) or CentOS systems.

If you use a commercial OpenShift version rather than OpenShift Origin, you have to use Red Hat's own Linux. You can choose between standard RHEL and the minimal RHEL Atomic Host. No matter which variant you choose, OpenShift 3.6 (the latest version when this article went to press) requires an up-to-date Docker from the RHEL Extras repository on the hosts.

Simpler Installation

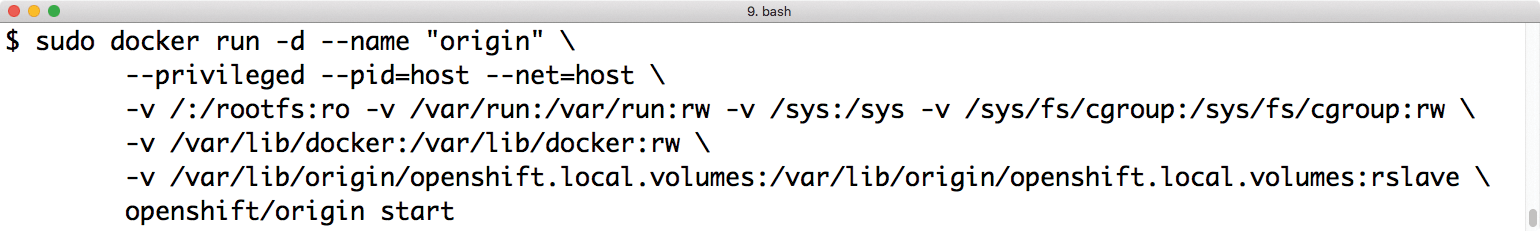

Red Hat continues to offer two ways to launch your own OpenShift deployment: a conventional RPM-based installation on RHEL and a prebuilt OpenShift that runs as a Docker container. In the latest OpenShift versions, however, Red Hat has simplified and accelerated the container-based setup significantly. Based on a Docker image from Red Hat's repository on Docker Hub, a complete, working OpenShift environment can now be rolled out with a single command in a few seconds [4].

However, such an all-in-one setup is only of limited suitability for production operations. Red Hat readily admits this and recommends the Ansible-based RPM installation for larger, more complex setups. For developers who want to experiment and test in a clean OpenShift environment, though, the new deployment mechanism is a real blessing.

In short: The RPM variant is well suited for large setups, whereas people who only want to test OpenShift or base their development on it are well served by the containerized installation (Figure 1).

Authentication with LDAP

User administration is not one of the most popular topics among admins, because it is very complex and touches not only IT, but many other parts of a company. Ideally, sales, billing, and technology all use the same data when it comes to managing the users of commercial IT services.

At the time of my last look, OpenShift already offered an impressive list of mechanisms for authentication. The classic approach, used for user authentication in most setups, is OAuth.

The challenge is that the user data that OAuth validates has to come from somewhere. An LDAP directory is a typical source of such data. Whether you have a private OpenShift installation involving only internal employees or a public installation that is also used by customers, LDAP makes it easy to manage this data.

The first release of OpenShift 3 already offered LDAP as a source of user data. However, OpenShift 3.0 could only import user accounts from LDAP; existing group assignments were simply ignored. What sounds inconsequential could potentially derail a project: Because OpenShift assigns permissions internally by group, combining LDAP with OpenShift 3.0 meant maintaining two directories, with the user list in LDAP and the group memberships in OpenShift.

The developers have put an end to this nonsense: OpenShift can now synchronize the groups created in LDAP with its own group directory. In practical terms, you just need to create an account once in LDAP and add it to the required groups for it to have the appropriate authorizations for the desired projects in OpenShift.
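Conceptually, such a sync reads the group entries from LDAP, resolves each member DN to a user entry, and writes one OpenShift group per LDAP group. The following Python is a minimal in-memory sketch of that mapping, not OpenShift's implementation; the directory contents, the DNs, and the choice of `cn` as the group name and `uid` as the user name are assumptions for illustration. In OpenShift 3.x, the real work is done by `oc adm groups sync` together with an `LDAPSyncConfig` file, according to the OpenShift documentation.

```python
from typing import Dict, List

# Hypothetical directory content: user entries and group entries keyed by DN.
LDAP_USERS = {
    "uid=alice,ou=users,dc=example,dc=com": {"uid": "alice"},
    "uid=bob,ou=users,dc=example,dc=com": {"uid": "bob"},
}
LDAP_GROUPS = {
    "cn=admins,ou=groups,dc=example,dc=com": {
        "cn": "admins",
        "member": ["uid=alice,ou=users,dc=example,dc=com"],
    },
    "cn=developers,ou=groups,dc=example,dc=com": {
        "cn": "developers",
        "member": [
            "uid=alice,ou=users,dc=example,dc=com",
            "uid=bob,ou=users,dc=example,dc=com",
            "uid=carol,ou=users,dc=example,dc=com",  # no matching user entry
        ],
    },
}


def sync_groups(groups: dict, users: dict, name_attr: str = "cn",
                member_attr: str = "member",
                user_attr: str = "uid") -> Dict[str, List[str]]:
    """Map each LDAP group to an OpenShift-style group of user names.

    Member DNs without a matching user entry are simply skipped in this sketch.
    """
    result: Dict[str, List[str]] = {}
    for entry in groups.values():
        names = [users[dn][user_attr]
                 for dn in entry.get(member_attr, []) if dn in users]
        result[entry[name_attr]] = sorted(names)
    return result


for group, members in sync_groups(LDAP_GROUPS, LDAP_USERS).items():
    print(group, members)
```

With this model, granting someone access to a project only requires a change to the `member` attribute in LDAP; the next sync run carries it over to OpenShift.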

Image Streams and HA

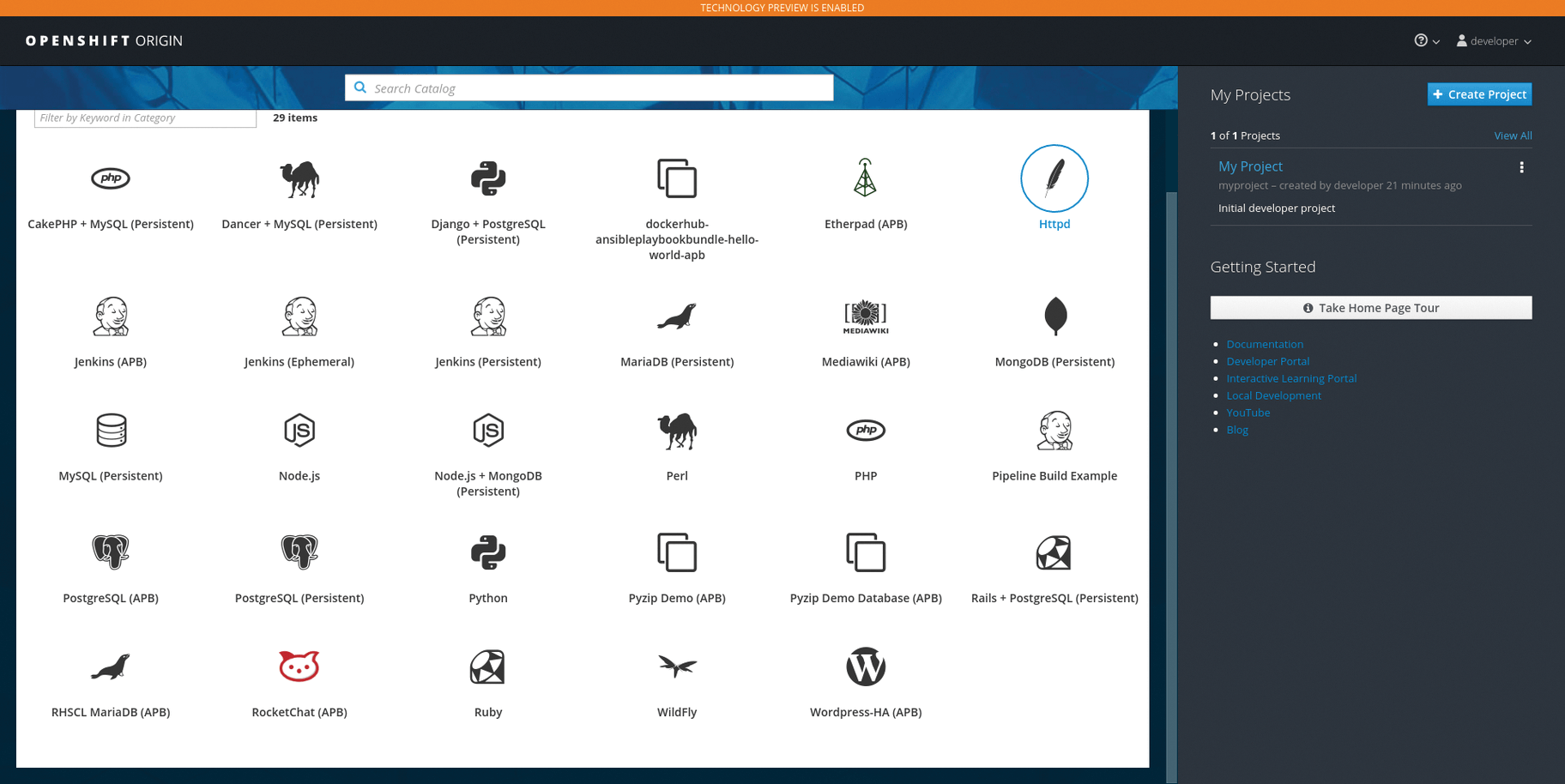

Various Platform-as-a-Service (PaaS) applications will also be easier to install in the future. OpenShift takes its prebuilt images from image streams, and the developers have considerably expanded these in current OpenShift versions, so that applications such as Rocket.Chat or WordPress can now be rolled out automatically (Figure 2).

As beautiful as the colorful world of cloud-ready applications and stateless design may be, these solutions are still subject to the elementary laws of IT. Kubernetes, the engine of OpenShift, comes with a few components that can become a genuine single point of failure if they malfunction. One example is the Kubernetes master: It runs Etcd, the central key-value store that contains the entire platform configuration. Central components such as the scheduler and the Kubernetes API also run on the master node. In short, if the master fails, Kubernetes is in big trouble.

Several approaches can eliminate this problem. The classical method would be to make the central master components highly available with the Pacemaker [5] cluster manager, so that the required services restart on another node if a master node fails. In previous versions of OpenShift, Red Hat pursued precisely this concept, not least because most of the Pacemaker developers are on its payroll.

However, this solution adds a great deal of unnecessary complexity, because Kubernetes has implicit high-availability (HA) functions. For example, the Etcd key-value store has its own cluster mode and copes even with large setups. The situation is similar for the other components, which, thanks to Etcd, can easily run on many hosts at the same time.
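Etcd's cluster mode is based on the Raft consensus protocol, in which a write only succeeds once a majority (the quorum) of members has accepted it. The short Python calculation below shows what that means for the number of master nodes; it is plain majority arithmetic, not code taken from Etcd or OpenShift.

```python
def quorum(members: int) -> int:
    """Smallest majority of an Etcd cluster with the given number of members."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Number of members that may fail while a majority is still reachable."""
    return members - quorum(members)


for n in range(1, 8):
    print(f"{n} members: quorum {quorum(n)}, "
          f"tolerates {fault_tolerance(n)} failure(s)")
```

Going from three to four members raises the quorum without tolerating an additional failure, which is why HA setups usually run three or five Etcd members.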

The OpenShift developers have probably also noticed that it makes more sense to use these implicit HA capabilities. More recent OpenShift versions only use the native method for HA; Pacemaker is completely out of the equation.

One small restriction is that this change does not affect components that are part of the setup but not part of Kubernetes. For example, if you run a Pacemaker-based HA cluster with HAProxy to distribute the load across the various Kubernetes API instances, you still need Pacemaker for that. Within Kubernetes itself, however, Pacemaker is a thing of the past.

Persistent Storage

For admins of large setups, storage is just as unpopular a topic as authentication. Nevertheless, persistent storage is a requirement that must somehow be met in clouds, and OpenShift is ultimately nothing but a cloud. No matter how well the customer's setups are geared to stateless operation, at some point data has to be stored persistently. Even a PaaS environment with web store software requires at least one database with persistent storage.

However, building persistent storage for clouds is not as easy as for conventional setups, because classical approaches fail once setups reach a certain size. Several scalable storage solutions, such as GlusterFS [6] and Ceph [7], already exist, but they require appropriate support on the client side.

The good news for admins is that, in the past few months, the OpenShift developers have massively increased their efforts in the area of persistent storage. You can use the persistent volume (PV) framework to assign volumes to PaaS instances, and on the back end, the PV framework can now talk to a variety of different solutions, including GlusterFS and Ceph – both in-house solutions from Red Hat – and VMware vStorage or the classic NFS.
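To make the PV framework more concrete, the following Python sketch builds a PersistentVolume backed by NFS and a matching PersistentVolumeClaim as plain dictionaries and prints them as a JSON `List`, a format that `oc create -f` accepts just like YAML. The server name, export path, sizes, and object names are hypothetical placeholders; `ReadWriteMany` is chosen because NFS allows several nodes to mount a volume read-write.

```python
import json


def nfs_persistent_volume(name: str, server: str, path: str, size: str) -> dict:
    """PersistentVolume definition (created by the admin) backed by an NFS export."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteMany"],
            # Keep the data on the export when the claim is deleted.
            "persistentVolumeReclaimPolicy": "Retain",
            "nfs": {"server": server, "path": path},
        },
    }


def persistent_volume_claim(name: str, size: str) -> dict:
    """PersistentVolumeClaim (created by the user) requesting a given size."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical values; replace them with your own NFS server and export.
pv = nfs_persistent_volume("nfs-pv-01", "nfs.example.com", "/exports/pv01", "5Gi")
pvc = persistent_volume_claim("db-data", "5Gi")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}, indent=2))
```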

Of course, these volumes integrate seamlessly with OpenShift: Once the administrator has configured the respective storage back end, users can simply assign themselves storage volumes within their quotas. In the background, OpenShift then runs the containers with the matching Kubernetes pod definitions.
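Behind the scenes, Kubernetes binds each claim to a free volume that offers at least the requested capacity and all requested access modes, picking the smallest such volume so that large volumes are not wasted on small requests. The following Python is a simplified model of that matching step under stated assumptions: capacities are whole GiB, and storage classes, label selectors, and quotas are ignored. It is not the actual Kubernetes controller code.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Volume:
    name: str
    capacity_gi: int
    access_modes: Set[str]
    bound_to: Optional[str] = None  # name of the claim, once bound


def bind_claim(claim: str, request_gi: int, modes: Set[str],
               volumes: List[Volume]) -> Optional[Volume]:
    """Bind the claim to the smallest free volume that satisfies it, else None."""
    candidates = [
        v for v in volumes
        if v.bound_to is None
        and v.capacity_gi >= request_gi
        and modes <= v.access_modes  # every requested mode must be offered
    ]
    if not candidates:
        return None  # the claim stays pending until a suitable volume appears
    best = min(candidates, key=lambda v: v.capacity_gi)
    best.bound_to = claim
    return best


pool = [
    Volume("pv-small", 1, {"ReadWriteOnce"}),
    Volume("pv-large", 20, {"ReadWriteOnce", "ReadWriteMany"}),
    Volume("pv-medium", 5, {"ReadWriteOnce", "ReadWriteMany"}),
]
print(bind_claim("db-data", 2, {"ReadWriteOnce"}, pool).name)  # pv-medium
print(bind_claim("shared", 10, {"ReadWriteMany"}, pool).name)  # pv-large
print(bind_claim("huge", 50, {"ReadWriteOnce"}, pool))         # None
```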

Load Balancers and Routers

One challenge that OpenStack has regularly had to deal with, both as a virtualization solution and as cloud software, is integrating external appliances, such as firewalls or classic load balancers from Palo Alto Networks or F5 Networks [8], into the cloud. Because OpenShift builds a cloud and uses software-defined networking (SDN), at least to some extent, it faces the same problem: The physical appliance somehow has to be integrated into the customer's virtual network, even though that network is not visible in the underlay.

Using F5 as an example, Red Hat has now demonstrated in OpenShift that external appliances can be integrated into SDN. If you have an F5 with BIG-IP DNS version 11.6 or higher, you can use it not only as an external firewall in OpenShift, but also directly on your container network as a router.

This feature is primarily of interest to companies that operate their own OpenShift installations or build such setups for customers. It is doubtful that Red Hat will be able to fulfill such special requests in its public OpenShift cloud, for example. However, if you are upgrading a private OpenShift installation and already use F5, the combination of the two technologies provides a more elegant solution for routing and load balancing than the tools that ship with OpenShift.

It is also aimed at companies that have to use external routers or load balancers for compliance reasons and are committed to F5 in this respect. Thanks to the new functions, which first became fully available in OpenShift 3.1, OpenShift is now an option for companies with such compliance requirements.

Multicast for Pods

Another groundbreaking innovation, at least for many applications, is that OpenShift can now handle multicast traffic between pods. Until now, multicast traffic could be sent from the source, but it stopped at the pod boundary, so it never reached its destination.

Multicast traffic isn't very popular with network administrators anyway – in fact, many people get annoyed when they hear the word – but this does not change the fact that some software relies on multicast for operations (e.g., when it comes to service discovery or exchanging large amounts of data between many clients).

Current versions of OpenShift offer such applications a home for the first time. As of version 3.6, the feature is no longer a technology preview and can be used officially in production setups. Multicast can be enabled individually for each project.
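According to the OpenShift 3.x networking documentation, multicast is switched on for a project by annotating the project's NetNamespace object with `netnamespace.network.openshift.io/multicast-enabled=true` and switched off again by removing that annotation. The small Python helper below merely assembles the corresponding `oc annotate` calls; treat the annotation key as an assumption to verify against the documentation for your release, and the project name `discovery-demo` as hypothetical.

```python
import shlex
from typing import List

# Annotation key used by the OpenShift 3.x SDN to enable multicast per project
# (verify against the documentation for your release).
MULTICAST_ANNOTATION = "netnamespace.network.openshift.io/multicast-enabled"


def multicast_command(project: str, enabled: bool = True) -> List[str]:
    """Build the `oc annotate` call that enables or disables multicast."""
    base = ["oc", "annotate", "netnamespace", project]
    if enabled:
        # --overwrite replaces an existing value instead of failing.
        return base + [f"{MULTICAST_ANNOTATION}=true", "--overwrite"]
    # A trailing "-" after the key removes the annotation again.
    return base + [f"{MULTICAST_ANNOTATION}-"]


print(" ".join(shlex.quote(arg) for arg in multicast_command("discovery-demo")))
```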

Network Policies for More Security

The security groups available in OpenStack are very popular with the OpenStack user community. Simply put, they provide an iptables-based approach to restricting access to individual VMs. For example, the default security group allows outgoing traffic but blocks incoming traffic. OpenShift long lacked a comparable feature. Kubernetes itself offers network policies that apply to pods, but until recently, OpenShift ignored them.

In OpenShift 3.5, the developers deliver at least part of the feature. Although it is still marked as a Technology Preview and only works in setups based on Open vSwitch, the range of functions on offer is considerable. In fact, network policy in Kubernetes replicates what security groups in OpenStack have been able to do for years: As soon as a network policy applies to a pod, all incoming traffic to that pod is blocked except for the exceptions you define explicitly.
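The default-deny logic described above can be modeled in a few lines. The Python below is a deliberately simplified sketch of Kubernetes NetworkPolicy ingress semantics: A pod that no policy selects accepts all traffic, and once at least one policy selects it, only connections that match an explicit rule get through. Only label-based pod selectors and ports are modeled; namespace selectors, IP blocks, and egress rules are left out, and all labels are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Rule:
    from_labels: Dict[str, str]  # source pods must carry these labels
    port: Optional[int] = None   # None means any port


@dataclass
class Policy:
    pod_selector: Dict[str, str]  # an empty dict selects every pod
    ingress: List[Rule] = field(default_factory=list)


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """matchLabels-style test: every key/value pair must be present."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(policies: List[Policy], dst: Dict[str, str],
                    src: Dict[str, str], port: int) -> bool:
    selecting = [p for p in policies if matches(p.pod_selector, dst)]
    if not selecting:
        return True  # not isolated: all incoming traffic is accepted
    # Isolated: allowed only if some rule of some selecting policy matches.
    return any(
        matches(rule.from_labels, src) and rule.port in (None, port)
        for p in selecting for rule in p.ingress
    )


policies = [
    # Only pods labeled role=frontend may reach the database on port 5432.
    Policy({"app": "db"}, [Rule({"role": "frontend"}, 5432)]),
]
db, web, batch = {"app": "db"}, {"role": "frontend"}, {"role": "batch"}
print(ingress_allowed(policies, db, web, 5432))    # True
print(ingress_allowed(policies, db, batch, 5432))  # False
print(ingress_allowed(policies, web, batch, 80))   # True (web is not selected)
```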

It will probably take some time before OpenShift fully supports network policy and officially releases it for production. However, a start has been made, and those of us who need appropriate packet filters, for compliance reasons in particular, will be happy about the new possibilities.

Attention to Detail: The GUI

According to Red Hat, its in-house graphical user interface (GUI) is a unique selling point for OpenShift. No wonder: Kubernetes itself comes without a GUI, which proves once again that even the coolest software does not help if the learning curve is extremely steep. Red Hat, on the other hand, puts agile and uncomplicated administration at the top of its feature list. Whether admin, customer, or developer, Red Hat wants everyone to perceive the OpenShift GUI as a genuine help in everyday work.

The changes to the GUI have been correspondingly extensive: Almost half of the changelog entries for recent versions concern the GUI. It starts with the login: If you like, you can now add a branded login page to your OpenShift installation, so that users are not put off by the generic OpenShift interface when they log in via OAuth.

Once you are logged in, you will come across UI innovations in every nook and cranny. For example, Red Hat has built search filters into many places, making it far easier than before to check the content of long lists. The search mask reacts as you type: If you are looking for the Apache pod, it appears as soon as you type Apa in the search box.

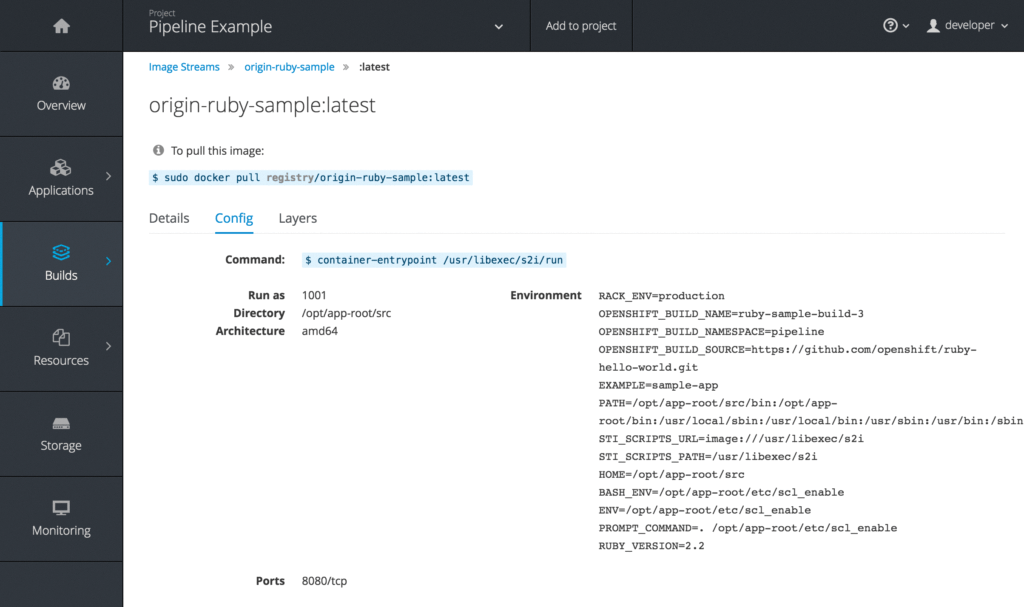

The GUI that Red Hat built for the Docker registry included with OpenShift is even more useful. Remember, if you want to launch a Kubernetes pod from Docker containers on a host, you need to specify the Docker image to use as a basis in the pod definition. In the default configuration, Docker pulls the images from Docker Hub and then runs them locally.

It doesn't work that way with OpenShift; after all, the overall concept envisages building suitable PaaS containers in OpenShift and rolling them out into production operation. To do so, local Docker instances must be able to communicate with a local registry in which the prebuilt images are stored. OpenShift includes a registry that fits the bill.
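Where Docker pulls an image from can be read off the image reference itself: If the first path component looks like a host name (it contains a dot or a colon, or is `localhost`), it names the registry; otherwise, Docker falls back to Docker Hub (`docker.io`). The Python function below is a simplified sketch of that rule, not Docker's own parser (it ignores digests, for example); the internal registry address `docker-registry.default.svc:5000` and the project `myproject` in the example are hypothetical placeholders.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, and tag."""
    first, sep, rest = ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref
        if "/" not in remainder:
            remainder = "library/" + remainder  # official Docker Hub images
    # A tag is the part after the last colon, provided no slash follows it.
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, "latest"
    return ImageRef(registry, name, tag)


for ref in ["centos:7", "nginx",
            "docker-registry.default.svc:5000/myproject/myapp:v2"]:
    print(parse_image(ref))
```

An image tagged with the internal registry's host name is therefore pulled from the OpenShift registry, while a bare name such as `centos:7` still goes to Docker Hub.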

In the early 3.x releases of OpenShift, the registry was provided entirely without a GUI. For example, if you wanted to know which images were stored there, you had to go to the command line, where you typically faced an impossibly long image list that you had to sort manually. Those days are over. In the OpenShift GUI, the registry now has its own page, including the previously described search fields. Each image stored in the registry can be examined individually, and the GUI also displays additional details of the images (Figure 3). All in all, the OpenShift GUI is now far more convenient to use than before.

Bundled with CloudForms

An ideal situation for software vendors is that different environments can be managed comfortably in a single user interface. Red Hat has at least two candidates in its portfolio for which admins might well want exactly that: OpenShift and CloudForms.

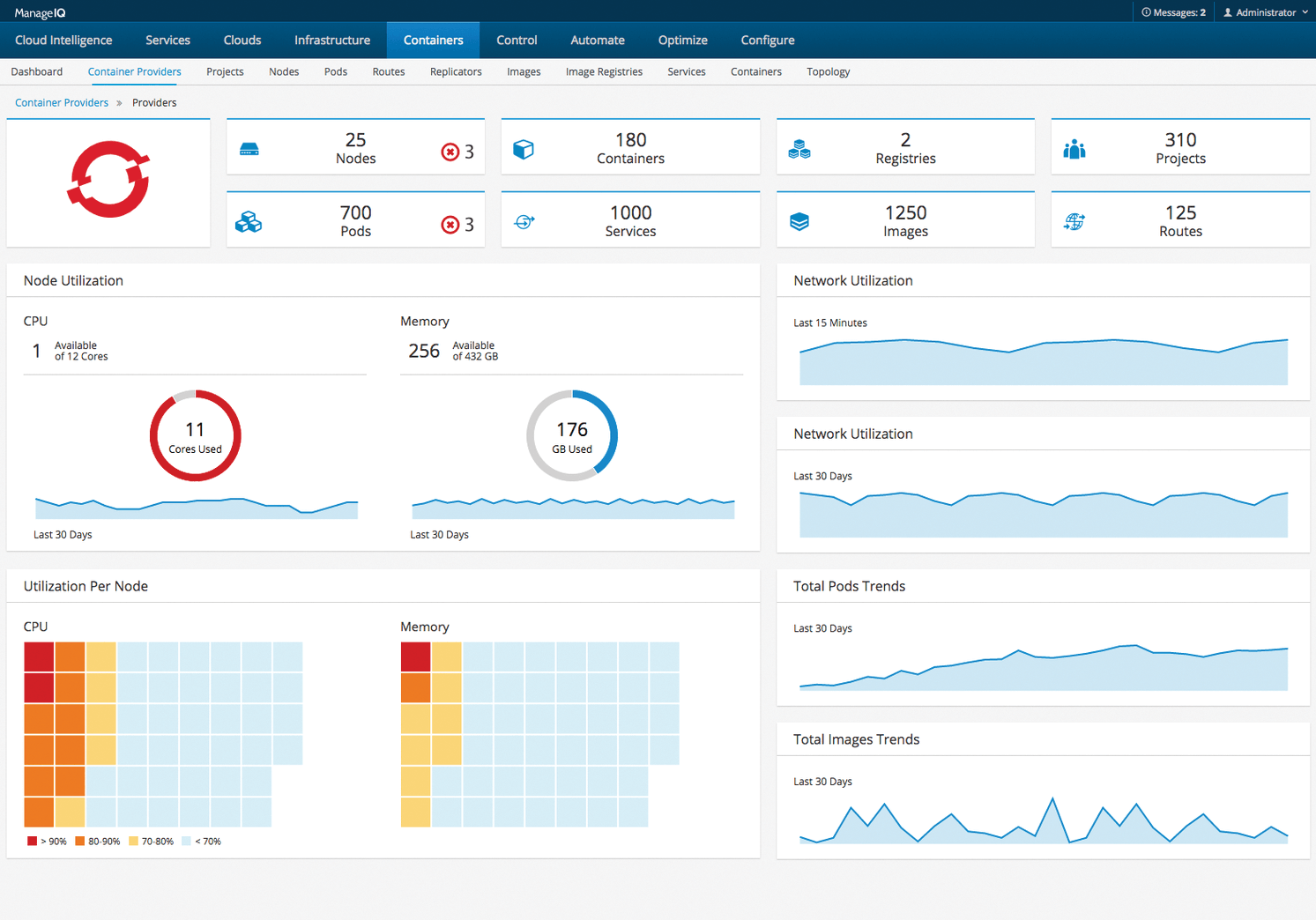

Since OpenShift 3.1, Red Hat has consistently offered the option of integrating CloudForms so that it rolls out its services on OpenShift as pods. Don't expect a single management GUI, though, because CloudForms comes with a convenient GUI of its own. Nevertheless, combining CloudForms and OpenShift makes sense, because the two components work well together (Figure 4).

OpenShift and Jenkins

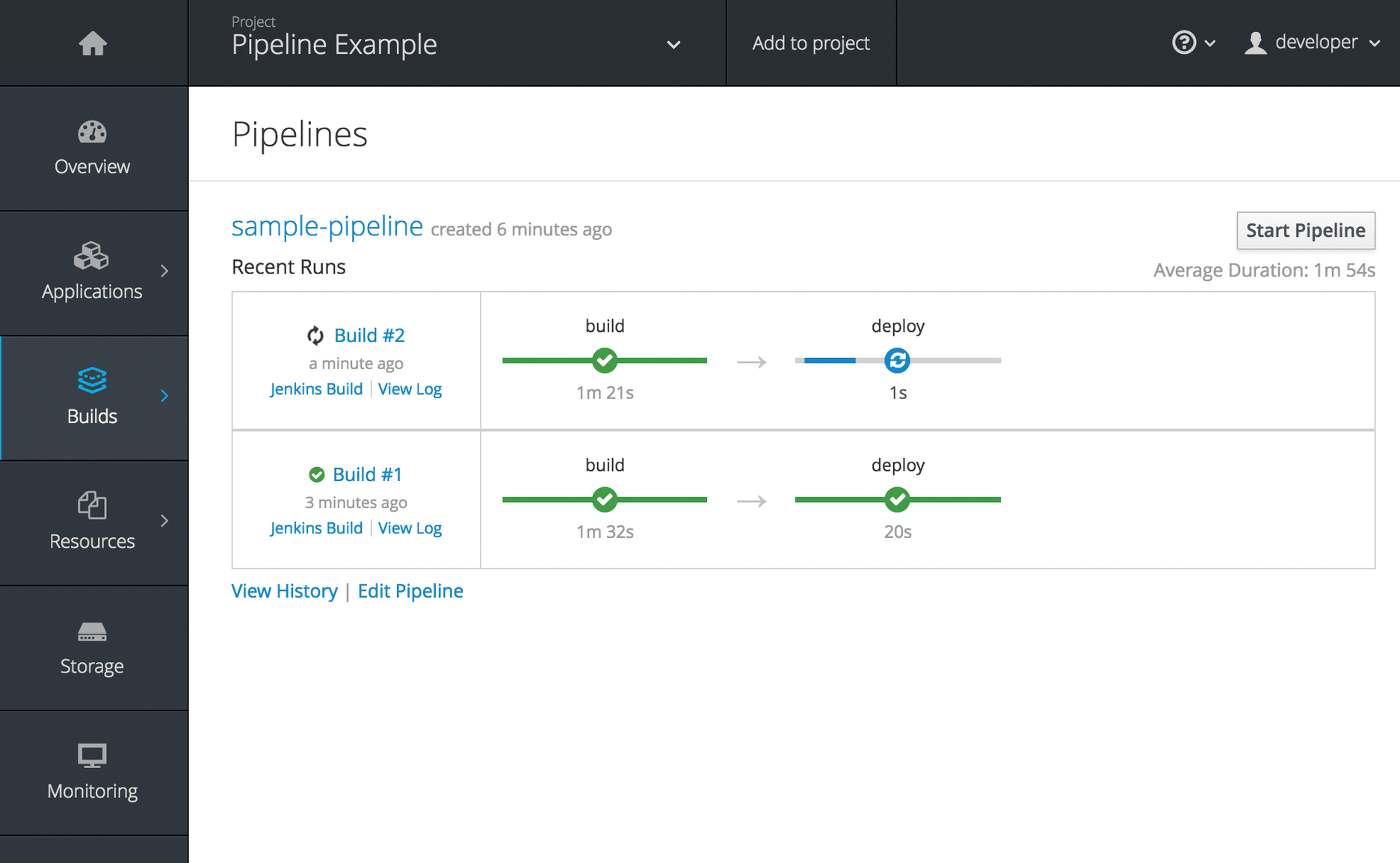

One major motivation for using OpenShift is that you can build your own container infrastructure on the solid foundation of continuous integration (CI) and continuous delivery (CD). To make sure this works, the OpenShift developers connected their software to the Jenkins CI/CD tool through a separate plugin and introduced build pipelines at the same time.

The idea is that you develop your containers in the form of Git repositories or at least manage their Dockerfiles there. When a developer checks in a change for a container, Jenkins automatically rebuilds the Docker image and uploads the finished image to the registry.

Functional checks are also carried out completely automatically. A build pipeline can consist of any number of steps that you define freely. This feature is very useful for image developers (Figure 5).
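The behavior of such a pipeline, with freely defined steps that run one after another and stop at the first failed check, can be sketched as follows. This Python model is a conceptual illustration only; in OpenShift, the steps would live in a Jenkinsfile attached to a build configuration that uses the JenkinsPipeline build strategy, and the step names and results below are hypothetical.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order and stop at the first step that reports failure."""
    log: List[str] = []
    for name, action in steps:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            return False, log  # later steps, such as the push, never run
    return True, log


# Hypothetical steps standing in for real build, test, and push commands.
steps: List[Step] = [
    ("build image", lambda: True),
    ("unit tests", lambda: True),
    ("smoke test", lambda: False),
    ("push to registry", lambda: True),
]
success, log = run_pipeline(steps)
print(success)  # False
print("\n".join(log))
```

Because the push step only runs after every check has passed, a broken image never reaches the registry.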

Costs

The big question is how much Red Hat wants for the product. For the online version, this is an easy question to answer: The starter package – with one project, 1GB of RAM, and 1GB of storage – is free of charge. For a minimum of $50 per month, the Pro Plan comes with more initial RAM, CPU, and storage, which can also be expanded as needed. Complete pricing information can be found online [9].

Red Hat continues to quote prices for a dedicated environment starting at $48,000 per year, and you can add various resources for a surcharge. For on-premises installations, Red Hat only provides prices on request.

Conclusions

Red Hat's OpenShift continues to evolve in leaps and bounds. If you want to enter the world of Kubernetes now and you have little or no previous experience, the product is exactly what you need: The barriers to entry are pleasingly low, and you can look forward to having a Kubernetes platform up and running in next to no time.

The GUI adds genuine value for administrators, users, and developers by filling a gap in Kubernetes. At the same time, experienced console jockeys can still control their environment from the command line.

Many of the features that have been implemented over the past few months are very helpful, such as F5 support. I am definitely looking forward to the next set of innovations.