Command-line tools for the HPC administrator

Line Items

The HPC world has some amazing "big" tools that help administrators monitor their systems and keep them running, such as the Ganglia and Nagios cluster monitoring systems. Although they are extremely useful, sometimes it is the small tools that can help debug a user problem or find system issues. Here are a few favorites.

ldd

The introduction of sharable objects [1], or "dynamic libraries," has allowed for smaller binaries, less "skew" across binaries, and a reduction in memory usage, among other things. Users, myself included, tend to forget that when code compiles, we only see the size of the binary itself, not the "shared" objects.

For example, the following simple Hello World program, test1, uses PGI compilers (16.10):

PROGRAM HELLOWORLD

write(*,*) "hello world"

END

Running the ldd command against the compiled program produces the output in Listing 1. If you look at the binary, which is very small, you might think it is the complete story, but after looking at the list of libraries linked to it, you can begin to appreciate what compilers and linkers do for users today.

Listing 1: Show Linked Libraries (ldd)

$ pgf90 test1.f90 -o test1

$ ldd test1

linux-vdso.so.1 => (0x00007fff11dc8000)

libpgf90rtl.so => /opt/pgi/linux86-64/16.10/lib/libpgf90rtl.so (0x00007f5bc6516000)

libpgf90.so => /opt/pgi/linux86-64/16.10/lib/libpgf90.so (0x00007f5bc5f5f000)

libpgf90_rpm1.so => /opt/pgi/linux86-64/16.10/lib/libpgf90_rpm1.so (0x00007f5bc5d5d000)

libpgf902.so => /opt/pgi/linux86-64/16.10/lib/libpgf902.so (0x00007f5bc5b4a000)

libpgftnrtl.so => /opt/pgi/linux86-64/16.10/lib/libpgftnrtl.so (0x00007f5bc5914000)

libpgmp.so => /opt/pgi/linux86-64/16.10/lib/libpgmp.so (0x00007f5bc5694000)

libnuma.so.1 => /lib64/libnuma.so.1 (0x00007f5bc5467000)

libpthread.so.0 => /lib64/libpthread.so.0 (0x00007f5bc524a000)

libpgc.so => /opt/pgi/linux86-64/16.10/lib/libpgc.so (0x00007f5bc4fc2000)

librt.so.1 => /lib64/librt.so.1 (0x00007f5bc4dba000)

libm.so.6 => /lib64/libm.so.6 (0x00007f5bc4ab7000)

libc.so.6 => /lib64/libc.so.6 (0x00007f5bc46f4000)

libgcc_s.so.1 => /lib64/libgcc_s.so.1 (0x00007f5bc44de000)

/lib64/ld-linux-x86-64.so.2 (0x000056123e669000)

If the application fails, a good place to look is the list of libraries linked to the binary. If the paths have changed or if you copy the binary from one system to another, you might see a library mismatch. The ldd command is indispensable when chasing down strange issues with libraries.
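One quick check along these lines is to filter the ldd output for libraries the dynamic linker could not resolve. The following is a minimal sketch, not a definitive tool: the function name missing_libs is made up for illustration, and it assumes the usual glibc ldd output, in which an unresolved library appears as "name => not found".

```shell
#!/bin/sh
# Print the names of shared libraries that the dynamic linker cannot resolve.
# Reads ldd-style output on stdin, so the filter can be tried on saved output.
# missing_libs is a hypothetical helper name, not a standard command.
missing_libs() {
    awk '/=> not found/ { print $1 }'
}

# Usage (test1 is the binary from Listing 1):
#   ldd ./test1 | missing_libs
```

An empty result means every library was resolved on this system; any name printed is a good starting point when a binary was copied from another machine or a path has changed.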

find

One of those *nix commands you don't learn at first but that can really save your bacon is find. If you are looking for a specific file or a set of files with a similar name, then find is your friend.

I tend to use find two ways. The first way is fairly straightforward: If I'm looking for a file or a set of files below the directory in which I'm located (pwd), then I use some variation of the following:

$ find . -name "*.dat.2"

The dot right after the find command tells it to start searching from the current directory and then search all directories under that directory.

The -name option lets me specify a "template" that find uses to look for files. In this case, the template is *.dat.2. By using the * wildcard, find will locate any file that ends in .dat.2. If I truly get desperate to find a file, I can go to the root directory (/) and run the same find command.

The second way I run find is to use its output as input to grep. Remember, *nix is designed to have small programs that can pipe input and output from one command to another for complex processing. Here, the command chain

$ find . -name "*.dat.2" | grep -i "xv426"

takes the output from the find command and pipes it into the grep command to look for any file name that contains the string xv426 – be it uppercase or lowercase (-i). The power of *nix lies in this ability to combine find with virtually any other *nix command (e.g., sort, uniq, wc, sed).
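To make the idea of chaining commands concrete, here is a small self-contained sketch. The scratch directory and file names are invented for illustration; the -iname option used at the end is supported by GNU and BSD find and does the case-insensitive match inside find itself.

```shell
#!/bin/sh
# Hypothetical scratch directory and file names, for illustration only.
demo=$(mktemp -d)
mkdir -p "$demo/run1" "$demo/run2"
touch "$demo/run1/XV426.dat.2" "$demo/run2/xv426_b.dat.2" "$demo/run2/other.dat.2"

# Count the matches instead of listing them: find | grep | wc.
count=$(cd "$demo" && find . -name "*.dat.2" | grep -i "xv426" | wc -l)

# Same selection with find alone, sorted for stable output.
matches=$(cd "$demo" && find . -iname "*xv426*.dat.2" | sort)

echo "matching files: $count"
echo "$matches"

rm -rf "$demo"
```

Both approaches select the same files; the piped version is easier to extend with other filters, whereas -iname avoids starting a second process.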

In *nix, you have more than one way to accomplish a task: Different commands can yield the same end result. Just remember that you have a large number of commands from which to draw; you don't have to write Python or Perl code to accomplish a task that you can accomplish from the command line.

ssh and pdsh

It might sound rather obvious, but two of the tools I rely on the most are a simple secure tool for remote logins, ssh, and a tool that uses ssh to run commands on remote systems in parallel or in groups, pdsh. When clusters first showed up, the tool of choice for remote logins was rsh. It had been around for a while and was very easy to use.

However, it was very insecure because it transmitted data – including passwords – from the host machine to the target machine with no encryption. Therefore, a very simple network sniff could gather lots of passwords. Anyone who used rsh, rlogin, or telnet between systems across the Internet, such as users logging in to the cluster, was left wide open to attacks and password sniffing. Very quickly people realized that something more secure was needed.

In 1995, researcher Tatu Ylönen created Secure Shell (SSH) because of a password sniffing attack on his network. It gained popularity, and SSH Communications Security was founded to commercialize it. The OpenBSD community grabbed the last open version of SSH and developed it into OpenSSH [2]. After OpenSSH gained popularity in the early 2000s, the cluster community adopted it to help secure clusters.

SSH is extremely powerful. Beyond just remote logins and commands run on a remote node, it can be used for tunneling or forwarding other protocols over SSH. Specifically, you can use SSH to forward X from a remote host to your desktop and copy data from one host to another (scp), and you can use it in combination with rsync to back up, mirror, or copy data.

SSH was a great development for clusters and HPC, because it finally provided a way to log in to systems and send commands securely and remotely. However, SSH could only do this for a single system, and HPC systems can comprise hundreds or thousands of nodes; therefore, admins needed a way to send the same command to a number of nodes or a fixed set of nodes.

In a past article [3], I wrote about a class of tools that accomplishes this goal: parallel shells. The most common is pdsh [4]. In theory, it is fairly simple to use. It uses a specific remote command to run a common command on specified nodes. You have a choice of underlying tools when you build and use pdsh. I prefer to use SSH because of the security it offers.

To tell pdsh which hosts to use by default, create a simple file containing the list of hosts and point the WCOLL environment variable to it:

$ export WCOLL=/home/laytonjb/PDSH/hosts

WCOLL is an environment variable that points to the location of the file that lists hosts. You can put this command in either your home or global .bashrc file.
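As a rough sketch of how the pieces fit together, the following script writes a host file and sets the environment pdsh reads. The node names and the file location are hypothetical; the pdsh invocations are left as comments because they would contact real nodes. PDSH_RCMD_TYPE selects the remote command module at run time, and dshbak is the output-folding helper that ships with pdsh.

```shell
#!/bin/sh
# Hypothetical node names and file location; substitute your own.
hostfile="${TMPDIR:-/tmp}/pdsh_hosts_example"
printf '%s\n' node01 node02 node03 node04 > "$hostfile"

# pdsh reads this file whenever no -w option is given.
export WCOLL="$hostfile"
# Use ssh as the remote command module.
export PDSH_RCMD_TYPE=ssh

# With the variables set, these commands would contact the listed nodes:
#   pdsh uptime                  # every host in $WCOLL
#   pdsh -w node[01-02] uptime   # override the list for one command
#   pdsh uptime | dshbak -c      # group identical output by host
hosts=$(wc -l < "$hostfile")
echo "pdsh would target $hosts hosts listed in $WCOLL"
```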

SSHFS

I've written about SSHFS [5] in the past, and it has to be one of the most awesome filesystem tools I have ever used. SSHFS is a Filesystem in Userspace (FUSE)-based [6] client that mounts and interacts with a remote filesystem as though the filesystem were local (i.e., shared) storage. It uses SSH as the underlying protocol and SFTP [7] as the transfer protocol, so it's as secure as SFTP.

SSHFS can be very handy when working with remote filesystems, especially if you only have SSH access to the remote system. Moreover, you don't need to add or run a special client tool on the client nodes or a special server tool on the storage node; you just need SSH active on your system. Almost all firewalls allow port 22 access, so you don't have to configure anything extra (e.g., NFS or CIFS); you just need one open port on the firewall – port 22. All the other ports can be blocked.

Many filesystems allow encryption of data at rest. Using SSHFS in combination with an encrypted filesystem ensures that your data is encrypted at rest and "over the wires," which prevents packet sniffing within or outside the cluster and is an important consideration in a mobile society in which users want to access their data from multiple places with multiple devices.

A quick glance at SSHFS [8] indicates that the sequential read and write performance is on par with NFS. However, random I/O performance is less efficient than NFS. Fortunately, you can tune SSHFS to reduce the effect of encryption on performance. Furthermore, you can enable compression to improve performance. Using these tuning options, you can recover SSHFS performance so that it matches and even exceeds NFS performance.
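For orientation, a mount with compression enabled might look like the sketch below. The remote host, remote path, and mount point are hypothetical, and the script only prints the commands unless RUN=1 is set. The -C option turns on SSH compression and -o reconnect re-establishes a dropped connection; which other tuning options help depends on your network and workload, so treat this as a starting point rather than a recommended configuration.

```shell
#!/bin/sh
# Hypothetical remote host, remote path, and local mount point.
remote="laytonjb@storage01:/data/projects"
mountpoint="$HOME/projects_remote"

# -C enables SSH compression; -o reconnect restores a dropped connection.
sshfs_cmd="sshfs -C -o reconnect $remote $mountpoint"
# fusermount -u detaches a FUSE mount without root privileges.
umount_cmd="fusermount -u $mountpoint"

# Print the commands by default; set RUN=1 to mount for real.
if [ "${RUN:-0}" = "1" ]; then
    mkdir -p "$mountpoint" && $sshfs_cmd
else
    echo "$sshfs_cmd"
    echo "$umount_cmd"
fi
```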

vmstat

One *nix command that gets no respect is vmstat [9]; however, it can be an extremely useful command, particularly in HPC. vmstat reports Linux system virtual memory statistics, and although it has several "modes," I find the default mode to be extremely useful. Listing 2 is a quick snapshot of a Linux laptop.

Listing 2: vmstat on a Laptop

[laytonjb@laytonjb-Lenovo-G50-45 ~]$ vmstat 1 5

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

 r  b   swpd    free   buff  cache   si   so    bi    bo   in   cs us sy id wa st

 1  0      0 5279852   2256 668972    0    0  1724    25  965 1042 17  9 71  2  0

 1  0      0 5269008   2256 669004    0    0     0     0 2667 1679 28  3 69  0  0

 1  0      0 5260976   2256 669004    0    0     0   504 1916  933 25  1 74  0  0

 2  0      0 5266288   2256 668980    0    0     0    36 4523 2941 29  4 67  0  0

 0  0      0 5276056   2256 668960    0    0     4     4 9104 6262 36  5 58  0  0

Each line of output corresponds to a system snapshot at a particular time (Table 1), and you can control the amount of time between snapshots. The first line of numbers reports averages since the system was last rebooted; the lines after that correspond to current values. A number of system metrics are very important. The first thing to look at is the number of processes (r and b). If these numbers start moving up, something unusual might be happening on the node, such as processes waiting for run time or stuck in uninterruptible sleep.

Table 1: vmstat Output

| vmstat Column | Meaning |
|---|---|
| procs | |
| r | No. of processes waiting for run time |
| b | No. of processes in uninterruptible sleep |
| memory | |
| swpd | Amount of virtual memory used |
| free | Amount of idle memory |
| buff | Amount of memory used as buffers |
| cache | Amount of memory used as cache |
| swap | |
| si | Amount of memory swapped in from disk (per second) |
| so | Amount of memory swapped out to disk (per second) |
| io | |
| bi | No. of blocks received from a block device (blocks/sec) |
| bo | No. of blocks sent to a block device (blocks/sec) |
| system | |
| in | No. of interrupts per second, including the clock |
| cs | No. of context switches per second |
| cpu | |
| us | Time spent running non-kernel code (=user time+nice time) |
| sy | Time spent running kernel code (=system time) |
| id | Time spent idle |
| wa | Time spent waiting for I/O |
| st | Time stolen from a virtual machine |

The metrics listed under memory can be useful, particularly as the kernel grabs and releases memory. You shouldn't be too worried about these values unless those in the next section (swap) are non-zero. If you see non-zero si and so values, excluding the first row, you should be concerned, because it indicates that the system is swapping, and swapping memory to disk can really kill performance. If a user is complaining about performance and you see a node running really slow, with a very large load, then it's a good possibility that the node is swapping.

The metrics listed in the io section are also good to watch. They list blocks either sent to or received from a block device. If both of these numbers are large, the application running on the nodes is likely doing something unusual by reading and writing to the device at the same time, which can hurt performance.

The other metrics can be very useful, as well, but I tend to focus on those mentioned first before scanning the others. You can also send this data to a file for postprocessing or plotting (e.g., for debugging user problems on nodes).
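As one example of such postprocessing, the sketch below flags every sample in which the node was swapping. The function name swap_samples is invented for illustration, and the script assumes the default vmstat layout shown in Listing 2, in which si and so are the seventh and eighth columns.

```shell
#!/bin/sh
# Flag samples in which the node is swapping (si or so non-zero).
# Reads default-mode vmstat output on stdin; in that layout, columns
# 7 and 8 are si and so. swap_samples is a hypothetical helper name.
swap_samples() {
    awk '
        $1 ~ /^[0-9]+$/ {          # data rows start with a number (r column)
            n++
            if (n == 1) next       # first row holds averages since boot
            if ($7 > 0 || $8 > 0)
                printf "sample %d: si=%s so=%s\n", n, $7, $8
        }'
}

# Usage:
#   vmstat 1 5 | swap_samples
#   vmstat 5 > node.log     # later: swap_samples < node.log
```

Header lines are skipped because their first field is not a number, so the filter also copes with the headers vmstat repeats in long runs.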

watch

At some point, you will have to debug an application. It might belong to you, or it might belong to another user, but you will be involved. Although debugging can be tedious, you can learn a great deal from it. Lately, one tool I've been using more and more is called watch.

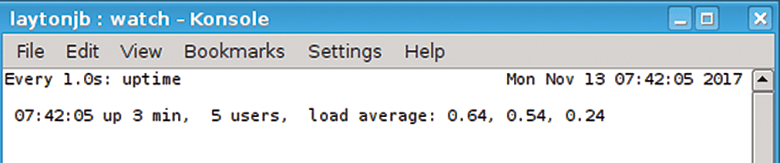

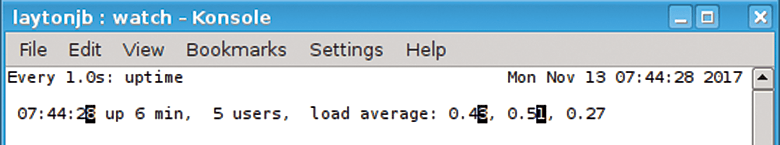

The cool watch [10] tool can help you immensely, just by doing something extremely simple: run a command repeatedly and display the output to stdout. For example, assume a user has an application hanging on a node. One of the first things I want to check is the load on the node (i.e., whether it's very high or very low). Rather than repeatedly typing uptime in a console window as the application executes, I can use watch to do this for me; plus, it will overwrite its previous output so you can observe the system load as it progresses, without looking at infinitely scrolling terminal output.

For a quick example, the simple command

$ watch -n 1 uptime

tells watch to run a command (uptime) every second (-n 1). It will continue to run this command forever unless you interrupt it or kill it. You can change the time interval to whatever you want, keeping in mind that the command being executed could affect system performance. Figure 1 shows a screen capture from my laptop running this command.

Figure 1: The watch -n 1 uptime command.

One useful option to use with watch is -d, which highlights differences between iterations. This option gives you a wonderful way to view the output of time-varying commands like uptime. You can see in Figure 2 that changes are highlighted (I'm not using a color terminal, so they show up as characters with a black background). Notice that the time has changed as well as the first two loads.

Figure 2: The watch -n 1 -d uptime command.

One bit of advice around using watch is to be careful about passing complicated commands or scripts. By default, watch passes commands using sh -c; therefore, you might have to put the command in quotes to make sure it is passed correctly.
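The quoting issue is easiest to see with a pipeline. In the sketch below, the pipeline (an arbitrary example that counts entries in /tmp) is kept in one quoted string and run once through sh -c, which is what watch does on every interval; the watch invocations themselves are shown as comments because they run until interrupted.

```shell
#!/bin/sh
# Quoted: the whole pipeline is handed to watch, which runs it via sh -c.
cmd='ls /tmp | wc -l'

# One-shot check of exactly what watch would execute on each interval.
result=$(sh -c "$cmd")
echo "entries in /tmp: $result"

# Interactive use (runs until interrupted with Ctrl-C):
#   watch -n 5 "$cmd"
# Unquoted, the calling shell pipes the output of watch itself into wc:
#   watch -n 5 ls /tmp | wc -l
```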

You can use watch in conjunction with all kinds of commands. Personally, I use it with uptime to get a feel for what's happening on a particular node with regard to load. I do this after a node has been rebooted to make sure it's behaving correctly. I also use watch with nvidia-smi on a GPU-equipped node, because it is a great way to tell whether the application is using GPUs and, if so, to see the load on and the temperature of the GPU(s).

One thing I have never tried is using watch in conjunction with the pdsh command. I would definitely use a longer time interval than one second, because it can sometimes take a bit of time to gather all the data from the cluster. However, because pdsh doesn't guarantee that it will return the output in a certain order, I think the output would be jumbled from interval to interval. If anyone tries this, be sure to post a note somewhere. Perhaps you know of a pdsh-like tool that guarantees the output in some order?

An absolute killer use of watch on a node is to use it with tmux [11], a terminal multiplexer (i.e., you can take a terminal window and break it into several panes). If you are on a node writing code or watching code execute, you can create another pane and use watch to track the load on the node or GPU usage and temperatures, which is a great way to tell whether the code is using GPUs and when. If you use the command line, tmux and watch should be a part of your everyday kit.

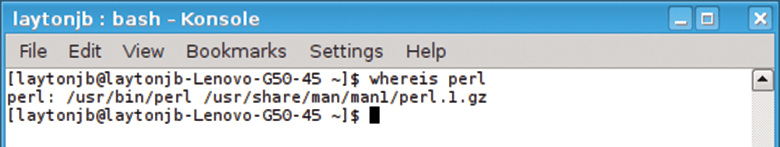

whereis and which

The $PATH variable in Linux and *nix tells you the directories or paths that the operating system will use when looking for a command. If you run the command voodoo and the result is an error message like can't find voodoo, but you know it is installed on your system, you might have a $PATH problem.

You could look at your $PATH variable with the env command, but I like to use the simple whereis command, which tells you whether a command is in $PATH and where it is located. For example, when I looked for perl (Figure 3), the output told me where the man pages were located, as well as the binary.

Figure 3: The whereis command.

Think about a situation in which your $PATH is munged, and all of a sudden, you can't run simple commands. An easy way to discover the problem is to use whereis. If the command is not in your $PATH, you can now use find to locate it – if it's on the system.

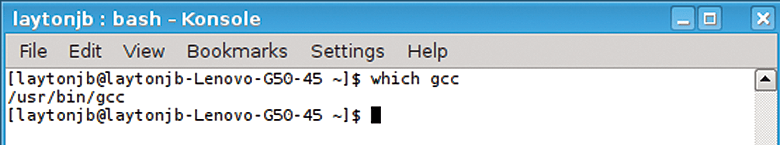

Another useful command, which, is very helpful for determining what version of a command will be run when executed. For example, assume you have more than one GCC compiler on your system. How do you know which one will be used? The simple way is to use which, as shown in Figure 4.

I use which quite a bit when I create new modules for Lmod. On more than one occasion, I have damaged my $PATH so that the command for which I'm trying to write a module isn't in the $PATH variable. When which can't find the command after the module is loaded, I know I managed to munge something in the module.

I promise you that if you are a system administrator for any kind of *nix system, HPC or otherwise, at some point, whereis and which are going to help you solve a problem. My favorite war story is about a user who managed to erase their $PATH completely on a cluster and could do nothing. The problem was in the user's .bashrc file, where they had basically erased their $PATH in an attempt to add a new path.
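The mistake in that war story is easy to reproduce safely. The sketch below uses a hypothetical directory, /opt/voodoo/bin, and the shell's built-in command -v (a close relative of which) to compare overwriting $PATH with prepending to it. It restores the original value at the end, so it does not disturb your session.

```shell
#!/bin/sh
saved_path=$PATH

# Report whether the shell can still locate a command through $PATH.
lookup() {
    if command -v "$1" >/dev/null 2>&1; then echo "found"; else echo "missing"; fi
}

# Wrong: the assignment replaces the entire search path.
PATH=/opt/voodoo/bin
broken=$(lookup ls)

# Right: prepend the new directory and keep the existing entries.
PATH="/opt/voodoo/bin:$saved_path"
fixed=$(lookup ls)

PATH=$saved_path
echo "ls after overwriting PATH: $broken"
echo "ls after prepending to PATH: $fixed"
```

In a .bashrc file, the second form is the one you want; the first is how a user ends up with a shell that can't run anything.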

lsblk

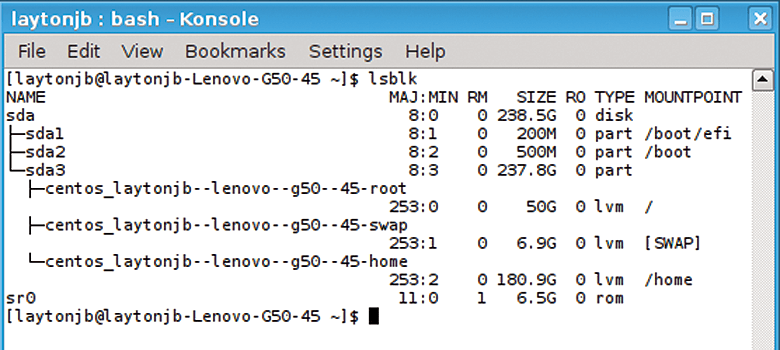

When I get on a new system, one of the first things I want to know is how the storage is laid out. Also, in the wake of a filesystem issue (e.g., it's not mounted), I want a tool to discover the problem. The simple lsblk command can help in both cases.

As you examine the command, it seems fairly obvious that ls plus blk will "list all block devices" on the system (Figure 5). This is not the same as listing all mounted filesystems, which is accomplished with the mount command (which lists all network filesystems, as well).

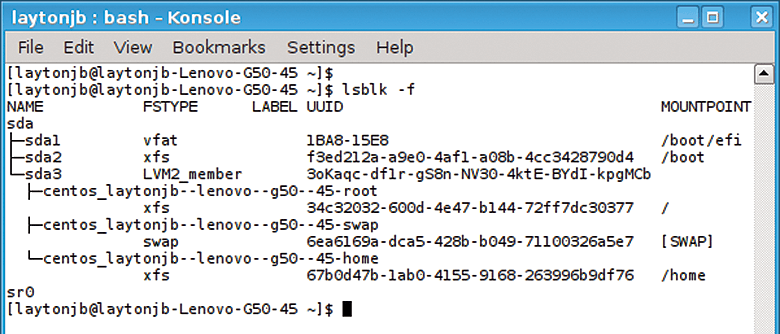

Figure 5: The lsblk command.

The default "tree" output shows the partitions of a particular block device. The block device sizes, in human-readable format, are also shown, as is their mount point (if applicable). A useful option is -f, which adds filesystem output to the lsblk output (Figure 6).
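For the "it's not mounted" case, lsblk output is also easy to filter. The sketch below lists partitions that have no mount point. The function name unmounted_parts is made up, and the filter assumes the raw, header-free format produced by lsblk -rno NAME,TYPE,MOUNTPOINT, in which an unmounted device leaves the third field empty. A partition used as an LVM physical volume or RAID member also shows up here, so treat the result as a list of candidates to check.

```shell
#!/bin/sh
# List partitions without a mount point.
# Expects the output of: lsblk -rno NAME,TYPE,MOUNTPOINT
# unmounted_parts is a hypothetical helper name.
unmounted_parts() {
    awk '$2 == "part" && NF < 3 { print $1 }'
}

# Usage:
#   lsblk -rno NAME,TYPE,MOUNTPOINT | unmounted_parts
```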

Figure 6: The lsblk -f command.

kill

Sometime in your administrative career, you will have to use the kill [12] command, which sends a signal to the application to tell it to terminate. In fact, you can send a host of signals to applications (Table 2). These signals can accomplish a number of objectives with applications, but the most useful is SIGKILL.

Table 2: Process Signals

| | | |
|---|---|---|
| SIGHUP | SIGUSR2 | SIGURG |
| SIGINT | SIGPIPE | SIGXCPU |
| SIGQUIT | SIGALRM | SIGXFSZ |
| SIGILL | SIGTERM | SIGVTALRM |
| SIGTRAP | SIGSTKFLT | SIGPROF |
| SIGABRT | SIGCHLD | SIGWINCH |
| SIGIOT | SIGCONT | SIGIO and SIGPOLL |
| SIGFPE | SIGSTOP | SIGPWR |
| SIGKILL | SIGTSTP | SIGSYS |
| SIGUSR1 | SIGTTIN | |
| SIGSEGV | SIGTTOU | |

I call SIGKILL the "extreme prejudice" option. If you have a process that just will not die, it's time to use SIGKILL:

$ kill -9 [PID]

Theoretically, this should end the process specified, but if for some crazy reason the process won't die (terminate), and you need it to die, the only other action I know to take is to shut down the system. Many times this can result in a compromised configuration when the system is restarted, but you might not have much choice.

As with whereis and which, I can promise that you will have to use kill -9 to stop a process. Sometimes, the problem is the result of a wayward user process, and one way to find that process is to use the commands mentioned in this article. For example, you can use the watch command to monitor the load on the system. If the system is supposed to be idle but watch -n 1 uptime shows a reasonably high load, then you might have a hung process taking up resources. Also, you can use watch in a script to find user processes that are still running on a node that isn't accessible to users (i.e., it has been taken out of production). In either case, you can then use kill -9 to end the process(es).
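Because SIGKILL gives a process no chance to clean up, a common pattern is to try SIGTERM first and escalate only if the process is still there. The following is a minimal sketch of that pattern, not a hardened tool: the function name terminate and the grace period are arbitrary choices, and the demonstration acts on a throwaway sleep process that the script starts itself.

```shell
#!/bin/sh
# Ask a process to exit with SIGTERM and fall back to SIGKILL only if it
# is still alive after a grace period (in seconds, default 5).
# terminate is a hypothetical helper name.
terminate() {
    pid=$1
    grace=${2:-5}
    kill -TERM "$pid" 2>/dev/null || return 1   # no such process or no permission
    while [ "$grace" -gt 0 ]; do
        kill -0 "$pid" 2>/dev/null || return 0  # process is gone
        sleep 1
        grace=$((grace - 1))
    done
    kill -KILL "$pid" 2>/dev/null               # same signal as kill -9
}

# Demonstration on a throwaway background process.
sleep 300 &
victim=$!
terminate "$victim" 3
wait "$victim" 2>/dev/null
echo "process $victim is no longer running"
```

The kill -0 call sends no signal at all; it only tests whether the process still exists, which makes it a convenient way to poll.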

Summary

I hope in this article that I have pointed out some useful commands for HPC administration. Although the commands tend to be very simple, they can be very powerful (e.g., watch); they are also very useful for plain old Linux administration, not just for HPC. Keep these commands close by on a Post-it note; when you're beginning to debug an issue, a glance at the list will remind you to start with simple tools. You can move on to the "fancy" solutions after you have found the problem. These tools have saved my bacon more than one time, and I hope they help you.