A hands-on look at Kubernetes with OpenAI

Learning in Containers

OpenAI [1] is a non-profit, privately funded research institution in San Francisco, where I work with about 60 other employees on machine learning and artificial intelligence. In concrete terms, my colleagues are examining how to teach a computer new behavioral patterns through experience, without being deliberately programmed to handle a task. Contributors include Elon Musk (Tesla, SpaceX) [2] and Sam Altman (Y Combinator) [3], among others.

The people of OpenAI contribute academic publications on the web, presentations at conferences, and software for researchers and developers. In this article, I show how OpenAI prepares its Kubernetes cluster to run artificial intelligence experiments across thousands of computers.

Go Deep

The company's main focus is on deep learning – that is, researching large neural networks with many layers. In recent years, deep learning has gained importance because it can generally solve extremely complicated problems.

For example, the AlphaGo bot, developed by Google's DeepMind, has learned how to play the Chinese board game Go, which is considered to be extremely complicated, with a far wider range of moves than chess. Go experts agreed that it would take at least 20 to 30 years until a computer could beat the best human Go players. However, in the spring of 2016, the AlphaGo deep-learning software defeated the (at that time) top Go player, Lee Sedol, and even a team of five world champions in 2017.

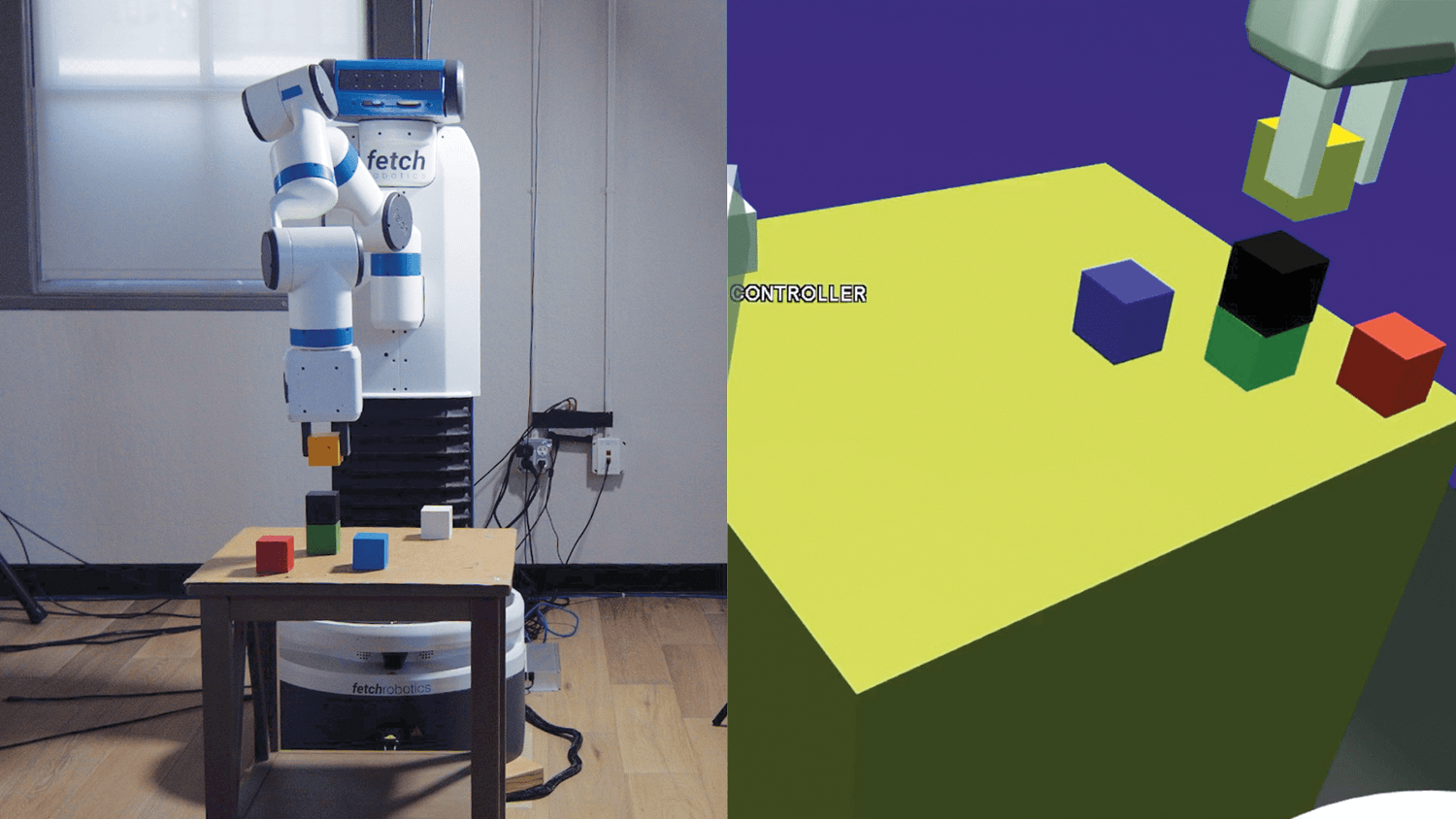

In contrast, OpenAI researches algorithms that, unlike AlphaGo, learn not just a single game, but a wide range of tasks. The company recently developed a robot that observes only once how a person does a task previously unknown to the robot; then, Fetch (the robot's internal name) is able to repeat the task in a new environment (Figure 1) [4]. The robot even learned the concepts of gripping and stacking by observing people.

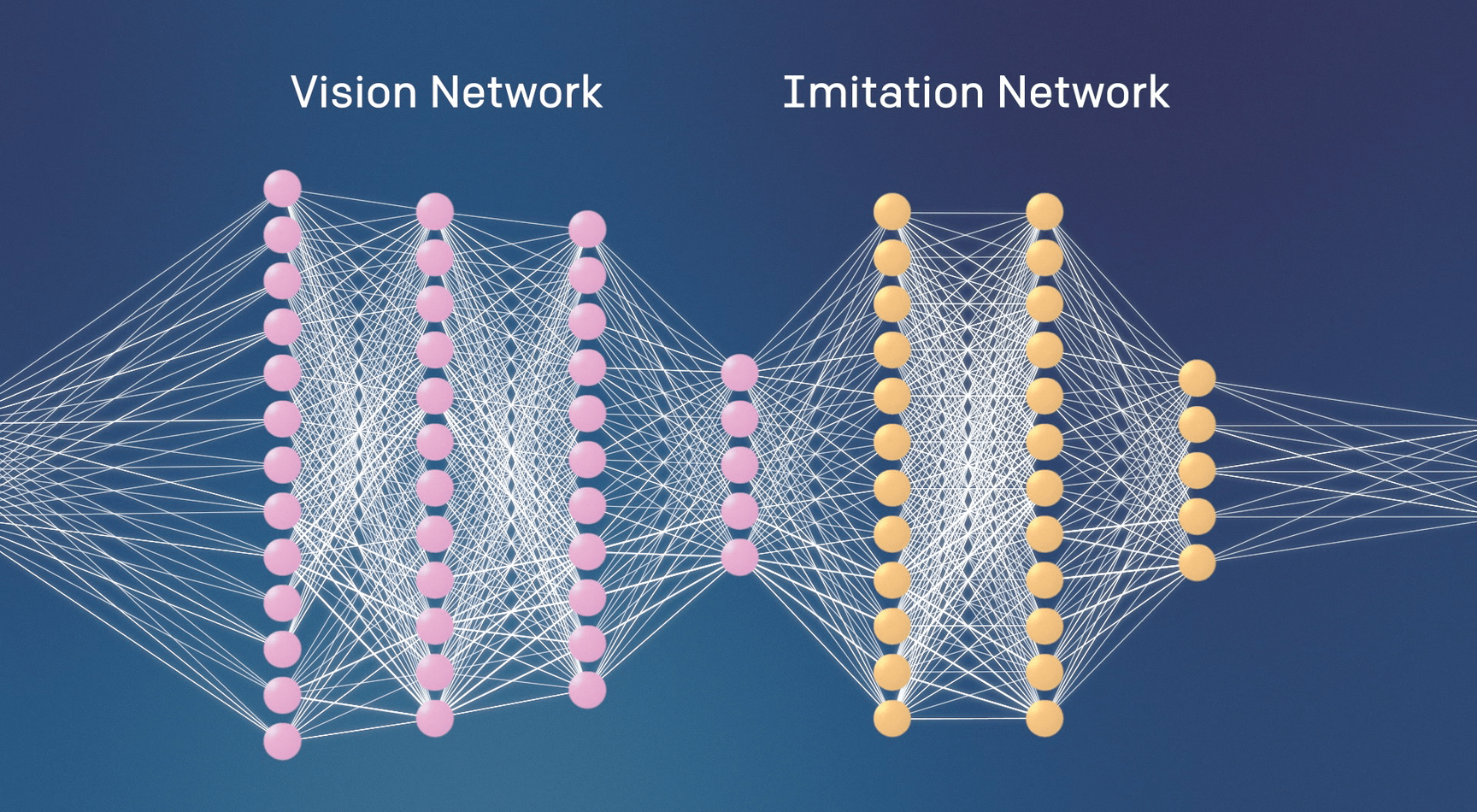

Despite the proven success of Google, Facebook, OpenAI, and various university projects, no one knows what the perfect structure of a deep learning neural network looks like. The question is, which structure is best at allowing a network to learn new skills? The bulk of the research in the company, then, comprises experiments with new learning algorithms and network structures, which require the use of a complex technical infrastructure. OpenAI researches structures (Figure 2) and seeks to find out how data moves through them.

Bare-Metal Performance

For researchers to work productively, the team must be in a position to distribute new algorithms and network topologies to thousands of computers in seconds and to evaluate the results. An extremely flexible infrastructure is needed. The project requirements are articulated as follows:

- Performance is vital: Computing a neural network requires massive computing power, which is why the project uses graphics processing units (GPUs), for example.

- Computer topologies must be customizable: The project must be able to implement unforeseen changes in machine structure, such as distributing an originally centralized computation to hundreds of computers.

- Usage fluctuates rapidly: Researchers commonly try out new methods on a single CPU core for two months and then suddenly need 10,000 cores for two weeks.

To achieve the best possible raw performance, the project needs direct access to the hardware as well as to the CPU and accelerators such as GPUs. Modern hypervisors such as Xen run the CPU code natively, but all I/O operations generate administrative overhead. OpenAI needs the maximum bandwidth, in particular for communicating with GPUs via the PCIe bus. Classical virtualization is thus out of the question.

On the other hand, a large bare-metal server farm would be a nightmare for users and admins. Instead of relying on one cloud provider, OpenAI researchers use all kinds of providers – Microsoft Azure, Amazon Web Services (AWS), Google Compute Engine (GCE), and our own data centers – for cost savings from volume discounts for thousands of computers, among other things. However, most of our researchers are not Linux or cloud experts; learning three or more cloud APIs is out of the question. Instead, they need tools that empower them to implement their ideas independently, without having to ask engineers for help.

The company relies on Docker containers [5] and Kubernetes [6] to master this balancing act between usability and performance. Containers let you describe all the dependencies and packages (e.g., TensorFlow [7]) for an experiment. The container image can be saved as a snapshot and sent via the network, much like a virtual machine (VM). Because of kernel integration, the images have no performance overhead – unlike VMs.

The researchers describe their experiments and algorithms as containers and Kubernetes pods. The infrastructure team then ensures that Kubernetes provides the required computers (nodes) to accommodate all the pods.

The team is currently running a number of Kubernetes clusters with up to 2,000 nodes. Thus, the company is probably one of the largest Kubernetes users, which means, on the minus side, that Kubernetes (often referred to as Kube) traverses shaky ground and has to fight for stability. To ensure that third parties also benefit from our experience in dealing with helpful Kubernetes data structures and abstractions, I describe in the following section some of the "gotchas" encountered by the team.

Uncharted Territory

The typical life cycle of an experiment proceeds with one or two researchers, who test new ideas for several weeks or months. During this time, they only need minimal computing capacity. At some point (usually just before the deadline of an academic journal), they need tens of thousands of CPU cores in one fell swoop to carry out much larger calculations. To avoid the cost of out-of-work CPUs, they need to change the size of the cluster at run time. The solution is an autoscaler (kubernetes-ec2-autoscaler) [8], which OpenAI has released under an MIT license on GitHub. The autoscaler specifically works by:

- fetching a list of all pods that currently cannot be placed on a node;

- creating a plan to launch a sufficient number of nodes, taking into consideration several factors, such as providing cheap computing power, providing GPUs, co-locating related jobs, and complying with the various user preferences; and, finally,

- putting the plan into practice.
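
The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the logic of kubernetes-ec2-autoscaler itself: the `Pod`, `NodeType`, and `plan_nodes` names, the sample data, and the first-fit packing strategy are assumptions chosen for brevity. A real plan would also weigh price, co-location, and user preferences.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    cpu: int      # requested CPU cores
    gpu: int = 0  # requested GPUs


@dataclass
class NodeType:
    name: str
    cpu: int
    gpu: int = 0


@dataclass
class PlannedNode:
    node_type: NodeType
    pods: list = field(default_factory=list)

    def free_cpu(self):
        return self.node_type.cpu - sum(p.cpu for p in self.pods)

    def free_gpu(self):
        return self.node_type.gpu - sum(p.gpu for p in self.pods)


def plan_nodes(pending, node_type):
    """Pack pending pods onto new nodes of one type (first fit, largest first)."""
    planned = []
    for pod in sorted(pending, key=lambda p: (p.gpu, p.cpu), reverse=True):
        if pod.cpu > node_type.cpu or pod.gpu > node_type.gpu:
            raise ValueError(f"{pod.name} does not fit on {node_type.name}")
        for node in planned:
            if node.free_cpu() >= pod.cpu and node.free_gpu() >= pod.gpu:
                node.pods.append(pod)
                break
        else:
            # No planned node has room, so the plan grows by one node.
            planned.append(PlannedNode(node_type, [pod]))
    return planned


# Step 1: pods that could not be placed (hypothetical, hard-coded data).
pending = [
    Pod("train-a", cpu=16, gpu=2),
    Pod("train-b", cpu=16, gpu=2),
    Pod("eval", cpu=32),
    Pod("big", cpu=16, gpu=8),
]

# Step 2: create the plan for a hypothetical 64-core, 8-GPU machine type.
plan = plan_nodes(pending, NodeType("gpu-64", cpu=64, gpu=8))

# Step 3 would call the cloud provider's API; here the plan is only printed.
for index, node in enumerate(plan):
    print(index, [p.name for p in node.pods])
```

In this example, the four pods fit on two new nodes, because the CPU-only pod can share a machine with the pod that occupies all eight GPUs.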

At this point, the Kube API shows one of its strengths: Because the autoscaler uses an API to access all the resources, it allows OpenAI to create a kind of programmable infrastructure. Kubernetes provides extremely practical and extensible data structures that can be tracked easily on a home computer:

kubectl get nodes

shows the data structure for a node (Listings 1 and 2) on a running cluster. The labels serve as custom markers in which to store metadata such as the location, type of CPU, and machine equipment. The autoscaler then provides machines with the correct metadata, depending on the requirement.

Listing 1: Show Nodes

$ kubectl get nodes
NAME          STATUS    AGE       VERSION
10.126.22.9   Ready     3h        v1.6.2

Listing 2: Kubernetes Node Data Structure in YAML

$ kubectl get node 10.126.22.9 -o yaml

apiVersion: v1
kind: Node
metadata:
  creationTimestamp: 2017-06-07T08:15:30Z
  labels:
    openai.org/location: azure-us-east-v2
  name: 10.126.22.9
spec:
  externalID: 10.126.22.9
  providerID: azure:////62823750-1942-A94F-822E-E6BF3C9EDCC4
status:
  addresses:
  - address: 10.126.22.9
    type: InternalIP
  - address: 10.126.22.9
    type: Hostname
  allocatable:
    alpha.kubernetes.io/nvidia-gpu: "0"
    cpu: "20"
    memory: 144310716Ki
    pods: "28"
  nodeInfo:
    architecture: amd64
    containerRuntimeVersion: docker://1.12.6
    kernelVersion: 4.4.0-72-generic
    osImage: Ubuntu 14.04.5 LTS
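
Because the node is plain structured data, a few lines of code are enough to pull out the fields an autoscaler or a report would care about. The following sketch assumes the node was fetched with `-o json` instead of `-o yaml` and embeds an excerpt of the node from Listing 2. The `parse_quantity` and `summarize` helpers are illustrative names, and the quantity parser only handles plain integers and binary suffixes, not the full Kubernetes quantity syntax.

```python
import json

# Excerpt of the node from Listing 2 in JSON form.
NODE_JSON = """
{
  "metadata": {
    "name": "10.126.22.9",
    "labels": {"openai.org/location": "azure-us-east-v2"}
  },
  "status": {
    "allocatable": {
      "alpha.kubernetes.io/nvidia-gpu": "0",
      "cpu": "20",
      "memory": "144310716Ki",
      "pods": "28"
    }
  }
}
"""

# Binary suffixes that appear in resource quantities (subset).
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(value):
    """Convert a quantity such as "144310716Ki" or "20" to an integer."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if value.endswith(suffix):
            return int(value[: -len(suffix)]) * factor
    return int(value)


def summarize(node):
    """Collect name, location label, and allocatable resources of a node."""
    allocatable = node["status"]["allocatable"]
    return {
        "name": node["metadata"]["name"],
        "location": node["metadata"]["labels"].get("openai.org/location"),
        "cpu": parse_quantity(allocatable["cpu"]),
        "gpu": parse_quantity(allocatable["alpha.kubernetes.io/nvidia-gpu"]),
        "memory_bytes": parse_quantity(allocatable["memory"]),
    }


summary = summarize(json.loads(NODE_JSON))
print(summary)
```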

GPU Scheduling

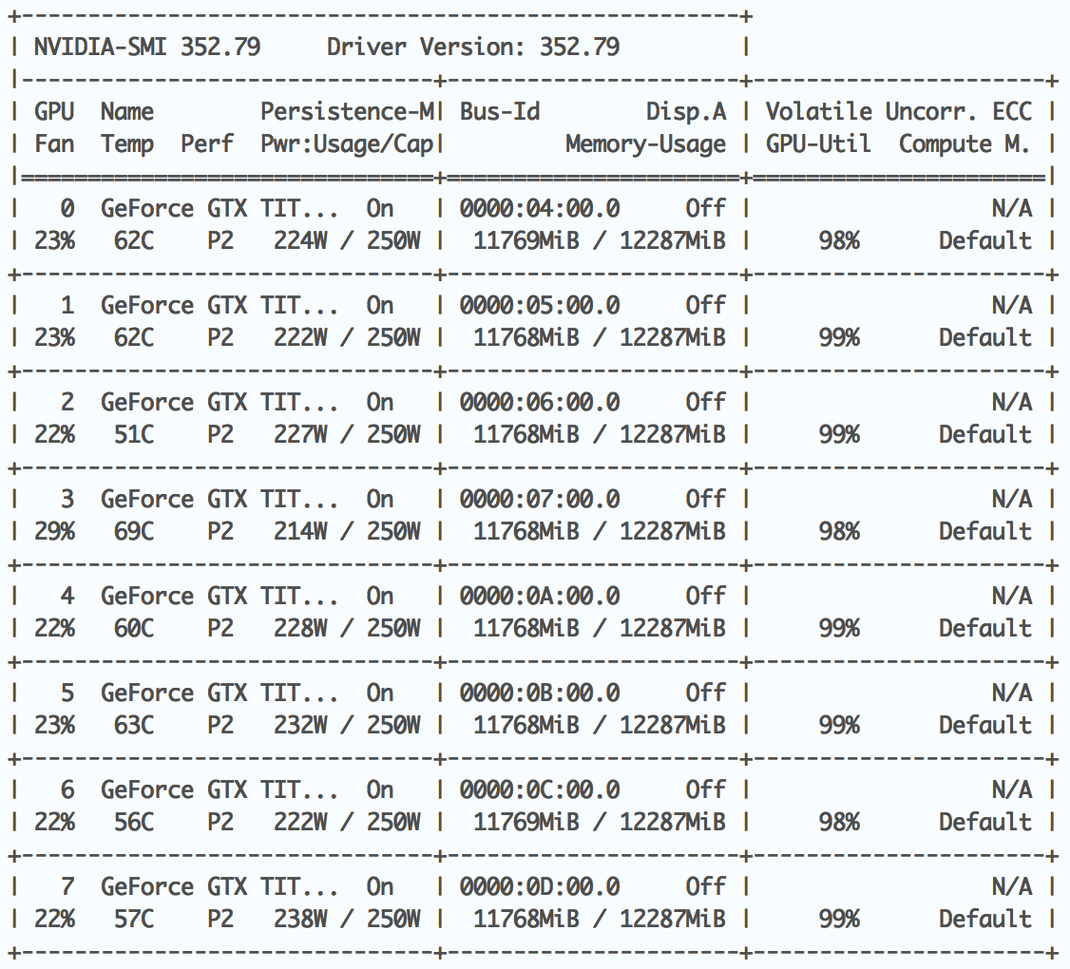

Graphics processors do most of the calculations in the field of deep learning, because the individual "neurons" of the network, similar to the pixels of a screen, act independently of each other. Computer games, for example, use this parallelism for hardware-accelerated 3D rendering. OpenAI relies on Nvidia's CUDA [9] to map neural networks using GPUs (Figure 3).

Figure 3: nvidia-smi – top for GPUs – reports on a machine with eight fully utilized Nvidia graphics processors.

In connection with Kubernetes, the problem is managing multiple GPUs as well as other resources (CPU, RAM, etc.). For example, suppose you have a node comprising 64 cores and eight Nvidia graphics cards. A pod requires 16 CPU cores and two graphics cards for experiments. You are probably wondering how you can notify the Kubernetes scheduler of these resource requirements.

The CPU part is easy: Set the spec.containers[].resources.requests.cpu field to 16; Kubernetes will then never place more than four such pods on the 64-core node, so the node's capacity is not exceeded.
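
As a rough illustration of the CPU case, the following sketch builds a minimal pod manifest with a CPU request and computes how many such pods fit on the example node. The pod name, the image name, and the two helper functions are hypothetical. The printed JSON is in the form that `kubectl create -f` accepts.

```python
import json


def cpu_pod(name, image, cpu_cores):
    """Return a minimal pod manifest that requests a number of CPU cores."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # The scheduler reserves this many cores on the node.
                    "resources": {"requests": {"cpu": str(cpu_cores)}},
                }
            ]
        },
    }


def max_pods_by_cpu(node_cores, pod_cores):
    """Number of identical pods that fit on a node when only CPU counts."""
    return node_cores // pod_cores


pod = cpu_pod("experiment", "example/experiment:latest", 16)
print(json.dumps(pod, indent=2))
print(max_pods_by_cpu(64, 16), "pods fit on a 64-core node")
```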

To negotiate which pods get which GPUs, you use good old flock(2) [10] inside the pods to secure exclusive locks on the GPU devices /dev/nvidia<X>, where <X> is a value between 0 and 7 on a machine with eight GPUs (Figure 4).
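
The locking idea can be sketched with Python's `fcntl` module, which wraps flock(2). This is not OpenAI's code: the `claim_devices` and `release_devices` helpers are hypothetical, and temporary files stand in for the GPU device nodes so that the example also runs on a Linux machine without GPUs.

```python
import fcntl
import os
import tempfile


def release_devices(claimed):
    """Close the descriptors; closing a descriptor drops its flock lock."""
    for fd in claimed.values():
        os.close(fd)
    claimed.clear()


def claim_devices(paths, count):
    """Take exclusive, non-blocking locks on `count` of the given files.

    Returns {path: fd}. The locks last as long as the descriptors stay open.
    """
    claimed = {}
    for path in paths:
        if len(claimed) == count:
            break
        fd = os.open(path, os.O_RDONLY)
        try:
            fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
        except BlockingIOError:
            os.close(fd)  # device is taken; try the next one
            continue
        claimed[path] = fd
    if len(claimed) < count:
        found = len(claimed)
        release_devices(claimed)
        raise RuntimeError(f"only {found} of {count} devices are free")
    return claimed


with tempfile.TemporaryDirectory() as tmp:
    # Three empty files play the role of three GPU device nodes.
    devices = [os.path.join(tmp, f"nvidia{i}") for i in range(3)]
    for path in devices:
        open(path, "w").close()

    first = claim_devices(devices, 2)   # first "pod" takes two devices
    first_names = sorted(os.path.basename(p) for p in first)
    second = claim_devices(devices, 1)  # second "pod" gets the remaining one
    second_names = sorted(os.path.basename(p) for p in second)
    try:
        claim_devices(devices, 1)       # nothing is left for a third "pod"
        third_failed = False
    except RuntimeError:
        third_failed = True
    release_devices(first)
    release_devices(second)

print(first_names, second_names, third_failed)
```

The non-blocking flag matters here: a pod that finds a device locked moves on to the next one instead of waiting for it.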

Originally, Kube could not do much with GPUs, so the scheme above only worked if every pod requested CPUs and GPUs in the same ratio. If another pod required 16 CPU cores but eight graphics cards, without batting an eye, Kube would place that pod on the same node; all of a sudden, you ran out of GPUs. The solution was to split the nodes into several classes using labels and only place a certain type of pod in a class. It was certainly not ideal, but it fulfilled its purpose.
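
The following toy model shows both the problem and the label workaround. It is a simplified sketch, not scheduler code: nodes and pods are plain dictionaries, the `example.org/gpu-class` label is a made-up name, and the `nodeSelector` key only mimics the pod field of the same name.

```python
def selector_matches(node, pod):
    """True if every nodeSelector entry of the pod is a label on the node."""
    labels = node["labels"]
    selector = pod.get("nodeSelector", {})
    return all(labels.get(key) == value for key, value in selector.items())


def place(node, pods, check_selector=False):
    """Place pods on one node with a scheduler that only accounts for CPU."""
    placed, used_cpu = [], 0
    for pod in pods:
        if check_selector and not selector_matches(node, pod):
            continue  # pod belongs to a different node class
        if used_cpu + pod["cpu"] <= node["cpu"]:
            placed.append(pod)
            used_cpu += pod["cpu"]
    return placed


node = {
    "cpu": 64,
    "gpu": 8,
    "labels": {"example.org/gpu-class": "two-per-pod"},
}
small = {"name": "small", "cpu": 16, "gpu": 2,
         "nodeSelector": {"example.org/gpu-class": "two-per-pod"}}
large = {"name": "large", "cpu": 16, "gpu": 8,
         "nodeSelector": {"example.org/gpu-class": "eight-per-pod"}}

# Without classes, both pods pass the CPU check and oversubscribe the GPUs.
naive = place(node, [small, large])
naive_gpu_demand = sum(pod["gpu"] for pod in naive)

# With classes, the eight-GPU pod is kept off this node.
classed = place(node, [small, large], check_selector=True)
classed_gpu_demand = sum(pod["gpu"] for pod in classed)

print(naive_gpu_demand, "GPUs demanded without classes; node has", node["gpu"])
print(classed_gpu_demand, "GPUs demanded with classes")
```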

Meanwhile, since version 1.6, Kubernetes has natively supported GPU scheduling. Thus, the hack was replaced with a clean solution, with no changes visible to users [11]. This is also a good example of how the infrastructure team can handle such changes to Kubernetes without involving every user.

Teething Problems

One potential performance bottleneck in the operation of Kubernetes is communication between the Kubernetes master and the Kubelet, the program that runs on each of the nodes and launches and manages the pod containers. As the clusters used by the company grew over time, it turned out that there was a surprisingly large volume of traffic – one of the clusters with about 1,000 nodes generated about 4-5GBps of data. (By the way, the Kubernetes master spent about 90 percent of its time encoding and decoding JSON.)

Kubernetes assumes that all nodes communicate with one another over a fast and cheap network. If you want to stretch a single Kubernetes cluster across multiple data centers, you need to dig deep into your bandwidth pockets. Only since version 1.6 was released in March 2017 has communication between the components become far more efficient, thanks to gRPC (Google's RPC framework), which uses protocol buffers, not JSON. Nevertheless, latencies of a few hundred milliseconds can still lead to problems or slow response times when you run kubectl (Kubernetes command-line interface) commands.

Another surprise may be that some elements of Kube work on the "eventual consistency" principle, so the effect of an instruction is only realized after some time. For example, the service resource in Kubernetes, which facilitates load balancing between multiple pods, follows this principle:

1. Someone (e.g., the replica set controller) generates a new pod, which is part of the service.

2. The scheduler positions the pod, and the Kubelet launches the container.

3. The service controller generates the service endpoint for the pod in a corresponding service.

The pod is already running in the period between steps 2 and 3 but is not yet visible to the service, so it does not receive HTTP requests sent to the service. Normally this isn't a problem, because the period is very short. In large clusters with thousands of services and pods, however, it sometimes took several minutes, which posed a big problem for our company, because our experiments then needed several minutes to launch.
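
A client that depends on a freshly started pod can tolerate this window by polling until the service actually reaches the pod. The helper below is a generic sketch of that idea, not part of Kubernetes. The `wait_until` name is hypothetical, and the `endpoint_visible` function merely simulates a check that succeeds on the third attempt; in practice, it would query the service.

```python
import time


def wait_until(check, timeout, interval=1.0):
    """Call check() until it returns True or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        if check():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Stand-in for "does the service have an endpoint for my pod yet?".
attempts = {"count": 0}


def endpoint_visible():
    attempts["count"] += 1
    return attempts["count"] >= 3


ready = wait_until(endpoint_visible, timeout=5, interval=0.01)
gave_up = not wait_until(lambda: False, timeout=0.05, interval=0.01)
print(ready, attempts["count"], gave_up)
```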

After a bit of research, it soon became apparent that the service controller is a single process that iterates across all services in a for loop and activates new pods (i.e., creates the service endpoints). Kubernetes hides all this behind the service abstraction. As a symptom, you can only see that your service is down without knowing why.

In addition to performance problems with large clusters, the Kube abstractions are unclean ("leaky") in other areas. The previously mentioned autoscaler ensures that the size of the cluster adapts to the requirements; to do this, it launches new nodes or removes excess nodes from the cluster. However, after a few months of intensive use, it transpired that Kubernetes does not always deal well with emerging and disappearing nodes: For example, it deletes logging output from pods when the underlying node goes offline, although the pod itself still exists.

Many of these details are not yet sufficiently tested and documented because Kube is still too new; delving deep into the source code [12] was therefore unavoidable in the end.

Conclusions

After one and a half years of intensive use, our impression is that Kubernetes provides well-designed, Unix-compatible data structures and APIs to create an interface between the users and operators of an infrastructure. This interface offers flexibility to users when faced with changing requirements and for the operators when deploying new cloud resources. The users of the infrastructure do not need to know all the details; the Kube API can serve as an organizational interface between the infrastructure team and other teams.

However, Kubernetes is not a panacea and does not replace an experienced team of administrators. An out-of-the-box installation package provides a Kube cluster with a single Bash script, but what looks simple can be deceptively complex. The ready-made cluster, at least, is not sufficient for the requirements of OpenAI; it still does not pay sufficient attention to the changing needs of system users who want to connect to a new cloud provider or other external systems, such as databases, and who need some additional features, such as the previously mentioned GPU scheduling.

One great strength of Kubernetes, which I have not yet mentioned, is the open source developer community [13]. New Kube versions usually trigger great anticipation, especially in projects, because the users appreciate the time savings they will experience in the future with access to the new features.

Under the management of the Cloud Native Computing Foundation, supported by the Linux Foundation and Google, the Kubernetes ship is making good headway – and at an impressive speed.